Convection is single or multiphase fluid flow that occurs spontaneously through the combined effects of material property heterogeneity and body forces on a fluid, most commonly density and gravity (see buoyancy). When the cause of the convection is unspecified, convection due to the effects of thermal expansion and buoyancy can be assumed. Convection may also take place in soft solids or mixtures where particles can flow.

Convective flow may be transient (such as when a multiphase mixture of oil and water separates) or steady state (see convection cell). The convection may be due to gravitational, electromagnetic or fictitious body forces. Heat transfer by natural convection plays a role in the structure of Earth's atmosphere, its oceans, and its mantle. Discrete convective cells in the atmosphere can be identified by clouds, with stronger convection resulting in thunderstorms. Natural convection also plays a role in stellar physics. Convection is often categorised or described by the main effect causing the convective flow; for example, thermal convection.

Convection cannot take place in most solids because neither bulk current flows nor significant diffusion of matter can take place. Granular convection is a similar phenomenon in granular material instead of fluids. Advection is the transport of any substance or quantity (such as heat) through fluid motion. Convection is a process involving bulk movement of a fluid that usually leads to a net transfer of heat through advection. Convective heat transfer is the intentional use of convection as a method for heat transfer.

History

In the 1830s, in The Bridgewater Treatises, the term convection is attested in a scientific sense. In treatise VIII by William Prout, in the book on chemistry, it says:

[...] This motion of heat takes place in three ways, which a common fire-place very well illustrates. If, for instance, we place a thermometer directly before a fire, it soon begins to rise, indicating an increase of temperature. In this case the heat has made its way through the space between the fire and the thermometer, by the process termed radiation. If we place a second thermometer in contact with any part of the grate, and away from the direct influence of the fire, we shall find that this thermometer also denotes an increase of temperature; but here the heat must have travelled through the metal of the grate, by what is termed conduction. Lastly, a third thermometer placed in the chimney, away from the direct influence of the fire, will also indicate a considerable increase of temperature; in this case a portion of the air, passing through and near the fire, has become heated, and has carried up the chimney the temperature acquired from the fire. There is at present no single term in our language employed to denote this third mode of the propagation of heat; but we venture to propose for that purpose, the term convection, [in footnote: [Latin] Convectio, a carrying or conveying] which not only expresses the leading fact, but also accords very well with the two other terms.

Later, in the same treatise VIII, in the book on meteorology, the concept of convection is also applied to "the process by which heat is communicated through water".

Terminology

Today, the word convection has different but related usages in different scientific or engineering contexts or applications.

In fluid mechanics, convection has a broader sense: it refers to the motion of fluid driven by density (or other property) difference.

In thermodynamics, convection often refers to heat transfer by convection, where the prefixed variant natural convection is used to distinguish the fluid mechanics concept of convection (covered in this article) from convective heat transfer.

Some phenomena which result in an effect superficially similar to that of a convective cell may also be (inaccurately) referred to as a form of convection; for example, thermo-capillary convection and granular convection.

Mechanisms

Convection may happen in fluids at all scales larger than a few atoms. There are a variety of circumstances in which the forces required for convection arise, leading to different types of convection, described below. In broad terms, convection arises because of body forces acting within the fluid, such as gravity.

Natural convection

Natural convection is a flow whose motion is caused by some parts of a fluid being heavier than other parts. In most cases this leads to natural circulation: the ability of a fluid in a system to circulate continuously under gravity, with transfer of heat energy.

The driving force for natural convection is gravity. In a column of fluid, pressure increases with depth from the weight of the overlying fluid. The pressure at the bottom of a submerged object then exceeds that at the top, resulting in a net upward buoyancy force equal to the weight of the displaced fluid. Objects of higher density than that of the displaced fluid then sink. For example, regions of warmer low-density air rise, while those of colder high-density air sink. This creates a circulating flow: convection.
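The buoyancy argument above can be sketched numerically. The following is a minimal illustration, assuming dry air behaves as an ideal gas (the specific gas constant 287.05 J/(kg·K) and the function names are assumptions introduced here, not taken from the text). It compares the weight of a warm air parcel with the weight of the cooler air it displaces.

```python
R_DRY_AIR = 287.05  # J/(kg*K), specific gas constant of dry air (assumed value)
G = 9.81            # m/s^2, standard gravitational acceleration


def air_density(pressure_pa, temperature_k):
    """Ideal-gas density of dry air in kg/m^3."""
    return pressure_pa / (R_DRY_AIR * temperature_k)


def parcel_acceleration(rho_parcel, rho_env, g=G):
    """Net upward acceleration of a parcel: (buoyancy - weight) / mass.

    Buoyancy equals the weight of the displaced surrounding fluid,
    so the result is positive when the parcel is lighter than its
    surroundings and negative when it is heavier.
    """
    return g * (rho_env - rho_parcel) / rho_parcel


# Hypothetical example: a parcel 3 K warmer than its surroundings
# at sea-level pressure.
pressure = 101_325.0
rho_env = air_density(pressure, 288.15)
rho_parcel = air_density(pressure, 291.15)
accel = parcel_acceleration(rho_parcel, rho_env)
print(f"net upward acceleration: {accel:.3f} m/s^2")
```

At equal pressure the result reduces to g(T_parcel − T_env)/T_env, so even a small temperature excess gives the parcel a net upward acceleration, while a colder parcel gets a negative (downward) one.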

Gravity drives natural convection. Without gravity, convection does not occur, so there is no convection in free-fall (inertial) environments, such as that of the orbiting International Space Station. Natural convection can occur when there are hot and cold regions of either air or water, because both water and air become less dense as they are heated. But, for example, in the world's oceans it also occurs due to salt water being heavier than fresh water, so a layer of salt water on top of a layer of fresher water will also cause convection.

Natural convection has attracted a great deal of attention from researchers because of its presence both in nature and engineering applications. In nature, convection cells formed from air rising above sunlight-warmed land or water are a major feature of all weather systems. Convection is also seen in the rising plume of hot air from fire, plate tectonics, oceanic currents (thermohaline circulation) and sea-wind formation (where upward convection is also modified by Coriolis forces). In engineering applications, convection is commonly visualized in the formation of microstructures during the cooling of molten metals, in fluid flows around shrouded heat-dissipation fins, and in solar ponds. A very common industrial application of natural convection is free air cooling without the aid of fans: this can happen at scales ranging from small (computer chips) to large (process equipment).

Natural convection will be more likely and more rapid with a greater variation in density between the two fluids, a larger acceleration due to gravity that drives the convection or a larger distance through the convecting medium. Natural convection will be less likely and less rapid with more rapid diffusion (thereby diffusing away the thermal gradient that is causing the convection) or a more viscous (sticky) fluid.

The onset of natural convection can be determined by the Rayleigh number (Ra).

Differences in buoyancy within a fluid can arise for reasons other than temperature variations, in which case the fluid motion is called gravitational convection (see below). However, all types of buoyant convection, including natural convection, do not occur in microgravity environments. All require the presence of an environment which experiences g-force (proper acceleration).

The difference of density in the fluid is the key driving mechanism. If the differences of density are caused by heat, this force is called the "thermal head" or "thermal driving head". A fluid system designed for natural circulation will have a heat source and a heat sink. Each of these is in contact with some of the fluid in the system, but not all of it. The heat source is positioned lower than the heat sink.

Most fluids expand when heated, becoming less dense, and contract when cooled, becoming denser. At the heat source of a system of natural circulation, the heated fluid becomes lighter than the fluid surrounding it, and thus rises. At the heat sink, the nearby fluid becomes denser as it cools, and is drawn downward by gravity. Together, these effects create a flow of fluid from the heat source to the heat sink and back again.

Gravitational or buoyant convection

Gravitational convection is a type of natural convection induced by buoyancy variations resulting from material properties other than temperature. Typically this is caused by a variable composition of the fluid. If the varying property is a concentration gradient, it is known as solutal convection. For example, gravitational convection can be seen in the diffusion of a source of dry salt downward into wet soil due to the buoyancy of fresh water in saline.

Variable salinity in water and variable water content in air masses are frequent causes of convection in the oceans and atmosphere which do not involve heat, or else involve additional compositional density factors other than the density changes from thermal expansion (see thermohaline circulation). Similarly, variable composition within the Earth's interior which has not yet achieved maximal stability and minimal energy (in other words, with densest parts deepest) continues to cause a fraction of the convection of fluid rock and molten metal within the Earth's interior (see below).

Gravitational convection, like natural thermal convection, also requires a g-force environment in order to occur.

Solid-state convection in ice

Ice convection on Pluto is believed to occur in a soft mixture of nitrogen ice and carbon monoxide ice. It has also been proposed for Europa, and other bodies in the outer Solar System.

Thermomagnetic convection

Thermomagnetic convection can occur when an external magnetic field is imposed on a ferrofluid with varying magnetic susceptibility. In the presence of a temperature gradient this results in a nonuniform magnetic body force, which leads to fluid movement. A ferrofluid is a liquid which becomes strongly magnetized in the presence of a magnetic field.

Combustion

In a zero-gravity environment, there can be no buoyancy forces, and thus no convection is possible, so flames in many circumstances without gravity smother in their own waste gases. Thermal expansion and chemical reactions that cause gases to expand and contract allow for ventilation of the flame, as waste gases are displaced by cool, fresh, oxygen-rich gas, which moves in to take up the low-pressure zones created when flame-exhaust water condenses.

Examples and applications

Systems of natural circulation include tornadoes and other weather systems, ocean currents, and household ventilation. Some solar water heaters use natural circulation. The Gulf Stream circulates as a result of the evaporation of water. In this process, the water increases in salinity and density. In the North Atlantic Ocean, the water becomes so dense that it begins to sink down.

Convection occurs on a large scale in atmospheres, oceans, planetary mantles, and it provides the mechanism of heat transfer for a large fraction of the outermost interiors of the Sun and all stars. Fluid movement during convection may be invisibly slow, or it may be obvious and rapid, as in a hurricane. On astronomical scales, convection of gas and dust is thought to occur in the accretion disks of black holes, at speeds which may closely approach that of light.

Demonstration experiments

Thermal convection in liquids can be demonstrated by placing a heat source (for example, a Bunsen burner) at the side of a container with a liquid. Adding a dye to the water (such as food colouring) will enable visualisation of the flow.

Another common experiment to demonstrate thermal convection in liquids involves submerging open containers of hot and cold liquid coloured with dye into a large container of the same liquid without dye at an intermediate temperature (for example, a jar of hot tap water coloured red, a jar of water chilled in a fridge coloured blue, lowered into a clear tank of water at room temperature).

A third approach is to use two identical jars, one filled with hot water dyed one colour, and the other with cold water dyed another colour. One jar is then temporarily sealed (for example, with a piece of card), inverted and placed on top of the other. When the card is removed, if the jar containing the warmer liquid is on top, no convection will occur. If the jar containing the colder liquid is on top, a convection current will form spontaneously.

Convection in gases can be demonstrated using a candle in a sealed space with an inlet and exhaust port. The heat from the candle will cause a strong convection current which can be demonstrated with a flow indicator, such as smoke from another candle, being released near the inlet and exhaust areas respectively.

Double diffusive convection

Convection cells

A convection cell, also known as a Bénard cell, is a characteristic fluid flow pattern in many convection systems. A rising body of fluid typically loses heat because it encounters a colder surface. In liquid, this occurs because it exchanges heat with colder liquid through direct exchange. In the example of the Earth's atmosphere, this occurs because it radiates heat. Because of this heat loss the fluid becomes denser than the fluid underneath it, which is still rising. Since it cannot descend through the rising fluid, it moves to one side. At some distance, its downward force overcomes the rising force beneath it, and the fluid begins to descend. As it descends, it warms again and the cycle repeats itself. Additionally, convection cells can arise due to density variations resulting from differences in the composition of electrolytes.

Atmospheric convection

Atmospheric circulation

Atmospheric circulation is the large-scale movement of air, and is a means by which thermal energy is distributed on the surface of the Earth, together with the much slower (lagged) ocean circulation system. The large-scale structure of the atmospheric circulation varies from year to year, but the basic climatological structure remains fairly constant.

Latitudinal circulation occurs because incident solar radiation per unit area is highest at the heat equator, and decreases as the latitude increases, reaching minima at the poles. It consists of two primary convection cells, the Hadley cell and the polar vortex, with the Hadley cell experiencing stronger convection due to the release of latent heat energy by condensation of water vapor at higher altitudes during cloud formation.

Longitudinal circulation, on the other hand, comes about because the ocean has a higher specific heat capacity than land (and also a higher thermal conductivity, allowing the heat to penetrate further beneath the surface) and thereby absorbs and releases more heat, while its temperature changes less than that of land. This brings the sea breeze, air cooled by the water, ashore in the day, and carries the land breeze, air cooled by contact with the ground, out to sea during the night. Longitudinal circulation consists of two cells, the Walker circulation and El Niño / Southern Oscillation.

Weather

Some more localized phenomena than global atmospheric movement are also due to convection, including wind and some of the hydrologic cycle. For example, a foehn wind is a down-slope wind which occurs on the downwind side of a mountain range. It results from the adiabatic warming of air which has dropped most of its moisture on windward slopes. Because of the different adiabatic lapse rates of moist and dry air, the air on the leeward slopes becomes warmer than at the same height on the windward slopes.

A thermal column (or thermal) is a vertical section of rising air in the lower altitudes of the Earth's atmosphere. Thermals are created by the uneven heating of the Earth's surface from solar radiation. The Sun warms the ground, which in turn warms the air directly above it. The warmer air expands, becoming less dense than the surrounding air mass, and creating a thermal low. The mass of lighter air rises, and as it does, it cools by expansion at lower air pressures. It stops rising when it has cooled to the same temperature as the surrounding air. Associated with a thermal is a downward flow surrounding the thermal column. The downward moving exterior is caused by colder air being displaced at the top of the thermal. Another convection-driven weather effect is the sea breeze.

Warm air has a lower density than cool air, so warm air rises within cooler air, similar to hot air balloons. Clouds form as relatively warmer air carrying moisture rises within cooler air. As the moist air rises, it cools, causing some of the water vapor in the rising packet of air to condense. When the moisture condenses, it releases energy known as latent heat of condensation which allows the rising packet of air to cool less than its surrounding air, continuing the cloud's ascension. If enough instability is present in the atmosphere, this process will continue long enough for cumulonimbus clouds to form, which support lightning and thunder. Generally, thunderstorms require three conditions to form: moisture, an unstable airmass, and a lifting force (heat).

All thunderstorms, regardless of type, go through three stages: the developing stage, the mature stage, and the dissipation stage. The average thunderstorm has a 24 km (15 mi) diameter. Depending on the conditions present in the atmosphere, these three stages take an average of 30 minutes to go through.

Oceanic circulation

Solar radiation affects the oceans: warm water from the Equator tends to circulate toward the poles, while cold polar water heads towards the Equator. The surface currents are initially dictated by surface wind conditions. The trade winds blow westward in the tropics, and the westerlies blow eastward at mid-latitudes. This wind pattern applies a stress to the subtropical ocean surface with negative curl across the Northern Hemisphere, and the reverse across the Southern Hemisphere. The resulting Sverdrup transport is equatorward. Because of conservation of potential vorticity caused by the poleward-moving winds on the subtropical ridge's western periphery and the increased relative vorticity of poleward moving water, transport is balanced by a narrow, accelerating poleward current, which flows along the western boundary of the ocean basin, outweighing the effects of friction with the cold western boundary current which originates from high latitudes. The overall process, known as western intensification, causes currents on the western boundary of an ocean basin to be stronger than those on the eastern boundary.

As it travels poleward, warm water transported by a strong warm-water current undergoes evaporative cooling. The cooling is wind driven: wind moving over water cools the water and also causes evaporation, leaving a saltier brine. In this process, the water becomes saltier and denser and decreases in temperature. Once sea ice forms, salts are left out of the ice, a process known as brine exclusion. These two processes produce water that is denser and colder. The water across the northern Atlantic Ocean becomes so dense that it begins to sink down through less salty and less dense water. (This open ocean convection is not unlike that of a lava lamp.) This downdraft of heavy, cold and dense water becomes a part of the North Atlantic Deep Water, a south-going stream.

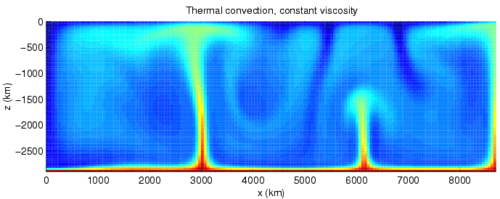

Mantle convection

Mantle convection is the slow creeping motion of Earth's rocky mantle caused by convection currents carrying heat from the interior of the Earth to the surface. It is one of three driving forces that cause tectonic plates to move around the Earth's surface.

The Earth's surface is divided into a number of tectonic plates that are continuously being created and consumed at their opposite plate boundaries. Creation (accretion) occurs as mantle is added to the growing edges of a plate. This hot added material cools down by conduction and convection of heat. At the consumption edges of the plate, the material has thermally contracted to become dense, and it sinks under its own weight in the process of subduction at an ocean trench. This subducted material sinks to some depth in the Earth's interior where it is prohibited from sinking further. The subducted oceanic crust triggers volcanism.

Convection within Earth's mantle is the driving force for plate tectonics. Mantle convection is the result of a thermal gradient: the lower mantle is hotter than the upper mantle, and is therefore less dense. This sets up two primary types of instabilities. In the first type, plumes rise from the lower mantle, and corresponding unstable regions of lithosphere drip back into the mantle. In the second type, subducting oceanic plates (which largely constitute the upper thermal boundary layer of the mantle) plunge back into the mantle and move downwards towards the core-mantle boundary. Mantle convection occurs at rates of centimeters per year, and it takes on the order of hundreds of millions of years to complete a cycle of convection.
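The quoted rates and timescales can be checked against each other with simple arithmetic. The sketch below assumes a mantle thickness of roughly 2,900 km and a representative speed of 2 cm per year; both numbers are illustrative assumptions, not values given in the text.

```python
MANTLE_DEPTH_KM = 2900.0  # approximate mantle thickness (assumed value)
CM_PER_KM = 1.0e5


def transit_time_years(distance_km, speed_cm_per_year):
    """Time to cover a distance at a constant creep speed."""
    return distance_km * CM_PER_KM / speed_cm_per_year


# Hypothetical representative speed of 2 cm/year.
one_way = transit_time_years(MANTLE_DEPTH_KM, 2.0)
# A full cycle covers at least one descent plus one ascent.
round_trip = 2.0 * one_way
print(f"one way: {one_way:.2e} years, round trip: {round_trip:.2e} years")
```

With these inputs a single crossing of the mantle takes about 145 million years and a down-and-up cycle about 290 million years, which is consistent with the "hundreds of millions of years" stated above.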

Neutrino flux measurements from the Earth's core (see KamLAND) show the source of about two-thirds of the heat in the inner core is the radioactive decay of ⁴⁰K, uranium and thorium. This has allowed plate tectonics on Earth to continue far longer than it would have if it were simply driven by heat left over from Earth's formation, or by heat produced from gravitational potential energy as a result of physical rearrangement of denser portions of the Earth's interior toward the center of the planet (that is, a type of prolonged falling and settling).

Stack effect

The stack effect or chimney effect is the movement of air into and out of buildings, chimneys, flue gas stacks, or other containers due to buoyancy. Buoyancy occurs due to a difference in indoor-to-outdoor air density resulting from temperature and moisture differences. The greater the thermal difference and the height of the structure, the greater the buoyancy force, and thus the stack effect. The stack effect helps drive natural ventilation and infiltration. Some cooling towers operate on this principle; similarly the solar updraft tower is a proposed device to generate electricity based on the stack effect.

Stellar physics

The convection zone of a star is the range of radii in which energy is transported outward from the core region primarily by convection rather than radiation. This occurs at radii which are sufficiently opaque that convection is more efficient than radiation at transporting energy.

Granules on the photosphere of the Sun are the visible tops of convection cells in the photosphere, caused by convection of plasma in the photosphere. The rising part of the granules is located in the center where the plasma is hotter. The outer edge of the granules is darker due to the cooler descending plasma. A typical granule has a diameter on the order of 1,000 kilometers and each lasts 8 to 20 minutes before dissipating. Below the photosphere is a layer of much larger "supergranules" up to 30,000 kilometers in diameter, with lifespans of up to 24 hours.

Water convection at freezing temperatures

Water is a fluid that does not obey the Boussinesq approximation. This is because its density varies nonlinearly with temperature, which causes its thermal expansion coefficient to be inconsistent near freezing temperatures. The density of water reaches a maximum at 4 °C and decreases as the temperature deviates from this value in either direction. This phenomenon is investigated by experiment and numerical methods. Water is initially stagnant at 10 °C within a square cavity. It is differentially heated between the two vertical walls, where the left and right walls are held at 10 °C and 0 °C, respectively. The density anomaly manifests in its flow pattern. As the water is cooled at the right wall, the density increases, which accelerates the flow downward. As the flow develops and the water cools further, the decrease in density causes a recirculation current at the bottom right corner of the cavity.

Another case of this phenomenon is the event of super-cooling, where the water is cooled to below freezing temperatures but does not immediately begin to freeze. Under the same conditions as before, the flow is developed. Afterward, the temperature of the right wall is decreased to −10 °C. This causes the water at that wall to become supercooled, create a counter-clockwise flow, and initially overpower the warm current. This plume is caused by a delay in the nucleation of the ice. Once ice begins to form, the flow returns to a similar pattern as before and the solidification propagates gradually until the flow is redeveloped.

Nuclear reactors

In a nuclear reactor, natural circulation can be a design criterion. It is achieved by reducing turbulence and friction in the fluid flow (that is, minimizing head loss), and by providing a way to remove any inoperative pumps from the fluid path. Also, the reactor (as the heat source) must be physically lower than the steam generators or turbines (the heat sink). In this way, natural circulation will ensure that the fluid will continue to flow as long as the reactor is hotter than the heat sink, even when power cannot be supplied to the pumps. Notable examples are the S5G and S8G United States Naval reactors, which were designed to operate at a significant fraction of full power under natural circulation, quieting those propulsion plants. The S6G reactor cannot operate at power under natural circulation, but can use it to maintain emergency cooling while shut down.

By the nature of natural circulation, fluids do not typically move very fast, but this is not necessarily bad, as high flow rates are not essential to safe and effective reactor operation. In modern nuclear reactor designs, flow reversal is almost impossible. All nuclear reactors, even ones designed to use natural circulation as the main method of fluid circulation, have pumps that can circulate the fluid in case natural circulation is not sufficient.

Mathematical models of convection

A number of dimensionless terms have been derived to describe and predict convection, including the Archimedes number, Grashof number, Richardson number, and the Rayleigh number.

In cases of mixed convection (natural and forced occurring together) one would often like to know how much of the convection is due to external constraints, such as the fluid velocity in the pump, and how much is due to natural convection occurring in the system.

The relative magnitudes of the Grashof number and the square of the Reynolds number determine which form of convection dominates. If $\mathrm{Gr}/\mathrm{Re}^{2} \gg 1$, forced convection may be neglected, whereas if $\mathrm{Gr}/\mathrm{Re}^{2} \ll 1$, natural convection may be neglected. If the ratio, known as the Richardson number, is approximately one, then both forced and natural convection need to be taken into account.
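The comparison of the Grashof number with the square of the Reynolds number can be sketched as a small classifier. This is only an illustration: the cut-offs `low=0.1` and `high=10.0` used to stand in for "much less than one" and "much greater than one" are assumptions chosen here, and the function name is hypothetical.

```python
def convection_regime(grashof, reynolds, low=0.1, high=10.0):
    """Return (Richardson number, regime) from Gr and Re.

    The Richardson number is Ri = Gr / Re**2. The thresholds `low`
    and `high` are illustrative stand-ins for "much less than one"
    and "much greater than one", not values from the text.
    """
    if reynolds <= 0:
        raise ValueError("Reynolds number must be positive")
    ri = grashof / reynolds**2
    if ri > high:
        regime = "natural"   # forced convection may be neglected
    elif ri < low:
        regime = "forced"    # natural convection may be neglected
    else:
        regime = "mixed"     # both contributions matter
    return ri, regime


for re in (1.0e2, 1.0e3, 1.0e4):
    ri, regime = convection_regime(1.0e6, re)
    print(f"Re = {re:.0e}: Ri = {ri:.3g} -> {regime}")
```

For a fixed Grashof number, raising the imposed flow speed (and hence Re) moves the system from the natural regime through the mixed regime to the forced regime.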

Onset

The onset of natural convection is determined by the Rayleigh number (Ra). This dimensionless number is given by

$$\mathrm{Ra} = \frac{\Delta\rho \, g \, L^{3}}{D \, \mu}$$

where

- $\Delta\rho$ is the difference in density between the two parcels of material that are mixing

- $g$ is the local gravitational acceleration

- $L$ is the characteristic length-scale of convection: the depth of the boiling pot, for example

- $D$ is the diffusivity of the characteristic that is causing the convection, and

- $\mu$ is the dynamic viscosity.

Natural convection will be more likely and/or more rapid with a greater variation in density between the two fluids, a larger acceleration due to gravity that drives the convection, and/or a larger distance through the convecting medium. Convection will be less likely and/or less rapid with more rapid diffusion (thereby diffusing away the gradient that is causing the convection) and/or a more viscous (sticky) fluid.

For thermal convection due to heating from below, as described in the boiling pot above, the equation is modified for thermal expansion and thermal diffusivity. Density variations due to thermal expansion are given by:

$$\Delta\rho = \rho_{0} \, \beta \, \Delta T$$

where

- $\rho_{0}$ is the reference density, typically picked to be the average density of the medium,

- $\beta$ is the coefficient of thermal expansion, and

- $\Delta T$ is the temperature difference across the medium.

The general diffusivity, $D$, is redefined as a thermal diffusivity, $\alpha$.

Inserting these substitutions produces a Rayleigh number that can be used to predict thermal convection:

$$\mathrm{Ra} = \frac{\rho_{0} \, g \, \beta \, \Delta T \, L^{3}}{\alpha \, \mu}$$
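As a rough numerical illustration of the thermal Rayleigh number, the sketch below evaluates Ra = ρ₀gβΔT L³/(αμ) for a thin layer of water. The property values are rounded figures for water near 20 °C and the critical value of about 1708 is the commonly quoted textbook threshold for a layer between two rigid plates; both are assumptions introduced here rather than values from the text.

```python
def rayleigh_number(delta_rho, g, length, diffusivity, mu):
    """General form: Ra = delta_rho * g * L**3 / (D * mu)."""
    return delta_rho * g * length**3 / (diffusivity * mu)


def thermal_rayleigh_number(rho0, beta, delta_t, g, length, alpha, mu):
    """Thermal form, using delta_rho = rho0 * beta * delta_t and D = alpha."""
    delta_rho = rho0 * beta * delta_t
    return rayleigh_number(delta_rho, g, length, alpha, mu)


# Rounded, illustrative properties of water near 20 degrees C (assumed):
# density kg/m^3, expansion 1/K, thermal diffusivity m^2/s, viscosity Pa*s.
WATER = dict(rho0=998.0, beta=2.1e-4, alpha=1.4e-7, mu=1.0e-3)

# Commonly quoted onset threshold for a layer between rigid plates (assumed).
RA_CRITICAL_RIGID = 1708.0

# Hypothetical case: a 1 cm deep layer with 1 K across it.
ra = thermal_rayleigh_number(delta_t=1.0, g=9.81, length=0.01, **WATER)
print(f"Ra = {ra:.3g}; above assumed threshold: {ra > RA_CRITICAL_RIGID}")
```

Because Ra scales with the cube of the layer depth, doubling the depth at a fixed temperature difference raises Ra eightfold, which is why deeper layers convect much more readily.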

Turbulence

The tendency of a particular naturally convective system towards turbulence relies on the Grashof number (Gr), which for thermal convection is

$$\mathrm{Gr} = \frac{g \, \beta \, \Delta T \, L^{3}}{\nu^{2}}$$

where $\nu$ is the kinematic viscosity.

In very sticky, viscous fluids (large ν), fluid motion is restricted, and natural convection will be non-turbulent.

Following the treatment of the previous subsection, the typical fluid velocity is of the order of $g \Delta\rho L^{2}/\mu$, up to a numerical factor depending on the geometry of the system. Therefore, the Grashof number can be thought of as a Reynolds number with the velocity of natural convection replacing the velocity in the Reynolds number's formula. However, in practice, when referring to the Reynolds number, it is understood that one is considering forced convection, and the velocity is taken as the velocity dictated by external constraints (see below).
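The statement that the Grashof number acts as a Reynolds number built from the natural-convection velocity can be checked numerically. The sketch assumes the standard thermal form Gr = gβΔT L³/ν², a velocity scale of gΔρL²/μ with Δρ = ρ₀βΔT, and ν = μ/ρ₀; the function names and the sample property values are illustrative assumptions.

```python
import math


def grashof_number(g, beta, delta_t, length, nu):
    """Gr = g * beta * delta_t * L**3 / nu**2 (standard thermal form)."""
    return g * beta * delta_t * length**3 / nu**2


def convective_velocity_scale(g, delta_rho, length, mu):
    """Order-of-magnitude velocity g * delta_rho * L**2 / mu."""
    return g * delta_rho * length**2 / mu


def reynolds_number(velocity, length, nu):
    """Re = U * L / nu."""
    return velocity * length / nu


# Rounded, illustrative properties of water near 20 degrees C (assumed).
rho0, beta, mu = 998.0, 2.1e-4, 1.0e-3
nu = mu / rho0                 # kinematic viscosity
g, length, delta_t = 9.81, 0.01, 1.0

u = convective_velocity_scale(g, rho0 * beta * delta_t, length, mu)
re_natural = reynolds_number(u, length, nu)
gr = grashof_number(g, beta, delta_t, length, nu)
print(f"Gr = {gr:.4g}, Re from velocity scale = {re_natural:.4g}")
print("equal:", math.isclose(gr, re_natural, rel_tol=1e-9))
```

The two numbers agree because substituting the velocity scale into UL/ν gives gρ₀βΔT L³/(μν), which is the Grashof number once μ is written as ρ₀ν. The velocity scale itself is only an order-of-magnitude estimate, so the agreement is about the scaling, not about actual flow speeds.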

Behavior

The Grashof number can be formulated for natural convection occurring due to a concentration gradient, sometimes termed thermo-solutal convection. In this case, a concentration of hot fluid diffuses into a cold fluid, in much the same way that ink poured into a container of water diffuses to dye the entire space. Then:

$$\mathrm{Gr} = \frac{g \, \beta \, \Delta C \, L^{3}}{\nu^{2}}$$

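Natural convection is highly dependent on the geometry of the hot surface, and various correlations exist to determine the heat transfer coefficient. A general correlation that applies for a variety of geometries is

$$\mathrm{Nu} = \left[\mathrm{Nu}_{0}^{1/2} + \mathrm{Ra}^{1/6}\left(\frac{f_{4}(\mathrm{Pr})}{300}\right)^{1/6}\right]^{2}$$

The value of $f_{4}(\mathrm{Pr})$ is calculated using the following formula

$$f_{4}(\mathrm{Pr}) = \left[1 + \left(\frac{0.5}{\mathrm{Pr}}\right)^{9/16}\right]^{-16/9}$$

Nu is the Nusselt number, and the values of Nu0 and the characteristic length used to calculate Ra are listed below (see also Discussion):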

| Geometry | Characteristic length | Nu0 |
|---|---|---|
| Inclined plane | x (Distance along plane) | 0.68 |
| Inclined disk | 9D/11 (D = diameter) | 0.56 |
| Vertical cylinder | x (height of cylinder) | 0.68 |
| Cone | 4x/5 (x = distance along sloping surface) | 0.54 |
| Horizontal cylinder | (D = diameter of cylinder) | 0.36 |

Warning: The values indicated for the Horizontal cylinder are wrong; see discussion.
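The general correlation and its Prandtl-number function can be evaluated with a few lines of code. This is a sketch under the assumption that the correlation has the form Nu = [Nu0^(1/2) + Ra^(1/6) (f4(Pr)/300)^(1/6)]² with f4(Pr) = [1 + (0.5/Pr)^(9/16)]^(−16/9); the function names and the sample Ra and Pr are hypothetical, and the horizontal cylinder is left out because its tabulated values are flagged as unreliable.

```python
# Nu0 values copied from the table (horizontal cylinder omitted on purpose).
NU0 = {
    "inclined plane": 0.68,
    "inclined disk": 0.56,
    "vertical cylinder": 0.68,
    "cone": 0.54,
}


def f4(prandtl):
    """Prandtl-number function of the general correlation."""
    return (1.0 + (0.5 / prandtl) ** (9.0 / 16.0)) ** (-16.0 / 9.0)


def nusselt_general(rayleigh, prandtl, nu0):
    """Nu = [Nu0**(1/2) + Ra**(1/6) * (f4(Pr)/300)**(1/6)]**2."""
    term = rayleigh ** (1.0 / 6.0) * (f4(prandtl) / 300.0) ** (1.0 / 6.0)
    return (nu0 ** 0.5 + term) ** 2


# Hypothetical case: Ra = 1e7 in a fluid with Pr = 0.7 (roughly air).
for shape, nu0 in NU0.items():
    print(f"{shape}: Nu = {nusselt_general(1.0e7, 0.7, nu0):.2f}")
```

Ra must be computed with the characteristic length listed for the chosen geometry. In the limit of vanishing Ra the expression returns Nu0, which is how the tabulated constants enter the correlation.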

Natural convection from a vertical plate

One example of natural convection is heat transfer from an isothermal vertical plate immersed in a fluid, causing the fluid to move parallel to the plate. This will occur in any system wherein the density of the moving fluid varies with position. These phenomena will only be of significance when the moving fluid is minimally affected by forced convection.

When the flow of fluid is a result of heating, the following correlations can be used, assuming the fluid is an ideal diatomic gas adjacent to a vertical plate at constant temperature and the flow of the fluid is completely laminar.

$$\mathrm{Nu}_{m} = 0.478 \, \mathrm{Gr}^{0.25}$$

Mean Nusselt number = $\mathrm{Nu}_{m} = h_{m} L / k$

where

- $h_{m}$ = mean coefficient applicable between the lower edge of the plate and any point at a distance L (W/(m²·K))

- $L$ = height of the vertical surface (m)

- $k$ = thermal conductivity (W/(m·K))

Grashof number = $\mathrm{Gr} = \dfrac{g L^{3} (t_{s} - t_{\infty})}{\nu^{2} T}$

where

- $g$ = gravitational acceleration (m/s²)

- $L$ = distance above the lower edge (m)

- $t_{s}$ = temperature of the wall (K)

- $t_{\infty}$ = fluid temperature outside the thermal boundary layer (K)

- $\nu$ = kinematic viscosity of the fluid (m²/s)

- $T$ = absolute temperature (K)

When the flow is turbulent, different correlations involving the Rayleigh number (a function of both the Grashof number and the Prandtl number) must be used.

Note that the above equation differs from the usual expression for the Grashof number because the value $\beta$ has been replaced by its approximation $1/T$, which applies for ideal gases only (a reasonable approximation for air at ambient pressure).
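The laminar vertical-plate correlation can be worked through for a concrete case. The sketch below assumes Gr = gL³(t_s − t∞)/(ν²T) with β approximated by 1/T, Nu_m = 0.478 Gr^0.25 and h_m = Nu_m k/L. The air properties are rounded illustrative values, and taking T as the mean of the wall and free-stream temperatures is an assumption made here, since the text does not say which absolute temperature to use.

```python
def grashof_ideal_gas(g, length, t_surface, t_inf, nu, t_abs):
    """Gr = g * L**3 * (t_s - t_inf) / (nu**2 * T), with beta taken as 1/T."""
    return g * length**3 * (t_surface - t_inf) / (nu**2 * t_abs)


def mean_nusselt_laminar(grashof):
    """Nu_m = 0.478 * Gr**0.25 for the laminar isothermal vertical plate."""
    return 0.478 * grashof**0.25


def mean_heat_transfer_coefficient(nu_m, k, length):
    """h_m = Nu_m * k / L, in W/(m^2*K)."""
    return nu_m * k / length


# Hypothetical case: a 0.1 m tall plate at 320 K in air at 300 K.
NU_AIR = 1.6e-5   # m^2/s, rounded kinematic viscosity of air (assumed)
K_AIR = 0.026     # W/(m*K), rounded thermal conductivity of air (assumed)
t_s, t_inf, height = 320.0, 300.0, 0.1
t_mean = 0.5 * (t_s + t_inf)  # assumed choice for the absolute temperature T

gr = grashof_ideal_gas(9.81, height, t_s, t_inf, NU_AIR, t_mean)
nu_m = mean_nusselt_laminar(gr)
h_m = mean_heat_transfer_coefficient(nu_m, K_AIR, height)
print(f"Gr = {gr:.3g}, Nu_m = {nu_m:.1f}, h_m = {h_m:.2f} W/(m^2*K)")
```

With these inputs the mean heat transfer coefficient comes out at a few watts per square metre per kelvin, the order of magnitude usually associated with free convection in air.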

Pattern formation

Convection, especially Rayleigh–Bénard convection, where the convecting fluid is contained by two rigid horizontal plates, is a convenient example of a pattern-forming system.

When heat is fed into the system from one direction (usually below), at small values it merely diffuses (conducts) from below upward, without causing fluid flow. As the heat flow is increased, above a critical value of the Rayleigh number, the system undergoes a bifurcation from the stable conducting state to the convecting state, where bulk motion of the fluid due to heat begins. If fluid parameters other than density do not depend significantly on temperature, the flow profile is symmetric, with the same volume of fluid rising as falling. This is known as Boussinesq convection.

As the temperature difference between the top and bottom of the fluid becomes higher, significant differences in fluid parameters other than density may develop in the fluid due to temperature. An example of such a parameter is viscosity, which may begin to significantly vary horizontally across layers of fluid. This breaks the symmetry of the system, and generally changes the pattern of up- and down-moving fluid from stripes to hexagons. Such hexagons are one example of a convection cell.

As the Rayleigh number is increased even further above the value where convection cells first appear, the system may undergo other bifurcations, and other more complex patterns, such as spirals, may begin to appear.
