In physics, Lagrangian mechanics is an alternative formulation of classical mechanics founded on the d'Alembert principle of virtual work. It was introduced by the Italian-French mathematician and astronomer Joseph-Louis Lagrange in his presentation to the Turin Academy of Science in 1760, culminating in his 1788 grand opus, Mécanique analytique. Lagrange's approach greatly simplifies the analysis of many problems in mechanics, and it has had a crucial influence on other branches of physics, including relativity and quantum field theory.

Lagrangian mechanics describes a mechanical system as a pair (M, L) consisting of a configuration space M and a smooth function within that space called a Lagrangian. For many systems, L = T − V, where T and V are the kinetic and potential energy of the system, respectively.

The stationary action principle requires that the action functional of the system derived from L must remain at a stationary point (specifically, a maximum, minimum, or saddle point) throughout the time evolution of the system. This constraint allows the calculation of the equations of motion of the system using Lagrange's equations.

Introduction

Newton's laws and the concept of forces are the usual starting point for teaching about mechanical systems. This method works well for many problems, but for others the approach is nightmarishly complicated. For example, when calculating the motion of a torus rolling on a horizontal surface with a pearl sliding inside, the time-varying constraint forces, which depend on the angular velocity of the torus and on the motion of the pearl relative to the torus, make it difficult to determine the motion of the torus with Newton's equations. Lagrangian mechanics adopts energy rather than force as its basic ingredient, leading to more abstract equations capable of tackling more complex problems.

In particular, Lagrange's approach was to set up independent generalized coordinates for the position and speed of every object, which allows a general form of the Lagrangian (total kinetic energy minus potential energy of the system) to be written down. Integrating the Lagrangian over time along any conceivable path of motion of the particles yields the 'action' of that path, and requiring the action to be stationary gives a generalized set of equations of motion. The action is stationary (often, but not necessarily, minimal) along the path that the system actually takes. This choice eliminates the need for the constraint forces to enter into the resultant generalized system of equations. There are fewer equations since one is not directly calculating the influence of the constraint on the particle at a given moment.

For a wide variety of physical systems, if the size and shape of a massive object are negligible, it is a useful simplification to treat it as a point particle. For a system of N point particles with masses m1, m2, ..., mN, each particle has a position vector, denoted r1, r2, ..., rN. Cartesian coordinates are often sufficient, so r1 = (x1, y1, z1), r2 = (x2, y2, z2) and so on. In three-dimensional space, each position vector requires three coordinates to uniquely define the location of a point, so there are 3N coordinates to uniquely define the configuration of the system. These are all specific points in space to locate the particles; a general point in space is written r = (x, y, z). The velocity of each particle describes how fast, and in which direction, the particle moves along its path of motion, and is the time derivative of its position, thus

$$\mathbf{v}_1 = \frac{d\mathbf{r}_1}{dt}, \quad \mathbf{v}_2 = \frac{d\mathbf{r}_2}{dt}, \quad \ldots, \quad \mathbf{v}_N = \frac{d\mathbf{r}_N}{dt}.$$

In Newtonian mechanics, the equations of motion are given by Newton's laws. The second law, "net force equals mass times acceleration", applies to each particle. For an N-particle system in 3 dimensions, there are 3N second-order ordinary differential equations in the positions of the particles to solve for.

Lagrangian

Instead of forces, Lagrangian mechanics uses the energies in the system. The central quantity of Lagrangian mechanics is the Lagrangian, a function which summarizes the dynamics of the entire system. Overall, the Lagrangian has units of energy, but no single expression for all physical systems. Any function which generates the correct equations of motion, in agreement with physical laws, can be taken as a Lagrangian. It is nevertheless possible to construct general expressions for large classes of applications. The non-relativistic Lagrangian for a system of particles in the absence of an electromagnetic field is given by

$$L = T - V,$$

where

$$T = \frac{1}{2}\sum_{k=1}^{N} m_k v_k^2$$

is the total kinetic energy of the system, equaling the sum Σ of the kinetic energies of the particles. Each particle labeled $k$ has mass $m_k$, and $v_k^2 = \mathbf{v}_k \cdot \mathbf{v}_k$ is the magnitude squared of its velocity, equivalent to the dot product of the velocity with itself.

Kinetic energy T is the energy of the system's motion and is a function only of the velocities vk, not the positions rk, nor time t, so T = T(v1, v2, ...).

V, the potential energy of the system, reflects the energy of interaction between the particles, i.e. how much energy any one particle has due to all the others, together with any external influences. For conservative forces (e.g. Newtonian gravity), it is a function of the position vectors of the particles only, so V = V(r1, r2, ...). For those non-conservative forces which can be derived from an appropriate potential (e.g. electromagnetic potential), the velocities will appear also, V = V(r1, r2, ..., v1, v2, ...). If there is some external field or external driving force changing with time, the potential changes with time, so most generally V = V(r1, r2, ..., v1, v2, ..., t).

Mathematical formulation (for finite particle systems)

An equivalent but more mathematically formal definition of the Lagrangian is as follows. For a system of N particles in three-dimensional space, the configuration space of the system is a smooth manifold $M$, where each configuration $x \in M$ specifies the spatial positions of each of the particles at a given instant of time, and the manifold is composed of all the configurations that are allowed by the constraints on the system.

The Lagrangian is a smooth function

$$L : TM \times \mathbb{R}_t \to \mathbb{R},$$

where $TM$ is the tangent bundle of the configuration space. That is, each element in $TM$ represents both the positions and the velocities of the particles, and can be written as a tuple $(\mathbf{x}_1, \ldots, \mathbf{x}_N, \mathbf{v}_1, \ldots, \mathbf{v}_N)$ with $\mathbf{x}_i$ and $\mathbf{v}_i$ specifying the position and the velocity of the i-th particle respectively. The time dependence allows for the Lagrangian to describe time-dependent forces or potentials.

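A trajectory of the system is a smooth function $\gamma : \mathbb{R}_t \to M$ describing the evolution of the configuration over time. Its velocity $\dot\gamma(t)$ is the time derivative of $\gamma(t)$, and the pair $(\gamma(t), \dot\gamma(t))$ is thus an element of the bundle $TM$ for any $t$. The action functional of the trajectory can therefore be defined as the integral of the Lagrangian along the path:

$$S[\gamma] = \int_{t_0}^{t_1} L(\gamma(t), \dot\gamma(t), t)\, dt.$$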

The laws of motion in Lagrangian mechanics are derived from the postulate that among all trajectories between two given configurations, the actual one that will be taken by the system must be a critical point (often but not necessarily a local minimum) of the action functional. This leads to the Euler-Lagrange equations (see also below).
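The critical-point property can be checked numerically. The following is a minimal sketch, not a general solver: it assumes a single particle of unit mass in uniform gravity (an illustrative system, not one singled out by the text), approximates the action by a midpoint sum, and compares the true path with perturbed paths that share the same end points. All function names are hypothetical.

```python
import math

G = 9.81      # gravitational acceleration, illustrative value
T_END = 1.0   # final time; the path starts at t = 0


def lagrangian(q, v, t, m=1.0, g=G):
    """L = T - V for a particle of mass m at height q in uniform gravity."""
    return 0.5 * m * v**2 - m * g * q


def action(path, t0, t1, n=2000):
    """Approximate S = integral of L(q, dq/dt, t) dt by a midpoint sum.

    `path` maps t to q(t); the velocity on each step is estimated by a
    finite difference of the path across that step.
    """
    dt = (t1 - t0) / n
    total = 0.0
    for i in range(n):
        ta, tb = t0 + i * dt, t0 + (i + 1) * dt
        qa, qb = path(ta), path(tb)
        v = (qb - qa) / dt
        total += lagrangian(0.5 * (qa + qb), v, 0.5 * (ta + tb)) * dt
    return total


def true_path(t):
    # Solution of q'' = -g with q(0) = q(T_END) = 0
    return 0.5 * G * t * (T_END - t)


def perturbed_path(eps):
    # Same end points: the sine term vanishes at t = 0 and t = T_END
    return lambda t: true_path(t) + eps * math.sin(math.pi * t / T_END)


s_true = action(true_path, 0.0, T_END)
s_plus = action(perturbed_path(0.1), 0.0, T_END)
s_minus = action(perturbed_path(-0.1), 0.0, T_END)
print(s_true, s_plus, s_minus)
```

For this particular Lagrangian the critical point happens to be a minimum, so both perturbed actions come out larger than `s_true`, and by nearly the same amount, because the change in the action has no term linear in the perturbation.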

Equations of motion

For a system of particles with masses $m_1, m_2, \ldots, m_N$, the kinetic energy is

$$T = \frac{1}{2}\sum_{i=1}^{N} m_i |\mathbf{v}_i|^2,$$

where $\mathbf{v}_i$ is the velocity of particle i.

The potential energy depends only on the configuration (and possibly on time), and typically arises from conservative forces.

The standard Lagrangian is given by the difference:

$$L(x, v, t) = T(v) - V(x, t).$$

This formulation covers both conservative and time-dependent systems and forms the basis for generalizations to continuous systems (fields), constrained systems, and systems with curved configuration spaces.

If T or V or both depend explicitly on time due to time-varying constraints or external influences, the Lagrangian L(r1, r2, ... v1, v2, ... t) is explicitly time-dependent. If neither the potential nor the kinetic energy depend on time, then the Lagrangian L(r1, r2, ... v1, v2, ...) is explicitly independent of time. In either case, the Lagrangian always has implicit time dependence through the generalized coordinates.

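With these definitions, Lagrange's equations are

$$\frac{\partial L}{\partial \mathbf{r}_k} - \frac{d}{dt}\frac{\partial L}{\partial \dot{\mathbf{r}}_k} + \sum_{i=1}^{C} \lambda_i \frac{\partial f_i}{\partial \mathbf{r}_k} = 0,$$

where k = 1, 2, ..., N labels the particles, there is a Lagrange multiplier λi for each constraint equation fi, and

$$\frac{\partial}{\partial \mathbf{r}_k} \equiv \left(\frac{\partial}{\partial x_k}, \frac{\partial}{\partial y_k}, \frac{\partial}{\partial z_k}\right), \qquad \frac{\partial}{\partial \dot{\mathbf{r}}_k} \equiv \left(\frac{\partial}{\partial \dot{x}_k}, \frac{\partial}{\partial \dot{y}_k}, \frac{\partial}{\partial \dot{z}_k}\right)$$

are each shorthands for a vector of partial derivatives ∂/∂ with respect to the indicated variables (not a derivative with respect to the entire vector). Each overdot is a shorthand for a time derivative. This procedure does increase the number of equations to solve compared to Newton's laws, from 3N to 3N + C, because there are 3N coupled second-order differential equations in the position coordinates and multipliers, plus C constraint equations. However, when solved alongside the position coordinates of the particles, the multipliers can yield information about the constraint forces. The coordinates do not need to be eliminated by solving the constraint equations.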

In the Lagrangian, the position coordinates and velocity components are all independent variables, and derivatives of the Lagrangian are taken with respect to these separately according to the usual differentiation rules (e.g. the partial derivative of L with respect to the z velocity component of particle 2, defined by vz,2 = dz2/dt, is just ∂L/∂vz,2; no awkward chain rules or total derivatives need to be used to relate the velocity component to the corresponding coordinate z2).

In each constraint equation, one coordinate is redundant because it is determined from the other coordinates. The number of independent coordinates is therefore n = 3N − C. We can transform each position vector to a common set of n generalized coordinates, conveniently written as an n-tuple q = (q1, q2, ... qn), by expressing each position vector, and hence the position coordinates, as functions of the generalized coordinates and time:

$$\mathbf{r}_k = \mathbf{r}_k(\mathbf{q}, t) = \big(x_k(\mathbf{q}, t),\; y_k(\mathbf{q}, t),\; z_k(\mathbf{q}, t)\big).$$

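The vector q is a point in the configuration space of the system. The time derivatives of the generalized coordinates are called the generalized velocities, and for each particle the transformation of its velocity vector, the total derivative of its position with respect to time, is

$$\dot{q}_j = \frac{dq_j}{dt}, \qquad \mathbf{v}_k = \sum_{j=1}^{n} \frac{\partial \mathbf{r}_k}{\partial q_j}\dot{q}_j + \frac{\partial \mathbf{r}_k}{\partial t}.$$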

Given this vk, the kinetic energy in generalized coordinates depends on the generalized velocities, generalized coordinates, and time if the position vectors depend explicitly on time due to time-varying constraints, so

$$T = T(\mathbf{q}, \dot{\mathbf{q}}, t).$$

With these definitions, the Euler–Lagrange equations,

$$\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{q}_j}\right) = \frac{\partial L}{\partial q_j}, \qquad j = 1, 2, \ldots, n,$$

are mathematical results from the calculus of variations, which can also be used in mechanics. Substituting in the Lagrangian L(q, dq/dt, t) gives the equations of motion of the system. The number of equations has decreased compared to Newtonian mechanics, from 3N to n = 3N − C coupled second-order differential equations in the generalized coordinates. These equations do not include constraint forces at all; only non-constraint forces need to be accounted for.
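As a worked illustration of this reduction, consider a plane pendulum: one particle (N = 1) of mass $m$ on a rigid massless rod of length $\ell$, with two constraints (motion in a plane, fixed distance from the pivot), so that n = 3 − 2 = 1. The example and the symbols $\ell$, $g$ and $\theta$ (angle from the downward vertical) are assumptions introduced here for illustration.

```latex
\begin{aligned}
\mathbf{r}(\theta) &= (\ell\sin\theta,\; -\ell\cos\theta), &
\mathbf{v} &= \ell\dot\theta\,(\cos\theta,\; \sin\theta), \\
T &= \tfrac{1}{2} m \ell^2 \dot\theta^2, &
V &= -m g \ell \cos\theta, \\
L &= \tfrac{1}{2} m \ell^2 \dot\theta^2 + m g \ell \cos\theta, \\
\frac{d}{dt}\frac{\partial L}{\partial \dot\theta} &= m \ell^2 \ddot\theta, &
\frac{\partial L}{\partial \theta} &= -m g \ell \sin\theta, \\
\ddot\theta &= -\frac{g}{\ell}\sin\theta .
\end{aligned}
```

The tension in the rod never appears: a single second-order equation in $\theta$ replaces the three Newtonian component equations together with the unknown constraint force.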

Although the equations of motion include partial derivatives, the results of the partial derivatives are still ordinary differential equations in the position coordinates of the particles. The total time derivative denoted d/dt often involves implicit differentiation. Both equations are linear in the Lagrangian, but generally are nonlinear coupled equations in the coordinates.

Extensions

As already noted, this form of L is applicable to many important classes of system, but not everywhere. For relativistic Lagrangian mechanics it must be replaced as a whole by a function consistent with special relativity (scalar under Lorentz transformations) or general relativity (4-scalar). Where a magnetic field is present, the expression for the potential energy needs restating. And for dissipative forces (e.g., friction), another function must be introduced alongside the Lagrangian, often referred to as a "Rayleigh dissipation function", to account for the loss of energy.

One or more of the particles may each be subject to one or more holonomic constraints; such a constraint is described by an equation of the form f(r, t) = 0. If the number of constraints in the system is C, then each constraint has an equation f1(r, t) = 0, f2(r, t) = 0, ..., fC(r, t) = 0, each of which could apply to any of the particles. If particle k is subject to constraint i, then fi(rk, t) = 0. At any instant of time, the coordinates of a constrained particle are linked together and not independent. The constraint equations determine the allowed paths the particles can move along, but not where they are or how fast they go at every instant of time. Nonholonomic constraints depend on the particle velocities, accelerations, or higher derivatives of position. Lagrangian mechanics can only be applied to systems whose constraints, if any, are all holonomic. Three examples of nonholonomic constraints are: when the constraint equations are non-integrable, when the constraints have inequalities, or when the constraints involve complicated non-conservative forces like friction. Nonholonomic constraints require special treatment, and one may have to revert to Newtonian mechanics or use other methods.

From Newtonian to Lagrangian mechanics

Newton's laws

For simplicity, Newton's laws can be illustrated for one particle without much loss of generality (for a system of N particles, all of these equations apply to each particle in the system). The equation of motion for a particle of constant mass m is Newton's second law of 1687, in modern vector notation

$$\mathbf{F} = m\mathbf{a},$$

where a is its acceleration and F the resultant force acting on it. Where the mass is varying, the equation needs to be generalised to take the time derivative of the momentum. In three spatial dimensions, this is a system of three coupled second-order ordinary differential equations to solve, since there are three components in this vector equation. The solution is the position vector r of the particle at time t, subject to the initial conditions of r and v when t = 0.

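Newton's laws are easy to use in Cartesian coordinates, but Cartesian coordinates are not always convenient, and for other coordinate systems the equations of motion can become complicated. In a set of curvilinear coordinates ξ = (ξ1, ξ2, ξ3), the law in tensor index notation is the "Lagrangian form"

$$F^a = m\left(\frac{d^2\xi^a}{dt^2} + \Gamma^a{}_{bc}\frac{d\xi^b}{dt}\frac{d\xi^c}{dt}\right) = g^{ad}\left(\frac{d}{dt}\frac{\partial T}{\partial \dot\xi^d} - \frac{\partial T}{\partial \xi^d}\right), \qquad \dot\xi^a \equiv \frac{d\xi^a}{dt},$$

where $F^a$ is the a-th contravariant component of the resultant force acting on the particle, $\Gamma^a{}_{bc}$ are the Christoffel symbols of the second kind,

$$T = \frac{1}{2} m\, g_{bc}\frac{d\xi^b}{dt}\frac{d\xi^c}{dt}$$

is the kinetic energy of the particle, and $g_{bc}$ are the covariant components of the metric tensor of the curvilinear coordinate system (with $g^{ad}$ the components of its inverse). All the indices a, b, c, d each take the values 1, 2, 3, and repeated indices are summed over. Curvilinear coordinates are not the same as generalized coordinates.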

It may seem like an overcomplication to cast Newton's law in this form, but there are advantages. The acceleration components in terms of the Christoffel symbols can be avoided by evaluating derivatives of the kinetic energy instead. If there is no resultant force acting on the particle, F = 0, it does not accelerate, but moves with constant velocity in a straight line. Mathematically, the solutions of the differential equation are geodesics, the curves of extremal length between two points in space (these may end up being minimal, that is the shortest paths, but not necessarily). In flat 3D real space the geodesics are simply straight lines. So for a free particle, Newton's second law coincides with the geodesic equation and states that free particles follow geodesics, the extremal trajectories it can move along. If the particle is subject to forces F ≠ 0, the particle accelerates due to forces acting on it and deviates away from the geodesics it would follow if free. With appropriate extensions of the quantities given here in flat 3D space to 4D curved spacetime, the above form of Newton's law also carries over to Einstein's general relativity, in which case free particles follow geodesics in curved spacetime that are no longer "straight lines" in the ordinary sense.

However, we still need to know the total resultant force F acting on the particle, which in turn requires the resultant non-constraint force N plus the resultant constraint force C,

$$\mathbf{F} = \mathbf{C} + \mathbf{N}.$$

The constraint forces can be complicated, since they generally depend on time. Also, if there are constraints, the curvilinear coordinates are not independent but related by one or more constraint equations.

The constraint forces can either be eliminated from the equations of motion, so only the non-constraint forces remain, or included by including the constraint equations in the equations of motion.

D'Alembert's principle

A fundamental result in analytical mechanics is D'Alembert's principle, introduced in 1708 by Jacques Bernoulli to understand static equilibrium, and developed by D'Alembert in 1743 to solve dynamical problems. The principle asserts for N particles the virtual work, i.e. the work along a virtual displacement, δrk, is zero:

$$\delta W = \sum_{k=1}^{N} (\mathbf{N}_k + \mathbf{C}_k - m_k\mathbf{a}_k)\cdot\delta\mathbf{r}_k = 0.$$

The virtual displacements, δrk, are by definition infinitesimal changes in the configuration of the system consistent with the constraint forces acting on the system at an instant of time, i.e. in such a way that the constraint forces maintain the constrained motion. They are not the same as the actual displacements in the system, which are caused by the resultant constraint and non-constraint forces acting on the particle to accelerate and move it. Virtual work is the work done along a virtual displacement for any force (constraint or non-constraint).

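Since the constraint forces act perpendicular to the motion of each particle in the system to maintain the constraints, the total virtual work by the constraint forces acting on the system is zero:

$$\sum_{k=1}^{N} \mathbf{C}_k\cdot\delta\mathbf{r}_k = 0,$$

so that

$$\sum_{k=1}^{N} (\mathbf{N}_k - m_k\mathbf{a}_k)\cdot\delta\mathbf{r}_k = 0.$$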

Thus D'Alembert's principle allows us to concentrate on only the applied non-constraint forces, and exclude the constraint forces in the equations of motion. The form shown is also independent of the choice of coordinates. However, it cannot be readily used to set up the equations of motion in an arbitrary coordinate system since the displacements δrk might be connected by a constraint equation, which prevents us from setting the N individual summands to 0. We will therefore seek a system of mutually independent coordinates for which the total sum will be 0 if and only if the individual summands are 0. Setting each of the summands to 0 will eventually give us our separated equations of motion.

Equations of motion from D'Alembert's principle

If there are constraints on particle k, then since the coordinates of the position rk = (xk, yk, zk) are linked together by a constraint equation, so are those of the virtual displacements δrk = (δxk, δyk, δzk). Since the generalized coordinates are independent, we can avoid the complications with the δrk by converting to virtual displacements in the generalized coordinates. These are related in the same form as a total differential,

$$\delta\mathbf{r}_k = \sum_{j=1}^{n} \frac{\partial\mathbf{r}_k}{\partial q_j}\,\delta q_j.$$

There is no term containing a partial derivative with respect to time multiplied by a time increment, since this is a virtual displacement, one along the constraints at an instant of time.

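The first term in D'Alembert's principle above is the virtual work done by the non-constraint forces Nk along the virtual displacements δrk, and can without loss of generality be converted into the generalized analogues by the definition of generalized forces

$$Q_j = \sum_{k=1}^{N} \mathbf{N}_k\cdot\frac{\partial\mathbf{r}_k}{\partial q_j},$$

so that

$$\sum_{k=1}^{N} \mathbf{N}_k\cdot\delta\mathbf{r}_k = \sum_{k=1}^{N} \mathbf{N}_k\cdot\sum_{j=1}^{n}\frac{\partial\mathbf{r}_k}{\partial q_j}\,\delta q_j = \sum_{j=1}^{n} Q_j\,\delta q_j.$$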

This is half of the conversion to generalized coordinates. It remains to convert the acceleration term into generalized coordinates, which is not immediately obvious. Recalling the Lagrange form of Newton's second law, the partial derivatives of the kinetic energy with respect to the generalized coordinates and velocities can be found to give the desired result:

$$\sum_{k=1}^{N} m_k\mathbf{a}_k\cdot\frac{\partial\mathbf{r}_k}{\partial q_j} = \frac{d}{dt}\frac{\partial T}{\partial\dot{q}_j} - \frac{\partial T}{\partial q_j}.$$

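Now D'Alembert's principle is in the generalized coordinates as required,

$$\sum_{j=1}^{n}\left[Q_j - \left(\frac{d}{dt}\frac{\partial T}{\partial\dot{q}_j} - \frac{\partial T}{\partial q_j}\right)\right]\delta q_j = 0,$$

and since these virtual displacements δqj are independent and arbitrary, the coefficients can be equated to zero, resulting in Lagrange's equations or the generalized equations of motion,

$$Q_j = \frac{d}{dt}\frac{\partial T}{\partial\dot{q}_j} - \frac{\partial T}{\partial q_j}.$$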

These equations are equivalent to Newton's laws for the non-constraint forces. The generalized forces in this equation are derived from the non-constraint forces only – the constraint forces have been excluded from D'Alembert's principle and do not need to be found. The generalized forces may be non-conservative, provided they satisfy D'Alembert's principle.
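A short worked case may make the generalized forces concrete. The sketch below assumes a single particle of mass $m$ moving in a plane under a non-constraint force $\mathbf{N}$ with polar components $N_r$ and $N_\theta$; the choice of plane polar coordinates $(r, \theta)$ and the unit vectors $\mathbf{e}_r$, $\mathbf{e}_\theta$ are illustrative assumptions, not taken from the text.

```latex
\begin{aligned}
\mathbf{r} &= (r\cos\theta,\; r\sin\theta), &
\frac{\partial\mathbf{r}}{\partial r} &= \mathbf{e}_r, \qquad
\frac{\partial\mathbf{r}}{\partial\theta} = r\,\mathbf{e}_\theta, \\
Q_r &= \mathbf{N}\cdot\mathbf{e}_r = N_r, &
Q_\theta &= \mathbf{N}\cdot r\,\mathbf{e}_\theta = r N_\theta, \\
T &= \tfrac{1}{2} m\left(\dot r^2 + r^2\dot\theta^2\right), \\
Q_r &= \frac{d}{dt}\frac{\partial T}{\partial\dot r} - \frac{\partial T}{\partial r}
     = m\ddot r - m r\dot\theta^2, &
Q_\theta &= \frac{d}{dt}\frac{\partial T}{\partial\dot\theta} - \frac{\partial T}{\partial\theta}
     = \frac{d}{dt}\left(m r^2\dot\theta\right).
\end{aligned}
```

Here $Q_r$ is an ordinary force component while $Q_\theta$ is a torque, which shows that a generalized force need not have the dimensions of force. The centripetal term $-m r\dot\theta^2$ arises from the derivative of the kinetic energy, without any Christoffel symbols being computed.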

Euler–Lagrange equations and Hamilton's principle

For a non-conservative force which depends on velocity, it may be possible to find a potential energy function V that depends on positions and velocities. If the generalized forces Qj can be derived from a potential V such that

$$Q_j = \frac{d}{dt}\frac{\partial V}{\partial\dot{q}_j} - \frac{\partial V}{\partial q_j},$$

equating to Lagrange's equations and defining the Lagrangian as L = T − V obtains Lagrange's equations of the second kind or the Euler–Lagrange equations of motion

$$\frac{\partial L}{\partial q_j} - \frac{d}{dt}\frac{\partial L}{\partial\dot{q}_j} = 0.$$

However, the Euler–Lagrange equations can only account for non-conservative forces if a potential can be found as shown. This may not always be possible for non-conservative forces, and Lagrange's equations do not involve any potential, only generalized forces; therefore they are more general than the Euler–Lagrange equations.
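The best-known case in which such a velocity-dependent potential does exist is a charged particle in an electromagnetic field. The sketch below uses symbols that are not defined in the text and are assumptions here: charge $e$, scalar potential $\phi$, and vector potential $\mathbf{A}$, in SI units and Cartesian coordinates.

```latex
\begin{aligned}
V(\mathbf{r}, \mathbf{v}, t) &= e\,\phi(\mathbf{r}, t) - e\,\mathbf{v}\cdot\mathbf{A}(\mathbf{r}, t), \\
L &= \tfrac{1}{2} m v^2 - e\,\phi + e\,\mathbf{v}\cdot\mathbf{A}, \\
Q_x &= \frac{d}{dt}\frac{\partial V}{\partial\dot x} - \frac{\partial V}{\partial x}
     = -e\,\frac{dA_x}{dt} - e\,\frac{\partial\phi}{\partial x}
       + e\,\mathbf{v}\cdot\frac{\partial\mathbf{A}}{\partial x}
     = e\left(\mathbf{E} + \mathbf{v}\times\mathbf{B}\right)_x, \\
\mathbf{E} &= -\nabla\phi - \frac{\partial\mathbf{A}}{\partial t}, \qquad
\mathbf{B} = \nabla\times\mathbf{A}.
\end{aligned}
```

The total derivative $dA_x/dt = \partial A_x/\partial t + (\mathbf{v}\cdot\nabla)A_x$ is what produces the magnetic part of the Lorentz force. A friction force proportional to velocity, by contrast, admits no such potential and has to be kept as a generalized force.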

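The Euler–Lagrange equations also follow from the calculus of variations. The variation of the Lagrangian is

$$\delta L = \sum_{j=1}^{n}\left(\frac{\partial L}{\partial q_j}\,\delta q_j + \frac{\partial L}{\partial\dot{q}_j}\,\delta\dot{q}_j\right), \qquad \delta\dot{q}_j \equiv \delta\frac{dq_j}{dt} \equiv \frac{d(\delta q_j)}{dt},$$

which has a form similar to the total differential of L, but the virtual displacements and their time derivatives replace differentials, and there is no time increment in accordance with the definition of the virtual displacements. An integration by parts with respect to time can transfer the time derivative of δqj to the ∂L/∂(dqj/dt), in the process exchanging d(δqj)/dt for δqj, allowing the independent virtual displacements to be factorized from the derivatives of the Lagrangian,

$$\int_{t_1}^{t_2}\delta L\,dt = \sum_{j=1}^{n}\left[\frac{\partial L}{\partial\dot{q}_j}\,\delta q_j\right]_{t_1}^{t_2} + \int_{t_1}^{t_2}\sum_{j=1}^{n}\left(\frac{\partial L}{\partial q_j} - \frac{d}{dt}\frac{\partial L}{\partial\dot{q}_j}\right)\delta q_j\,dt.$$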

Now, if the condition δqj(t1) = δqj(t2) = 0 holds for all j, the terms not integrated are zero. If in addition the entire time integral of δL is zero, then because the δqj are independent and arbitrary, the integral can vanish for every choice of δqj only if each of the coefficients of δqj is zero. Then we obtain the equations of motion. This can be summarized by Hamilton's principle:

$$\int_{t_1}^{t_2}\delta L\,dt = 0.$$

The time integral of the Lagrangian is another quantity called the action, defined as

$$S = \int_{t_1}^{t_2} L\,dt,$$

which is a functional; it takes in the Lagrangian function for all times between t1 and t2 and returns a scalar value. Its dimensions are the same as [angular momentum], [energy]·[time], or [length]·[momentum]. With this definition Hamilton's principle is

$$\delta S = 0.$$

Instead of thinking about particles accelerating in response to applied forces, one might think of them picking out the path with a stationary action, with the end points of the path in configuration space held fixed at the initial and final times. Hamilton's principle is one of several action principles.

Historically, the idea of finding the shortest path a particle can follow subject to a force motivated the first applications of the calculus of variations to mechanical problems, such as the Brachistochrone problem solved by Jean Bernoulli in 1696, as well as Leibniz, Daniel Bernoulli, L'Hôpital around the same time, and Newton the following year. Newton himself was thinking along the lines of the variational calculus, but did not publish. These ideas in turn led to the variational principles of mechanics, of Fermat, Maupertuis, Euler, Hamilton, and others.

Hamilton's principle can be applied to nonholonomic constraints if the constraint equations can be put into a certain form, a linear combination of first-order differentials in the coordinates. The resulting constraint equation can be rearranged into a first-order differential equation. This will not be given here.

Lagrange multipliers and constraints

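The Lagrangian L can be varied in the Cartesian rk coordinates, for N particles,

$$\int_{t_1}^{t_2}\sum_{k=1}^{N}\left(\frac{\partial L}{\partial\mathbf{r}_k} - \frac{d}{dt}\frac{\partial L}{\partial\dot{\mathbf{r}}_k}\right)\cdot\delta\mathbf{r}_k\,dt = 0.$$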

Hamilton's principle is still valid even if the coordinates L is expressed in are not independent, here rk, but the constraints are still assumed to be holonomic. As always the end points are fixed δrk(t1) = δrk(t2) = 0 for all k. What cannot be done is to simply equate the coefficients of δrk to zero because the δrk are not independent. Instead, the method of Lagrange multipliers can be used to include the constraints. Multiplying each constraint equation fi(rk, t) = 0 by a Lagrange multiplier λi for i = 1, 2, ..., C, and adding the results to the original Lagrangian, gives the new Lagrangian

$$L' = L(\mathbf{r}_1, \mathbf{r}_2, \ldots, \dot{\mathbf{r}}_1, \dot{\mathbf{r}}_2, \ldots, t) + \sum_{i=1}^{C}\lambda_i(t)\,f_i(\mathbf{r}_k, t).$$

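The Lagrange multipliers are arbitrary functions of time t, but not functions of the coordinates rk, so the multipliers are on equal footing with the position coordinates. Varying this new Lagrangian and integrating with respect to time gives

$$\int_{t_1}^{t_2}\delta L'\,dt = \int_{t_1}^{t_2}\sum_{k=1}^{N}\left(\frac{\partial L}{\partial\mathbf{r}_k} - \frac{d}{dt}\frac{\partial L}{\partial\dot{\mathbf{r}}_k} + \sum_{i=1}^{C}\lambda_i\frac{\partial f_i}{\partial\mathbf{r}_k}\right)\cdot\delta\mathbf{r}_k\,dt = 0.$$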

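The introduced multipliers can be found so that the coefficients of δrk are zero, even though the rk are not independent. The equations of motion follow. From the preceding analysis, obtaining the solution to this integral is equivalent to the statement

$$\frac{\partial L'}{\partial\mathbf{r}_k} - \frac{d}{dt}\frac{\partial L'}{\partial\dot{\mathbf{r}}_k} = 0 \quad\Rightarrow\quad \frac{\partial L}{\partial\mathbf{r}_k} - \frac{d}{dt}\frac{\partial L}{\partial\dot{\mathbf{r}}_k} + \sum_{i=1}^{C}\lambda_i\frac{\partial f_i}{\partial\mathbf{r}_k} = 0,$$

which are Lagrange's equations of the first kind. Also, the λi Euler–Lagrange equations for the new Lagrangian return the constraint equations

$$\frac{\partial L'}{\partial\lambda_i} - \frac{d}{dt}\frac{\partial L'}{\partial\dot\lambda_i} = 0 \quad\Rightarrow\quad f_i(\mathbf{r}_k, t) = 0.$$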

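For the case of a conservative force given by the gradient of some potential energy V, a function of the rk coordinates only, substituting the Lagrangian L = T − V gives

$$\underbrace{\frac{\partial T}{\partial\mathbf{r}_k} - \frac{d}{dt}\frac{\partial T}{\partial\dot{\mathbf{r}}_k}}_{-\mathbf{F}_k} + \underbrace{\left(-\frac{\partial V}{\partial\mathbf{r}_k}\right)}_{\mathbf{N}_k} + \sum_{i=1}^{C}\lambda_i\frac{\partial f_i}{\partial\mathbf{r}_k} = 0,$$

and identifying the derivatives of kinetic energy as the (negative of the) resultant force, and the derivatives of the potential equaling the non-constraint force, it follows that the constraint forces are

$$\mathbf{C}_k = \sum_{i=1}^{C}\lambda_i\frac{\partial f_i}{\partial\mathbf{r}_k},$$

thus giving the constraint forces explicitly in terms of the constraint equations and the Lagrange multipliers.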
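As a hedged illustration of how a multiplier encodes a constraint force, take a plane pendulum in Cartesian coordinates: a particle of mass $m$ at $(x, y)$, with $y$ measured upward, uniform gravity $g$, and the single holonomic constraint that its distance from the origin is $\ell$. The system and the symbols $\ell$ and $g$ are assumptions chosen for the example.

```latex
\begin{aligned}
L &= \tfrac{1}{2} m\left(\dot x^2 + \dot y^2\right) - m g y, \qquad
f(x, y) = x^2 + y^2 - \ell^2 = 0, \\
L' &= L + \lambda(t)\,f(x, y), \\
x:&\quad -m\ddot x + 2\lambda x = 0, \\
y:&\quad -m g - m\ddot y + 2\lambda y = 0, \\
\lambda:&\quad x^2 + y^2 - \ell^2 = 0, \\
\mathbf{C} &= \lambda\,\frac{\partial f}{\partial\mathbf{r}} = 2\lambda\,(x,\; y).
\end{aligned}
```

The constraint force points along the rod, as expected for a tension, with magnitude $2|\lambda|\ell$; a negative $\lambda$ corresponds to a force directed toward the pivot. There are three equations for the three unknowns $x(t)$, $y(t)$ and $\lambda(t)$, matching the count 3N + C restricted to the plane.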

Properties of the Lagrangian

Non-uniqueness

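The Lagrangian of a given system is not unique. A Lagrangian L can be multiplied by a nonzero constant a and shifted by an arbitrary constant b, and the new Lagrangian L′ = aL + b will describe the same motion as L. If one restricts as above to trajectories q over a given time interval [tst, tfin] and fixed end points Pst = q(tst) and Pfin = q(tfin), then two Lagrangians describing the same system can differ by the "total time derivative" of a function f(q, t):

$$L'(\mathbf{q}, \dot{\mathbf{q}}, t) = L(\mathbf{q}, \dot{\mathbf{q}}, t) + \frac{df(\mathbf{q}, t)}{dt},$$

where $\frac{df(\mathbf{q}, t)}{dt}$ means

$$\frac{df(\mathbf{q}, t)}{dt} = \frac{\partial f(\mathbf{q}, t)}{\partial t} + \sum_{i}\frac{\partial f(\mathbf{q}, t)}{\partial q_i}\,\dot{q}_i.$$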

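Both Lagrangians L and L′ produce the same equations of motion since the corresponding actions S and S′ are related via

$$S'[\mathbf{q}] = \int_{t_\text{st}}^{t_\text{fin}} L'\,dt = \int_{t_\text{st}}^{t_\text{fin}} L\,dt + \int_{t_\text{st}}^{t_\text{fin}}\frac{df(\mathbf{q}, t)}{dt}\,dt = S[\mathbf{q}] + f(P_\text{fin}, t_\text{fin}) - f(P_\text{st}, t_\text{st}),$$

with the last two components f(Pfin, tfin) and f(Pst, tst) independent of q.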
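The path independence of the extra term can be checked numerically. The sketch below is only an illustration under stated assumptions: a free particle of unit mass, a hypothetical gauge function f(q, t) = q²t picked for convenience, and two arbitrary paths joining the same end points q(0) = 1 and q(1) = 2. Neither path needs to satisfy the equations of motion.

```python
def lagrangian(q, v, t):
    """Free particle of unit mass: L = v^2 / 2."""
    return 0.5 * v * v


def f(q, t):
    """Hypothetical gauge function f(q, t) = q^2 t, chosen for illustration."""
    return q * q * t


def df_dt(q, v, t):
    """Total time derivative of f along a path: (df/dq) v + df/dt."""
    return 2.0 * q * t * v + q * q


def lagrangian_prime(q, v, t):
    """L' = L + df/dt."""
    return lagrangian(q, v, t) + df_dt(q, v, t)


def action(lag, path, velocity, t0, t1, n=4000):
    """Midpoint-rule approximation of the time integral of lag along a path."""
    dt = (t1 - t0) / n
    total = 0.0
    for i in range(n):
        t = t0 + (i + 0.5) * dt
        total += lag(path(t), velocity(t), t) * dt
    return total


# Two different paths (position, velocity) from q(0) = 1 to q(1) = 2
paths = {
    "straight": (lambda t: 1.0 + t, lambda t: 1.0),
    "curved": (lambda t: 1.0 + t * t, lambda t: 2.0 * t),
}

boundary_term = f(2.0, 1.0) - f(1.0, 0.0)
differences = {
    name: action(lagrangian_prime, q, v, 0.0, 1.0)
    - action(lagrangian, q, v, 0.0, 1.0)
    for name, (q, v) in paths.items()
}
print(boundary_term, differences)
```

Both entries of `differences` agree with `boundary_term` up to the quadrature error, so S′ and S differ by the same constant on every path with these end points and therefore share their stationary paths.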

Invariance under point transformations

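Given a set of generalized coordinates q, if we change these variables to a new set of generalized coordinates Q according to a point transformation Q = Q(q, t) which is invertible as q = q(Q, t), the new Lagrangian L′ is a function of the new coordinates,

$$L(\mathbf{q}(\mathbf{Q}, t), \dot{\mathbf{q}}(\mathbf{Q}, \dot{\mathbf{Q}}, t), t) = L'(\mathbf{Q}, \dot{\mathbf{Q}}, t),$$

and similarly for the constraints, and by the chain rule for partial differentiation, Lagrange's equations are invariant under this transformation:

$$\frac{d}{dt}\frac{\partial L'}{\partial\dot{Q}_i} = \frac{\partial L'}{\partial Q_i}.$$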

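For a coordinate transformation $Q_i = Q_i(q_\alpha)$, we have

$$dQ_i = \sum_\alpha\frac{\partial Q_i}{\partial q_\alpha}\,dq_\alpha,$$

which implies that

$$\dot{Q}_i = \sum_\alpha\frac{\partial Q_i}{\partial q_\alpha}\,\dot{q}_\alpha,$$

which implies that

$$\frac{\partial\dot{Q}_i}{\partial\dot{q}_\alpha} = \frac{\partial Q_i}{\partial q_\alpha}.$$

It also follows that

$$\frac{\partial\dot{Q}_i}{\partial q_\alpha} = \sum_\beta\frac{\partial^2 Q_i}{\partial q_\alpha\,\partial q_\beta}\,\dot{q}_\beta,$$

and similarly

$$\frac{d}{dt}\frac{\partial Q_i}{\partial q_\alpha} = \sum_\beta\frac{\partial^2 Q_i}{\partial q_\alpha\,\partial q_\beta}\,\dot{q}_\beta,$$

which imply that

$$\frac{d}{dt}\frac{\partial Q_i}{\partial q_\alpha} = \frac{\partial\dot{Q}_i}{\partial q_\alpha}.$$

The two derived relations can be employed in the proof.

Starting from the Euler–Lagrange equations in the initial set of generalized coordinates, and writing L as a function of $Q$ and $\dot{Q}$ through the chain rule, we have:

$$\begin{aligned}
0 &= \frac{d}{dt}\frac{\partial L}{\partial\dot{q}_\alpha} - \frac{\partial L}{\partial q_\alpha} \\
  &= \frac{d}{dt}\left(\sum_i\frac{\partial L}{\partial\dot{Q}_i}\frac{\partial\dot{Q}_i}{\partial\dot{q}_\alpha}\right) - \sum_i\left(\frac{\partial L}{\partial Q_i}\frac{\partial Q_i}{\partial q_\alpha} + \frac{\partial L}{\partial\dot{Q}_i}\frac{\partial\dot{Q}_i}{\partial q_\alpha}\right) \\
  &= \sum_i\left[\frac{d}{dt}\left(\frac{\partial L}{\partial\dot{Q}_i}\right)\frac{\partial Q_i}{\partial q_\alpha} + \frac{\partial L}{\partial\dot{Q}_i}\frac{d}{dt}\frac{\partial Q_i}{\partial q_\alpha} - \frac{\partial L}{\partial Q_i}\frac{\partial Q_i}{\partial q_\alpha} - \frac{\partial L}{\partial\dot{Q}_i}\frac{\partial\dot{Q}_i}{\partial q_\alpha}\right] \\
  &= \sum_i\left[\frac{d}{dt}\frac{\partial L}{\partial\dot{Q}_i} - \frac{\partial L}{\partial Q_i}\right]\frac{\partial Q_i}{\partial q_\alpha}.
\end{aligned}$$

Since the transformation from $q$ to $Q$ is invertible, the Jacobian matrix $\partial Q_i/\partial q_\alpha$ is non-singular, and it follows that the form of the Euler–Lagrange equation is invariant, i.e.,

$$\frac{d}{dt}\frac{\partial L}{\partial\dot{Q}_i} - \frac{\partial L}{\partial Q_i} = 0.$$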

Cyclic coordinates and conserved momenta

An important property of the Lagrangian is that conserved quantities can easily be read off from it. The generalized momentum "canonically conjugate to" the coordinate qi is defined by

$$p_i = \frac{\partial L}{\partial\dot{q}_i}.$$

If the Lagrangian L does not depend on some coordinate qi, it follows immediately from the Euler–Lagrange equations that

$$\dot{p}_i = \frac{d}{dt}\frac{\partial L}{\partial\dot{q}_i} = \frac{\partial L}{\partial q_i} = 0,$$

and integrating shows the corresponding generalized momentum equals a constant, a conserved quantity. This is a special case of Noether's theorem. Such coordinates are called "cyclic" or "ignorable".

For example, a system may have a Lagrangian

$$L(r, \theta, \dot{s}, \dot{z}, \dot{r}, \dot\theta, \dot\varphi, t),$$

where r and z are lengths along straight lines, s is an arc length along some curve, and θ and φ are angles. Notice z, s, and φ are all absent in the Lagrangian even though their velocities are not. Then the momenta

$$p_z = \frac{\partial L}{\partial\dot{z}}, \qquad p_s = \frac{\partial L}{\partial\dot{s}}, \qquad p_\varphi = \frac{\partial L}{\partial\dot\varphi}$$

are all conserved quantities. The units and nature of each generalized momentum will depend on the corresponding coordinate; in this case pz is a translational momentum in the z direction, ps is also a translational momentum along the curve on which s is measured, and pφ is an angular momentum in the plane in which the angle φ is measured. However complicated the motion of the system is, all the coordinates and velocities will vary in such a way that these momenta are conserved.
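Conservation of a momentum conjugate to a cyclic coordinate can be observed in a simulation. The following sketch assumes planar motion in an attractive inverse-distance potential V(r) = −K/r, for which the angle θ is cyclic; the constants, initial state, and the hand-written fourth-order Runge–Kutta integrator are illustrative choices, not something prescribed by the text.

```python
M, K = 1.0, 1.0  # illustrative mass and force constant for V(r) = -K / r


def derivatives(state):
    """Euler-Lagrange equations for L = (M/2)(r'^2 + r^2 theta'^2) + K/r,
    written as a first-order system in (r, theta, r', theta')."""
    r, theta, r_dot, theta_dot = state
    r_ddot = r * theta_dot**2 - K / (M * r**2)   # radial equation
    theta_ddot = -2.0 * r_dot * theta_dot / r    # from d/dt(M r^2 theta') = 0
    return (r_dot, theta_dot, r_ddot, theta_ddot)


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = derivatives(state)
    k2 = derivatives(shifted(state, k1, 0.5 * dt))
    k3 = derivatives(shifted(state, k2, 0.5 * dt))
    k4 = derivatives(shifted(state, k3, dt))
    return tuple(
        s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def p_theta(state):
    """Generalized momentum conjugate to theta: dL/d(theta') = M r^2 theta'."""
    r, _, _, theta_dot = state
    return M * r**2 * theta_dot


state = (1.0, 0.0, 0.0, 1.2)  # r, theta, r', theta' at t = 0
p_start = p_theta(state)
for _ in range(5000):         # integrate to t = 5
    state = rk4_step(state, 0.001)
p_end = p_theta(state)
print(p_start, p_end, state[0])
```

Both r and the angular velocity change substantially along this bound orbit, yet `p_end` stays equal to `p_start` to within the integration error.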

Energy

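Given a Lagrangian $L$, the Hamiltonian of the corresponding mechanical system is, by definition,

$$H = \left(\sum_{i=1}^{n}\dot{q}_i\frac{\partial L}{\partial\dot{q}_i}\right) - L.$$

This quantity will be equivalent to energy if the generalized coordinates are natural coordinates, i.e., they have no explicit time dependence when expressing the position vector: $\mathbf{r} = \mathbf{r}(q_1, \ldots, q_n)$. From (for a potential V that does not depend on the velocities):

$$T = \frac{m}{2}v^2 = \frac{m}{2}\sum_{i,j}\left(\frac{\partial\mathbf{r}}{\partial q_i}\dot{q}_i\right)\cdot\left(\frac{\partial\mathbf{r}}{\partial q_j}\dot{q}_j\right) = \frac{m}{2}\sum_{i,j}a_{ij}\,\dot{q}_i\dot{q}_j,$$

$$\sum_{k=1}^{n}\dot{q}_k\frac{\partial L}{\partial\dot{q}_k} = \sum_{k=1}^{n}\dot{q}_k\frac{\partial T}{\partial\dot{q}_k} = \frac{m}{2}\left(2\sum_{i,j}a_{ij}\,\dot{q}_i\dot{q}_j\right) = 2T,$$

$$H = 2T - (T - V) = T + V = E,$$

where $a_{ij} = \frac{\partial\mathbf{r}}{\partial q_i}\cdot\frac{\partial\mathbf{r}}{\partial q_j}$ is a symmetric matrix that is defined for the derivation.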

Invariance under coordinate transformations

At every time instant t, the energy is invariant under configuration space coordinate changes q → Q, i.e. (using natural coordinates) Besides this result, the proof below shows that, under such change of coordinates, the derivatives change as coefficients of a linear form.

For a coordinate transformation Q = F(q), we have where is the tangent map of the vector space to the vector space and is the Jacobian. In the coordinates and the previous formula for has the form After differentiation involving the product rule, where

In vector notation,

On the other hand,

It was mentioned earlier that Lagrangians do not depend on the choice of configuration space coordinates, i.e. One implication of this is that and This demonstrates that, for each and is a well-defined linear form whose coefficients are contravariant 1-tensors. Applying both sides of the equation to and using the above formula for yields The invariance of the energy follows.

Conservation

In Lagrangian mechanics, the system is closed if and only if its Lagrangian does not explicitly depend on time. The energy conservation law states that the energy of a closed system is an integral of motion.

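More precisely, let q = q(t) be an extremal. (In other words, q satisfies the Euler–Lagrange equations). Taking the total time-derivative of L along this extremal and using the EL equations leads to

$$\begin{aligned}
\frac{dL}{dt} &= \sum_i\frac{\partial L}{\partial q_i}\dot{q}_i + \sum_i\frac{\partial L}{\partial\dot{q}_i}\ddot{q}_i + \frac{\partial L}{\partial t} \\
&= \sum_i\left[\frac{d}{dt}\left(\frac{\partial L}{\partial\dot{q}_i}\right)\dot{q}_i + \frac{\partial L}{\partial\dot{q}_i}\ddot{q}_i\right] + \frac{\partial L}{\partial t}
 = \frac{d}{dt}\left(\sum_i\frac{\partial L}{\partial\dot{q}_i}\dot{q}_i\right) + \frac{\partial L}{\partial t},
\end{aligned}$$

so that

$$\frac{dH}{dt} = \frac{d}{dt}\left(\sum_i\dot{q}_i\frac{\partial L}{\partial\dot{q}_i} - L\right) = -\frac{\partial L}{\partial t}.$$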

If the Lagrangian L does not explicitly depend on time, then ∂L/∂t = 0, so H does not vary as the system evolves in time. It is therefore an integral of motion, meaning that

$$H(\mathbf{q}(t), \dot{\mathbf{q}}(t), t) = \text{constant}.$$

Hence, if the chosen coordinates are natural coordinates, the energy is conserved.
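A one-dimensional harmonic oscillator gives a quick check of this statement. The system (mass $m$, spring constant $k$, coordinate $q$) is an assumption chosen for illustration.

```latex
\begin{aligned}
L &= \tfrac{1}{2} m\dot q^2 - \tfrac{1}{2} k q^2, \qquad
\frac{\partial L}{\partial t} = 0, \\
H &= \dot q\,\frac{\partial L}{\partial\dot q} - L
   = m\dot q^2 - \left(\tfrac{1}{2} m\dot q^2 - \tfrac{1}{2} k q^2\right)
   = \tfrac{1}{2} m\dot q^2 + \tfrac{1}{2} k q^2 = T + V, \\
\frac{dH}{dt} &= \dot q\left(m\ddot q + k q\right) = 0
\quad\text{since the Euler--Lagrange equation is } m\ddot q = -k q .
\end{aligned}
```

Here $q$ is a natural coordinate, so $H$ coincides with the total energy, and its constancy follows directly from the equation of motion.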

Kinetic and potential energies

Under all these circumstances, the constant

$$E = T + V$$

is the total energy of the system. The kinetic and potential energies still change as the system evolves, but the motion of the system will be such that their sum, the total energy, is constant. This is a valuable simplification, since the energy E is a constant of integration that counts as an arbitrary constant for the problem, and it may be possible to integrate the velocities from this energy relation to solve for the coordinates.

Mechanical similarity

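If the potential energy is a homogeneous function of the coordinates and independent of time, and all position vectors are scaled by the same nonzero constant α, rk′ = αrk, so that

$$V(\alpha\mathbf{r}_1, \alpha\mathbf{r}_2, \ldots, \alpha\mathbf{r}_N) = \alpha^{\nu}\,V(\mathbf{r}_1, \mathbf{r}_2, \ldots, \mathbf{r}_N),$$

where $\nu$ denotes the degree of homogeneity, and time is scaled by a factor β, t′ = βt, then the velocities vk are scaled by a factor of α/β and the kinetic energy T by (α/β)². The entire Lagrangian has been scaled by the same factor if

$$\frac{\alpha^2}{\beta^2} = \alpha^{\nu} \quad\Rightarrow\quad \beta = \alpha^{1 - \nu/2}.$$

Since the lengths and times have been scaled, the trajectories of the particles in the system follow geometrically similar paths differing in size. The length l traversed in time t in the original trajectory corresponds to a new length l′ traversed in time t′ in the new trajectory, given by the ratios

$$\frac{t'}{t} = \left(\frac{l'}{l}\right)^{1 - \nu/2}.$$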
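Three familiar potentials illustrate the scaling law. In this sketch the symbol $\nu$ stands for the degree of homogeneity of the potential, i.e. $V(\alpha\mathbf{r}) = \alpha^{\nu}V(\mathbf{r})$, so that times scale with lengths as $t'/t = (l'/l)^{1-\nu/2}$; the choice of symbol and of examples is an assumption made for illustration.

```latex
\begin{aligned}
\text{Newtonian gravity, } V \propto r^{-1}\ (\nu = -1): &\quad
\frac{t'}{t} = \left(\frac{l'}{l}\right)^{3/2}, \\
\text{harmonic oscillator, } V \propto r^{2}\ (\nu = 2): &\quad
\frac{t'}{t} = 1, \\
\text{uniform field, } V \propto z\ (\nu = 1): &\quad
\frac{t'}{t} = \left(\frac{l'}{l}\right)^{1/2}.
\end{aligned}
```

The first line is Kepler's third law (the square of the orbital period scales as the cube of the orbit size), the second states that the oscillation period does not depend on the amplitude, and the third that the time of fall grows as the square root of the height.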

Interacting particles

For a given system, if two subsystems A and B are non-interacting, the Lagrangian L of the overall system is the sum of the Lagrangians LA and LB for the subsystems:

$$L = L_A + L_B.$$

If they do interact this is not possible. In some situations, it may be possible to separate the Lagrangian of the system L into the sum of non-interacting Lagrangians, plus another Lagrangian LAB containing information about the interaction,

$$L = L_A + L_B + L_{AB}.$$

This may be physically motivated by taking the non-interacting Lagrangians to be kinetic energies only, while the interaction Lagrangian is the system's total potential energy. Also, in the limiting case of negligible interaction, LAB tends to zero reducing to the non-interacting case above.

The extension to more than two non-interacting subsystems is straightforward – the overall Lagrangian is the sum of the separate Lagrangians for each subsystem. If there are interactions, then interaction Lagrangians may be added.

Consequences of singular Lagrangians

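From the Euler–Lagrange equations, it follows that:

$$\begin{aligned}
\frac{\mathrm{d}}{\mathrm{d}t}\frac{\partial L}{\partial \dot{q}_i} - \frac{\partial L}{\partial q_i} &= 0 \\
\sum_j \frac{\partial^2 L}{\partial q_j\,\partial \dot{q}_i}\,\dot{q}_j + \sum_j \frac{\partial^2 L}{\partial \dot{q}_j\,\partial \dot{q}_i}\,\ddot{q}_j + \frac{\partial^2 L}{\partial t\,\partial \dot{q}_i} - \frac{\partial L}{\partial q_i} &= 0 \\
\sum_j W_{ij}(q, \dot{q}, t)\,\ddot{q}_j &= \frac{\partial L}{\partial q_i} - \frac{\partial^2 L}{\partial t\,\partial \dot{q}_i} - \sum_j \frac{\partial^2 L}{\partial q_j\,\partial \dot{q}_i}\,\dot{q}_j
\end{aligned}$$

where the matrix W is defined as $W_{ij} = \partial^2 L / \partial \dot{q}_i\,\partial \dot{q}_j$. If W is non-singular, the above equations can be solved to represent $\ddot{q}$ as a function of $(\dot{q}, q, t)$. If W is non-invertible, not only is it impossible to represent all the $\ddot{q}$'s as functions of $(\dot{q}, q, t)$, but the Hamiltonian equations of motion also do not take the standard form.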
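A small illustrative case, chosen here as an assumption rather than taken from the text, is a Lagrangian in two coordinates that depends only on the difference of the velocities. Writing W for the matrix of second derivatives of L with respect to the velocities:

```latex
\begin{aligned}
L &= \tfrac{1}{2}\left(\dot{q}_1 - \dot{q}_2\right)^2, \qquad
W_{ij} = \frac{\partial^2 L}{\partial \dot{q}_i\,\partial \dot{q}_j}
  = \begin{pmatrix} 1 & -1 \\ -1 & 1 \end{pmatrix}, \qquad
\det W = 0, \\
p_1 &= \frac{\partial L}{\partial \dot{q}_1} = \dot{q}_1 - \dot{q}_2, \qquad
p_2 = \frac{\partial L}{\partial \dot{q}_2} = -\left(\dot{q}_1 - \dot{q}_2\right)
\quad\Longrightarrow\quad p_1 + p_2 = 0 .
\end{aligned}
```

Both Euler–Lagrange equations reduce to the single condition $\ddot{q}_1 - \ddot{q}_2 = 0$, so the individual accelerations are not determined, and the momenta obey a constraint that prevents the velocities from being written as functions of the momenta.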

Examples

The following examples apply Lagrange's equations of the second kind to mechanical problems.

Conservative force

A particle of mass m moves under the influence of a conservative force derived from the gradient ∇ of a scalar potential,

$$\mathbf{F} = -\nabla V(\mathbf{r}).$$

If there are more particles, in accordance with the above results, the total kinetic energy is a sum over all the particle kinetic energies, and the potential is a function of all the coordinates.

Cartesian coordinates

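The Lagrangian of the particle can be written

$$L(x, y, z, \dot{x}, \dot{y}, \dot{z}) = \frac{1}{2} m \left(\dot{x}^2 + \dot{y}^2 + \dot{z}^2\right) - V(x, y, z).$$

The equations of motion for the particle are found by applying the Euler–Lagrange equation, for the x coordinate

$$\frac{\mathrm{d}}{\mathrm{d}t}\left(\frac{\partial L}{\partial \dot{x}}\right) = \frac{\partial L}{\partial x},$$

with derivatives

$$\frac{\partial L}{\partial x} = -\frac{\partial V}{\partial x}, \quad \frac{\partial L}{\partial \dot{x}} = m\dot{x}, \quad \frac{\mathrm{d}}{\mathrm{d}t}\left(\frac{\partial L}{\partial \dot{x}}\right) = m\ddot{x},$$

hence

$$m\ddot{x} = -\frac{\partial V}{\partial x},$$

and similarly for the y and z coordinates. Collecting the equations in vector form we find

$$m\ddot{\mathbf{r}} = -\nabla V,$$

which is Newton's second law of motion for a particle subject to a conservative force.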

Polar coordinates in 2D and 3D

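Using the spherical coordinates (r, θ, φ) as commonly used in physics (ISO 80000-2:2019 convention), where r is the radial distance to the origin, θ is the polar angle (also known as colatitude, zenith angle, normal angle, or inclination angle), and φ is the azimuthal angle, the Lagrangian for a central potential is

$$L = \frac{m}{2}\left(\dot{r}^2 + r^2\dot{\theta}^2 + r^2\sin^2\theta\,\dot{\varphi}^2\right) - V(r).$$

So, in spherical coordinates, the Euler–Lagrange equations are

$$m\ddot{r} - mr\left(\dot{\theta}^2 + \sin^2\theta\,\dot{\varphi}^2\right) + \frac{\mathrm{d}V}{\mathrm{d}r} = 0,$$

$$\frac{\mathrm{d}}{\mathrm{d}t}\left(mr^2\dot{\theta}\right) - mr^2\sin\theta\cos\theta\,\dot{\varphi}^2 = 0,$$

$$\frac{\mathrm{d}}{\mathrm{d}t}\left(mr^2\sin^2\theta\,\dot{\varphi}\right) = 0.$$

The φ coordinate is cyclic since it does not appear in the Lagrangian, so the conserved momentum in the system is the angular momentum

$$p_\varphi = \frac{\partial L}{\partial \dot{\varphi}} = mr^2\sin^2\theta\,\dot{\varphi},$$

in which r, θ and dφ/dt can all vary with time, but only in such a way that pφ is constant.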

The Lagrangian in two-dimensional polar coordinates is recovered by fixing θ to the constant value π/2.

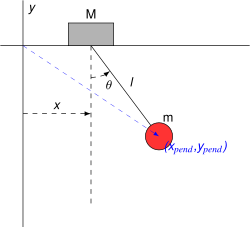

Pendulum on a movable support

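Consider a pendulum of mass m and length ℓ, which is attached to a support with mass M, which can move along a line in the x-direction. Let x be the coordinate along the line of the support, and let us denote the position of the pendulum by the angle θ from the vertical. The coordinates and velocity components of the pendulum bob are

$$\begin{aligned}
x_\mathrm{pend} &= x + \ell\sin\theta, & \dot{x}_\mathrm{pend} &= \dot{x} + \ell\dot{\theta}\cos\theta, \\
y_\mathrm{pend} &= -\ell\cos\theta, & \dot{y}_\mathrm{pend} &= \ell\dot{\theta}\sin\theta.
\end{aligned}$$

The generalized coordinates can be taken to be x and θ. The kinetic energy of the system is then

$$T = \frac{1}{2}M\dot{x}^2 + \frac{1}{2}m\left(\dot{x}_\mathrm{pend}^2 + \dot{y}_\mathrm{pend}^2\right)$$

and the potential energy is

$$V = mg\,y_\mathrm{pend},$$

giving the Lagrangian

$$\begin{aligned}
L &= T - V \\
&= \frac{1}{2}M\dot{x}^2 + \frac{1}{2}m\left[\left(\dot{x} + \ell\dot{\theta}\cos\theta\right)^2 + \left(\ell\dot{\theta}\sin\theta\right)^2\right] + mg\ell\cos\theta \\
&= \frac{1}{2}\left(M + m\right)\dot{x}^2 + m\dot{x}\ell\dot{\theta}\cos\theta + \frac{1}{2}m\ell^2\dot{\theta}^2 + mg\ell\cos\theta.
\end{aligned}$$

Since x is absent from the Lagrangian, it is a cyclic coordinate. The conserved momentum is

$$p_x = \frac{\partial L}{\partial \dot{x}} = (M + m)\dot{x} + m\ell\dot{\theta}\cos\theta,$$

and the Lagrange equation for the support coordinate x is

$$(M + m)\ddot{x} + m\ell\ddot{\theta}\cos\theta - m\ell\dot{\theta}^2\sin\theta = 0.$$

The Lagrange equation for the angle θ is

$$\frac{\mathrm{d}}{\mathrm{d}t}\left[m\left(\dot{x}\ell\cos\theta + \ell^2\dot{\theta}\right)\right] + m\ell\left(\dot{x}\dot{\theta} + g\right)\sin\theta = 0,$$

and simplifying

$$\ddot{\theta} + \frac{\ddot{x}}{\ell}\cos\theta + \frac{g}{\ell}\sin\theta = 0.$$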

These equations may look quite complicated, but finding them with Newton's laws would have required carefully identifying all forces, which would have been much more laborious and prone to errors. By considering limit cases, the correctness of this system can be verified: for example, $\ddot{x} \to 0$ should give the equations of motion for a simple pendulum that is at rest in some inertial frame, while $\ddot{x} \to \text{constant} \neq 0$ should give the equations for a pendulum in a constantly accelerating system, etc. Furthermore, it is straightforward to obtain the results numerically, given suitable starting conditions and a chosen time step, by stepping through the results iteratively.
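The iterative stepping mentioned above can be sketched as follows. This is one possible approach, not a prescribed method: it assumes the two Lagrange equations (M + m)ẍ + mℓθ̈ cos θ − mℓθ̇² sin θ = 0 and θ̈ + (ẍ/ℓ) cos θ + (g/ℓ) sin θ = 0, solves them algebraically for the accelerations, and advances the state with a classical fourth-order Runge–Kutta step. The parameter values, function names and initial conditions are hypothetical.

```python
import math


def accelerations(theta, theta_dot, M=1.0, m=0.5, ell=1.0, g=9.81):
    """Solve the two Lagrange equations for (x_ddot, theta_ddot)."""
    s, c = math.sin(theta), math.cos(theta)
    x_ddot = m * s * (g * c + ell * theta_dot**2) / (M + m * s**2)
    theta_ddot = -(x_ddot * c + g * s) / ell
    return x_ddot, theta_ddot


def derivative(state, **params):
    """Time derivative of the state (x, theta, x_dot, theta_dot)."""
    _, theta, x_dot, theta_dot = state
    x_ddot, theta_ddot = accelerations(theta, theta_dot, **params)
    return (x_dot, theta_dot, x_ddot, theta_ddot)


def rk4_step(state, dt, **params):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    def shifted(base, slope, h):
        return tuple(b + h * k for b, k in zip(base, slope))

    k1 = derivative(state, **params)
    k2 = derivative(shifted(state, k1, dt / 2), **params)
    k3 = derivative(shifted(state, k2, dt / 2), **params)
    k4 = derivative(shifted(state, k3, dt), **params)
    return tuple(
        y + dt / 6 * (a + 2 * b + 2 * c + d)
        for y, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def momentum_x(state, M=1.0, m=0.5, ell=1.0):
    """Momentum conjugate to the cyclic coordinate x (should stay constant)."""
    _, theta, x_dot, theta_dot = state
    return (M + m) * x_dot + m * ell * theta_dot * math.cos(theta)


def simulate(state, dt=1e-3, steps=2000, **params):
    """Step the system forward and return the final state."""
    for _ in range(steps):
        state = rk4_step(state, dt, **params)
    return state


# Hypothetical start: support at rest, bob released from 0.5 rad.
initial = (0.0, 0.5, 0.0, 0.0)
final = simulate(initial)
```

Comparing `momentum_x(initial)` with `momentum_x(final)` gives a quick check on the integration, since the momentum conjugate to x should be conserved up to numerical error.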

Two-body central force problem

Two bodies of masses m1 and m2 with position vectors r1 and r2 are in orbit about each other due to an attractive central potential V. We may write down the Lagrangian in terms of the position coordinates as they are, but it is an established procedure to convert the two-body problem into a one-body problem as follows. Introduce the Jacobi coordinates: the separation of the bodies r = r2 − r1 and the location of the center of mass R = (m1r1 + m2r2)/(m1 + m2). The Lagrangian is then

$$L = \underbrace{\frac{1}{2}M\dot{\mathbf{R}}^2}_{L_\text{cm}} + \underbrace{\frac{1}{2}\mu\dot{\mathbf{r}}^2 - V(|\mathbf{r}|)}_{L_\text{rel}},$$

where M = m1 + m2 is the total mass, μ = m1m2/(m1 + m2) is the reduced mass, and V the potential of the radial force, which depends only on the magnitude of the separation |r| = |r2 − r1|. The Lagrangian splits into a center-of-mass term Lcm and a relative motion term Lrel.

The Euler–Lagrange equation for R is simply

$$M\ddot{\mathbf{R}} = 0,$$

which states the center of mass moves in a straight line at constant velocity.

Since the relative motion only depends on the magnitude of the separation, it is ideal to use polar coordinates (r, θ) and take r = |r|,

$$L_\text{rel} = \frac{1}{2}\mu\left(\dot{r}^2 + r^2\dot{\theta}^2\right) - V(r),$$

so θ is a cyclic coordinate with the corresponding conserved (angular) momentum

$$p_\theta = \frac{\partial L_\text{rel}}{\partial \dot{\theta}} = \mu r^2\dot{\theta} = \ell.$$

The radial coordinate r and angular velocity dθ/dt can vary with time, but only in such a way that ℓ is constant. The Lagrange equation for r is

$$\mu r\dot{\theta}^2 - \frac{\mathrm{d}V}{\mathrm{d}r} = \mu\ddot{r}.$$

This equation is identical to the radial equation obtained using Newton's laws in a co-rotating reference frame, that is, a frame rotating with the reduced mass so it appears stationary. Eliminating the angular velocity dθ/dt from this radial equation gives

$$\mu\ddot{r} = -\frac{\mathrm{d}V}{\mathrm{d}r} + \frac{\ell^2}{\mu r^3},$$

which is the equation of motion for a one-dimensional problem in which a particle of mass μ is subjected to the inward central force −dV/dr and a second outward force, called in this context the (Lagrangian) centrifugal force (see Centrifugal force § Other uses of the term):

$$F_\mathrm{cf} = \mu r\dot{\theta}^2 = \frac{\ell^2}{\mu r^3}.$$

Of course, if one remains entirely within the one-dimensional formulation, ℓ enters only as some imposed parameter of the external outward force, and its interpretation as angular momentum depends upon the more general two-dimensional problem from which the one-dimensional problem originated.
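The one-dimensional picture can be explored numerically. The sketch below assumes the radial equation in the form μ r̈ = −dV/dr + ℓ²/(μr³) and, purely as an example, an attractive potential V(r) = −k/r. The constant k, the numerical values and the function names are hypothetical.

```python
def radial_acceleration(r, ell, mu, dV_dr):
    """r_ddot from mu * r_ddot = -dV/dr + ell**2 / (mu * r**3)."""
    central = -dV_dr(r)                 # inward force for an attractive potential
    centrifugal = ell**2 / (mu * r**3)  # outward (Lagrangian) centrifugal term
    return (central + centrifugal) / mu


# Hypothetical example: V(r) = -k / r, so dV/dr = k / r**2.
k, mu, ell = 2.0, 0.5, 1.5


def kepler_dV_dr(r):
    """Derivative of the assumed potential V(r) = -k / r."""
    return k / r**2


# The two terms balance on a circular orbit of radius ell**2 / (mu * k).
r_circular = ell**2 / (mu * k)
```

Inside `r_circular` the centrifugal term dominates and the radial acceleration is outward; outside it the central force dominates, which is why ℓ acts as a fixed parameter of an effective one-dimensional force.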

If one arrives at this equation using Newtonian mechanics in a co-rotating frame, the interpretation is evident as the centrifugal force in that frame due to the rotation of the frame itself. If one arrives at this equation directly by using the generalized coordinates (r, θ) and simply following the Lagrangian formulation without thinking about frames at all, the interpretation is that the centrifugal force is an outgrowth of using polar coordinates. As Hildebrand says:

"Since such quantities are not true physical forces, they are often called inertia forces. Their presence or absence depends, not upon the particular problem at hand, but upon the coordinate system chosen." In particular, if Cartesian coordinates are chosen, the centrifugal force disappears, and the formulation involves only the central force itself, which provides the centripetal force for a curved motion.

This viewpoint, that fictitious forces originate in the choice of coordinates, is often expressed by users of the Lagrangian method. This view arises naturally in the Lagrangian approach, because the frame of reference is (possibly unconsciously) selected by the choice of coordinates. Comparisons of Lagrangians in an inertial and in a noninertial frame of reference, and discussions of "total" and "updated" Lagrangian formulations, illustrate the point. Unfortunately, this usage of "inertial force" conflicts with the Newtonian idea of an inertial force. In the Newtonian view, an inertial force originates in the acceleration of the frame of observation (the fact that it is not an inertial frame of reference), not in the choice of coordinate system. To keep matters clear, it is safest to refer to the Lagrangian inertial forces as generalized inertial forces, to distinguish them from the Newtonian vector inertial forces. That is, one should avoid following Hildebrand when he says (p. 155) "we deal always with generalized forces, velocities accelerations, and momenta. For brevity, the adjective "generalized" will be omitted frequently."

It is known that the Lagrangian of a system is not unique. Within the Lagrangian formalism the Newtonian fictitious forces can be identified by the existence of alternative Lagrangians in which the fictitious forces disappear, sometimes found by exploiting the symmetry of the system.

Extensions to include non-conservative forces

Dissipative forces

Dissipation (i.e. non-conservative systems) can also be treated with an effective Lagrangian formulated by a certain doubling of the degrees of freedom.

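In a more general formulation, the forces could be both conservative and viscous. If an appropriate transformation can be found from the Fi, Rayleigh suggests using a dissipation function, D, of the following form:

$$D = \frac{1}{2}\sum_{j}\sum_{k} C_{jk}\,\dot{q}_j\dot{q}_k,$$

where Cjk are constants that are related to the damping coefficients in the physical system, though not necessarily equal to them. If D is defined this way, then

$$Q_j = -\frac{\partial V}{\partial q_j} - \frac{\partial D}{\partial \dot{q}_j}$$

and

$$\frac{\mathrm{d}}{\mathrm{d}t}\left(\frac{\partial L}{\partial \dot{q}_j}\right) - \frac{\partial L}{\partial q_j} + \frac{\partial D}{\partial \dot{q}_j} = 0.$$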
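As a worked illustration, consider a damped harmonic oscillator in one coordinate x, assuming the modified Lagrange equation takes the form d/dt(∂L/∂ẋ) − ∂L/∂x + ∂D/∂ẋ = 0. The mass m, spring constant k and damping coefficient c are symbols introduced for this example.

```latex
\begin{aligned}
L &= \tfrac{1}{2} m \dot{x}^2 - \tfrac{1}{2} k x^2, \qquad
D = \tfrac{1}{2} c\, \dot{x}^2, \\
\frac{\mathrm{d}}{\mathrm{d}t}\frac{\partial L}{\partial \dot{x}}
 - \frac{\partial L}{\partial x}
 + \frac{\partial D}{\partial \dot{x}}
 &= m\ddot{x} + k x + c\,\dot{x} = 0 .
\end{aligned}
```

The dissipation function contributes the familiar viscous term c ẋ, and setting c = 0 recovers the undamped oscillator.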

Electromagnetism

A test particle is a particle whose mass and charge are assumed to be so small that its effect on the external system is insignificant. It is often a hypothetical simplified point particle with no properties other than mass and charge. Real particles like electrons and up quarks are more complex and have additional terms in their Lagrangians. Not only can the fields form non-conservative potentials, but these potentials can also be velocity-dependent.

The Lagrangian for a charged particle with electrical charge q, interacting with an electromagnetic field, is the prototypical example of a velocity-dependent potential. The electric scalar potential ϕ = ϕ(r, t) and magnetic vector potential A = A(r, t) are defined from the electric field E = E(r, t) and magnetic field B = B(r, t) as follows:

$$\mathbf{E} = -\nabla\phi - \frac{\partial \mathbf{A}}{\partial t}, \qquad \mathbf{B} = \nabla \times \mathbf{A}.$$

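The Lagrangian of a massive charged test particle in an electromagnetic field,

$$L = \frac{1}{2}m\dot{\mathbf{r}}^2 + q\,\dot{\mathbf{r}} \cdot \mathbf{A} - q\phi,$$

is called minimal coupling. This is a good example of when the common rule of thumb that the Lagrangian is the kinetic energy minus the potential energy is incorrect. Combined with the Euler–Lagrange equation, it produces the Lorentz force law

$$m\ddot{\mathbf{r}} = q\mathbf{E} + q\,\dot{\mathbf{r}} \times \mathbf{B}.$$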

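Under the gauge transformation

$$\mathbf{A} \rightarrow \mathbf{A} + \nabla f, \qquad \phi \rightarrow \phi - \frac{\partial f}{\partial t},$$

where f(r, t) is any scalar function of space and time, the aforementioned Lagrangian transforms as

$$L \rightarrow L + q\left(\dot{\mathbf{r}} \cdot \nabla + \frac{\partial}{\partial t}\right) f = L + q\frac{\mathrm{d}f}{\mathrm{d}t},$$

which still produces the same Lorentz force law.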

Note that the canonical momentum (conjugate to position r) is the kinetic momentum plus a contribution from the A field (known as the potential momentum):

$$\mathbf{p} = \frac{\partial L}{\partial \dot{\mathbf{r}}} = m\dot{\mathbf{r}} + q\mathbf{A}.$$

This relation is also used in the minimal coupling prescription in quantum mechanics and quantum field theory. From this expression, we can see that the canonical momentum p is not gauge invariant, and therefore not a measurable physical quantity. However, if r is cyclic (i.e. the Lagrangian is independent of position r), which happens if the ϕ and A fields are uniform, then the canonical momentum p given here is the conserved momentum, while the measurable physical kinetic momentum mv is not.
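A simple case showing the distinction is a uniform electric field, assuming the minimal-coupling Lagrangian L = ½mṙ² + q ṙ·A − qϕ and a gauge chosen for this example in which ϕ = 0 and A = −E₀t, with E₀ a constant vector introduced here for illustration.

```latex
\begin{aligned}
\mathbf{E} &= -\nabla\phi - \frac{\partial \mathbf{A}}{\partial t} = \mathbf{E}_0, \qquad
\mathbf{B} = \nabla \times \mathbf{A} = \mathbf{0}, \\
L &= \tfrac{1}{2} m \dot{\mathbf{r}}^2 - q\,t\,\dot{\mathbf{r}} \cdot \mathbf{E}_0, \qquad
\mathbf{p} = \frac{\partial L}{\partial \dot{\mathbf{r}}} = m\dot{\mathbf{r}} - q\mathbf{E}_0 t, \\
\frac{\mathrm{d}\mathbf{p}}{\mathrm{d}t} &= \frac{\partial L}{\partial \mathbf{r}} = \mathbf{0}
\quad\Longrightarrow\quad
m\ddot{\mathbf{r}} = q\mathbf{E}_0 .
\end{aligned}
```

Here r is cyclic, so the canonical momentum p is constant, while the kinetic momentum mṙ = p + qE₀t grows linearly in time, in agreement with the Lorentz force law.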

Other contexts and formulations

The ideas in Lagrangian mechanics have numerous applications in other areas of physics, and can adopt generalized results from the calculus of variations.

Alternative formulations of classical mechanics

A closely related formulation of classical mechanics is Hamiltonian mechanics. The Hamiltonian is defined by

$$H = \sum_{i} \dot{q}_i \frac{\partial L}{\partial \dot{q}_i} - L$$

and can be obtained by performing a Legendre transformation on the Lagrangian, which introduces new variables canonically conjugate to the original variables. For example, given a set of generalized coordinates, the variables canonically conjugate are the generalized momenta. This doubles the number of variables, but makes the differential equations first order. The Hamiltonian is a particularly ubiquitous quantity in quantum mechanics (see Hamiltonian (quantum mechanics)).
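As a sketch of the Legendre transformation in the simplest setting, take a single coordinate q with a Lagrangian of the assumed form L = ½mq̇² − V(q):

```latex
\begin{aligned}
p &= \frac{\partial L}{\partial \dot{q}} = m\dot{q}, \qquad
H = p\,\dot{q} - L = \frac{p^2}{2m} + V(q), \\
\dot{q} &= \frac{\partial H}{\partial p} = \frac{p}{m}, \qquad
\dot{p} = -\frac{\partial H}{\partial q} = -\frac{\mathrm{d}V}{\mathrm{d}q} .
\end{aligned}
```

The single second-order equation m q̈ = −dV/dq is replaced by two first-order equations in the pair (q, p).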

Routhian mechanics is a hybrid formulation of Lagrangian and Hamiltonian mechanics, which is not often used in practice but is an efficient formulation for cyclic coordinates.

Momentum space formulation

The Euler–Lagrange equations can also be formulated in terms of the generalized momenta rather than generalized coordinates. Performing a Legendre transformation on the generalized coordinate Lagrangian L(q, dq/dt, t) yields the generalized momenta Lagrangian L′(p, dp/dt, t) in terms of the original Lagrangian, as well as the EL equations in terms of the generalized momenta. Both Lagrangians contain the same information, and either can be used to solve for the motion of the system. In practice generalized coordinates are more convenient to use and interpret than generalized momenta.

Higher derivatives of generalized coordinates

There is no mathematical reason to restrict the derivatives of generalized coordinates to first order only. It is possible to derive modified EL equations for a Lagrangian containing higher order derivatives; see Euler–Lagrange equation for details. However, from the physical point of view there is an obstacle to including time derivatives higher than the first order, which is implied by Ostrogradsky's construction of a canonical formalism for nondegenerate higher derivative Lagrangians; see Ostrogradsky instability.
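For orientation, the modified equation for the lowest such case, a Lagrangian L(q, q̇, q̈, t) depending on one coordinate and its first two time derivatives, follows from the same variational argument with one additional integration by parts (assuming the variations of q and q̇ vanish at the endpoints):

```latex
\frac{\partial L}{\partial q}
 - \frac{\mathrm{d}}{\mathrm{d}t}\frac{\partial L}{\partial \dot{q}}
 + \frac{\mathrm{d}^2}{\mathrm{d}t^2}\frac{\partial L}{\partial \ddot{q}} = 0 .
```

When L does not depend on q̈, the last term drops out and the usual Euler–Lagrange equation is recovered.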

Optics

Lagrangian mechanics can be applied to geometrical optics, by applying variational principles to rays of light in a medium, and solving the EL equations gives the equations of the paths the light rays follow.
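A numerical sketch of the variational idea for light rays: for a ray crossing a flat interface between two uniform media, making the optical path length stationary over the crossing point should reproduce Snell's law of refraction. The geometry, the refractive indices and the function names below are assumptions made for this illustration, and the minimum is found by a plain ternary search rather than by solving the EL equations.

```python
import math


def optical_path(x, a, b, d, n1, n2):
    """Optical path length from (0, a) to (d, -b), crossing y = 0 at x."""
    return n1 * math.hypot(x, a) + n2 * math.hypot(d - x, b)


def stationary_crossing(a, b, d, n1, n2, iterations=200):
    """Find the minimizing crossing point by ternary search.

    The path length is a convex function of x, so it has a single minimum.
    """
    lo, hi = 0.0, d
    for _ in range(iterations):
        x1 = lo + (hi - lo) / 3
        x2 = hi - (hi - lo) / 3
        if optical_path(x1, a, b, d, n1, n2) < optical_path(x2, a, b, d, n1, n2):
            hi = x2
        else:
            lo = x1
    return (lo + hi) / 2


a, b, d = 1.0, 1.0, 2.0  # hypothetical geometry
n1, n2 = 1.0, 1.5        # hypothetical refractive indices
x = stationary_crossing(a, b, d, n1, n2)

# Sines of the angles of incidence and refraction, measured from the normal.
sin_incident = x / math.hypot(x, a)
sin_refracted = (d - x) / math.hypot(d - x, b)
```

At the stationary point, `n1 * sin_incident` and `n2 * sin_refracted` agree to within the accuracy of the search, which is Snell's law.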

Relativistic formulation

Lagrangian mechanics can be formulated in special relativity and general relativity. Some features of Lagrangian mechanics are retained in the relativistic theories, but difficulties quickly appear in other respects. In particular, the EL equations take the same form, and the connection between cyclic coordinates and conserved momenta still applies; however, the Lagrangian must be modified and is not simply the kinetic minus the potential energy of a particle. Also, it is not straightforward to handle multiparticle systems in a manifestly covariant way, although it may be possible if a particular frame of reference is singled out.
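The standard special-relativistic Lagrangian of a free particle of mass m and velocity v illustrates the modification (c denotes the speed of light):

```latex
\begin{aligned}
L &= -mc^2\sqrt{1 - \frac{v^2}{c^2}}, \qquad
\mathbf{p} = \frac{\partial L}{\partial \mathbf{v}}
  = \frac{m\mathbf{v}}{\sqrt{1 - v^2/c^2}}, \\
L &\approx -mc^2 + \tfrac{1}{2} m v^2 \quad \text{for } v \ll c .
\end{aligned}
```

This L is not the relativistic kinetic energy, yet its low-velocity limit differs from the non-relativistic kinetic energy only by the constant −mc², which does not affect the equations of motion.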

Quantum mechanics

In quantum mechanics, action and quantum-mechanical phase are related via the Planck constant, and the principle of stationary action can be understood in terms of constructive interference of wave functions.

In 1948, Feynman discovered the path integral formulation extending the principle of least action to quantum mechanics for electrons and photons. In this formulation, particles travel every possible path between the initial and final states; the probability of a specific final state is obtained by summing over all possible trajectories leading to it. In the classical regime, the path integral formulation cleanly reproduces Hamilton's principle, and Fermat's principle in optics.

Classical field theory

In Lagrangian mechanics, the generalized coordinates form a discrete set of variables that define the configuration of a system. In classical field theory, the physical system is not a set of discrete particles, but rather a continuous field ϕ(r, t) defined over a region of 3D space. Associated with the field is a Lagrangian density

$$\mathcal{L}\left(\phi, \nabla\phi, \frac{\partial \phi}{\partial t}, \mathbf{r}, t\right)$$

defined in terms of the field and its space and time derivatives at a location r and time t. Analogous to the particle case, for non-relativistic applications the Lagrangian density is also the kinetic energy density of the field, minus its potential energy density (this is not true in general, and the Lagrangian density has to be "reverse engineered"). The Lagrangian is then the volume integral of the Lagrangian density over 3D space,

$$L(t) = \int \mathcal{L}\,\mathrm{d}^3\mathbf{r},$$

where d³r is a 3D differential volume element. The Lagrangian is a function of time since the Lagrangian density has implicit space dependence via the fields, and may have explicit spatial dependence, but these are removed in the integral, leaving only time as the variable for the Lagrangian.

Noether's theorem

The action principle, and the Lagrangian formalism, are tied closely to Noether's theorem, which connects physical conserved quantities to continuous symmetries of a physical system.

If the Lagrangian is invariant under a symmetry, then the resulting equations of motion are also invariant under that symmetry. This characteristic is very helpful in showing that theories are consistent with either special relativity or general relativity.
