From Wikipedia, the free encyclopedia

Wave–particle duality is the concept in quantum mechanics that every particle or quantum entity may be described as either a particle or a wave. It expresses the inability of the classical concepts "particle" or "wave" to fully describe the behaviour of quantum-scale objects. As Albert Einstein wrote:

It seems as though we must use sometimes the one theory and sometimes the other, while at times we may use either. We are faced with a new kind of difficulty. We have two contradictory pictures of reality; separately neither of them fully explains the phenomena of light, but together they do.

Through the work of Max Planck, Albert Einstein, Louis de Broglie, Arthur Compton, Niels Bohr, and many others, current scientific theory holds that all particles exhibit a wave nature and vice versa.

This phenomenon has been verified not only for elementary particles but also for compound particles like atoms and even molecules. For macroscopic particles, because of their extremely short wavelengths, wave properties usually cannot be detected.

Although the use of wave–particle duality has worked well in physics, its meaning or interpretation has not been satisfactorily resolved; see Interpretations of quantum mechanics.

Bohr regarded the "duality paradox" as a fundamental or metaphysical fact of nature. A given kind of quantum object will exhibit sometimes wave and sometimes particle character, in respectively different physical settings. He saw such duality as one aspect of the concept of complementarity. Bohr regarded renunciation of the cause-effect relation, or complementarity, of the space-time picture, as essential to the quantum mechanical account.

Werner Heisenberg considered the question further. He saw the duality as present for all quantum entities, but not quite in the usual quantum mechanical account considered by Bohr. He saw it in what is called second quantization, which generates an entirely new concept of fields that exist in ordinary space-time, causality still being visualizable. Classical field values (e.g. the electric and magnetic field strengths of Maxwell) are replaced by an entirely new kind of field value, as considered in quantum field theory. Turning the reasoning around, ordinary quantum mechanics can be deduced as a specialized consequence of quantum field theory.

History

Classical particle and wave theories of light

Democritus (5th century BC) argued that all things in the universe, including light, are composed of indivisible sub-components. Euclid (4th–3rd century BC) wrote treatises on light propagation and stated the principle of the shortest trajectory of light, including for multiple reflections on mirrors, spherical ones among them, while Plutarch (1st–2nd century AD) described multiple reflections on spherical mirrors, discussing the creation of larger or smaller images, real or imaginary, including the case of chirality of the images. At the beginning of the 11th century, the Arabic scientist Ibn al-Haytham wrote the first comprehensive Book of Optics, describing reflection, refraction, and the operation of a pinhole lens via rays of light traveling from the point of emission to the eye. He asserted that these rays were composed of particles of light. In 1630, René Descartes popularized and accredited the opposing wave description in his treatise on light, The World, showing that the behavior of light could be re-created by modeling wave-like disturbances in a universal medium, the luminiferous aether. Beginning in 1670 and progressing over three decades, Isaac Newton developed and championed his corpuscular theory, arguing that the perfectly straight lines of reflection demonstrated light's particle nature, since only particles could travel in such straight lines. He explained refraction by positing that particles of light accelerated laterally upon entering a denser medium. Around the same time, Newton's contemporaries Robert Hooke and Christiaan Huygens, and later Augustin-Jean Fresnel, mathematically refined the wave viewpoint, showing that if light traveled at different speeds in different media, refraction could be easily explained as the medium-dependent propagation of light waves. The resulting Huygens–Fresnel principle was extremely successful at reproducing light's behavior and was subsequently supported by Thomas Young's discovery of wave interference of light by his double-slit experiment in 1801. The wave view did not immediately displace the ray and particle view, but it began to dominate scientific thinking about light in the mid-19th century, since it could explain polarization phenomena that the alternatives could not.

James Clerk Maxwell discovered that he could apply his previously formulated equations, Maxwell's equations, along with a slight modification, to describe self-propagating waves of oscillating electric and magnetic fields. It quickly became apparent that visible light, ultraviolet light, and infrared light were all electromagnetic waves of differing frequency.

Interference of a quantum particle with itself.

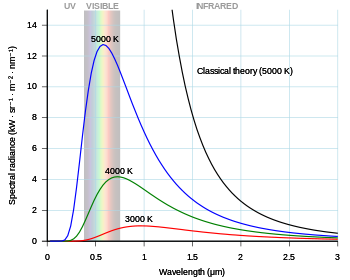

Black-body radiation and Planck's law

In 1901, Max Planck published an analysis that succeeded in reproducing the observed spectrum of light emitted by a glowing object. To accomplish this, Planck had to make a mathematical assumption of quantized energy of the oscillators, i.e. the atoms of the black body that emit radiation. Einstein later proposed that electromagnetic radiation itself is quantized, not the energy of radiating atoms.

Black-body radiation, the emission of electromagnetic energy due to an object's heat, could not be explained from classical arguments alone. The equipartition theorem of classical mechanics, the basis of all classical thermodynamic theories, stated that an object's energy is partitioned equally among the object's vibrational modes.

But applying the same reasoning to the electromagnetic emission of such a thermal object was not so successful. That thermal objects emit light had been long known. Since light was known to be waves of electromagnetism, physicists hoped to describe this emission via classical laws. This became known as the black body problem. Since the equipartition theorem worked so well in describing the vibrational modes of the thermal object itself, it was natural to assume that it would perform equally well in describing the radiative emission of such objects. But a problem quickly arose: if each mode received an equal partition of energy, the short-wavelength modes would consume all the energy. This became clear when plotting the Rayleigh–Jeans law, which, while correctly predicting the intensity of long-wavelength emissions, predicted infinite total energy as the intensity diverges to infinity for short wavelengths. This became known as the ultraviolet catastrophe.
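The divergence can be made concrete. The sketch below assumes the standard Rayleigh–Jeans form of the spectral energy density, u(f) = 8πf²kT/c³ (every mode carries kT), and an arbitrary illustrative temperature; the function names are hypothetical. Because the energy integrated up to a cutoff frequency grows as the cube of that cutoff, raising the cutoff never converges to a finite total.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
C = 2.99792458e8     # speed of light in vacuum, m/s


def rj_spectral_density(f: float, temperature: float) -> float:
    """Rayleigh-Jeans energy density per unit frequency, J/(m^3 Hz).

    The mode density 8*pi*f^2/c^3 is multiplied by the equipartition
    energy k*T, so the result grows without limit as f increases.
    """
    return 8 * math.pi * f**2 * K_B * temperature / C**3


def rj_energy_below(f_max: float, temperature: float) -> float:
    """Closed-form integral of rj_spectral_density from 0 to f_max, J/m^3."""
    return 8 * math.pi * K_B * temperature * f_max**3 / (3 * C**3)


if __name__ == "__main__":
    T = 5000.0  # illustrative temperature in kelvin (an assumption)
    for f_max in (1e14, 1e15, 1e16, 1e17):
        # Each tenfold increase of the cutoff multiplies the total by 1000.
        print(f"cutoff {f_max:.0e} Hz: {rj_energy_below(f_max, T):.3e} J/m^3")
```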

In 1900, Max Planck hypothesized that the frequency of light emitted by the black body depended on the frequency of the oscillator that emitted it, and that the energy of these oscillators increased linearly with frequency (according to E = hf, where h is Planck's constant and f is the frequency). This was not an unsound proposal, considering that macroscopic oscillators behave similarly: among five simple harmonic oscillators of equal amplitude but different frequency, the oscillator with the highest frequency possesses the highest energy (though this relationship is not linear like Planck's). By demanding that high-frequency light must be emitted by an oscillator of equal frequency, and further requiring that this oscillator occupy higher energy than one of a lesser frequency, Planck avoided any catastrophe: giving an equal partition to high-frequency oscillators produced successively fewer oscillators and less emitted light. And as in the Maxwell–Boltzmann distribution, the low-frequency, low-energy oscillators were suppressed by the onslaught of thermal jiggling from higher-energy oscillators, which necessarily increased their energy and frequency.
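Planck's assumption changes the average energy an oscillator carries. A minimal sketch, assuming the standard textbook result that oscillators restricted to whole quanta hf have mean thermal energy hf/(e^(hf/kT) − 1); the helper names and the temperature are illustrative. At low frequency this approaches the classical kT, while at high frequency it falls off exponentially, which is what removes the catastrophe.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def classical_mean_energy(temperature: float) -> float:
    """Equipartition: every oscillator carries k*T, whatever its frequency."""
    return K_B * temperature


def planck_mean_energy(f: float, temperature: float) -> float:
    """Mean energy of an oscillator that can only hold whole quanta h*f (f > 0).

    Computes h*f / (exp(x) - 1) with x = h*f/(k*T), rewritten as
    h*f * exp(-x) / (1 - exp(-x)) so that large x underflows to 0
    instead of overflowing; expm1 keeps precision for small x.
    """
    x = H * f / (K_B * temperature)
    return H * f * math.exp(-x) / -math.expm1(-x)


if __name__ == "__main__":
    T = 5000.0  # illustrative temperature, K
    for f in (1e9, 1e13, 1e14, 1e15, 1e16):
        ratio = planck_mean_energy(f, T) / classical_mean_energy(T)
        print(f"f = {f:.0e} Hz: Planck/classical = {ratio:.3e}")
```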

The most revolutionary aspect of Planck's treatment of the black body is that it inherently relies on an integer number of oscillators in thermal equilibrium with the electromagnetic field. These oscillators give their entire energy to the electromagnetic field, creating a quantum of light, as often as they are excited by the electromagnetic field, absorbing a quantum of light and beginning to oscillate at the corresponding frequency. Planck had intentionally created an atomic theory of the black body, but had unintentionally generated an atomic theory of light, where the black body never generates quanta of light at a given frequency with an energy less than hf. However, once he realized that he had quantized the electromagnetic field, he denounced particles of light as a limitation of his approximation, not a property of reality.

Photoelectric effect

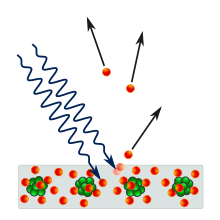

The photoelectric effect. Incoming photons on the left strike a metal plate (bottom) and eject electrons, depicted as flying off to the right.

While Planck had solved the ultraviolet catastrophe by using atoms and a quantized electromagnetic field, most contemporary physicists agreed that Planck's "light quanta" represented only flaws in his model. A more complete derivation of black-body radiation would yield a fully continuous and "wave-like" electromagnetic field with no quantization.

However, in 1905 Albert Einstein took Planck's black body model to produce his solution to another outstanding problem of the day: the photoelectric effect, wherein electrons are emitted from atoms when they absorb energy from light. Since the electron's existence had been proposed eight years earlier, the phenomenon had been studied with the electron model in mind in physics laboratories worldwide.

In 1902, Philipp Lenard discovered that the energy of these ejected electrons did not depend on the intensity of the incoming light, but instead on its frequency. So if one shines a little low-frequency light upon a metal, a few low-energy electrons are ejected. If one now shines a very intense beam of low-frequency light upon the same metal, a whole slew of electrons are ejected; however, they possess the same low energy, and there are merely more of them. The more light there is, the more electrons are ejected. To get high-energy electrons, by contrast, one must illuminate the metal with high-frequency light. Like black-body radiation, this was at odds with a theory invoking continuous transfer of energy between radiation and matter. However, it can still be explained using a fully classical description of light, as long as matter is quantum mechanical in nature.

If one used Planck's energy quanta, and demanded that electromagnetic radiation at a given frequency could only transfer energy to matter in integer multiples of an energy quantum hf, then the photoelectric effect could be explained very simply. Low-frequency light only ejects low-energy electrons because each electron is excited by the absorption of a single photon. Increasing the intensity of the low-frequency light (increasing the number of photons) only increases the number of excited electrons, not their energy, because the energy of each photon remains low. Only by increasing the frequency of the light, and thus increasing the energy of the photons, can one eject electrons with higher energy. Thus, using Planck's constant h to determine the energy of the photons based upon their frequency, the energy of ejected electrons should also increase linearly with frequency, the gradient of the line being Planck's constant. These results were not confirmed until 1915, when Robert Andrews Millikan produced experimental results in perfect accord with Einstein's predictions.
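The linear relation can be checked with a least-squares fit. The sketch below generates synthetic, noise-free points from Einstein's relation K_max = hf − φ for an assumed work function (2.3 eV, an illustrative value, not measured data) and recovers h as the slope; the intercept gives back −φ. With real measurements one would fit stopping voltages instead, and noise would scatter the points around the line. The function names are hypothetical.

```python
H = 6.62607015e-34           # Planck constant, J*s
E_CHARGE = 1.602176634e-19   # elementary charge, C (joules per eV)


def max_kinetic_energy(f: float, work_function_ev: float) -> float:
    """Einstein's relation K_max = h*f - phi, in joules (negative: no emission)."""
    return H * f - work_function_ev * E_CHARGE


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope*x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    phi_ev = 2.3  # assumed work function, illustrative only
    freqs = [6.0e14, 7.0e14, 8.0e14, 9.0e14, 1.0e15]  # all above threshold
    energies = [max_kinetic_energy(f, phi_ev) for f in freqs]
    slope, intercept = fit_line(freqs, energies)
    print(f"fitted slope    = {slope:.5e} J*s (Planck's constant)")
    print(f"recovered phi   = {-intercept / E_CHARGE:.3f} eV")
    print(f"threshold freq. = {-intercept / slope:.3e} Hz")
```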

While the energy of ejected electrons reflected Planck's constant, the existence of photons was not explicitly proven until the discovery of the photon antibunching effect, a modern version of which can be performed in undergraduate-level labs. This phenomenon could only be explained via photons.
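Such experiments are usually summarized by the second-order correlation at zero delay, g²(0): classical light of any kind gives g²(0) ≥ 1, whereas a single photon cannot be registered at both outputs of a beam splitter, so g²(0) falls below 1. A minimal sketch, assuming the three-detector arrangement common in undergraduate versions (a "gate" detector heralds a photon, and detectors T and R watch the two beam-splitter outputs), for which g²(0) ≈ N_G·N_GTR / (N_GT·N_GR). The counts are hypothetical numbers chosen for illustration, not data, and the function names are illustrative.

```python
def g2_zero(n_gate: int, n_gt: int, n_gr: int, n_gtr: int) -> float:
    """Heralded second-order correlation estimate g2(0).

    n_gate: gate (herald) detections
    n_gt, n_gr: coincidences of the gate with detector T, or with detector R
    n_gtr: triple coincidences (gate, T and R all fire)
    """
    if n_gt <= 0 or n_gr <= 0:
        raise ValueError("need nonzero double-coincidence counts")
    return n_gate * n_gtr / (n_gt * n_gr)


def is_nonclassical(g2: float) -> bool:
    """Any classical field satisfies g2(0) >= 1; values below 1 need photons."""
    return g2 < 1.0


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    heralded = g2_zero(n_gate=100_000, n_gt=4_000, n_gr=4_000, n_gtr=10)
    # Triples at the rate expected if T and R fired independently:
    # n_gate * (n_gt / n_gate) * (n_gr / n_gate) = 160.
    independent = g2_zero(n_gate=100_000, n_gt=4_000, n_gr=4_000, n_gtr=160)
    print(heralded, is_nonclassical(heralded))
    print(independent, is_nonclassical(independent))
```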

Einstein's "light quanta" would not be called photons until 1925, but even in 1905 they represented the quintessential example of wave–particle duality. Electromagnetic radiation propagates following linear wave equations, but can only be emitted or absorbed as discrete elements, thus acting as a wave and a particle simultaneously.

Einstein's explanation of photoelectric effect

In 1905, Albert Einstein provided an explanation of the photoelectric effect, an experiment that the wave theory of light failed to explain. He did so by postulating the existence of photons, quanta of light energy with particulate qualities.

In the photoelectric effect, it was observed that shining a light on certain metals would lead to an electric current in a circuit. Presumably, the light was knocking electrons out of the metal, causing current to flow. However, using the case of potassium as an example, it was also observed that while a dim blue light was enough to cause a current, even the strongest, brightest red light available with the technology of the time caused no current at all. According to the classical theory of light and matter, the strength or amplitude of a light wave was in proportion to its brightness: a bright light should have been easily strong enough to create a large current. Yet, oddly, this was not so.

Einstein explained this enigma by postulating that the electrons can receive energy from the electromagnetic field only in discrete units (quanta or photons): an amount of energy E that was related to the frequency f of the light by

$$E = hf$$

where h is Planck's constant (6.626 × 10⁻³⁴ J⋅s). Only photons of a high enough frequency (above a certain threshold value) could knock an electron free. For example, photons of blue light had sufficient energy to free an electron from the metal, but photons of red light did not. One photon of light above the threshold frequency could release only one electron; the higher the frequency of a photon, the higher the kinetic energy of the emitted electron, but no amount of light below the threshold frequency could release an electron. Violating this law would require extremely high-intensity lasers, which had not yet been invented. Intensity-dependent phenomena have now been studied in detail with such lasers.
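The threshold behaviour in the potassium example follows from comparing each photon's energy hf = hc/λ with the metal's work function. A sketch assuming a work function of about 2.3 eV for potassium (an approximate value used here for illustration), a blue wavelength of 450 nm and a red one of 700 nm, and the idealization that every above-threshold photon ejects exactly one electron; the function names are hypothetical.

```python
H = 6.62607015e-34     # Planck constant, J*s
C = 2.99792458e8       # speed of light, m/s
EV = 1.602176634e-19   # joules per electronvolt


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon, E = h*c/lambda, in eV."""
    return H * C / (wavelength_nm * 1e-9) / EV


def illuminate(wavelength_nm: float, n_photons: int, work_function_ev: float):
    """Return (kinetic energy per electron in eV, or None; electrons ejected).

    Idealization (an assumption): each photon above threshold frees exactly
    one electron, and photons never pool their energy.
    """
    surplus = photon_energy_ev(wavelength_nm) - work_function_ev
    if surplus <= 0:
        return None, 0           # brightness does not help below threshold
    return surplus, n_photons    # brightness changes the count, not the energy


if __name__ == "__main__":
    PHI_K = 2.3  # approximate work function of potassium, eV (assumed)
    print("threshold wavelength:", round(H * C / (PHI_K * EV) * 1e9), "nm")
    print("dim blue  :", illuminate(450, 1_000, PHI_K))
    print("dim red   :", illuminate(700, 1_000, PHI_K))
    print("bright red:", illuminate(700, 10**20, PHI_K))
```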

Einstein was awarded the Nobel Prize in Physics in 1921 for his discovery of the law of the photoelectric effect.

de Broglie's hypothesis

Propagation of de Broglie waves in 1D: the real part of the complex amplitude is blue, the imaginary part is green. The probability (shown as the colour opacity) of finding the particle at a given point x is spread out like a waveform; there is no definite position of the particle. As the amplitude increases above zero the curvature decreases, so the amplitude decreases again, and vice versa; the result is an alternating amplitude: a wave. Top: plane wave. Bottom: wave packet.

In 1924, Louis-Victor de Broglie formulated the de Broglie hypothesis, claiming that all matter has a wave-like nature; he related wavelength λ and momentum p:

$$\lambda = \frac{h}{p}$$

This is a generalization of Einstein's equation above, since the momentum of a photon is given by $p = \frac{E}{c}$ and the wavelength (in a vacuum) by $\lambda = \frac{c}{f}$, where c is the speed of light in vacuum.

De Broglie's formula was confirmed three years later for electrons with the observation of electron diffraction in two independent experiments. At the University of Aberdeen, George Paget Thomson passed a beam of electrons through a thin metal film and observed the predicted interference patterns. At Bell Labs, Clinton Joseph Davisson and Lester Halbert Germer directed the electron beam at a nickel crystal, whose regular lattice acted as a diffraction grid, in their experiment popularly known as the Davisson–Germer experiment.
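The wavelength probed in these experiments follows directly from λ = h/p. A sketch, assuming (for illustration) a 54 eV electron beam, the energy usually quoted for the Davisson–Germer measurement, and using the non-relativistic momentum p = √(2mE), which is accurate at such low energies. The final lines check that the same formula, fed a photon's momentum p = E/c, gives back λ = c/f. The function names are illustrative.

```python
import math

H = 6.62607015e-34       # Planck constant, J*s
C = 2.99792458e8         # speed of light, m/s
EV = 1.602176634e-19     # joules per electronvolt
M_E = 9.1093837015e-31   # electron mass, kg


def de_broglie_wavelength(momentum: float) -> float:
    """lambda = h / p, in metres."""
    return H / momentum


def nonrelativistic_momentum(mass: float, kinetic_ev: float) -> float:
    """p = sqrt(2 m E); adequate when E is far below m c^2."""
    return math.sqrt(2 * mass * kinetic_ev * EV)


if __name__ == "__main__":
    lam = de_broglie_wavelength(nonrelativistic_momentum(M_E, 54.0))
    print(f"54 eV electron: {lam * 1e9:.4f} nm")  # comparable to atomic spacings

    f = 5.0e14            # an arbitrary optical frequency, Hz
    photon_p = H * f / C  # p = E/c with E = h f
    print(de_broglie_wavelength(photon_p), C / f)  # the two agree
```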

De Broglie was awarded the Nobel Prize for Physics in 1929 for his hypothesis. Thomson and Davisson shared the Nobel Prize for Physics in 1937 for their experimental work.

Heisenberg's uncertainty principle

In his work on formulating quantum mechanics, Werner Heisenberg postulated his uncertainty principle, which states:

$$\sigma_x \sigma_p \geq \frac{\hbar}{2}$$

where

- σ indicates standard deviation, a measure of spread or uncertainty;
- x and p are a particle's position and linear momentum respectively;
- ħ is the reduced Planck constant (Planck's constant divided by 2π).
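A quick numerical reading of the inequality: the minimum momentum spread is ħ/(2σ_x), and dividing by the mass gives the minimum velocity spread. The sketch assumes an electron confined to about 0.1 nm (roughly an atom's size) and a 1 g object localized to 1 μm; both cases are illustrative choices, and the function names are hypothetical. The contrast shows why the uncertainty matters for electrons and is negligible for everyday objects.

```python
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
M_E = 9.1093837015e-31   # electron mass, kg


def min_momentum_spread(sigma_x: float) -> float:
    """Smallest sigma_p allowed by sigma_x * sigma_p >= hbar / 2."""
    return HBAR / (2 * sigma_x)


def min_velocity_spread(sigma_x: float, mass: float) -> float:
    """Smallest velocity spread sigma_v = sigma_p / m (non-relativistic)."""
    return min_momentum_spread(sigma_x) / mass


if __name__ == "__main__":
    print(f"electron in 0.1 nm: {min_velocity_spread(1e-10, M_E):.3e} m/s")
    print(f"1 g object in 1 um: {min_velocity_spread(1e-6, 1e-3):.3e} m/s")
```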

Heisenberg originally explained this as a consequence of the process of measuring: measuring position accurately would disturb momentum and vice versa, offering an example (the "gamma-ray microscope") that depended crucially on the de Broglie hypothesis.

It is now thought, however, that this only partly explains the phenomenon, and that the uncertainty also exists in the particle itself, even before the measurement is made.

In fact, the modern explanation of the uncertainty principle, extending the Copenhagen interpretation first put forward by Bohr and Heisenberg, depends even more centrally on the wave nature of a particle. Just as it is nonsensical to discuss the precise location of a wave on a string, particles do not have perfectly precise positions; likewise, just as it is nonsensical to discuss the wavelength of a "pulse" wave traveling down a string, particles do not have perfectly precise momenta, which correspond to the inverse of wavelength. Moreover, when position is relatively well defined, the wave is pulse-like and has a very ill-defined wavelength, and thus momentum. Conversely, when momentum, and thus wavelength, is relatively well defined, the wave looks long and sinusoidal, and therefore it has a very ill-defined position.

de Broglie–Bohm theory

Couder experiments, "materializing" the pilot wave model.

De Broglie himself had proposed a pilot wave construct to explain the observed wave–particle duality. In this view, each particle has a well-defined position and momentum, but is guided by a wave function derived from Schrödinger's equation.

The pilot wave theory was initially rejected because it generated non-local effects when applied to systems involving more than one particle. Non-locality, however, soon became established as an integral feature of quantum theory, and David Bohm extended de Broglie's model to explicitly include it.

In the resulting representation, also called the de Broglie–Bohm theory or Bohmian mechanics, the wave–particle duality vanishes: the wave behaviour is explained as scattering with a wave-like appearance, because the particle's motion is subject to a guiding equation or quantum potential.
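In its common one-dimensional form, the guiding equation sets the particle velocity to v = (ħ/m)·Im(ψ′/ψ). A minimal sketch in units where ħ = m = 1 (an assumption for readability), with the derivative taken by central finite differences; the wavefunctions and function names are illustrative. For a single plane wave every particle moves at ħk/m, while a superposition of two plane waves produces a position-dependent velocity field, which is where interference-like trajectories come from.

```python
import cmath

HBAR = 1.0  # natural units, an assumption for illustration
MASS = 1.0


def guidance_velocity(psi, x: float, dx: float = 1e-6) -> float:
    """de Broglie-Bohm velocity v = (hbar/m) * Im(psi'(x) / psi(x)).

    psi must be nonzero at x; the derivative uses a central difference.
    """
    dpsi = (psi(x + dx) - psi(x - dx)) / (2 * dx)
    return HBAR / MASS * (dpsi / psi(x)).imag


def plane_wave(k: float):
    """psi(x) = exp(i k x)."""
    return lambda x: cmath.exp(1j * k * x)


def two_waves(k1: float, k2: float, amp2: float):
    """Superposition exp(i k1 x) + amp2 * exp(i k2 x)."""
    return lambda x: cmath.exp(1j * k1 * x) + amp2 * cmath.exp(1j * k2 * x)


if __name__ == "__main__":
    print(guidance_velocity(plane_wave(2.0), 0.3))     # close to hbar*k/m = 2
    psi = two_waves(1.0, 3.0, 0.5)
    for x in (0.0, 0.5, 1.0, 1.5):
        print(x, round(guidance_velocity(psi, x), 4))  # varies with x
```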

This idea seems to me so natural and simple, to resolve the wave–particle dilemma in such a clear and ordinary way, that it is a great mystery to me that it was so generally ignored. – J. S. Bell

The best illustration of the pilot-wave model was given by Couder's 2010 "walking droplets" experiments, demonstrating the pilot-wave behaviour in a macroscopic mechanical analog.

Wave nature of large objects

Since the demonstrations of wave-like properties in photons and electrons, similar experiments have been conducted with neutrons and protons, as well as with atoms. Among the most famous experiments are those of Estermann and Otto Stern in 1929, who diffracted beams of helium atoms and hydrogen molecules. Authors of similar recent experiments with atoms and molecules, described below, claim that these larger particles also act like waves.

A dramatic series of experiments emphasizing the action of gravity in relation to wave–particle duality was conducted in the 1970s using the neutron interferometer. Neutrons, one of the components of the atomic nucleus, provide much of the mass of a nucleus and thus of ordinary matter. In the neutron interferometer, they act as quantum-mechanical waves directly subject to the force of gravity. While the results were not surprising, since gravity was known to act on everything, including light (see tests of general relativity and the Pound–Rebka falling photon experiment), the self-interference of the quantum mechanical wave of a massive fermion in a gravitational field had never been experimentally confirmed before.
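The size of the gravitational effect in such an interferometer can be estimated with a short argument: a neutron on the upper path has gained potential energy mgΔh and so has lower momentum, by Δp ≈ m²gΔh/p, and over a horizontal path length L this shifts the phase by ΔpL/ħ. With p = h/λ this gives Δφ = 2π m² g A λ sin α / h², where A = LΔh is the enclosed area and α the tilt of the interferometer plane from horizontal. This is the standard estimate associated with the Colella–Overhauser–Werner experiment of 1975; the wavelength and area in the sketch are illustrative assumptions, not the published apparatus values, and the function names are hypothetical.

```python
import math

H = 6.62607015e-34         # Planck constant, J*s
HBAR = H / (2 * math.pi)   # reduced Planck constant, J*s
M_N = 1.67492749804e-27    # neutron mass, kg
G = 9.80665                # standard gravity, m/s^2


def cow_phase(wavelength: float, area: float, tilt: float = math.pi / 2,
              g: float = G) -> float:
    """Gravity-induced phase difference (radians) between the two paths.

    tilt is the angle of the interferometer plane from horizontal; a
    horizontal interferometer (tilt = 0) gives no gravitational phase.
    """
    return 2 * math.pi * M_N**2 * g * area * wavelength * math.sin(tilt) / H**2


def phase_from_momentum_shift(wavelength: float, height: float, length: float,
                              g: float = G) -> float:
    """Same estimate via delta_p = m^2 g h / p and phase = delta_p * L / hbar."""
    p = H / wavelength
    delta_p = M_N**2 * g * height / p
    return delta_p * length / HBAR


if __name__ == "__main__":
    lam = 1.4e-10   # illustrative thermal-neutron wavelength, m (assumed)
    area = 1.0e-3   # illustrative enclosed area, m^2 (10 cm^2, assumed)
    for deg in (0, 15, 30, 60, 90):
        print(deg, round(cow_phase(lam, area, math.radians(deg)), 2), "rad")
```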

In 1999, the diffraction of C60 fullerenes by researchers from the University of Vienna was reported. Fullerenes are comparatively large and massive objects, having an atomic mass of about 720 u. The de Broglie wavelength of the incident beam was about 2.5 pm, whereas the diameter of the molecule is about 1 nm, about 400 times larger. In 2012, these far-field diffraction experiments could be extended to phthalocyanine molecules and their heavier derivatives, which are composed of 58 and 114 atoms respectively. In these experiments the build-up of such interference patterns could be recorded in real time and with single-molecule sensitivity.
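The figures quoted for C60 can be cross-checked with λ = h/(mv). Using only the numbers in the text (720 u, 2.5 pm, 1 nm), the sketch back-solves the beam velocity those values imply and the size-to-wavelength ratio; the helper names are illustrative.

```python
H = 6.62607015e-34       # Planck constant, J*s
U = 1.66053906660e-27    # atomic mass unit, kg


def implied_velocity(mass_u: float, wavelength_m: float) -> float:
    """Solve lambda = h / (m v) for v, in m/s."""
    return H / (mass_u * U * wavelength_m)


def size_to_wavelength(size_m: float, wavelength_m: float) -> float:
    """How many wavelengths fit across the object."""
    return size_m / wavelength_m


if __name__ == "__main__":
    v = implied_velocity(720.0, 2.5e-12)
    print(f"implied C60 beam speed: {v:.0f} m/s")
    print(f"diameter / wavelength: {size_to_wavelength(1e-9, 2.5e-12):.0f}")
```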

In 2003, the Vienna group also demonstrated the wave nature of tetraphenylporphyrin, a flat biodye with an extension of about 2 nm and a mass of 614 u. For this demonstration they employed a near-field Talbot–Lau interferometer. In the same interferometer they also found interference fringes for C60F48, a fluorinated buckyball with a mass of about 1600 u, composed of 108 atoms.

Large molecules are already so complex that they give experimental access to some aspects of the quantum-classical interface, i.e., to certain decoherence mechanisms. In 2011, the interference of molecules as heavy as 6910 u could be demonstrated in a Kapitza–Dirac–Talbot–Lau interferometer. In 2013, the interference of molecules beyond 10,000 u was demonstrated.

Whether objects heavier than the Planck mass (about the weight of a large bacterium) have a de Broglie wavelength is theoretically unclear and experimentally unreachable; above the Planck mass a particle's Compton wavelength would be smaller than the Planck length and its own Schwarzschild radius, a scale at which current theories of physics may break down or need to be replaced by more general ones.
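The crossover can be seen by comparing two length scales as a function of mass: the reduced Compton wavelength ħ/(mc), which shrinks as 1/m, and the Schwarzschild radius 2Gm/c², which grows as m. The sketch uses the standard definitions m_P = √(ħc/G) and l_P = √(ħG/c³) and checks that at the Planck mass the reduced Compton wavelength equals the Planck length; using the reduced rather than the ordinary Compton wavelength is a convention chosen here, and the function names are illustrative.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
C = 2.99792458e8         # speed of light, m/s
G = 6.67430e-11          # gravitational constant, m^3/(kg s^2)

PLANCK_MASS = math.sqrt(HBAR * C / G)        # kg
PLANCK_LENGTH = math.sqrt(HBAR * G / C**3)   # m


def reduced_compton(mass: float) -> float:
    """hbar / (m c): the quantum length scale of a mass."""
    return HBAR / (mass * C)


def schwarzschild_radius(mass: float) -> float:
    """2 G m / c^2: the horizon radius if the mass were a black hole."""
    return 2 * G * mass / C**2


if __name__ == "__main__":
    print(f"Planck mass   = {PLANCK_MASS:.3e} kg")
    print(f"Planck length = {PLANCK_LENGTH:.3e} m")
    for factor in (0.01, 0.1, 1.0, 10.0, 100.0):
        m = factor * PLANCK_MASS
        print(f"{factor:>6} m_P: compton {reduced_compton(m):.2e} m, "
              f"schwarzschild {schwarzschild_radius(m):.2e} m")
```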

Recently Couder, Fort, et al. showed that macroscopic oil droplets on a vibrating surface can be used as a model of wave–particle duality. A localized droplet creates periodic waves around itself, and its interaction with them leads to quantum-like phenomena: interference in the double-slit experiment, unpredictable tunneling (depending in a complicated way on the practically hidden state of the field), orbit quantization[36] (the particle has to 'find a resonance' with the field perturbations it creates, so that after one orbit its internal phase returns to the initial state), and the Zeeman effect.

Importance

Wave–particle duality is deeply embedded into the foundations of quantum mechanics. In the formalism of the theory, all the information about a particle is encoded in its wave function, a complex-valued function roughly analogous to the amplitude of a wave at each point in space. This function evolves according to the Schrödinger equation. For particles with mass this equation has solutions that follow the form of the wave equation. Propagation of such waves leads to wave-like phenomena such as interference and diffraction. Particles without mass, like photons, are not described by the Schrödinger equation, so their waves obey a different wave equation.

The particle-like behaviour is most evident in phenomena associated with measurement in quantum mechanics. Upon measuring the location of the particle, the particle will be forced into a more localized state as given by the uncertainty principle. When viewed through this formalism, the measurement of the wave function will randomly lead to wave function collapse to a sharply peaked function at some location. For particles with mass, the likelihood of detecting the particle at any particular location is equal to the squared amplitude of the wave function there. The measurement will return a well-defined position, and is subject to Heisenberg's uncertainty principle.
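The statistical rule described here can be simulated directly. A minimal sketch, assuming a wavefunction sampled on a grid (two separated Gaussian lobes with a relative phase, an illustrative choice): it normalizes ψ, turns |ψ|²dx into detection probabilities, and draws individual detections. Each draw is one definite, particle-like position, while the histogram of many draws reproduces the wave's intensity pattern. The function names are hypothetical.

```python
import cmath
import math
import random


def gaussian_lobe(x: float, center: float, width: float) -> float:
    """Real Gaussian amplitude whose square has standard deviation width."""
    return math.exp(-((x - center) ** 2) / (4 * width**2))


def normalize(values, dx):
    """Scale samples so that sum(|psi|^2) * dx == 1."""
    norm = math.sqrt(sum(abs(v) ** 2 for v in values) * dx)
    return [v / norm for v in values]


def born_probabilities(values, dx):
    """Probability of detection in each grid cell: |psi(x)|^2 dx."""
    return [abs(v) ** 2 * dx for v in values]


def detect(xs, probs, n, seed=0):
    """Simulate n independent position measurements."""
    rng = random.Random(seed)
    return rng.choices(xs, weights=probs, k=n)


if __name__ == "__main__":
    dx = 0.01
    xs = [-6 + i * dx for i in range(1201)]
    psi = [gaussian_lobe(x, -2, 0.5) + cmath.exp(0.7j) * gaussian_lobe(x, 2, 0.5)
           for x in xs]
    probs = born_probabilities(normalize(psi, dx), dx)
    print("first detections:", [round(h, 2) for h in detect(xs, probs, 10)])
    many = detect(xs, probs, 20_000)
    print("fraction on the right:", sum(h > 0 for h in many) / len(many))
```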

Following the development of quantum field theory, the ambiguity disappeared. The field permits solutions that follow the wave equation, which are referred to as the wave functions. The term particle is used to label the irreducible representations of the Poincaré group that are permitted by the field. An interaction as in a Feynman diagram is accepted as a calculationally convenient approximation where the outgoing legs are known to be simplifications of the propagation and the internal lines are for some order in an expansion of the field interaction. Since the field is non-local and quantized, the phenomena that previously were thought of as paradoxes are explained. Within the limits of the wave–particle duality, quantum field theory gives the same results.

Visualization

There are two ways to visualize the wave–particle behaviour: by the standard interpretation and by the de Broglie–Bohm theory.

Below is an illustration of wave–particle duality as it relates to de Broglie's hypothesis and Heisenberg's uncertainty principle, in terms of the position and momentum space wavefunctions for one spinless particle with mass in one dimension. These wavefunctions are Fourier transforms of each other.

The more localized the position-space wavefunction, the more likely the particle is to be found with the position coordinates in that region, and correspondingly the momentum-space wavefunction is less localized, so the possible momentum components the particle could have are more widespread.

Conversely, the more localized the momentum-space wavefunction, the more likely the particle is to be found with those values of momentum components in that region, and correspondingly the less localized the position-space wavefunction, so the position coordinates the particle could occupy are more widespread.
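The Fourier relationship can be checked numerically. A sketch in units with ħ = 1 (so momentum equals wavenumber k), assuming Gaussian wavefunctions of several widths and computing the momentum-space amplitude with a direct discrete Fourier sum rather than an FFT library, to keep it dependency-free; the grid sizes and function names are illustrative. Narrowing σ_x widens σ_p, and for Gaussians the product stays at the minimum value 1/2, i.e. ħ/2.

```python
import cmath
import math


def grid(lo: float, hi: float, step: float):
    n = int(round((hi - lo) / step)) + 1
    return [lo + i * step for i in range(n)]


def gaussian(sigma_x: float):
    """Position wavefunction whose |psi|^2 has standard deviation sigma_x."""
    return lambda x: math.exp(-x * x / (4 * sigma_x**2))


def to_momentum_space(psi_vals, xs, dx, ks):
    """phi(k) = (2 pi)^(-1/2) * sum over x of psi(x) exp(-i k x) dx (hbar = 1)."""
    scale = dx / math.sqrt(2 * math.pi)
    return [scale * sum(p * cmath.exp(-1j * k * x) for p, x in zip(psi_vals, xs))
            for k in ks]


def spread(amplitudes, points):
    """Standard deviation of the distribution |amplitude|^2 on a uniform grid."""
    weights = [abs(a) ** 2 for a in amplitudes]
    total = sum(weights)
    mean = sum(w * p for w, p in zip(weights, points)) / total
    return math.sqrt(sum(w * (p - mean) ** 2 for w, p in zip(weights, points)) / total)


def widths(sigma_x: float, step: float = 0.05, half_range: float = 12.0):
    """Return (sigma_x, sigma_p) measured from the sampled wavefunctions."""
    xs = grid(-half_range, half_range, step)
    ks = grid(-half_range, half_range, step)
    psi_vals = [gaussian(sigma_x)(x) for x in xs]
    phi_vals = to_momentum_space(psi_vals, xs, step, ks)
    return spread(psi_vals, xs), spread(phi_vals, ks)


if __name__ == "__main__":
    for s in (0.5, 1.0, 2.0):
        sx, sp = widths(s)
        print(f"sigma_x = {sx:.3f}  sigma_p = {sp:.3f}  product = {sx * sp:.4f}")
```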

Position x and momentum p wavefunctions corresponding to quantum particles. The colour opacity of the particles corresponds to the probability density of finding the particle with position x or momentum component p.

Top: If the wavelength λ is unknown, so are the momentum p, wave-vector k and energy E (de Broglie relations). As the particle is more localized in position space, Δx is small while Δp_x is large.

Bottom: If λ is known, so are p, k, and E. As the particle is more localized in momentum space, Δp is small while Δx is large.

Alternative views

Wave–particle duality is an ongoing conundrum in modern physics. Most physicists accept wave–particle duality as the best explanation for a broad range of observed phenomena; however, it is not without controversy. Alternative views are also presented here. These views are not generally accepted by mainstream physics, but serve as a basis for valuable discussion within the community.

Both-particle-and-wave view

The pilot wave model, originally developed by Louis de Broglie and further developed by David Bohm into the hidden variable theory, proposes that there is no duality, but rather that a system exhibits both particle properties and wave properties simultaneously, and that particles are guided, in a deterministic fashion, by the pilot wave (or its "quantum potential"), which will direct them to areas of constructive interference in preference to areas of destructive interference. This idea is held by a significant minority within the physics community.

At least one physicist considers the "wave-duality" as not being an incomprehensible mystery. L.E. Ballentine, Quantum Mechanics, A Modern Development (1989), p. 4, explains:

When first discovered, particle diffraction was a source of great puzzlement. Are "particles" really "waves?" In the early experiments, the diffraction patterns were detected holistically by means of a photographic plate, which could not detect individual particles. As a result, the notion grew that particle and wave properties were mutually incompatible, or complementary, in the sense that different measurement apparatuses would be required to observe them. That idea, however, was only an unfortunate generalization from a technological limitation. Today it is possible to detect the arrival of individual electrons, and to see the diffraction pattern emerge as a statistical pattern made up of many small spots (Tonomura et al., 1989). Evidently, quantum particles are indeed particles, but whose behaviour is very different from classical physics would have us to expect.

The Afshar experiment (2007) may suggest that it is possible to simultaneously observe both wave and particle properties of photons. This claim is, however, disputed by other scientists.

Wave-only view

Carver Mead, an American scientist and professor at Caltech, proposes that the duality can be replaced by a "wave-only" view. In his book Collective Electrodynamics: Quantum Foundations of Electromagnetism (2000), Mead purports to analyze the behavior of electrons and photons purely in terms of electron wave functions, and attributes the apparent particle-like behavior to quantization effects and eigenstates.

According to reviewer David Haddon:

Mead has cut the Gordian knot of quantum complementarity. He claims that atoms, with their neutrons, protons, and electrons, are not particles at all but pure waves of matter. Mead cites as the gross evidence of the exclusively wave nature of both light and matter the discovery between 1933 and 1996 of ten examples of pure wave phenomena, including the ubiquitous laser of CD players, the self-propagating electrical currents of superconductors, and the Bose–Einstein condensate of atoms.

Albert Einstein, who, in his search for a Unified Field Theory, did not accept wave-particle duality, wrote:

This double nature of radiation (and of material corpuscles) ... has been interpreted by quantum-mechanics in an ingenious and amazingly successful fashion. This interpretation ... appears to me as only a temporary way out...

The many-worlds interpretation (MWI) is sometimes presented as a waves-only theory, including by its originator, Hugh Everett who referred to MWI as "the wave interpretation".

The three wave hypothesis of R. Horodecki relates the particle to the wave. The hypothesis implies that a massive particle is an intrinsically spatially, as well as temporally, extended wave phenomenon governed by a nonlinear law.

The deterministic collapse theory considers collapse and measurement as two independent physical processes. Collapse occurs when two wavepackets spatially overlap and satisfy a mathematical criterion, which depends on the parameters of both wavepackets. It is a contraction to the overlap volume. In a measurement apparatus one of the two wavepackets is one of the atomic clusters which constitute the apparatus, and the wavepackets collapse to at most the volume of such a cluster. This mimics the action of a point particle.

Particle-only view

In the days of the old quantum theory, a pre-quantum-mechanical version of wave–particle duality was pioneered by William Duane and developed by others including Alfred Landé. Duane explained the diffraction of x-rays by a crystal solely in terms of their particle aspect. The deflection of the trajectory of each diffracted photon was explained as due to quantized momentum transfer from the spatially regular structure of the diffracting crystal.
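Duane's argument can be written out in a few lines. Assuming, as in his particle-only treatment, that a crystal with lattice-plane spacing d can exchange momentum perpendicular to those planes only in multiples of h/d, and that an x-ray photon of wavelength λ carries momentum h/λ, elastic deflection at glancing angle θ reproduces the Bragg condition that the wave picture derives from interference:

$$
\begin{aligned}
|\Delta \vec{p}| &= 2\,\frac{h}{\lambda}\sin\theta && \text{(elastic deflection: the momentum component normal to the planes reverses)}\\
|\Delta \vec{p}| &= n\,\frac{h}{d}, \quad n = 1, 2, \dots && \text{(quantized transfer from the lattice)}\\
\Rightarrow\quad 2d\sin\theta &= n\lambda
\end{aligned}
$$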

Neither-wave-nor-particle view

It has been argued that there are never exact particles or waves, but only some compromise or intermediate between them. For this reason, in 1928 Arthur Eddington coined the name "wavicle" to describe the objects, although the term is not regularly used today. One consideration is that zero-dimensional mathematical points cannot be observed. Another is that the formal representation of such points, the Dirac delta function, is unphysical, because it cannot be normalized. Parallel arguments apply to pure wave states. Roger Penrose states:

Such 'position states' are idealized wavefunctions in the opposite sense from the momentum states. Whereas the momentum states are infinitely spread out, the position states are infinitely concentrated. Neither is normalizable [...].

Relational approach to wave–particle duality

Relational quantum mechanics has been developed as a point of view that regards the event of particle detection as having established a relationship between the quantized field and the detector. The inherent ambiguity associated with applying Heisenberg's uncertainty principle is consequently avoided; hence there is no wave–particle duality.

Uses

Although it is difficult to draw a line separating wave–particle duality from the rest of quantum mechanics, it is nevertheless possible to list some applications of this basic idea.

- Wave–particle duality is exploited in electron microscopy, where the small wavelengths associated with the electron can be used to view objects much smaller than can be resolved with visible light.
- Similarly, neutron diffraction uses neutrons with a wavelength of about 0.1 nm, the typical spacing of atoms in a solid, to determine the structure of solids.
- Photos are now able to show this dual nature, which may lead to new ways of examining and recording this behaviour.
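The wavelengths behind the first two applications follow from λ = h/p. A sketch assuming, for illustration, a 100 kV electron microscope (the electron is mildly relativistic at that energy, so the relativistic momentum is used) and the 0.1 nm neutron wavelength quoted above; it also reports the neutron speed and kinetic energy that wavelength implies. The 500 nm comparison wavelength and the function names are illustrative assumptions.

```python
import math

H = 6.62607015e-34         # Planck constant, J*s
C = 2.99792458e8           # speed of light, m/s
EV = 1.602176634e-19       # joules per electronvolt
M_E = 9.1093837015e-31     # electron mass, kg
M_N = 1.67492749804e-27    # neutron mass, kg


def electron_wavelength(accel_volts: float) -> float:
    """Relativistic de Broglie wavelength of an electron accelerated through V.

    p = sqrt(2 m e V (1 + e V / (2 m c^2))), lambda = h / p.
    """
    kinetic = accel_volts * EV
    p = math.sqrt(2 * M_E * kinetic * (1 + kinetic / (2 * M_E * C**2)))
    return H / p


def neutron_from_wavelength(wavelength: float):
    """Speed (m/s) and kinetic energy (eV) of a neutron with this wavelength."""
    speed = H / (M_N * wavelength)
    energy_ev = 0.5 * M_N * speed**2 / EV
    return speed, energy_ev


if __name__ == "__main__":
    lam_e = electron_wavelength(100e3)   # assumed 100 kV microscope
    print(f"100 kV electron: {lam_e * 1e12:.2f} pm "
          f"({500e-9 / lam_e:.0f}x shorter than 500 nm light)")
    v, e = neutron_from_wavelength(1e-10)
    print(f"0.1 nm neutron: {v:.0f} m/s, {e * 1e3:.1f} meV")
```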
