"Survival of the fittest" is a phrase that originated from Darwinian evolutionary theory as a way of describing the mechanism of natural selection. The biological concept of fitness is defined as reproductive success. In Darwinian terms the phrase is best understood as "Survival of the form that will leave the most copies of itself in successive generations."

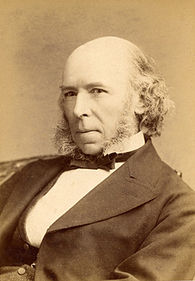

Herbert Spencer first used the phrase, after reading Charles Darwin's On the Origin of Species, in his Principles of Biology (1864), in which he drew parallels between his own economic theories and Darwin's biological ones: "This survival of the fittest, which I have here sought to express in mechanical terms, is that which Mr. Darwin has called 'natural selection', or the preservation of favoured races in the struggle for life."

Darwin responded positively to Alfred Russel Wallace's suggestion of using Spencer's new phrase "survival of the fittest" as an alternative to "natural selection", and adopted the phrase in The Variation of Animals and Plants under Domestication published in 1868. In On the Origin of Species, he introduced the phrase in the fifth edition published in 1869, intending it to mean "better designed for an immediate, local environment".

History of the phrase

By his own account, Herbert Spencer described a concept similar to "survival of the fittest" in his 1852 "A Theory of Population". He first used the phrase – after reading Charles Darwin's On the Origin of Species – in his Principles of Biology of 1864, in which he drew parallels between his economic theories and Darwin's biological, evolutionary ones, writing, "This survival of the fittest, which I have here sought to express in mechanical terms, is that which Mr. Darwin has called 'natural selection', or the preservation of favoured races in the struggle for life."

In July 1866 Alfred Russel Wallace wrote to Darwin about readers thinking that the phrase "natural selection" personified nature as "selecting", and said this misconception could be avoided "by adopting Spencer's term", namely "Survival of the fittest". Darwin promptly replied that Wallace's letter was "as clear as daylight. I fully agree with all that you say on the advantages of H. Spencer's excellent expression of 'the survival of the fittest'. This however had not occurred to me till reading your letter. It is, however, a great objection to this term that it cannot be used as a substantive governing a verb". He added that, had he received the letter two months earlier, he would have worked the phrase into the fourth edition of the Origin, which was then being printed, and that he would use it in his "next book on Domestic Animals etc.".

Darwin wrote on page 6 of The Variation of Animals and Plants under Domestication published in 1868, "This preservation, during the battle for life, of varieties which possess any advantage in structure, constitution, or instinct, I have called Natural Selection; and Mr. Herbert Spencer has well expressed the same idea by the Survival of the Fittest. The term "natural selection" is in some respects a bad one, as it seems to imply conscious choice; but this will be disregarded after a little familiarity". He defended his analogy as similar to language used in chemistry, and to astronomers depicting the "attraction of gravity as ruling the movements of the planets", or the way in which "agriculturists speak of man making domestic races by his power of selection". He had "often personified the word Nature; for I have found it difficult to avoid this ambiguity; but I mean by nature only the aggregate action and product of many natural laws,—and by laws only the ascertained sequence of events."

In the first four editions of On the Origin of Species, Darwin had used the phrase "natural selection". In Chapter 4 of the 5th edition of The Origin, published in 1869, Darwin again treats the two phrases as synonyms: "Natural Selection, or the Survival of the Fittest". By "fittest" Darwin meant "better adapted for the immediate, local environment", not the common modern meaning of "in the best physical shape" (think of a puzzle piece, not an athlete). In the introduction he gave full credit to Spencer, writing "I have called this principle, by which each slight variation, if useful, is preserved, by the term Natural Selection, in order to mark its relation to man's power of selection. But the expression often used by Mr. Herbert Spencer of the Survival of the Fittest is more accurate, and is sometimes equally convenient."

In The Man Versus The State, Spencer used the phrase in a postscript to support an explanation of why his theories would not be adopted by "societies of militant type". He uses the term in the context of societies at war, and the form of his reference suggests that he is applying a general principle:

"Thus by survival of the fittest, the militant type of society becomes characterized by profound confidence in the governing power, joined with a loyalty causing submission to it in all matters whatever".

Though Spencer's conception of organic evolution is commonly interpreted as a form of Lamarckism, Herbert Spencer is sometimes credited with inaugurating Social Darwinism. The phrase "survival of the fittest" has become widely used in popular literature as a catchphrase for any topic related or analogous to evolution and natural selection. It has thus been applied to principles of unrestrained competition, and it has been used extensively by both proponents and opponents of Social Darwinism.

Evolutionary biologists criticise the manner in which the term is used by non-scientists and the connotations that have grown around the term in popular culture. The phrase also does not help in conveying the complex nature of natural selection, so modern biologists prefer and almost exclusively use the term natural selection. The biological concept of fitness refers to reproductive success, as opposed to survival, and does not specify the ways in which organisms can be more "fit" (increase reproductive success), such as by having phenotypic characteristics that enhance survival and reproduction (which was the meaning that Spencer had in mind).

Critiquing the phrase

While the phrase "survival of the fittest" is often used to mean "natural selection", it is avoided by modern biologists, because the phrase can be misleading. For example, survival is only one aspect of selection, and not always the most important. Another problem is that the word "fit" is frequently confused with a state of physical fitness. In the evolutionary meaning "fitness" is the rate of reproductive output among a class of genetic variants.
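The point that survival is only one component of fitness can be illustrated with a small calculation. The sketch below is not taken from the literature discussed here; it assumes a deliberately simple model in which a variant's fitness is its probability of surviving to reproduce multiplied by its mean number of offspring per survivor. The variant names and all numbers are hypothetical.

```python
def expected_offspring(survival_prob, offspring_per_survivor):
    """Toy fitness measure (an assumption): survival times mean reproductive output."""
    return survival_prob * offspring_per_survivor


# Hypothetical variants: (probability of surviving to reproduce, offspring per survivor)
variants = {
    "hardy": (0.9, 2.0),   # survives well, reproduces little
    "fecund": (0.5, 5.0),  # survives poorly, reproduces a lot
}

# Absolute fitness: expected offspring per individual born.
absolute = {name: expected_offspring(s, f) for name, (s, f) in variants.items()}

# Relative fitness: scaled so that the most successful variant has fitness 1.
best = max(absolute.values())
relative = {name: w / best for name, w in absolute.items()}

for name in variants:
    print(f"{name}: absolute={absolute[name]:.2f}, relative={relative[name]:.2f}")
```

Under these assumed numbers the variant with the lower survival rate has the higher fitness, which is the sense in which "fittest" and "best at surviving" can come apart.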

Interpreted as expressing a biological theory

The phrase can also be interpreted to express a theory or hypothesis: that "fit" as opposed to "unfit" individuals or species, in some sense of "fit", will survive some test. However, extending it to individuals is a conceptual mistake: the phrase refers to the transgenerational survival of heritable attributes, and particular individuals are quite irrelevant. This becomes clearer in the case of viral quasispecies and the "survival of the flattest", where "to survive" makes no reference to the question of even being alive, but rather to the functional capacity of proteins to carry out work.

Interpretations of the phrase as expressing a theory are in danger of being tautological, meaning roughly "those with a propensity to survive have a propensity to survive"; to have content the theory must use a concept of fitness that is independent of that of survival.

Interpreted as a theory of species survival, the theory that the fittest species survive is undermined by evidence that while direct competition is observed between individuals, populations and species, there is little evidence that competition has been the driving force in the evolution of large groups such as, for example, amphibians, reptiles, and mammals. Instead, these groups have evolved by expanding into empty ecological niches. In the punctuated equilibrium model of environmental and biological change, the factor determining survival is often not superiority over another in competition but ability to survive dramatic changes in environmental conditions, such as after a meteor impact energetic enough to greatly change the environment globally. For example, the main land-dwelling animals to survive the K-Pg impact 66 million years ago had the ability to live in tunnels.

In 2010 Sahney et al. argued that there is little evidence that intrinsic, biological factors such as competition have been the driving force in the evolution of large groups. Instead, they cited extrinsic, abiotic factors such as expansion as the driving factor on a large evolutionary scale. The rise of dominant groups such as amphibians, reptiles, mammals and birds occurred by opportunistic expansion into empty ecological niches and the extinction of groups happened due to large shifts in the abiotic environment.

Interpreted as expressing a moral theory

Social Darwinists

It has been claimed that "the survival of the fittest" theory in biology was interpreted by late-19th-century capitalists as "an ethical precept that sanctioned cut-throat economic competition" and led to the advent of the theory of "social Darwinism", which was used to justify laissez-faire economics, war and racism. However, these ideas pre-date and commonly contradict Darwin's ideas, and indeed their proponents rarely invoked Darwin in support. The term "social Darwinism" referring to capitalist ideologies was introduced as a term of abuse in Richard Hofstadter's Social Darwinism in American Thought, published in 1944.

Anarchists

Russian anarchist Peter Kropotkin viewed the concept of "survival of the fittest" as supporting co-operation rather than competition. In his book Mutual Aid: A Factor of Evolution he set out his analysis leading to the conclusion that the fittest was not necessarily the best at competing individually, but often the community made up of those best at working together. He concluded that:

In the animal world we have seen that the vast majority of species live in societies, and that they find in association the best arms for the struggle for life: understood in its wide Darwinian sense – not as a struggle for the sheer means of existence, but as a struggle against all natural conditions unfavourable to the species. The animal species, in which individual struggle has been reduced to its narrowest limits, and the practice of mutual aid has attained the greatest development, are invariably the most numerous, the most prosperous, and the most open to further progress.

Applying this concept to human society, Kropotkin presented mutual aid as one of the dominant factors of evolution, the other being self-assertion, and concluded that:

In the practice of mutual aid, which we can retrace to the earliest beginnings of evolution, we thus find the positive and undoubted origin of our ethical conceptions; and we can affirm that in the ethical progress of man, mutual support – not mutual struggle – has had the leading part. In its wide extension, even at the present time, we also see the best guarantee of a still loftier evolution of our race.

Tautology

"Survival of the fittest" is sometimes claimed to be a tautology. The reasoning is that if one takes the term "fit" to mean "endowed with phenotypic characteristics which improve chances of survival and reproduction" (which is roughly how Spencer understood it), then "survival of the fittest" can simply be rewritten as "survival of those who are better equipped for surviving". Furthermore, the expression does become a tautology if one uses the most widely accepted definition of "fitness" in modern biology, namely reproductive success itself (rather than any set of characters conducive to this reproductive success). This reasoning is sometimes used to claim that Darwin's entire theory of evolution by natural selection is fundamentally tautological, and therefore devoid of any explanatory power.

However, the expression "survival of the fittest" (taken on its own and out of context) gives a very incomplete account of the mechanism of natural selection, because it does not mention a key requirement for natural selection: heritability. It is true that the phrase "survival of the fittest", in and of itself, is a tautology if fitness is defined by survival and reproduction. Natural selection, however, is the portion of variation in reproductive success that is caused by heritable characters.

If certain heritable characters increase or decrease the chances of survival and reproduction of their bearers, then it follows mechanically (by definition of "heritable") that those characters that improve survival and reproduction will increase in frequency over generations. This is precisely what is called "evolution by natural selection". On the other hand, if the characters which lead to differential reproductive success are not heritable, then no meaningful evolution will occur, "survival of the fittest" or not: if improvement in reproductive success is caused by traits that are not heritable, then there is no reason why these traits should increase in frequency over generations. In other words, natural selection does not simply state that "survivors survive" or "reproducers reproduce"; rather, it states that "survivors survive, reproduce and therefore propagate any heritable characters which have affected their survival and reproductive success". This statement is not tautological: it hinges on the testable hypothesis that such fitness-impacting heritable variations actually exist (a hypothesis that has been amply confirmed).
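The contrast between heritable and non-heritable characters can be made concrete with a toy calculation. The following is a minimal sketch, not a model drawn from the sources above. It assumes an asexual population with two types, non-overlapping generations, and a hypothetical `heritability` parameter: the probability that an offspring copies its parent's trait rather than acquiring the trait at the current population frequency. The fitness values and starting frequency are illustrative assumptions.

```python
def next_frequency(p, w_trait, w_other, heritability):
    """One generation of selection on a trait with frequency p (toy model)."""
    mean_w = p * w_trait + (1 - p) * w_other
    # Share of offspring whose parent carried the trait (selection step).
    p_among_parents = p * w_trait / mean_w
    # Offspring copy the parent's trait with probability `heritability`;
    # otherwise they acquire it independently of parentage, at frequency p.
    return heritability * p_among_parents + (1 - heritability) * p


def simulate(p0, w_trait, w_other, heritability, generations):
    """Iterate the one-generation update and return the final frequency."""
    p = p0
    for _ in range(generations):
        p = next_frequency(p, w_trait, w_other, heritability)
    return p


# Same 10% reproductive advantage in both runs; only heritability differs.
heritable = simulate(0.1, 1.1, 1.0, heritability=1.0, generations=100)
non_heritable = simulate(0.1, 1.1, 1.0, heritability=0.0, generations=100)

print(f"fully heritable trait: {heritable:.4f}")
print(f"non-heritable trait:   {non_heritable:.4f}")
```

In this sketch the bearers of the trait out-reproduce the others in both runs, yet the trait's frequency rises only when it is passed on. With heritability set to zero the frequency stays at its starting value however large the reproductive advantage.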

Momme von Sydow suggested further definitions of 'survival of the fittest' that may yield a testable meaning in biology and also in other areas where Darwinian processes have been influential. However, much care would be needed to disentangle tautological from testable aspects. Moreover, an "implicit shifting between a testable and an untestable interpretation can be an illicit tactic to immunize natural selection ... while conveying the impression that one is concerned with testable hypotheses".

Skeptics Society founder and Skeptic magazine publisher Michael Shermer addresses the tautology problem in his 1997 book, Why People Believe Weird Things, in which he points out that although tautologies are sometimes the beginning of science, they are never the end, and that scientific principles like natural selection are testable and falsifiable by virtue of their predictive power. Shermer points out, as an example, that population genetics accurately demonstrates when natural selection will and will not effect change in a population. Shermer hypothesizes that if hominid fossils were found in the same geological strata as trilobites, it would be evidence against natural selection.
