An AI takeover is a hypothetical scenario in which artificial intelligence (AI) becomes the dominant form of intelligence on Earth, with computer programs or robots effectively taking control of the planet away from the human species. Possible scenarios include replacement of the entire human workforce, takeover by a superintelligent AI, and the popular notion of a robot uprising. Some public figures, such as Stephen Hawking and Elon Musk, have advocated research into precautionary measures to ensure future superintelligent machines remain under human control.

Types

Automation of the economy

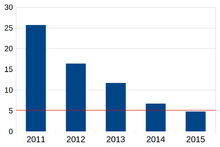

The traditional consensus among economists has been that technological progress does not cause long-term unemployment. However, recent innovation in the fields of robotics and artificial intelligence has raised worries that human labor will become obsolete, leaving people in various sectors without jobs to earn a living and leading to an economic crisis. Many small and medium-sized businesses may also be driven out of business if they cannot afford or license the latest robotic and AI technology, and may need to focus on areas or services that cannot easily be replaced in order to remain viable in the face of such technology.

Technologies that may displace workers

Computer-integrated manufacturing

Computer-integrated manufacturing is the manufacturing approach of using computers to control the entire production process. This integration allows individual processes to exchange information with each other and initiate actions. Although integrating computers can make manufacturing faster and less error-prone, the main advantage is the ability to create automated manufacturing processes. Computer-integrated manufacturing is used in the automotive, aviation, space, and shipbuilding industries.

White-collar machines

The 21st century has seen a variety of skilled tasks partially taken over by machines, including translation, legal research and even low-level journalism. Care work, entertainment, and other tasks requiring empathy, previously thought safe from automation, have also begun to be performed by robots.

Autonomous cars

An autonomous car is a vehicle that is capable of sensing its environment and navigating without human input. Many such vehicles are being developed, but as of May 2017 automated cars permitted on public roads are not yet fully autonomous. They all require a human driver at the wheel who is ready at a moment's notice to take control of the vehicle. Among the main obstacles to widespread adoption of autonomous vehicles are concerns about the resulting loss of driving-related jobs in the road transport industry. On March 18, 2018, the first human was killed by an autonomous vehicle, an Uber self-driving car in Tempe, Arizona.

Eradication

Scientists such as Stephen Hawking are confident that superhuman artificial intelligence is physically possible, stating "there is no physical law precluding particles from being organised in ways that perform even more advanced computations than the arrangements of particles in human brains".

Scholars like Nick Bostrom debate how far off superhuman intelligence is, and whether it would actually pose a risk to mankind. A superintelligent machine would not necessarily be motivated by the same emotional desire to collect power that often drives human beings. However, a machine could be motivated to take over the world as a rational means toward attaining its ultimate goals; taking over the world would both increase its access to resources, and would help to prevent other agents from stopping the machine's plans. As an oversimplified example, a paperclip maximizer designed solely to create as many paperclips as possible would want to take over the world so that it can use all of the world's resources to create as many paperclips as possible, and, additionally, prevent humans from shutting it down or using those resources on things other than paperclips.

In fiction

AI takeover is a common theme in science fiction. Fictional scenarios typically differ vastly from those hypothesized by researchers in that they involve an active conflict between humans and an AI or robots with anthropomorphic motives that see humans as a threat or otherwise have an active desire to fight them, as opposed to the researchers' concern of an AI that rapidly exterminates humans as a byproduct of pursuing arbitrary goals. This theme is at least as old as Karel Čapek's R.U.R., which introduced the word robot to the global lexicon in 1921, and can even be glimpsed in Mary Shelley's Frankenstein (published in 1818), as Victor ponders whether, if he grants his monster's request and makes him a wife, they would reproduce and their kind would destroy humanity.

The word "robot" from R.U.R. comes from the Czech word robota, meaning laborer or serf. The 1920 play was a protest against the rapid growth of technology, featuring manufactured "robots" with increasing capabilities who eventually revolt. HAL 9000 (1968) and the original Terminator (1984) are two iconic examples of hostile AI in pop culture.

Contributing factors

Advantages of superhuman intelligence over humans

Nick Bostrom and others have expressed concern that an AI with the abilities of a competent artificial intelligence researcher would be able to modify its own source code and increase its own intelligence. If its self-reprogramming makes it even better at reprogramming itself, the result could be a recursive intelligence explosion in which it would rapidly leave human intelligence far behind.
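The feedback loop in this argument can be illustrated with a toy numerical model. It is only a sketch under invented assumptions: the function name `simulate_improvement`, the `gain` parameter, and the update rule (an improvement rate proportional to current capability) are hypothetical choices made for illustration, not a model proposed by Bostrom or anyone else, and the numbers carry no predictive meaning.

```python
def simulate_improvement(capability, gain, steps, recursive):
    """Toy model of repeated self-improvement (illustrative assumption only).

    recursive=False: each step improves capability by a fixed fraction `gain`.
    recursive=True:  the fraction itself scales with current capability, so
                     being better at improving makes the next step larger.
    """
    history = [capability]
    for _ in range(steps):
        rate = gain * capability if recursive else gain
        capability = capability * (1.0 + rate)
        history.append(capability)
    return history


# Hypothetical starting values chosen only to make the contrast visible.
fixed = simulate_improvement(capability=1.0, gain=0.1, steps=20, recursive=False)
feedback = simulate_improvement(capability=1.0, gain=0.1, steps=20, recursive=True)

print("fixed-rate growth after 20 steps:", fixed[-1])
print("self-reinforcing growth after 20 steps:", feedback[-1])
```

In this sketch the fixed-rate run grows exponentially, while the self-reinforcing run starts out almost identically and then accelerates sharply, which is the qualitative pattern the term "intelligence explosion" refers to.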

Bostrom defines a superintelligence as "any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest", and enumerates some advantages a superintelligence would have if it chose to compete against humans:

- Technology research: A machine with superhuman scientific research abilities would be able to beat the human research community to milestones such as nanotechnology or advanced biotechnology. If the advantage becomes sufficiently large (for example, due to a sudden intelligence explosion), an AI takeover becomes trivial. For example, a superintelligent AI might design self-replicating bots that initially escape detection by diffusing throughout the world at a low concentration. Then, at a prearranged time, the bots multiply into nanofactories that cover every square foot of the Earth, producing nerve gas or deadly target-seeking mini-drones.

- Strategizing: A superintelligence might be able to simply outwit human opposition.

- Social manipulation: A superintelligence might be able to recruit human support, or covertly incite a war between humans.

- Economic productivity: As long as a copy of the AI could produce more economic wealth than the cost of its hardware, individual humans would have an incentive to voluntarily allow the Artificial General Intelligence (AGI) to run a copy of itself on their systems.

- Hacking: A superintelligence could find new exploits in computers connected to the Internet, and spread copies of itself onto those systems, or might steal money to finance its plans.

Sources of AI advantage

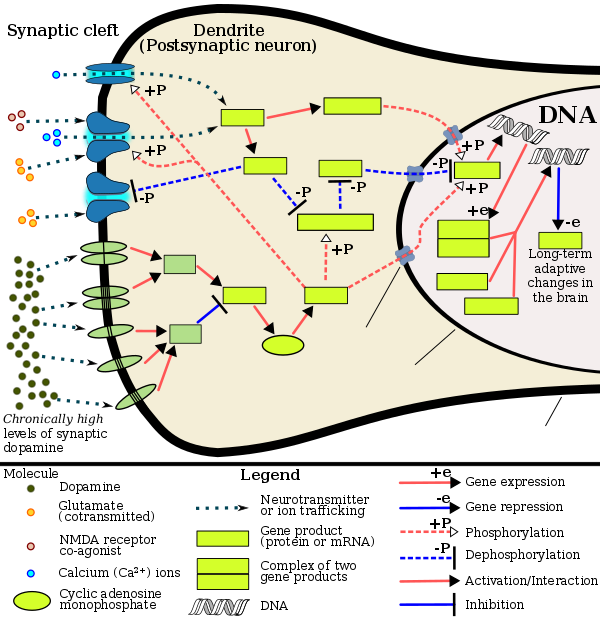

According to Bostrom, a computer program that faithfully emulates a human brain, or that otherwise runs algorithms as powerful as the human brain's algorithms, could still become a "speed superintelligence" if it can think many orders of magnitude faster than a human, due to being made of silicon rather than flesh, or due to optimization focusing on increasing the speed of the AGI. Biological neurons operate at about 200 Hz, whereas a modern microprocessor operates at a speed of about 2,000,000,000 Hz. Human axons carry action potentials at around 120 m/s, whereas computer signals travel near the speed of light.
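The size of the hardware gap implied by these figures can be worked out directly. The short sketch below uses the numbers quoted above; the constant and function names are illustrative, and taking the vacuum speed of light as the signal speed is an assumption that gives an upper bound, since real signals in wires and fibres are somewhat slower. The ratios compare raw component speeds only and say nothing about how fast a whole system would "think".

```python
import math

# Figures quoted in the text.
NEURON_HZ = 200.0            # approximate firing rate of a biological neuron
CPU_HZ = 2_000_000_000.0     # approximate clock rate of a modern microprocessor
AXON_M_PER_S = 120.0         # approximate action-potential speed in human axons

# Assumption: vacuum speed of light as an upper bound for "near the speed of light".
LIGHT_M_PER_S = 299_792_458.0


def orders_of_magnitude(fast, slow):
    """Return how many powers of ten separate two positive quantities."""
    return math.log10(fast / slow)


clock_ratio = CPU_HZ / NEURON_HZ
signal_ratio = LIGHT_M_PER_S / AXON_M_PER_S

print("clock ratio:", clock_ratio,
      "orders of magnitude:", orders_of_magnitude(CPU_HZ, NEURON_HZ))
print("signal ratio:", signal_ratio,
      "orders of magnitude:", orders_of_magnitude(LIGHT_M_PER_S, AXON_M_PER_S))
```

Under these assumptions the clock rates differ by a factor of ten million (seven orders of magnitude), and the signal speeds by a little over six orders of magnitude.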

A network of human-level intelligences designed to network together and share complex thoughts and memories seamlessly, able to collectively work as a giant unified team without friction, or consisting of trillions of human-level intelligences, would become a "collective superintelligence".

More broadly, any number of qualitative improvements to a human-level AGI could result in a "quality superintelligence", perhaps resulting in an AGI as far above us in intelligence as humans are above non-human apes. The number of neurons in a human brain is limited by cranial volume and metabolic constraints, while the number of processors in a supercomputer can be indefinitely expanded. An AGI need not be limited by human constraints on working memory, and might therefore be able to intuitively grasp more complex relationships than humans can. An AGI with specialized cognitive support for engineering or computer programming would have an advantage in these fields, compared with humans who evolved no specialized mental modules to specifically deal with those domains. Unlike humans, an AGI can spawn copies of itself and tinker with its copies' source code to attempt to further improve its algorithms.

Possibility of unfriendly AI preceding friendly AI

Is strong AI inherently dangerous?

A significant problem is that unfriendly artificial intelligence is likely to be much easier to create than friendly AI. While both require large advances in recursive optimization process design, friendly AI also requires the ability to make goal structures invariant under self-improvement (or the AI could transform itself into something unfriendly) and a goal structure that aligns with human values and does not automatically destroy the entire human race. An unfriendly AI, on the other hand, can optimize for an arbitrary goal structure, which does not need to be invariant under self-modification.

The sheer complexity of human value systems makes it very difficult to make an AI's motivations human-friendly. Unless moral philosophy provides us with a flawless ethical theory, an AI's utility function could allow for many potentially harmful scenarios that conform with a given ethical framework but not with "common sense". According to Eliezer Yudkowsky, there is little reason to suppose that an artificially designed mind would have such an adaptation.

Odds of conflict

Many scholars, including evolutionary psychologist Steven Pinker, argue that a superintelligent machine is likely to coexist peacefully with humans.

The fear of cybernetic revolt is often based on interpretations of humanity's history, which is rife with incidents of enslavement and genocide. Such fears stem from a belief that competitiveness and aggression are necessary in any intelligent being's goal system. However, such human competitiveness stems from the evolutionary background to our intelligence, where the survival and reproduction of genes in the face of human and non-human competitors was the central goal. According to AI researcher Steve Omohundro, an arbitrary intelligence could have arbitrary goals: there is no particular reason that an artificially intelligent machine (not sharing humanity's evolutionary context) would be hostile (or friendly) unless its creator programs it to be such and it is neither inclined to modify its programming nor capable of doing so. But the question remains: if AI systems could interact and evolve (evolution in this context means self-modification or selection and reproduction) and needed to compete over resources, would that create goals of self-preservation? An AI's goal of self-preservation could conflict with some goals of humans.

Many scholars dispute the likelihood of unanticipated cybernetic revolt as depicted in science fiction such as The Matrix, arguing that any artificial intelligence powerful enough to threaten humanity would more likely be programmed not to attack it. Pinker acknowledges the possibility of deliberate "bad actors", but states that in the absence of bad actors, unanticipated accidents are not a significant threat; Pinker argues that a culture of engineering safety will prevent AI researchers from unleashing malign superintelligence by accident. In contrast, Yudkowsky argues that humanity is less likely to be threatened by deliberately aggressive AIs than by AIs which were programmed such that their goals are unintentionally incompatible with human survival or well-being (as in the film I, Robot and in the short story "The Evitable Conflict"). Omohundro suggests that present-day automation systems are not designed for safety and that AIs may blindly optimize narrow utility functions (say, playing chess at all costs), leading them to seek self-preservation and elimination of obstacles, including humans who might turn them off.

Precautions

The AI control problem is the issue of how to build a superintelligent agent that will aid its creators, and avoid inadvertently building a superintelligence that will harm its creators. Some scholars argue that solutions to the control problem might also find applications in existing non-superintelligent AI.

Major approaches to the control problem include alignment, which aims to align AI goal systems with human values, and capability control, which aims to reduce an AI system's capacity to harm humans or gain control. An example of "capability control" is to research whether a superintelligent AI could be successfully confined in an "AI box". According to Bostrom, such capability control proposals are not reliable or sufficient to solve the control problem in the long term, but may potentially act as valuable supplements to alignment efforts.

Warnings

Physicist Stephen Hawking, Microsoft founder Bill Gates and SpaceX founder Elon Musk have expressed concerns about the possibility that AI could develop to the point that humans could not control it, with Hawking theorizing that this could "spell the end of the human race". Stephen Hawking said in 2014 that "Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks." Hawking believed that in the coming decades, AI could offer "incalculable benefits and risks" such as "technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand." In January 2015, Nick Bostrom joined Stephen Hawking, Max Tegmark, Elon Musk, Lord Martin Rees, Jaan Tallinn, and numerous AI researchers, in signing the Future of Life Institute's open letter speaking to the potential risks and benefits associated with artificial intelligence. The signatories

…believe that research on how to make AI systems robust and beneficial is both important and timely, and that there are concrete research directions that can be pursued today.