The digital divide is the unequal access to digital technology, including smartphones, tablets, laptops, and the internet. The digital divide worsens inequality around access to information and resources. In the Information Age, people without access to the Internet and other technology are at a disadvantage, for they are unable or less able to connect with others, find and apply for jobs, shop, and learn.

People who are homeless, living in poverty, elderly, or living in rural communities may have limited access to the Internet; in contrast, urban middle- and upper-class people have easy access to it. Another divide is between producers and consumers of Internet content, which could be a result of educational disparities. While social media use varies across age groups, a 2010 US study reported no racial divide in social media use.

History

The historical roots of the digital divide in America refer to the increasing gap that occurred during the early modern period between those who could and could not access the real-time forms of calculation, decision-making, and visualization offered via written and printed media. Within this context, ethical discussions regarding the relationship between education and the free distribution of information were raised by thinkers such as Immanuel Kant, Mary Wollstonecraft, and Jean-Jacques Rousseau (1712–1778). Rousseau advocated that governments should intervene to ensure that any society's economic benefits are fairly and meaningfully distributed. Amid the Industrial Revolution in Great Britain, Rousseau's idea helped to justify poor laws that created a safety net for those who were harmed by new forms of production. Later, when telegraph and postal systems evolved, many used Rousseau's ideas to argue for full access to those services, even if it meant subsidizing hard-to-serve citizens. Thus, "universal services" referred to innovations in regulation and taxation that would allow phone companies such as AT&T in the United States to serve hard-to-serve rural users. In 1996, as telecommunications companies merged with Internet companies, the Telecommunications Act of 1996 was adopted, and the Federal Communications Commission considered regulatory strategies and taxation policies to close the digital divide. Though the term "digital divide" was coined among consumer groups that sought to tax and regulate information and communications technology (ICT) companies to close the digital divide, the topic soon moved onto a global stage. The focus was the World Trade Organization, which passed a Telecommunications Services Act that resisted regulation requiring ICT companies to serve hard-to-serve individuals and communities.
In 1999, to assuage anti-globalization forces, the WTO hosted the "Financial Solutions to Digital Divide" event in Seattle, US, co-organized by Craig Warren Smith of the Digital Divide Institute and Bill Gates Sr., the chairman of the Bill and Melinda Gates Foundation. It catalyzed a full-scale global movement to close the digital divide, which quickly spread to all sectors of the global economy. In 2000, US President Bill Clinton mentioned the term in his State of the Union Address.

During the COVID-19 pandemic

At the outset of the COVID-19 pandemic, governments worldwide issued stay-at-home orders that established lockdowns, quarantines, restrictions, and closures. The resulting interruptions to schooling, public services, and business operations forced nearly half of the world's population to seek alternative ways of living in isolation. These included telemedicine, virtual classrooms, online shopping, technology-based social interaction, and remote work, all of which require high-speed or broadband internet access and digital technologies. A Pew Research Center study reports that 90% of Americans described the Internet as "essential" during the pandemic. The accelerated use of digital technologies created a landscape in which the ability, or inability, to access digital spaces became a crucial factor in everyday life.

According to the Pew Research Center, 59% of children from lower-income families were likely to face digital obstacles in completing school assignments. These obstacles included having to use a cellphone to complete homework, having to use public Wi-Fi because of unreliable internet service at home, and lacking a computer at home. This difficulty, known as the homework gap, affects more than 30% of K-12 students living below the poverty threshold and disproportionately affects American Indian/Alaska Native, Black, and Hispanic students. Such interruptions, or privilege gaps, in education exemplify the systemic marginalization of historically oppressed groups in primary education. The pandemic exposed inequities that caused discrepancies in learning.

A lack of "tech readiness", that is, confident and independent use of devices, was reported among the US elderly population, with more than 50% reporting inadequate knowledge of devices and more than one-third reporting a lack of confidence. Moreover, according to a UN research paper, similar results can be found across various Asian countries, with people above the age of 74 reporting lower and more confused use of digital devices. This aspect of the digital divide became especially consequential during the pandemic, as healthcare providers increasingly relied on telemedicine to manage chronic and acute health conditions.

Aspects

There are various definitions of the digital divide, each with a slightly different emphasis, as evidenced by related concepts such as digital inclusion, digital participation, digital skills, media literacy, and digital accessibility.

Infrastructure

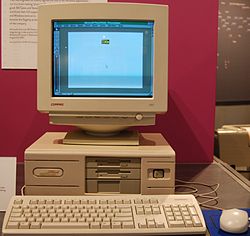

The infrastructure by which individuals, households, businesses, and communities connect to the Internet comprises the physical devices people use to get online, such as desktop computers, laptops, basic mobile phones or smartphones, iPods or other MP3 players, gaming consoles such as Xbox or PlayStation, electronic book readers, and tablets such as iPads.

Traditionally, the nature of the divide has been measured in terms of the existing numbers of subscriptions and digital devices. Given the increasing number of such devices, some have concluded that the digital divide among individuals has increasingly been closing as the result of a natural and almost automatic process. Others point to persistent lower levels of connectivity among women, racial and ethnic minorities, people with lower incomes, rural residents, and less educated people as evidence that addressing inequalities in access to and use of the medium will require much more than the passing of time. Recent studies have measured the digital divide not in terms of technological devices, but in terms of the existing bandwidth per individual (in kbit/s per capita).
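Because the bandwidth-based measure is a simple ratio, it can be sketched in a few lines. The following minimal Python sketch computes installed bandwidth per capita (kbit/s per person). It then summarizes how unequally that bandwidth is spread across people using a population-weighted Gini coefficient. Several elements are assumptions for illustration and are not taken from the studies mentioned here: the choice of the Gini coefficient, the hypothetical `Country` type, the function names, and the sample figures.

```python
from dataclasses import dataclass


@dataclass
class Country:
    name: str
    population: int        # number of people
    bandwidth_kbps: float  # total installed bandwidth in kbit/s


def per_capita(country: Country) -> float:
    """Installed bandwidth per person, in kbit/s per capita."""
    return country.bandwidth_kbps / country.population


def weighted_gini(countries: list[Country]) -> float:
    """Population-weighted Gini coefficient of per-capita bandwidth.

    0 means every person has the same bandwidth; values closer to 1 mean
    bandwidth is concentrated among a small share of the population.
    """
    ordered = sorted(countries, key=per_capita)  # least-connected first
    total_pop = sum(c.population for c in ordered)
    total_bw = sum(c.bandwidth_kbps for c in ordered)
    lorenz_area = 0.0   # area under the Lorenz curve
    cum_bw_share = 0.0  # cumulative share of bandwidth so far
    for c in ordered:
        pop_share = c.population / total_pop
        bw_share = c.bandwidth_kbps / total_bw
        # Trapezoid between the previous and the new cumulative bandwidth share.
        lorenz_area += pop_share * (2 * cum_bw_share + bw_share) / 2
        cum_bw_share += bw_share
    return 1 - 2 * lorenz_area


# Hypothetical figures, not real country data.
sample = [
    Country("A", 50_000_000, 2.0e9),
    Country("B", 10_000_000, 8.0e9),
]
for c in sample:
    print(f"{c.name}: {per_capita(c):.1f} kbit/s per capita")
print(f"Gini coefficient: {weighted_gini(sample):.3f}")
```

With these hypothetical figures, country B has 20 times more bandwidth per person than country A, and the Gini coefficient is about 0.63. A value of 0 would mean every person had the same bandwidth. Tracking such a coefficient over time is one way to see whether a new technology narrows or reopens the divide.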

As shown in the figure, the digital divide in kbit/s is not monotonically decreasing but reopens with each new innovation. For example, "the massive diffusion of narrow-band Internet and mobile phones during the late 1990s" increased digital inequality, and "the initial introduction of broadband DSL and cable modems during 2003–2004 increased levels of inequality". During the mid-2000s, communication capacity was more unequally distributed than during the late 1980s, when only fixed-line phones existed. The most recent increase in digital inequality stems from the massive diffusion of the latest digital innovations (i.e. fixed and mobile broadband infrastructures, e.g. 5G and fiber-to-the-home (FTTH)). Several measurement methodologies are used to analyze the gap between developed and developing countries and the gap among the 27 member states of the European Union. These include an Integrated Iterative Approach General Framework (Integrated Contextual Iterative Approach, ICI) and digital divide modeling theory under the Digital Divide Gap (DDG) measurement model. The Good Things Foundation, a UK non-profit organisation, collates data on the extent and impact of the digital divide in the UK and lobbies the government to address digital exclusion.

Skills and digital literacy

Research from 2001 showed that the digital divide is more than just an access issue and cannot be alleviated merely by providing the necessary equipment. There are at least three factors at play: information accessibility, information utilization, and information receptiveness. Beyond accessibility, the digital divide consists of society's lack of knowledge of how to make use of information and communication tools once they exist within a community. Information professionals can help bridge the gap by providing reference and information services that help individuals learn and use the technologies to which they do have access, regardless of their economic status.

Location

One can connect to the internet in a variety of locations, such as homes, offices, schools, libraries, public spaces, and Internet cafes. Levels of connectivity often vary between rural, suburban, and urban areas.

In 2017, the Wireless Broadband Alliance published the white paper The Urban Unconnected. It highlighted that in the eight countries with the world's highest GNP, about 1.75 billion people had no internet connection, and one third of them lived in major urban centers. Delhi (5.3 million, 9% of the total population), São Paulo (4.3 million, 36%), New York (1.6 million, 19%), and Moscow (2.1 million, 17%) registered the highest percentages of citizens without internet access of any type.

As of 2021, only about half of the world's population had access to the internet, leaving 3.7 billion people offline. Most of them live in developing countries, and a large portion of them are women. Governments also differ in their policies on privacy, data governance, freedom of speech, and many other factors, and government restrictions make it challenging for technology companies to provide services in certain countries. As a result, connectivity varies widely across regions: Europe has the highest percentage of its population online, while Africa has the lowest. From 2010 to 2014, the share of people online rose from 67% to 75% in Europe and from 10% to 19% in Africa.

Network speeds play a large role in the quality of an internet connection. Large cities and towns may have better access to high-speed internet than rural areas, which may have limited or no service. Households can be locked into a specific service provider, since it may be the only carrier that offers service in the area. This applies to regions with developed networks, like the United States. It also applies to developing countries, where very large areas have virtually no coverage. Consumers in those areas can do very little, since the issue is mainly one of infrastructure. Satellite internet services such as Starlink are becoming more common, but they are still not available in many regions.

Depending on location, a connection may be so slow as to be virtually unusable, solely because a network provider has limited infrastructure in the area. For example, downloading 5 GB of data might take about 8 minutes in Taiwan but about 30 hours in Yemen.
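The gap between those two download times is easier to grasp as an average connection speed. The following minimal Python sketch makes two assumptions: decimal units (1 GB = 8,000 megabits) and an idealized transfer with no protocol overhead or congestion. The function names are illustrative and not part of any library. The sketch converts the download times quoted above into the average throughput they imply.

```python
MEGABITS_PER_GB = 8_000  # decimal units: 1 GB = 1,000 MB = 8,000 megabits


def download_seconds(size_gb: float, speed_mbps: float) -> float:
    """Idealized time to download size_gb at a constant speed_mbps."""
    return size_gb * MEGABITS_PER_GB / speed_mbps


def implied_speed_mbps(size_gb: float, seconds: float) -> float:
    """Average speed needed to download size_gb in the given number of seconds."""
    return size_gb * MEGABITS_PER_GB / seconds


taiwan = implied_speed_mbps(5, 8 * 60)    # 5 GB in about 8 minutes
yemen = implied_speed_mbps(5, 30 * 3600)  # 5 GB in about 30 hours
print(f"Taiwan: ~{taiwan:.1f} Mbit/s, Yemen: ~{yemen:.2f} Mbit/s")
print(f"Taiwan's implied speed is ~{taiwan / yemen:.0f} times Yemen's")
```

Under these assumptions, the quoted times correspond to roughly 83 Mbit/s versus 0.37 Mbit/s, a ratio of about 225. The same `download_seconds` helper shows, for example, that a 5 GB file takes about 4.4 minutes at 150 Mbit/s but about 11 minutes at 60 Mbit/s.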

From 2020 to 2022, average download speeds in the EU climbed from 70 Mbps to more than 120 Mbps, owing mostly to the demand for digital services during the pandemic. There is still a large rural-urban disparity in internet speeds, with metropolitan areas in France and Denmark reaching rates of more than 150 Mbps, while many rural areas in Greece, Croatia, and Cyprus have speeds of less than 60 Mbps.

The EU aims for complete gigabit coverage by 2030; however, as of 2022, only just over 60% of Europe had high-speed internet infrastructure, signalling the need for further improvements.

Applications

Common Sense Media, a nonprofit group based in San Francisco, surveyed almost 1,400 parents and reported in 2011 that 47 percent of families with incomes more than $75,000 had downloaded apps for their children, while only 14 percent of families earning less than $30,000 had done so.

Reasons and correlating variables

As of 2014, the digital divide was known to exist for a number of reasons. Obtaining access to ICTs and using them actively has been linked to several demographic and socio-economic characteristics: income, education, race, gender, geographic location (urban-rural), age, skills, awareness, and political, cultural, and psychological attitudes. Multiple regression analysis across countries has identified income levels and educational attainment as the most powerful explanatory variables for ICT access and usage. Evidence was found that Caucasians are much more likely than non-Caucasians to own a computer and to have Internet access at home. As for geographic location, people living in urban centers have more access to and make more use of computer services than those in rural areas.

In developing countries, a digital divide between women and men is apparent in tech usage, with men more likely to be competent tech users. Controlled statistical analysis has shown that income, education, and employment act as confounding variables, and that women with the same level of income, education, and employment actually embrace ICT more than men (see Women and ICT4D). This argues against any suggestion that women are "naturally" more technophobic or less tech-savvy. However, each nation has its own set of causes for the digital divide. For example, the digital divide in Germany is unusual in that it is not largely due to differences in the quality of infrastructure.

The correlation between income and internet use suggests that the digital divide persists at least in part due to income disparities. Most commonly, a digital divide stems from poverty and the economic barriers that limit resources and prevent people from obtaining or otherwise using newer technologies.

In research, each explanation is examined while the others are controlled to eliminate interaction effects or mediating variables. These explanations are meant to describe general trends, not direct causes. Measurements of usage intensity, such as incidence and frequency, vary by study. Some report usage as access to the Internet and ICTs, while others report usage as having previously connected to the Internet. Some studies focus on specific technologies, others on a combination (such as Infostate, proposed by Orbicom-UNESCO, the Digital Opportunity Index, or ITU's ICT Development Index).

Economic gap in the United States

During the mid-1990s, the United States Department of Commerce's National Telecommunications and Information Administration (NTIA) began publishing reports about the Internet and access to and usage of the resource. The first of three reports was titled "Falling Through the Net: A Survey of the 'Have Nots' in Rural and Urban America" (1995), the second "Falling Through the Net II: New Data on the Digital Divide" (1998), and the final report "Falling Through the Net: Defining the Digital Divide" (1999). The NTIA's final report attempted to define the term digital divide clearly as "the divide between those with access to new technologies and those without". After the NTIA reports were published, much of the early relevant literature referenced the NTIA's definition of the digital divide. The digital divide is commonly defined as being between the "haves" and "have-nots".

The U.S. Federal Communications Commission's (FCC) 2019 Broadband Deployment Report indicated that 21.3 million Americans did not have access to wired or wireless broadband internet. As of 2020, BroadbandNow, an independent research company studying access to internet technologies, estimated that the actual number of Americans without high-speed internet was twice that number. According to a 2021 Pew Research Center report, smartphone ownership and internet use have increased for all Americans; however, a significant gap remains between lower- and higher-income households. U.S. households earning $100K or more are twice as likely to own multiple devices and have home internet service as those earning between $30K and $100K, and three times as likely as those earning less than $30K per year. The same research indicated that 13% of the lowest-income households had no access to internet or digital devices at home, compared with only 1% of the highest-income households.

According to a Pew Research Center survey of U.S. adults conducted from January 25 to February 8, 2021, the digital lives of Americans with high and low incomes differ. At the same time, the proportion of Americans who use home internet or cell phones remained constant between 2019 and 2021. About a quarter of those earning under $30,000 a year (24%) say they do not own a smartphone. Four in ten low-income adults do not have home internet access (43%) or a computer (43%). Furthermore, most lower-income Americans do not own a tablet.

On the other hand, these technologies are nearly universal among people earning $100,000 or more per year, and Americans with higher household incomes are more likely to own a variety of internet-connected devices. Around six in ten households earning $100,000 or more per year have home Wi-Fi, a smartphone, a computer, and a tablet, compared with 23 percent of lower-income households.

Racial gap in the United States

Although many groups in society are affected by a lack of access to computers or the Internet, communities of color are particularly affected by the digital divide. Pew research shows that as of 2021, home broadband rates were 81% for White households, 71% for Black households, and 65% for Hispanic households. While 63% of Black adults consider the lack of broadband to be a disadvantage, only 49% of White adults do. Smartphone and tablet ownership is similar across these groups, with about 8 in 10 Black, White, and Hispanic individuals reporting owning a smartphone and about half owning a tablet. A 2021 survey found that a quarter of Hispanics rely on their smartphone and do not have access to broadband.

Physical and mental disability gap

Inequities in access to information technologies exist between individuals living with a physical disability and those without a disability. In 2011, according to the Pew Research Center, 54% of households with a person who had a disability had home Internet access, compared with 81% of households without a person with a disability. Some disabilities, such as quadriplegia or impairments of the hands, can prevent people from interacting with computer and smartphone screens. Access to technology and home Internet is also lacking among people with cognitive and auditory disabilities. One concern is whether the increasing use of information technologies will promote equality by offering opportunities to people living with disabilities. The alternative is that it will only add to existing inequalities and leave people with disabilities behind. Several issues have been found to contribute to the impact of the digital divide on individuals with disabilities: the perception of disabilities in society, national and regional government policy, corporate policy, mainstream computing technologies, and real-time online communication. In 2022, a survey of people in the UK with severe mental illness found that 42% lacked basic digital skills, such as changing passwords or connecting to Wi-Fi.

People with disabilities are also targets of online abuse. Online disability hate crimes increased by 33% across the UK between 2016–17 and 2017–18, according to a report published by Leonard Cheshire, a health and welfare charity. Online abuse towards people with disabilities drew attention in 2019, when model Katie Price's son was targeted with online abuse attributed to his disability. In response, Price launched a campaign to ensure that Britain's MPs held accountable those who perpetrate online abuse towards people with disabilities. Online abuse can discourage people with disabilities from engaging online, which could prevent them from finding information that could improve their lives. Many individuals living with disabilities face online abuse in the form of accusations of benefit fraud and of "faking" their disability for financial gain, which in some cases leads to unnecessary investigations.

Gender gap

Due to the rapidly declining price of connectivity and hardware, skills deficits have eclipsed barriers of access as the primary contributor to the gender digital divide. Studies show that women are less likely to know how to leverage devices and Internet access to their full potential, even when they do use digital technologies. In rural India, for example, a study found that the majority of women who owned mobile phones only knew how to answer calls. They could not dial numbers or read messages without assistance from their husbands, due to a lack of literacy and numeracy skills. A survey of 3,000 respondents across 25 countries found that adolescent boys with mobile phones used them for a wider range of activities, such as playing games and accessing financial services online. Adolescent girls in the same study tended to use just the basic functionalities of their phone, such as making calls and using the calculator. Similar trends can be seen even in areas where Internet access is near-universal. A survey of women in nine cities around the world revealed that although 97% of women were using social media, only 48% of them were expanding their networks, and only 21% of Internet-connected women had searched online for information related to health, legal rights or transport. In some cities, less than one quarter of connected women had used the Internet to look for a job.

Studies show that despite strong performance in computer and information literacy (CIL), girls lack confidence in their ICT abilities. According to the International Computer and Information Literacy Study (ICILS) assessment, girls' self-efficacy scores for advanced ICT tasks were lower than boys'. These scores measure perceived rather than actual abilities.

A paper published by J. Cooper of Princeton University points out that learning technology is designed to be more receptive to men than to women. Overall, the study attributes the problem to gendered socialization patterns that treat computers as part of the male experience, since computers have traditionally been presented as toys for boys in childhood. The divide persists as children grow older, and girls are not encouraged as much to pursue degrees in IT and computer science. In 1990, women held 36% of computing jobs; by 2016, this share had fallen to 25%. This can be seen in the underrepresentation of women in IT hubs such as Silicon Valley.

Algorithmic bias has also been found in machine learning systems deployed by major companies. In 2015, Amazon had to abandon a recruiting algorithm whose ratings of candidates for software developer and other technical jobs differed systematically by gender. The algorithm was found to be biased against women and to favor male resumes over female resumes. This happened because Amazon's models were trained on patterns in resumes submitted over a 10-year period. During that period most resumes came from men, a reflection of male dominance across the tech industry.

Age gap

The age gap contributes to the digital divide because people born before 1983 did not grow up with the internet. According to Marc Prensky, people in this age range are classified as "digital immigrants." A digital immigrant is defined as "a person born or brought up before the widespread use of digital technology." January 1, 1983, when ARPANET switched to the TCP/IP protocols, is often cited as the birth of the internet; anyone born before then has had to adapt to the new age of technology. By contrast, people born after 1983 are considered "digital natives", defined as people born or brought up during the age of digital technology.

Across the globe, there is a 10-percentage-point difference in internet usage between people aged 15–24 and people aged 25 or older. According to the International Telecommunication Union (ITU), 75% of people aged 15–24 used the internet in 2022, compared with 65% of people aged 25 or older. The largest generational gap occurs in Africa, where 55% of the younger age group uses the internet compared with 36% of people aged 25 or older. The smallest gap occurs in the Commonwealth of Independent States, where 91% of the younger age group uses the internet compared with 83% of people aged 25 or older.

In addition to being less connected to the internet, older generations are less likely to use financial technology, also known as fintech. Fintech refers to any way of managing money via digital devices. Examples include digital payment apps such as Venmo and Apple Pay, tax services such as TurboTax, and digital mortgage applications. According to World Bank Findex data, 40% of people younger than 40 used fintech, compared with less than 25% of people aged 60 or older.

Global level

The divide between countries or regions of the world is referred to as the global digital divide, which examines the technological gap between developing and developed countries. The divide within countries (such as the digital divide in the United States) may refer to inequalities between individuals, households, businesses, or geographic areas, usually at different socioeconomic levels or in other demographic categories. In contrast, the global digital divide describes disparities in access to computing and information resources, and in the opportunities derived from such access. As the internet rapidly expands, it is difficult for developing countries to keep up with the constant changes. In 2014, only three countries (China, the US, and Japan) hosted 50% of the globally installed bandwidth potential. This concentration is not new, as historically only ten countries have hosted 70–75% of global telecommunication capacity (see Figure). The U.S. lost its global leadership in installed bandwidth to China in 2011. By 2014, China hosted more than twice as much national bandwidth potential as the U.S. (29% versus 13% of the global total).

Some zero-rating programs such as Facebook Zero offer free/subsidized data access to certain websites. Critics object that this is an anti-competitive program that undermines net neutrality and creates a "walled garden". A 2015 study reported that 65% of Nigerians, 61% of Indonesians, and 58% of Indians agree with the statement that "Facebook is the Internet" compared with only 5% in the US.

Implications

Social capital

Once an individual is connected, Internet connectivity and ICTs can enhance his or her future social and cultural capital. Social capital is acquired through repeated interactions with other individuals or groups of individuals. Connecting to the Internet creates another set of means by which to achieve repeated interactions. ICTs and Internet connectivity enable repeated interactions through access to social networks, chat rooms, and gaming sites. Once an individual has access to connectivity, obtains infrastructure by which to connect, and can understand and use the information that ICTs and connectivity provide, that individual is capable of becoming a "digital citizen."

Economic disparity

In the United States, research by SunGard Availability Services notes a direct correlation between a company's access to technological advancements and its overall success in bolstering the economy. The study surveyed over 2,000 IT executives and staff officers. It indicates that 69 percent of employees feel they lack sufficient technology to make their jobs easier, while 63 percent believe the lack of technological mechanisms hinders their ability to develop new work skills. Additional analysis shows how the digital divide also affects economies around the world. A BCG report suggests that in countries like Sweden, Switzerland, and the U.K., digital connection among communities is easier, allowing their populations to obtain a much larger share of the economy through digital business. Populations in these places hold shares approximately 2.5 percentage points higher. At a United Nations meeting, a Bangladeshi representative expressed concern that poor and undeveloped countries would be left behind due to a lack of funds to bridge the digital gap.

Education

The digital divide impacts children's ability to learn and grow in low-income school districts. Without Internet access, students are unable to cultivate the technological skills needed to understand today's dynamic economy. The need for the internet starts while children are in school, for matters such as school portal access, homework submission, and assignment research. The Federal Communications Commission's Broadband Task Force created a report showing that about 70% of teachers assign homework that demands access to broadband. Approximately 65% of students use the Internet at home to complete assignments and to connect with teachers and other students via discussion boards and shared files. A recent study indicates that approximately 50% of students say they are unable to finish their homework because they cannot connect to the Internet or, in some cases, find a computer. Additionally, the Public Policy Institute of California reported in 2023 that 27% of the state's school children lack the broadband needed to attend school remotely, and 16% have no internet connection at all.

Moreover, 42% of students say they received a lower grade because of this disadvantage. According to research conducted by the Center for American Progress, "if the United States were able to close the educational achievement gaps between native-born white children and black and Hispanic children, the U.S. economy would be 5.8 percent—or nearly $2.3 trillion—larger in 2050".

Conversely, well-off families, especially tech-savvy parents in Silicon Valley, carefully limit their own children's screen time. The children of wealthy families attend play-based preschool programs that emphasize social interaction instead of time spent in front of computers or other digital devices, and their parents pay to send them to schools that limit screen time. American families that cannot afford high-quality childcare are more likely to use tablet computers filled with children's apps as a cheap replacement for a babysitter, and the government-run schools their children attend encourage screen time. Students in school are also learning about the digital divide.

To reduce the impact of the digital divide and increase digital literacy in young people from an early age, governments have begun to focus policy on embedding digital literacies in both student and educator programs, for instance in Initial Teacher Training programs in Scotland. The National Framework for Digital Literacies in Initial Teacher Education was developed by representatives from Higher Education institutions that offer Initial Teacher Education (ITE) programs. They worked in conjunction with the Scottish Council of Deans of Education (SCDE) and with the support of the Scottish Government. This policy-driven approach has several aims: to establish an academic grounding in the exploration of learning and teaching digital literacies and their impact on pedagogy, to ensure that educators are equipped to teach in the rapidly evolving digital environment, and to support their continued professional development.

Demographic differences

Factors such as nationality, gender, and income contribute to the digital divide across the globe. Depending on these characteristics, a person's likelihood of having internet access can be lower. According to a 2022 ITU study, Africa has the lowest share of people online, at 40%; the next lowest is the Asia-Pacific region at 64%. Internet access remains a problem in Least Developed Countries and Landlocked Developing Countries, where 36% of people use the internet, compared with a global average of 66%.

Men generally have more access to the internet than women around the world. The global gender parity score is 0.92. A gender parity score is calculated by dividing the percentage of women who use the internet by the percentage of men who use the internet. Ideally, countries aim for gender parity scores between 0.98 and 1.02. The region with the least gender parity is Africa, with a score of 0.75, followed by the Arab States at 0.87. The Americas, the Commonwealth of Independent States, and Europe have the highest gender parity scores, all no lower than 0.98 and no higher than 1. Gender parity scores also vary with income: low-income regions have a score of 0.65, while upper-middle-income and high-income regions have a score of 0.99.
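The gender parity score described above is a simple ratio. The Python sketch below computes it and checks it against the 0.98 to 1.02 parity band; the function names and the sample percentages are hypothetical illustrations, not ITU data or ITU methodology code.

```python
def gender_parity_score(women_pct: float, men_pct: float) -> float:
    """Share of women online divided by share of men online (both in percent)."""
    if men_pct <= 0:
        raise ValueError("men_pct must be positive")
    return women_pct / men_pct


def is_at_parity(score: float, low: float = 0.98, high: float = 1.02) -> bool:
    """True if the score falls inside the parity band (bounds inclusive)."""
    return low <= score <= high


# Hypothetical example: 60% of women and 75% of men use the internet.
score = gender_parity_score(60.0, 75.0)
print(round(score, 2), is_at_parity(score))  # 0.8 False
```

A score below the band means women are less likely than men to be online; a score above it means the reverse.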

The difference between economic classes has been a persistent feature of the digital divide. Among people classified as low income, 26% use the internet, compared with 56% for lower-middle income, 79% for upper-middle income, and 92% for high income. The stark difference between low-income and high-income individuals can be traced to the affordability of mobile products. Products are becoming more affordable over time; according to the ITU, “the global median price of mobile-broadband services dropped from 1.9 percent to 1.5 percent of average gross national income (GNI) per capita.” Much work remains, however: the gap in internet use between low-income and high-income individuals is 66 percentage points.
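The income gap of 66 quoted above is a difference in percentage points between two usage rates, not a percentage change. The Python sketch below, using only the income-group rates given in this section, shows the distinction; the variable and function names are illustrative.

```python
# Internet-use rates by income group, as quoted in the text (percent of people online).
usage_by_income = {
    "low": 26,
    "lower-middle": 56,
    "upper-middle": 79,
    "high": 92,
}


def point_gap(a: float, b: float) -> float:
    """Absolute gap between two rates, in percentage points."""
    return abs(a - b)


def relative_ratio(a: float, b: float) -> float:
    """How many times larger rate a is than rate b."""
    return a / b


high, low = usage_by_income["high"], usage_by_income["low"]
print(point_gap(high, low))                 # 66
print(round(relative_ratio(high, low), 1))  # 3.5
```

In relative terms, the share of people online in the high-income group is roughly 3.5 times the share in the low-income group.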

Facebook divide

The Facebook divide, a concept derived from the "digital divide", refers to disparities in access to, use of, and impact of Facebook on society. The term was coined at the International Conference on Management Practices for the New Economy (ICMAPRANE-17) on February 10–11, 2017.

The related concepts of Facebook natives and Facebook immigrants were also proposed at the conference. The Facebook divide, Facebook natives, Facebook immigrants, and the Facebook left-behind are concepts for social and business management research. Facebook immigrants use Facebook to accumulate both bonding and bridging social capital. Together, the divisions among Facebook natives, Facebook immigrants, and the Facebook left-behind give rise to Facebook inequality. In February 2018, the Facebook Divide Index was introduced at the ICMAPRANE conference in Noida, India, to illustrate the Facebook divide phenomenon.

Solutions

In 2000, the United Nations Volunteers (UNV) program launched its Online Volunteering service, which uses ICT as a vehicle for and in support of volunteering. It is an example of a volunteering initiative that effectively contributes to bridging the digital divide. ICT-enabled volunteering adds clear value to development: if more people collaborate online with more development institutions and initiatives, the person-hours dedicated to development cooperation will increase at essentially no additional cost. This is the most visible effect of online volunteering for human development.

Since 2006, the United Nations has raised awareness of the divide through World Information Society Day, observed on May 17. In 2001, it set up the Information and Communications Technology (ICT) Task Force. Later UN initiatives in this area include the World Summit on the Information Society (since 2003) and the Internet Governance Forum, set up in 2006.

As of 2009, the borderline between ICT as a necessity good and ICT as a luxury good was roughly US$10 per person per month, or US$120 per year, meaning that people regard ICT expenditure of about US$120 per year as a basic necessity. Since more than 40% of the world population lives on less than US$2 per day, and around 20% on less than US$1 per day (less than US$365 per year), these income segments would have to spend a large share of their income on ICT: about one third at US$1 per day (120/365 ≈ 33%) and about one sixth at US$2 per day (120/730 ≈ 16%). By comparison, the global average of ICT spending is a mere 3% of income. Potential solutions include driving down the costs of ICT through low-cost technologies and shared access via Telecentres.
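The affordability argument reduces to dividing a fixed yearly ICT budget by yearly income. The Python sketch below reproduces that arithmetic using the US$120 threshold and the daily income levels from the text; it assumes a 365-day year, and the constant and function names are illustrative.

```python
ICT_NECESSITY_PER_YEAR = 120.0  # US$ per person per year, the 2009 threshold in the text
DAYS_PER_YEAR = 365             # assumption: the daily income is earned every day of the year


def ict_share_of_income(daily_income_usd: float,
                        ict_cost_per_year: float = ICT_NECESSITY_PER_YEAR) -> float:
    """Fraction of yearly income needed to cover the yearly ICT cost."""
    yearly_income = daily_income_usd * DAYS_PER_YEAR
    return ict_cost_per_year / yearly_income


for daily in (1.0, 2.0):
    share = ict_share_of_income(daily)
    print(f"US${daily:.0f}/day -> {share:.0%} of income")
# US$1/day -> 33% of income
# US$2/day -> 16% of income
```

Because these segments live on *less than* the stated daily amounts, the computed shares are lower bounds for the people in them.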

In 2022, the US Federal Communications Commission started a proceeding "to prevent and eliminate digital discrimination and ensure that all people of the United States benefit from equal access to broadband internet access service, consistent with Congress's direction in the Infrastructure Investment and Jobs Act."

Social media websites serve both as manifestations of the digital divide and as means of combating it. As manifestations, sites such as Facebook, WordPress, and Instagram have user bases whose demographics reflect the divide. As means of combating it, each of these sites hosts communities that engage with otherwise marginalized populations.

Libraries

In 2010, an "online indigenous digital library as part of public library services" was created in Durban, South Africa, to narrow the digital divide, not only by giving the people of the Durban area access to this digital resource but also by involving community members in the process of creating it.

In 2002, the Gates Foundation started the Gates Library Initiative, which provides training, assistance, and guidance in libraries.

In Kenya, lack of funding, language barriers, and technological illiteracy contributed to an overall lack of computer skills and educational advancement. This slowly began to change with the arrival of foreign investment. In the early 2000s, the Carnegie Foundation funded a revitalization project through the Kenya National Library Service, and those resources enabled public libraries to provide information and communication technologies to their patrons. In 2012, public libraries in the Busia and Kibera communities introduced technology resources to supplement the primary school curriculum. By 2013, the program had expanded to ten schools.

Effective use

Even though individuals might be capable of accessing the Internet, many face barriers to entry, such as lack of access to infrastructure or an inability to understand or manage the information that the Internet provides. Some individuals can connect but lack the knowledge to make use of the information that ICTs and Internet technologies provide. This has shifted attention toward capabilities, skills, and awareness, in order to move from mere access to effective use of ICT.

Community informatics (CI) focuses on issues of "use" rather than "access". CI is concerned with ensuring the opportunity not only for ICT access at the community level but also, according to Michael Gurstein, that the means for the "effective use" of ICTs for community betterment and empowerment are available. Gurstein has also extended the discussion of the digital divide to include issues around access to and the use of "open data" and coined the term "data divide" to refer to this issue area.

Criticism

Knowledge divide

Because gender, age, race, income, and educational digital divides have narrowed compared to the past, some researchers suggest that the digital divide is shifting from a gap in access and connectivity to ICTs to a knowledge divide. A knowledge divide implies that the gap has moved beyond access and the resources needed to connect to ICTs, toward differences in the ability to interpret and understand information once connected.

Second-level digital divide

The second-level digital divide, also referred to as the production gap, describes the gap that separates the consumers of content on the Internet from the producers of content. As the technological digital divide between those with and without access to the Internet decreases, the meaning of the term digital divide is evolving. Previously, digital divide research focused on accessibility to the Internet and Internet consumption. However, with an increasing share of the population gaining access to the Internet, researchers are examining how people use the Internet to create content and how socioeconomic factors affect user behavior.

New applications have made it possible for anyone with a computer and an Internet connection to create content, yet most user-generated content widely available on the Internet, such as public blogs, is created by a small portion of the Internet-using population. Web 2.0 technologies like Facebook, YouTube, Twitter, and blogs enable users to participate online and create content without having to understand how the technology actually works, leading to an ever-increasing digital divide between those who have the skills and understanding to interact more fully with the technology and those who are passive consumers of it.

Some of the reasons for this production gap include material factors, such as the type of Internet connection one has and how often one can access the Internet. The more frequent a person's access and the faster their connection, the more opportunities they have to gain technology skills and the more time they have to be creative.

Other reasons include cultural factors often associated with class and socioeconomic status. Users of lower socioeconomic status are less likely to participate in content creation because of disadvantages in education and a lack of the free time needed to create and maintain blogs or websites. Additionally, there is evidence of a second-level digital divide at the K-12 level, based on how educators use technology for instruction: economic factors at the school level have been found to explain variation in how teachers use technology to promote higher-order thinking skills.