From Wikipedia, the free encyclopedia

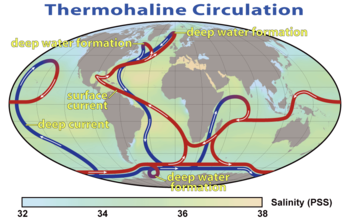

A summary of the path of the thermohaline circulation. Blue paths represent deep-water currents, while red paths represent surface currents.

Thermohaline circulation

Thermohaline circulation (THC) is a part of the large-scale ocean circulation that is driven by global density gradients created by surface heat and freshwater fluxes.[1][2] The adjective thermohaline derives from thermo- referring to temperature and -haline referring to salt content, factors which together determine the density of sea water. Wind-driven surface currents (such as the Gulf Stream) travel polewards from the equatorial Atlantic Ocean, cooling en route, and eventually sinking at high latitudes (forming North Atlantic Deep Water). This dense water then flows into the ocean basins. While the bulk of it upwells in the Southern Ocean, the oldest waters (with a transit time of around 1000 years)[3] upwell in the North Pacific.[4] Extensive mixing therefore takes place between the ocean basins, reducing differences between them and making the Earth's oceans a global system. On their journey, the water masses transport both energy (in the form of heat) and matter (solids, dissolved substances and gases) around the globe. As such, the state of the circulation has a large impact on the climate of the Earth.

The thermohaline circulation is sometimes called the ocean conveyor belt, the great ocean conveyor, or the global conveyor belt. On occasion, it is used to refer to the meridional overturning circulation (often abbreviated as MOC). The term MOC is more accurate and well defined, as it is difficult to separate the part of the circulation which is driven by temperature and salinity alone as opposed to other factors such as the wind and tidal forces.[5] Moreover, temperature and salinity gradients can also lead to circulation effects that are not included in the MOC itself.

Overview

The global conveyor belt on a continuous-ocean map

The movement of surface currents pushed by the wind is fairly intuitive. For example, the wind easily produces ripples on the surface of a pond. Thus the deep ocean—devoid of wind—was assumed to be perfectly static by early oceanographers. However, modern instrumentation shows that current velocities in deep water masses can be significant (although much less than surface speeds). In general, ocean water velocities range from fractions of centimeters per second (in the depth of the oceans) to sometimes more than 1 m/s in surface currents like the Gulf Stream and Kuroshio.

In the deep ocean, the predominant driving force is differences in density, caused by salinity and temperature variations (increasing salinity and lowering the temperature of a fluid both increase its density). There is often confusion over the components of the circulation that are wind and density driven.[6][7] Note that ocean currents due to tides are also significant in many places; most prominent in relatively shallow coastal areas, tidal currents can also be significant in the deep ocean. There they are currently thought to facilitate mixing processes, especially diapycnal mixing.[8]

The density of ocean water is not globally homogeneous, but varies significantly and discretely. Sharply defined boundaries exist between water masses which form at the surface and subsequently maintain their own identity within the ocean. These sharp boundaries should not be imagined spatially, however, but rather in a T-S (temperature-salinity) diagram, where water masses are distinguished. The water masses position themselves above or below each other according to their density, which depends on both temperature and salinity.

Warm seawater expands and is thus less dense than cooler seawater. Saltier water is denser than fresher water because the dissolved salts fill interstices between water molecules, resulting in more mass per unit volume. Lighter water masses float over denser ones (just as a piece of wood or ice will float on water, see buoyancy). This is known as "stable stratification", as opposed to unstable stratification (see Brunt–Väisälä frequency), where denser waters are located over less dense waters (see convection or deep convection needed for water mass formation). When dense water masses are first formed, they are not stably stratified, so they seek to locate themselves in the correct vertical position according to their density. This motion is called convection; it orders the stratification by gravitation. Driven by the density gradients, it sets up the main driving force behind deep ocean currents like the deep western boundary current (DWBC).
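The dependence of density on temperature and salinity described above can be illustrated with a linearized equation of state. The following is only a minimal sketch: the reference state and the expansion and contraction coefficients are assumed, order-of-magnitude values that do not come from this article, and the real equation of state of seawater is nonlinear and pressure-dependent.

```python
# Linearized equation of state for seawater (illustrative sketch only).
# All constants below are assumed typical values, not taken from the article.
RHO0 = 1027.0   # reference density, kg/m^3 (assumed)
T0 = 10.0       # reference temperature, deg C (assumed)
S0 = 35.0       # reference salinity, g/kg (assumed)
ALPHA = 1.7e-4  # thermal expansion coefficient, 1/K (assumed)
BETA = 7.6e-4   # haline contraction coefficient, kg/g (assumed)


def density(temp_c, salinity):
    """Approximate seawater density (kg/m^3) near the reference state."""
    return RHO0 * (1.0 - ALPHA * (temp_c - T0) + BETA * (salinity - S0))


def is_stably_stratified(upper_density, lower_density):
    """Stable stratification: the lighter water lies above the denser water."""
    return upper_density <= lower_density


warm_fresh = density(20.0, 34.5)  # warm, slightly fresher surface water
cold_salty = density(2.0, 35.0)   # cold, saltier deep water

print(f"warm/fresh: {warm_fresh:.2f} kg/m^3")
print(f"cold/salty: {cold_salty:.2f} kg/m^3")
print("stable if warm water is on top:", is_stably_stratified(warm_fresh, cold_salty))
```

With these assumed coefficients, cooling and salinification both raise the computed density, so a column with the warm, fresher water on top is stable, while the reverse arrangement would overturn by convection.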

The thermohaline circulation is mainly driven by the formation of deep water masses in the North Atlantic and the Southern Ocean caused by differences in temperature and salinity of the water.

The great quantities of dense water sinking at high latitudes must be offset by equal quantities of water rising elsewhere. Note that cold water in polar zones sinks relatively rapidly over a small area, while warm water in temperate and tropical zones rises more gradually across a much larger area. It then slowly returns poleward near the surface to repeat the cycle. The continual diffuse upwelling of deep water maintains the existence of the permanent thermocline found everywhere at low and mid-latitudes. This model was described by Henry Stommel and Arnold B. Arons in 1960 and is known as the Stommel-Arons box model for the MOC.[9] This slow upward movement is estimated to be about 1 centimeter (0.4 inch) per day over most of the ocean. If this rise were to stop, downward movement of heat would cause the thermocline to descend and would reduce its steepness.
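To give a sense of scale for the diffuse upwelling rate quoted above, the sketch below estimates how long water would take to rise through the deep ocean at about 1 centimeter per day. The 4,000 m depth is an assumed round figure chosen for illustration, not a value from this article.

```python
# Back-of-the-envelope rise time at the diffuse upwelling speed from the text.
UPWELLING_M_PER_DAY = 0.01  # about 1 cm/day, as stated in the text
ASSUMED_DEPTH_M = 4000.0    # hypothetical depth of the deep ocean (assumption)
DAYS_PER_YEAR = 365.25


def rise_time_years(depth_m, speed_m_per_day=UPWELLING_M_PER_DAY):
    """Time in years to rise depth_m metres at a constant upward speed."""
    return depth_m / speed_m_per_day / DAYS_PER_YEAR


years = rise_time_years(ASSUMED_DEPTH_M)
print(f"Rise time over {ASSUMED_DEPTH_M:.0f} m: about {years:.0f} years")
```

Under these assumptions the result is on the order of a thousand years, which is the same order of magnitude as the transit time of the oldest deep waters.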

Formation of deep water masses

The dense water masses that sink into the deep basins are formed in quite specific areas of the North Atlantic and the Southern Ocean. In the North Atlantic, seawater at the surface of the ocean is intensely cooled by the wind and low ambient air temperatures. Wind moving over the water also produces a great deal of evaporation, leading to a decrease in temperature known as evaporative cooling (a consequence of latent heat loss). Evaporation removes only water molecules, resulting in an increase in the salinity of the seawater left behind, and thus an increase in the density of the water mass along with the decrease in temperature. In the Norwegian Sea evaporative cooling is predominant, and the sinking water mass, the North Atlantic Deep Water (NADW), fills the basin and spills southwards through crevasses in the submarine sills that connect Greenland, Iceland and Great Britain, known collectively as the Greenland-Scotland Ridge. It then flows very slowly into the deep abyssal plains of the Atlantic, always in a southerly direction. Flow from the Arctic Ocean Basin into the Pacific, however, is blocked by the narrow shallows of the Bering Strait.

Diagram showing relation between temperature and salinity for sea water density maximum and sea water freezing temperature.

In the Southern Ocean, strong katabatic winds blowing from the Antarctic continent onto the ice shelves blow the newly formed sea ice away, opening polynyas along the coast. The ocean, no longer protected by sea ice, undergoes intense cooling (see polynya). Meanwhile, sea ice starts reforming, so the surface waters also get saltier and hence very dense. The formation of sea ice contributes to an increase in surface seawater salinity: saltier brine is left behind as the sea ice forms around it (pure water preferentially being frozen). Increasing salinity lowers the freezing point of seawater, so cold liquid brine is formed in inclusions within a honeycomb of ice. The brine progressively melts the ice just beneath it, eventually dripping out of the ice matrix and sinking. This process is known as brine rejection.
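The salinity increase caused by brine rejection can be illustrated with a simple salt mass balance. This is only a sketch under stated assumptions: the function name, the frozen fraction and the bulk salinity of the ice are hypothetical illustration values, not figures from this article.

```python
def salinity_after_freezing(initial_salinity, frozen_fraction, ice_salinity):
    """Salinity (g/kg) of the water left behind after part of it freezes.

    Salt is conserved: the salt that is not retained in the ice stays in the
    remaining liquid, whose mass has shrunk by the frozen fraction.
    """
    if not 0.0 <= frozen_fraction < 1.0:
        raise ValueError("frozen_fraction must be in [0, 1)")
    remaining_salt = initial_salinity - frozen_fraction * ice_salinity
    return remaining_salt / (1.0 - frozen_fraction)


# Hypothetical example: 10% of a 34.5 g/kg layer freezes into ice that
# retains a bulk salinity of 5 g/kg (both numbers are assumptions).
after = salinity_after_freezing(34.5, 0.10, 5.0)
print(f"Salinity of remaining water: {after:.2f} g/kg")
```

Because the ice retains less salt than the water it formed from, the remaining liquid always ends up saltier, and therefore denser, than before freezing.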

The resulting Antarctic Bottom Water (AABW) sinks and flows north and east, but is so dense it actually underflows the NADW. AABW formed in the Weddell Sea will mainly fill the Atlantic and Indian Basins, whereas the AABW formed in the Ross Sea will flow towards the Pacific Ocean.

The dense water masses formed by these processes flow downhill at the bottom of the ocean, like a stream within the surrounding less dense fluid, and fill up the basins of the polar seas. Just as river valleys direct streams and rivers on the continents, the bottom topography constrains the deep and bottom water masses.

Note that, unlike fresh water, seawater does not have a density maximum at 4 °C but gets denser as it cools all the way to its freezing point of approximately −1.8 °C. This freezing point is, however, a function of salinity and pressure, so −1.8 °C is not a general freezing temperature for seawater (see diagram to the right).
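The dependence of the freezing point on salinity and pressure can be sketched with the empirical polynomial used in the UNESCO 1983 seawater algorithms. The formula and its coefficients are not part of this article; they are reproduced here as an outside assumption, are intended for practical salinities of roughly 4 to 40, and should be checked against the original reference before any serious use.

```python
def freezing_point_c(salinity, pressure_dbar=0.0):
    """Approximate freezing temperature of seawater in deg C.

    salinity      -- practical salinity
    pressure_dbar -- pressure in decibars (0 at the sea surface)

    Coefficients follow the UNESCO 1983 polynomial (an assumption external
    to the article).
    """
    s = salinity
    return (-0.0575 * s
            + 1.710523e-3 * s ** 1.5
            - 2.154996e-4 * s ** 2
            - 7.53e-4 * pressure_dbar)


for s in (30.0, 33.0, 35.0):
    print(f"S = {s:.0f}: freezing point = {freezing_point_c(s):.2f} deg C")
```

In this approximation the freezing point falls as salinity or pressure increases, which is why a single value such as −1.8 °C applies only to a particular salinity at the surface.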

Movement of deep water masses

Deep water formation in the North Atlantic Ocean creates sinking water masses that fill the basin and flow very slowly into the deep abyssal plains of the Atlantic. This high-latitude cooling, together with low-latitude heating, drives the deep water southward from the polar region. The deep water flows through the Antarctic Ocean Basin around South Africa, where it splits into two routes: one into the Indian Ocean and one past Australia into the Pacific.

In the Indian Ocean, some of the cold and salty water from the Atlantic, drawn by the flow of warmer and fresher upper ocean water from the tropical Pacific, causes a vertical exchange of dense, sinking water with lighter water above. This is known as overturning. In the Pacific Ocean, the rest of the cold and salty water from the Atlantic undergoes haline forcing, and becomes warmer and fresher more quickly.

The undersea outflow of cold and salty water makes the sea level of the Atlantic slightly lower than that of the Pacific, and the salinity of Atlantic water higher than that of Pacific water. This generates a large but slow flow of warmer and fresher upper ocean water from the tropical Pacific to the Indian Ocean through the Indonesian Archipelago to replace the cold and salty Antarctic Bottom Water. This is also known as 'haline forcing' (net high latitude freshwater gain and low latitude evaporation). This warmer, fresher water from the Pacific flows up through the South Atlantic to Greenland, where it undergoes evaporative cooling and sinks to the ocean floor, providing a continuous thermohaline circulation.[10]

Hence, a recent and popular name for the thermohaline circulation, emphasizing the vertical nature and pole-to-pole character of this kind of ocean circulation, is the meridional overturning circulation.

Quantitative estimation

Direct estimates of the strength of the thermohaline circulation have been made at 26.5°N in the North Atlantic since 2004 by the UK-US RAPID programme.[11] By combining direct estimates of ocean transport using current meters and subsea cable measurements with estimates of the geostrophic current from temperature and salinity measurements, the RAPID programme provides continuous, full-depth, basinwide estimates of the thermohaline circulation or, more accurately, the meridional overturning circulation.

The deep water masses that participate in the MOC have chemical, temperature and isotopic ratio signatures and can be traced, their flow rate calculated, and their age determined. These include ²³¹Pa/²³⁰Th ratios.

Gulf Stream

Benjamin Franklin's map of the Gulf Stream

The Gulf Stream, together with its northern extension towards Europe, the North Atlantic Drift, is a powerful, warm, and swift Atlantic ocean current that originates at the tip of Florida, and follows the eastern coastlines of the United States and Newfoundland before crossing the Atlantic Ocean. The process of western intensification causes the Gulf Stream to be a northward accelerating current off the east coast of North America.[12] At about 40°0′N 30°0′W, it splits in two, with the northern stream crossing to northern Europe and the southern stream recirculating off West Africa. The Gulf Stream influences the climate of the east coast of North America from Florida to Newfoundland, and the west coast of Europe. Although there has been recent debate, there is consensus that the climate of Western Europe and Northern Europe is warmer than it would otherwise be due to the North Atlantic Drift,[13][14] one of the branches from the tail of the Gulf Stream. The Gulf Stream is part of the North Atlantic Gyre. Its presence has led to the development of strong cyclones of all types, both within the atmosphere and within the ocean. The Gulf Stream is also a significant potential source of renewable power generation.[15][16]

Upwelling

All these dense water masses sinking into the ocean basins displace the older deep water masses that were made less dense by ocean mixing. To maintain a balance, water must be rising elsewhere. However, because this thermohaline upwelling is so widespread and diffuse, its speeds are very slow even compared to the movement of the bottom water masses. It is therefore difficult to measure where upwelling occurs using current speeds, given all the other wind-driven processes going on in the surface ocean. Deep waters have their own chemical signature, formed from the breakdown of particulate matter falling into them over the course of their long journey at depth. A number of scientists have tried to use these tracers to infer where the upwelling occurs.

Wallace Broecker, using box models, has asserted that the bulk of deep upwelling occurs in the North Pacific, using as evidence the high values of silicon found in these waters. Other investigators have not found such clear evidence. Computer models of ocean circulation increasingly place most of the deep upwelling in the Southern Ocean,[17] associated with the strong winds in the open latitudes between South America and Antarctica. While this picture is consistent with the global observational synthesis of William Schmitz at Woods Hole and with low observed values of diffusion, not all observational syntheses agree. Recent papers by Lynne Talley at the Scripps Institution of Oceanography and Bernadette Sloyan and Stephen Rintoul in Australia suggest that a significant amount of dense deep water must be transformed to light water somewhere north of the Southern Ocean.

Effects on global climate

The thermohaline circulation plays an important role in supplying heat to the polar regions, and thus in regulating the amount of sea ice in these regions, although poleward heat transport outside the tropics is considerably larger in the atmosphere than in the ocean.[18] Changes in the thermohaline circulation are thought to have significant impacts on the Earth's radiation budget. Insofar as the thermohaline circulation governs the rate at which deep waters are exposed to the surface, it may also play an important role in the concentration of carbon dioxide in the atmosphere. While it is often stated that the thermohaline circulation is the primary reason that Western Europe is so temperate, it has been suggested that this is largely incorrect, and that Europe is warm mostly because it lies downwind of an ocean basin, and because of the effect of atmospheric waves bringing warm air north from the subtropics.[19] However, the underlying assumptions of this particular analysis have likewise been challenged.[20]

Large influxes of low-density meltwater from Lake Agassiz and deglaciation in North America are thought to have led to a shifting of deep water formation and subsidence in the extreme North Atlantic and caused the climate period in Europe known as the Younger Dryas.[21]

Shutdown of thermohaline circulation

In 2005, British researchers noticed that the net flow of the northern Gulf Stream had decreased by about 30% since 1957. Coincidentally, scientists at Woods Hole had been measuring the freshening of the North Atlantic as Earth becomes warmer. Their findings suggested that precipitation increases in the high northern latitudes, and polar ice melts as a consequence. By flooding the northern seas with large amounts of extra fresh water, global warming could, in theory, divert the Gulf Stream waters that usually flow northward, past the British Isles and Norway, and cause them to instead circulate toward the equator. If this were to happen, Europe's climate would be seriously impacted.[22][23][24]

A downturn of the Atlantic meridional overturning circulation (AMOC) has been tied to extreme regional sea level rise.[25]

In 2013, an unexpected significant weakening of the THC led to one of the quietest Atlantic hurricane seasons observed since 1994. The main cause of the inactivity was a continuation of the spring pattern across the Atlantic basin.
