Logo used from the 1990s until acquisition by Oracle

| Sun Microsystems, Inc. | |
|---|---|
| Type | Public |
| Fate | Acquired by Oracle |
| Founded | February 24, 1982 |
| Founders | Vinod Khosla, Andy Bechtolsheim, Scott McNealy, Bill Joy |
| Defunct | January 27, 2010 |
| Headquarters | Santa Clara, California, U.S. |
| Owner | Oracle Corporation |
| Number of employees | 38,600 (near peak, 2006) |
| Website | www (see archived copy of 4 January 2010 at the Wayback Machine) |

Sun Microsystems, Inc. was an American company that sold computers, computer components, software, and information technology services and created the Java programming language, the Solaris operating system, ZFS, the Network File System (NFS), and SPARC. Sun contributed significantly to the evolution of several key computing technologies, among them Unix, RISC processors, thin client computing, and virtualized computing. Sun was founded on February 24, 1982. At its height, the Sun headquarters were in Santa Clara, California (part of Silicon Valley), on the former west campus of the Agnews Developmental Center.

On April 20, 2009, it was announced that Oracle Corporation would acquire Sun for US$7.4 billion. The deal was completed on January 27, 2010.

Sun products included computer servers and workstations built on its own RISC-based SPARC processor architecture, as well as on x86-based AMD Opteron and Intel Xeon processors. Sun also developed its own storage systems and a suite of software products, including the Solaris operating system, developer tools, Web infrastructure software, and identity management applications. Other technologies included the Java platform and NFS. In general, Sun was a proponent of open systems, particularly Unix. It was also a major contributor to open-source software, as evidenced by its $1 billion purchase, in 2008, of MySQL, an open-source relational database management system. At various times, Sun had manufacturing facilities in several locations worldwide, including Newark, California; Hillsboro, Oregon; and Linlithgow, Scotland. However, by the time the company was acquired by Oracle, it had outsourced most manufacturing responsibilities.

History

The initial design for what became Sun's first Unix workstation, the Sun-1, was conceived by Andy Bechtolsheim when he was a graduate student at Stanford University in Palo Alto, California. Bechtolsheim originally designed the SUN workstation for the Stanford University Network communications project as a personal CAD workstation. It was designed around the Motorola 68000 processor with an advanced memory management unit (MMU) to support the Unix operating system with virtual memory support. He built the first ones from spare parts obtained from Stanford's Department of Computer Science and Silicon Valley supply houses.

On February 24, 1982, Vinod Khosla, Andy Bechtolsheim, and Scott McNealy, all Stanford graduate students, founded Sun Microsystems. Bill Joy of Berkeley, a primary developer of the Berkeley Software Distribution (BSD), joined soon after and is counted as one of the original founders. The Sun name is derived from the initials of the Stanford University Network. Sun was profitable from its first quarter in July 1982.

By 1983 Sun was known for producing 68k-based systems with high-quality graphics that were the only computers other than DEC's VAX to run 4.2BSD. It licensed the computer design to other manufacturers, which typically used it to build Multibus-based systems running Unix from UniSoft. Sun's initial public offering was in 1986 under the stock symbol SUNW, for Sun Workstations (later Sun Worldwide). The symbol was changed in 2007 to JAVA; Sun stated that the brand awareness associated with its Java platform better represented the company's current strategy.

Sun's logo, which features four interleaved copies of the word sun in the form of a rotationally symmetric ambigram, was designed by professor Vaughan Pratt, also of Stanford. The initial version of the logo was orange and had the sides oriented horizontally and vertically, but it was subsequently rotated to stand on one corner and re-colored purple, and later blue.

The "bubble" and its aftermath

In the dot-com bubble, Sun began making much more money, and its shares rose dramatically. It also began spending much more, hiring workers and building itself out. Some of this was because of genuine demand, but much was from web start-up companies anticipating business that would never happen. In 2000, the bubble burst. Sales in Sun's important hardware division went into free-fall as customers closed shop and auctioned high-end servers.

Several quarters of steep losses led to executive departures, rounds of layoffs, and other cost cutting. In December 2001, the stock fell to the 1998, pre-bubble level of about $100. But it kept falling, faster than many other tech companies. A year later it had dipped below $10 (a tenth of what it was even in 1990) but bounced back to $20. In mid-2004, Sun closed its Newark, California, factory and consolidated all manufacturing in Hillsboro, Oregon. In 2006, the rest of the Newark campus was put on the market.

Post-crash focus

Aerial photograph of the Sun headquarters campus in Santa Clara, California

Buildings 21 and 22 at Sun's headquarters campus in Santa Clara

Sun in Markham, Ontario, Canada

In 2004, Sun canceled two major processor projects which emphasized high instruction-level parallelism and operating frequency. Instead, the company chose to concentrate on processors optimized for multi-threading and multiprocessing, such as the UltraSPARC T1 processor (codenamed "Niagara"). The company also announced a collaboration with Fujitsu to use the Japanese company's processor chips in mid-range and high-end Sun servers. These servers were announced on April 17, 2007, as the M-Series, part of the SPARC Enterprise series.

In February 2005, Sun announced the Sun Grid, a grid computing deployment on which it offered utility computing services priced at US$1 per CPU-hour for processing and US$1 per GB-month for storage. This offering built upon an existing 3,000-CPU server farm used for internal R&D for over 10 years, which Sun marketed as being able to achieve 97% utilization. In August 2005, the first commercial use of this grid was announced, for financial risk simulations, which were later launched as Sun's first software as a service product.
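The flat utility pricing described above reduces to simple arithmetic. The following Python sketch is illustrative only: the helper name `sun_grid_cost` and the sample workload are hypothetical, and it assumes that both rates (US$1 per CPU-hour and US$1 per GB-month) applied linearly, with no minimums or discounts.

```python
from decimal import Decimal

# Rates as described in the text; linear application is an assumption.
CPU_HOUR_RATE = Decimal("1.00")   # US$ per CPU-hour
GB_MONTH_RATE = Decimal("1.00")   # US$ per GB-month


def sun_grid_cost(cpus: int, hours: int, storage_gb: int, months: int) -> Decimal:
    """Estimate a bill as CPU-hours plus GB-months at flat rates (hypothetical helper)."""
    compute = CPU_HOUR_RATE * cpus * hours
    storage = GB_MONTH_RATE * storage_gb * months
    return compute + storage


# Hypothetical workload: 100 CPUs for 8 hours, plus 50 GB stored for 1 month.
print(sun_grid_cost(cpus=100, hours=8, storage_gb=50, months=1))  # 850.00
```

Under these assumptions the cost depends only on the product of CPUs and hours, so 100 CPUs for 8 hours would cost the same as 800 CPUs for 1 hour.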

In January 2005, Sun reported a net profit of $19 million for its fiscal 2005 second quarter, its first quarterly profit in three years. This was followed by a net loss of $9 million on a GAAP basis for the fiscal 2005 third quarter, as reported on April 14, 2005. In January 2007, Sun reported a net GAAP profit of $126 million on revenue of $3.337 billion for its fiscal second quarter. Shortly following that news, it was announced that Kohlberg Kravis Roberts (KKR) would invest $700 million in the company.

Sun had engineering groups in Bangalore, Beijing, Dublin, Grenoble, Hamburg, Prague, St. Petersburg, Tel Aviv, Tokyo, and Trondheim.

In 2007–2008, Sun posted revenue of $13.8 billion and had $2 billion in cash. First-quarter 2008 losses were $1.68 billion; revenue fell 7% to $12.99 billion. Sun's stock lost 80% of its value between November 2007 and November 2008, reducing the company's market value to $3 billion. With falling sales to large corporate clients, Sun announced plans to lay off 5,000 to 6,000 workers, or 15–18% of its work force. It expected to save $700 million to $800 million a year as a result of the moves, while also taking up to $600 million in charges.

Sun acquisitions

Sun server racks at Seneca College (York Campus)

- 1987: Trancept Systems, a high-performance graphics hardware company

- 1987: Sitka Corp, networking systems linking the Macintosh with IBM PCs

- 1987: Centram Systems West, maker of networking software for PCs, Macs and Sun systems

- 1988: Folio, Inc., developer of intelligent font scaling technology and the F3 font format

- 1991: Interactive Systems Corporation's Intel/Unix OS division, from Eastman Kodak Company

- 1992: Praxsys Technologies, Inc., developers of the Windows emulation technology that eventually became Wabi

- 1994: Thinking Machines Corporation hardware division

- 1996: Lighthouse Design, Ltd.

- 1996: Cray Business Systems Division, from Silicon Graphics

- 1996: Integrated Micro Products, specializing in fault tolerant servers

- 1996: Thinking Machines Corporation software division

- February 1997: LongView Technologies, LLC

- August 1997: Diba, technology supplier for the Information Appliance industry

- September 1997: Chorus Systems, creators of ChorusOS

- November 1997: Encore Computer Corporation's storage business

- 1998: RedCape Software

- 1998: i-Planet, a small software company that produced the "Pony Espresso" mobile email client; its name (sans hyphen) was later used for the Sun-Netscape software alliance

- June 1998: Dakota Scientific Software, Inc.—development tools for high-performance computing

- July 1998: NetDynamics—developers of the NetDynamics Application Server

- October 1998: Beduin, small software company that produced the "Impact" small-footprint Java-based Web browser for mobile devices.

- 1999: Star Division, German software company and with it StarOffice, which was later released as open source under the name OpenOffice.org

- 1999: MAXSTRAT Corporation, a company in Milpitas, California selling Fibre Channel storage servers.

- October 1999: Forté Software, an enterprise software company specializing in integration solutions and developer of the Forte 4GL

- 1999: TeamWare

- 1999: NetBeans, produced a modular IDE written in Java, based on a student project at Charles University in Prague

- March 2000: Innosoft International, Inc., a software company specializing in highly scalable MTAs (PMDF) and Directory Services

- July 2000: Gridware, a software company whose products managed the distribution of computing jobs across multiple computers

- September 2000: Cobalt Networks, an Internet appliance manufacturer for $2 billion

- December 2000: HighGround, with a suite of Web-based management solutions

- 2001: LSC, Inc., an Eagan, Minnesota company that developed the Storage and Archive Management File System (SAM-FS) and Quick File System (QFS) file systems for backup and archive

- March 2002: Clustra Systems

- June 2002: Afara Websystems, developed SPARC processor-based technology

- September 2002: Pirus Networks, intelligent storage services

- November 2002: Terraspring, infrastructure automation software

- June 2003: Pixo, added to the Sun Content Delivery Server

- August 2003: CenterRun, Inc.

- December 2003: Waveset Technologies, identity management

- January 2004: Nauticus Networks

- February 2004: Kealia, founded by original Sun founder Andy Bechtolsheim, developed AMD-based 64-bit servers

- January 2005: SevenSpace, a multi-platform managed services provider

- May 2005: Tarantella, Inc. (formerly known as Santa Cruz Operation (SCO)), for $25 million

- June 2005: SeeBeyond, a Service-Oriented Architecture (SOA) software company for $387m

- June 2005: Procom Technology, Inc.'s NAS IP Assets

- August 2005: StorageTek, data storage technology company for $4.1 billion

- February 2006: Aduva, software for Solaris and Linux patch management

- October 2006: Neogent

- April 2007: SavaJe, the SavaJe OS, a Java OS for mobile phones

- September 2007: Cluster File Systems, Inc.

- November 2007: Vaau, Enterprise Role Management and identity compliance solutions

- February 2008: MySQL AB, the company offering the open source database MySQL for $1 billion.

- February 2008: Innotek GmbH, developer of the VirtualBox virtualization product

- April 2008: Montalvo Systems, x86 microprocessor startup acquired before first silicon

- January 2009: Q-layer, a software company with cloud computing solutions

Major stockholders

As of May 11, 2009, the following shareholders held over 100,000 common shares of Sun. At the $9.50 per share offered by Oracle, they received the amounts indicated when the acquisition closed.

| Investor | Common Shares | Value at Merger |

|---|---|---|

| Barclays Global Investors | 37,606,708 | $357 million |

| Scott G. McNealy | 14,566,433 | $138 million |

| M. Kenneth Oshman | 584,985 | $5.5 million |

| Jonathan I. Schwartz | 536,109 | $5 million |

| James L. Barksdale | 231,785 | $2.2 million |

| Michael E. Lehman | 106,684 | $1 million |
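The "Value at Merger" column can be checked by multiplying each holding by the $9.50 offer price. The Python sketch below does this for the rows in the table; the function name `merger_value_usd` is a hypothetical helper introduced for illustration, and the share counts are copied from the table.

```python
# Offer price from the text, kept in cents to avoid fractional-dollar rounding.
OFFER_PRICE_CENTS = 950  # US$9.50 per share

# Common shares held as of May 11, 2009 (copied from the table).
holdings = {
    "Barclays Global Investors": 37_606_708,
    "Scott G. McNealy": 14_566_433,
    "M. Kenneth Oshman": 584_985,
    "Jonathan I. Schwartz": 536_109,
    "James L. Barksdale": 231_785,
    "Michael E. Lehman": 106_684,
}


def merger_value_usd(shares: int) -> float:
    """Value of a holding in US dollars at the offer price (hypothetical helper)."""
    return shares * OFFER_PRICE_CENTS / 100


for name, shares in holdings.items():
    # Report in millions of dollars, one decimal place.
    print(f"{name}: ${merger_value_usd(shares) / 1e6:.1f} million")
```

The computed products roughly match the table, whose figures appear to be rounded or truncated to at most one decimal place.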

Hardware

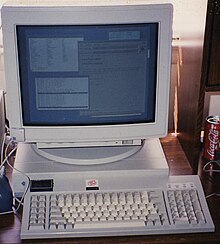

For the first decade of Sun's history, the company positioned its products as technical workstations, competing successfully as a low-cost vendor during the Workstation Wars of the 1980s. It then shifted its hardware product line to emphasize servers and storage. High-level telecom control systems such as Operational Support Systems service predominantly used Sun equipment.

Motorola-based systems

Sun originally used Motorola 68000 family central processing units for the Sun-1 through Sun-3 computer series. The Sun-1 employed a 68000 CPU and the Sun-2 series a 68010. The Sun-3 series was based on the 68020, with the later Sun-3x using the 68030.

SPARC-based systems

SPARCstation 1+

In 1987, the company began using SPARC, a RISC processor architecture of its own design, in its computer systems, starting with the Sun-4 line. SPARC was initially a 32-bit architecture (SPARC V7) until the introduction of the SPARC V9 architecture in 1995, which added 64-bit extensions.

Sun developed several generations of SPARC-based computer systems, including the SPARCstation, Ultra and Sun Blade series of workstations, and the SPARCserver, Netra, Enterprise and Sun Fire lines of servers.

In the early 1990s the company began to extend its product line to include large-scale symmetric multiprocessing servers, starting with the four-processor SPARCserver 600MP. This was followed by the 8-processor SPARCserver 1000 and 20-processor SPARCcenter 2000, which were based on work done in conjunction with Xerox PARC. In 1995 the company introduced Sun Ultra series machines that were equipped with the first 64-bit implementation of SPARC processors (UltraSPARC).

In the late 1990s the transformation of the product line in favor of large 64-bit SMP systems was accelerated by the acquisition of the Cray Business Systems Division from Silicon Graphics. The division's 32-bit, 64-processor Cray Superserver 6400, related to the SPARCcenter, led to the 64-bit Sun Enterprise 10000 high-end server (otherwise known as Starfire).

In September 2004 Sun made available systems with the UltraSPARC IV, which was the first multi-core SPARC processor. It was followed by the UltraSPARC IV+ in September 2005 and by revisions with higher clock speeds in 2007. These CPUs were used in the most powerful enterprise-class, high-end CC-NUMA servers developed by Sun, such as the Sun Fire E25K.

In November 2005 Sun launched the UltraSPARC T1, notable for its ability to concurrently run 32 threads of execution on 8 processor cores. Its intent was to drive more efficient use of CPU resources, which is of particular importance in data centers, where there is an increasing need to reduce power and air conditioning demands, much of which comes from the heat generated by CPUs. The T1 was followed in 2007 by the UltraSPARC T2, which extended the number of threads per core from 4 to 8. Sun open-sourced the design specifications of both the T1 and T2 processors via the OpenSPARC project.

In 2006, Sun ventured into the blade server (high density rack-mounted systems) market with the Sun Blade (distinct from the Sun Blade workstation).

In April 2007 Sun released the SPARC Enterprise server products, jointly designed by Sun and Fujitsu and based on Fujitsu SPARC64 VI and later processors. The M-class SPARC Enterprise systems included high-end reliability and availability features. Later T-series servers were also badged SPARC Enterprise rather than Sun Fire.

In April 2008 Sun released servers with the UltraSPARC T2 Plus, an SMP-capable version of the UltraSPARC T2 available in two- or four-processor configurations. It was the first CoolThreads CPU with multi-processor capability, and it made it possible to build standard rack-mounted servers that could simultaneously process up to 256 CPU threads in hardware (Sun SPARC Enterprise T5440), which was considered a record in the industry.
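The thread counts quoted for these processors follow from multiplying sockets, cores per socket, and hardware threads per core. The short Python sketch below works through the figures; the helper `hardware_threads` is hypothetical, and the count of 8 cores per UltraSPARC T2 and T2 Plus chip is an assumption not stated in the text above (it is consistent with the 256-thread figure for a four-processor system).

```python
def hardware_threads(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Total concurrent hardware threads in a system (hypothetical helper)."""
    return sockets * cores_per_socket * threads_per_core


# UltraSPARC T1: 8 cores, 4 threads per core (from the text).
print(hardware_threads(1, 8, 4))  # 32

# UltraSPARC T2: 8 threads per core (from the text); 8 cores is an assumption.
print(hardware_threads(1, 8, 8))  # 64

# Four-processor UltraSPARC T2 Plus system such as the SPARC Enterprise T5440.
print(hardware_threads(4, 8, 8))  # 256
```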

Since 2010, all further development of Sun machines based on the SPARC architecture (including new SPARC T-Series servers and the SPARC T3 and T4 chips) has been done as part of Oracle Corporation's hardware division.

x86-based systems

In the late 1980s, Sun also marketed an Intel 80386-based machine, the Sun386i; this was designed to be a hybrid system, running SunOS but at the same time supporting DOS applications. This only remained on the market for a brief time. A follow-up "486i" upgrade was announced but only a few prototype units were ever manufactured.

Sun's brief first foray into x86 systems ended in the early 1990s, as it decided to concentrate on SPARC and retire the last Motorola systems and 386i products, a move dubbed by McNealy as "all the wood behind one arrowhead". Even so, Sun kept its hand in the x86 world, as a release of Solaris for PC compatibles began shipping in 1993.

In 1997 Sun acquired Diba, Inc., followed later by the acquisition of Cobalt Networks in 2000, with the aim of building network appliances (single function computers meant for consumers). Sun also marketed a Network Computer (a term popularized and eventually trademarked by Oracle); the JavaStation was a diskless system designed to run Java applications.

Although none of these business initiatives were particularly successful, the Cobalt purchase gave Sun a toehold for its return to the x86 hardware market. In 2002, Sun introduced its first general purpose x86 system, the LX50, based in part on previous Cobalt system expertise. This was also Sun's first system announced to support Linux as well as Solaris.

In 2003, Sun announced a strategic alliance with AMD to produce x86/x64 servers based on AMD's Opteron processor; this was followed shortly by Sun's acquisition of Kealia, a startup founded by original Sun founder Andy Bechtolsheim, which had been focusing on high-performance AMD-based servers.

The following year, Sun launched the Opteron-based Sun Fire V20z and V40z servers, and the Java Workstation W1100z and W2100z workstations.

On September 12, 2005, Sun unveiled a new range of Opteron-based servers: the Sun Fire X2100, X4100 and X4200 servers. These were designed from scratch by a team led by Bechtolsheim to address heat and power consumption issues commonly faced in data centers. In July 2006, the Sun Fire X4500 and X4600 systems were introduced, extending a line of x64 systems that support not only Solaris, but also Linux and Microsoft Windows.

On January 22, 2007, Sun announced a broad strategic alliance with Intel. Intel endorsed Solaris as a mainstream operating system and as its mission critical Unix for its Xeon processor-based systems, and contributed engineering resources to OpenSolaris. Sun began using the Intel Xeon processor in its x64 server line, starting with the Sun Blade X6250 server module introduced in June 2007.

On May 5, 2008, AMD announced that its Operating System Research Center (OSRC) had expanded its focus to include optimization of Sun's OpenSolaris and xVM virtualization products for AMD-based processors.

Software

Although Sun was initially known as a hardware company, its software history began with its founding in 1982; co-founder Bill Joy was one of the leading Unix developers of the time, having contributed the vi editor, the C shell, and significant work developing TCP/IP and the BSD Unix OS. Sun later developed software such as the Java programming language and acquired software such as StarOffice, VirtualBox and MySQL.

Sun used community-based and open-source licensing for its major technologies and supported its products alongside other open-source technologies. A GNOME-based desktop software suite called Java Desktop System (originally code-named "Madhatter") was distributed for the Solaris operating system, and at one point for Linux. Sun supported its Java Enterprise System (a middleware stack) on Linux. It released the source code for Solaris under the open-source Common Development and Distribution License, via the OpenSolaris community. Sun's positioning included a commitment to indemnify users of some software from intellectual property disputes concerning that software. It offered support services on a variety of pricing bases, including per-employee and per-socket.

A 2006 report prepared for the EU by UNU-MERIT stated that Sun was the largest corporate contributor to open source movements in the world. According to this report, Sun's open source contributions exceeded the combined total of the next five largest commercial contributors.

Operating systems

Sun was best known for its Unix systems, which had a reputation for system stability and a consistent design philosophy.

Sun's first workstation shipped with UniSoft V7 Unix. Later in 1982 Sun began providing SunOS, a customized 4.1BSD Unix, as the operating system for its workstations.

In the late 1980s, AT&T tapped Sun to help it develop the next release of its branded UNIX, and in 1988 announced it would purchase up to a 20% stake in Sun. UNIX System V Release 4 (SVR4) was jointly developed by AT&T and Sun; Sun used SVR4 as the foundation for Solaris 2.x, which became the successor to SunOS 4.1.x (later retrospectively named Solaris 1.x). By the mid-1990s, the ensuing Unix wars had largely subsided, AT&T had sold off its Unix interests, and the relationship between the two companies was significantly reduced.

From 1992 Sun also sold Interactive Unix, an operating system it acquired when it bought Interactive Systems Corporation from Eastman Kodak Company. This was a popular Unix variant for the PC platform and a major competitor to market leader SCO UNIX. Sun's focus on Interactive Unix diminished in favor of Solaris on both SPARC and x86 systems; it was dropped as a product in 2001.

Sun dropped the Solaris 2.x version numbering scheme after the Solaris 2.6 release (1997); the following version was branded Solaris 7. This was the first 64-bit release, intended for the new UltraSPARC CPUs based on the SPARC V9 architecture. Within the next four years, the successors Solaris 8 and Solaris 9 were released in 2000 and 2002 respectively.

Following several years of difficult competition and loss of server market share to competitors' Linux-based systems, Sun began to include Linux as part of its strategy in 2002. Sun supported both Red Hat Enterprise Linux and SUSE Linux Enterprise Server on its x64 systems; companies such as Canonical Ltd., Wind River Systems and MontaVista also supported their versions of Linux on Sun's SPARC-based systems.

In 2004, after having cultivated a reputation as one of Microsoft's most vocal antagonists, Sun entered into a joint relationship with Microsoft, resolving various legal entanglements between the two companies and receiving US$1.95 billion in settlement payments. Sun supported Microsoft Windows on its x64 systems, and announced other collaborative agreements with Microsoft, including plans to support each other's virtualization environments.

In 2005, the company released Solaris 10. The new version included a large number of enhancements to the operating system, as well as several features that were new to the industry. Solaris 10 update releases continued for the next eight years; the last release from Sun Microsystems was Solaris 10 10/09. Subsequent updates were released by Oracle under a new license agreement; the final release was Solaris 10 1/13.

Previously, Sun offered a separate variant of Solaris called Trusted Solaris, which included augmented security features such as multilevel security and a least privilege access model. Solaris 10 included many of the same capabilities as Trusted Solaris at the time of its initial release; Solaris 10 11/06 included Solaris Trusted Extensions, which gave it the remaining capabilities needed to make it the functional successor to Trusted Solaris.

After the release of Solaris 10, Sun opened its source code under the CDDL free software license and developed it in the open with the contributing OpenSolaris community, through Solaris Express Community Edition (SXCE), which used SVR4 .pkg packaging, and through OpenSolaris releases, which used the Image Packaging System (IPS).

Following Oracle's acquisition of Sun, open development of the OpenSolaris code base continued under the illumos project and its distributions.

Oracle Corporation continued to develop OpenSolaris into the next Solaris release, changing the license back to proprietary, and released it as Oracle Solaris 11 in November 2011.

Java platform

The Java platform was developed at Sun by James Gosling in the early 1990s with the objective of allowing programs to function regardless of the device they were used on, sparking the slogan "Write once, run anywhere" (WORA). While this objective was not entirely achieved (prompting the riposte "Write once, debug everywhere"), Java is regarded as being largely hardware- and operating system-independent.

Java was initially promoted as a platform for client-side applets running inside web browsers. Early examples of Java applications were the HotJava web browser and the HotJava Views suite. However, since then Java has been more successful on the server side of the Internet.

The platform consists of three major parts: the Java programming language, the Java Virtual Machine (JVM), and several Java Application Programming Interfaces (APIs). The design of the Java platform is controlled by the vendor and user community through the Java Community Process (JCP).

Java is an object-oriented programming language. Since its introduction in late 1995, it has become one of the world's most popular programming languages.

Java programs are compiled to byte code, which can be executed by any JVM, regardless of the environment.

The Java APIs provide an extensive set of library routines. These APIs evolved into the Standard Edition (Java SE), which provides basic infrastructure and GUI functionality; the Enterprise Edition (Java EE), aimed at large software companies implementing enterprise-class application servers; and the Micro Edition (Java ME), used to build software for devices with limited resources, such as mobile devices.

On November 13, 2006, Sun announced it would be licensing its Java implementation under the GNU General Public License; it released its Java compiler and JVM at that time.

In February 2009 Sun entered a battle with Microsoft and Adobe Systems, which promoted rival platforms to build software applications for the Internet. JavaFX was a development platform for music, video and other applications that built on the Java programming language.

Office suite

In 1999, Sun acquired the German software company Star Division and with it the office suite StarOffice, which Sun later released as OpenOffice.org under both GNU LGPL and the SISSL (Sun Industry Standards Source License). OpenOffice.org supported Microsoft Office file formats (though not perfectly), was available on many platforms (primarily Linux, Microsoft Windows, Mac OS X, and Solaris) and was used in the open source community.

The principal differences between StarOffice and OpenOffice.org were that StarOffice was supported by Sun, was available as either a single-user retail box kit or as per-user blocks of licensing for the enterprise, and included a wider range of fonts and document templates and a commercial quality spellchecker. StarOffice also contained commercially licensed functions and add-ons; in OpenOffice.org these were either replaced by open-source or free variants, or were not present at all. Both packages had native support for the OpenDocument format.

Virtualization and datacenter automation software

VirtualBox, purchased by Sun

In 2007, Sun announced the Sun xVM virtualization and datacenter automation product suite for commodity hardware. Sun also acquired VirtualBox in 2008. Earlier virtualization technologies from Sun, such as Dynamic System Domains and Dynamic Reconfiguration, were specifically designed for high-end SPARC servers, and Logical Domains supported only the UltraSPARC T1/T2/T2 Plus server platforms. Sun marketed Sun Ops Center provisioning software for datacenter automation.

On the client side, Sun offered virtual desktop solutions. Desktop environments and applications could be hosted in a datacenter, with users accessing these environments from a wide range of client devices, including Microsoft Windows PCs, Sun Ray virtual display clients, Apple Macintoshes, PDAs or any combination of supported devices. A variety of networks were supported, from LAN to WAN or the public Internet.

Virtual desktop products included Sun Ray Server Software, Sun Secure Global Desktop and Sun Virtual Desktop Infrastructure.

Database management systems

Sun acquired MySQL AB, the developer of the MySQL database, in 2008 for US$1 billion. CEO Jonathan Schwartz mentioned in his blog that optimizing the performance of MySQL was one of the priorities of the acquisition. In February 2008, Sun began to publish results of the MySQL performance optimization work. Sun also contributed to the PostgreSQL project. On the Java platform, Sun contributed to and supported Java DB.

Other software

Sun offered other software products for software development and infrastructure services. Many were developed in house; others came from acquisitions, including Tarantella, Waveset Technologies, SeeBeyond, and Vaau. Sun acquired many of the Netscape non-browser software products as part of a deal involving Netscape's merger with AOL. These software products were initially offered under the "iPlanet" brand; once the Sun-Netscape alliance ended, they were re-branded as "Sun ONE" (Sun Open Network Environment), and then the "Sun Java System".

Sun's middleware product was branded as the Java Enterprise System (or JES), and marketed for web and application serving, communication, calendaring, directory, identity management and service-oriented architecture. Sun's Open ESB and other software suites were available free of charge on systems running Solaris, Red Hat Enterprise Linux, HP-UX, and Windows, with support available optionally.

Sun developed data center management software products, which included the Solaris Cluster high availability software and a grid management package called Sun Grid Engine, as well as firewall software such as SunScreen.

For Network Equipment Providers and telecommunications customers, Sun developed the Sun Netra High-Availability Suite.

Sun produced compilers and development tools under the Sun Studio brand, for building and developing Solaris and Linux applications.

Sun entered the software as a service (SaaS) market with zembly, a social cloud-based computing platform and Project Kenai, an open-source project hosting service.

Storage

Sun sold its own storage systems to complement its system offerings; it also made several storage-related acquisitions.

On June 2, 2005, Sun announced it would purchase Storage Technology Corporation (StorageTek) for US$4.1 billion in cash, or $37.00 per share, a deal completed in August 2005.

In 2006, Sun introduced the Sun StorageTek 5800 System, the first application-aware programmable storage solution. In 2008, Sun contributed the source code of the StorageTek 5800 System under the BSD license.

Sun announced the Sun Open Storage platform in 2008 built with open source technologies.

In late 2008 Sun announced the Sun Storage 7000 Unified Storage systems (codenamed Amber Road). Transparent placement of data in the systems' solid-state drives (SSD) and conventional hard drives was managed by ZFS to take advantage of the speed of SSDs and the economy of conventional hard disks.

Other storage products included the Sun Fire X4500 storage server and the SAM-QFS filesystem and storage management software.

High-performance computing

Sun marketed the Sun Constellation System for high-performance computing (HPC). Even before the introduction of the Sun Constellation System in 2007, Sun's products were in use in many of the TOP500 systems and supercomputing centers:

- Lustre was used by seven of the top 10 supercomputers in 2008, as well as in other industries that need high-performance storage: six major oil companies (including BP, Shell, and ExxonMobil), chip design (including Synopsys and Sony), and the movie industry (including Harry Potter and Spider-Man).

- Sun Fire X4500 was used by high energy physics supercomputers to run dCache

- Sun Grid Engine was a popular workload scheduler for clusters and computer farms

- Sun Visualization System allowed users of the TeraGrid to remotely access the 3D rendering capabilities of the Maverick system at the University of Texas at Austin

- Sun Modular Datacenter (Project Blackbox): two Sun MD S20 units were used by the Stanford Linear Accelerator Center

The Sun HPC ClusterTools product was a set of Message Passing Interface (MPI) libraries and tools for running parallel jobs on Solaris HPC clusters. Beginning with version 7.0, Sun switched from its own implementation of MPI to Open MPI, and donated engineering resources to the Open MPI project.

Sun was a participant in the OpenMP language committee. Sun Studio compilers and tools implemented the OpenMP specification for shared memory parallelization.

In 2006, Sun built the TSUBAME supercomputer, which was until June 2008 the fastest supercomputer in Asia. Sun built Ranger at the Texas Advanced Computing Center (TACC) in 2007. Ranger had a peak performance of over 500 TFLOPS, and was the 6th most powerful supercomputer on the TOP500 list in November 2008.

Sun announced an OpenSolaris distribution that integrated Sun's HPC products with others.

Staff

Notable Sun employees included John Gilmore, Whitfield Diffie, Radia Perlman, and Marc Tremblay.

Sun was an early advocate of Unix-based networked computing, promoting TCP/IP and especially NFS, as reflected in the company's motto "The Network Is The Computer", coined by John Gage. James Gosling led the team which developed the Java programming language. Jon Bosak led the creation of the XML specification at W3C.

Sun staff published articles on the company's blog site. Staff were encouraged to use the site to blog on any aspect of their work or personal life, with few restrictions placed on staff, other than commercially confidential material. Jonathan I. Schwartz was one of the first CEOs of large companies to regularly blog; his postings were frequently quoted and analyzed in the press. In 2005, Sun Microsystems was one of the first Fortune 500 companies that instituted a formal Social Media program.

Acquisition by Oracle

Logo used on hardware products by Oracle

Oracle Corporation agreed to acquire Sun in April 2009.

Sun's staff were asked to share anecdotes about their experiences at Sun. A web site containing videos, stories, and photographs from 27 years at Sun was made available on September 2, 2009.

In October 2009, Sun announced a second round of layoffs affecting thousands of employees, blamed partially on delays in approval of the merger.

The transaction was completed in early 2010.

In January 2011, Oracle agreed to pay $46 million to settle charges that it submitted false claims to US federal government agencies and paid "kickbacks" to systems integrators.

In February 2011, Sun's former Menlo Park, California, campus of about 1,000,000 square feet (93,000 m²) was sold, and it was announced that it would become headquarters for Facebook.

The sprawling facility, built around an enclosed courtyard, had been nicknamed "Sun Quentin". On September 1, 2011, Sun India legally became part of Oracle. This step had been delayed by legal issues in Indian court.