Special relativity is a physical theory that plays a fundamental role in the description of all physical phenomena, as long as gravitation is not significant. Many experiments played (and still play) an important role in its development and justification. The strength of the theory lies in its ability to correctly predict, to high precision, the outcome of an extremely diverse range of experiments. Many of those experiments are still being repeated with steadily increasing precision, and modern experiments focus on possible effects at the Planck scale and in the neutrino sector. Their results are consistent with the predictions of special relativity. Collections of various tests were given by Jakob Laub, Zhang, Mattingly, Clifford Will, and Roberts/Schleif.

Special relativity is restricted to flat spacetime, i.e., to all phenomena without significant influence of gravitation. The latter lies in the domain of general relativity and the corresponding tests of general relativity must be considered.

Experiments paving the way to relativity

The predominant theory of light in the 19th century was that of the luminiferous aether, a stationary medium in which light propagates in a manner analogous to the way sound propagates through air. By analogy, it follows that the speed of light is constant in all directions in the aether and is independent of the velocity of the source. Thus an observer moving relative to the aether must measure some sort of "aether wind" even as an observer moving relative to air measures an apparent wind.

First-order experiments

Beginning with the work of François Arago (1810), a series of optical experiments was conducted that should have given a positive result for magnitudes of first order in $v/c$ and thus should have demonstrated the relative motion of the aether. Yet the results were negative. An explanation was provided by Augustin Fresnel (1818) with the introduction of an auxiliary hypothesis, the so-called "dragging coefficient", according to which matter drags the aether along to a small extent. This coefficient was directly demonstrated by the Fizeau experiment (1851). It was later shown that all first-order optical experiments must give a negative result due to this coefficient. In addition, some electrostatic first-order experiments were conducted, again with negative results. More generally, Hendrik Lorentz (1892, 1895) introduced several new auxiliary variables for moving observers, demonstrating why all first-order optical and electrostatic experiments had produced null results. For example, Lorentz proposed a location variable by which electrostatic fields contract in the line of motion and another variable ("local time") by which the time coordinates for moving observers depend on their current location.

Second-order experiments

The stationary aether theory, however, would give positive results when the experiments are precise enough to measure magnitudes of second order in $v/c$ (i.e., of $v^2/c^2$). Albert A. Michelson conducted the first experiment of this kind in 1881, followed by the more sophisticated Michelson–Morley experiment in 1887. Two rays of light, traveling for some time in different directions, were brought to interfere, so that different orientations relative to the aether wind should lead to a displacement of the interference fringes. But the result was negative again. The way out of this dilemma was the proposal by George Francis FitzGerald (1889) and Lorentz (1892) that matter is contracted in the line of motion with respect to the aether (length contraction). That is, the older hypothesis of a contraction of electrostatic fields was extended to intermolecular forces. However, since there was no theoretical reason for that, the contraction hypothesis was considered ad hoc.
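To give a sense of the size of the second-order effect discussed above, the following minimal sketch evaluates the fringe shift that a stationary aether would predict when the interferometer is rotated by 90 degrees, using the standard second-order estimate $2L(v/c)^2/\lambda$. The arm length, wavelength, and speed are illustrative assumptions (values commonly quoted for the 1887 apparatus and Earth's orbital speed), not figures taken from this article.

```python
# Stationary-aether prediction for the Michelson-Morley fringe shift.
# All numerical inputs are illustrative assumptions, not values from the article.

C = 299_792_458.0  # speed of light in m/s (exact SI value)


def expected_fringe_shift(arm_length_m: float, wavelength_m: float, speed_m_s: float) -> float:
    """Fringe shift expected on rotating the interferometer by 90 degrees.

    To second order in v/c the aether model gives 2 * L * (v/c)**2 / wavelength.
    """
    beta = speed_m_s / C
    return 2.0 * arm_length_m * beta**2 / wavelength_m


# Assumed inputs: 11 m effective arm length, 500 nm light, 30 km/s orbital speed.
shift = expected_fringe_shift(arm_length_m=11.0, wavelength_m=500e-9, speed_m_s=30_000.0)
print(f"expected shift: {shift:.2f} fringes")
```

Under these assumptions the predicted shift is a sizeable fraction of a fringe, which is why the negative result was so significant.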

Besides the optical Michelson–Morley experiment, its electrodynamic equivalent, the Trouton–Noble experiment, was also conducted. It was intended to demonstrate that a moving condenser must be subjected to a torque. In addition, the experiments of Rayleigh and Brace were intended to measure some consequences of length contraction in the laboratory frame, for example the assumption that it would lead to birefringence. All of those experiments, however, led to negative results. (The Trouton–Rankine experiment conducted in 1908 also gave a negative result when measuring the influence of length contraction on an electromagnetic coil.)

To explain all experiments conducted before 1904, Lorentz was forced to again expand his theory by introducing the complete Lorentz transformation. Henri Poincaré declared in 1905 that the impossibility of demonstrating absolute motion (principle of relativity) is apparently a law of nature.

Refutations of complete aether drag

The idea that the aether might be completely dragged within or in the vicinity of Earth, by which the negative aether drift experiments could be explained, was refuted by a variety of experiments.

- Oliver Lodge (1893) found that rapidly whirling steel disks above and below a sensitive common path interferometric arrangement failed to produce a measurable fringe shift.

- Gustaf Hammar (1935) failed to find any evidence for aether dragging using a common-path interferometer, one arm of which was enclosed by a thick-walled pipe plugged with lead, while the other arm was free.

- The Sagnac effect showed that an aether wind caused by Earth drag cannot be demonstrated.

- The existence of the aberration of light was inconsistent with the aether drag hypothesis.

- The assumption that aether drag is proportional to mass and thus only occurs with respect to Earth as a whole was refuted by the Michelson–Gale–Pearson experiment, which demonstrated the Sagnac effect through Earth's motion.

Lodge expressed the paradoxical situation in which physicists found themselves as follows: "...at no practicable speed does ... matter [have] any appreciable viscous grip upon the ether. Atoms must be able to throw it into vibration, if they are oscillating or revolving at sufficient speed; otherwise they would not emit light or any kind of radiation; but in no case do they appear to drag it along, or to meet with resistance in any uniform motion through it."

Special relativity

Overview

Eventually, Albert Einstein (1905) drew the conclusion that the established theories and facts known at that time only form a logically coherent system when the concepts of space and time are subjected to a fundamental revision. For instance:

- Maxwell-Lorentz's electrodynamics (independence of the speed of light from the speed of the source),

- the negative aether drift experiments (no preferred reference frame),

- Moving magnet and conductor problem (only relative motion is relevant),

- the Fizeau experiment and the aberration of light (both implying modified velocity addition and no complete aether drag).

The result is special relativity theory, which is based on the constancy of the speed of light in all inertial frames of reference and the principle of relativity. Here, the Lorentz transformation is no longer a mere collection of auxiliary hypotheses but reflects a fundamental Lorentz symmetry and forms the basis of successful theories such as Quantum electrodynamics. Special relativity offers a large number of testable predictions, such as:

| Principle of relativity | Constancy of the speed of light | Time dilation |
|---|---|---|
| Any uniformly moving observer in an inertial frame cannot determine his "absolute" state of motion by a co-moving experimental arrangement. | In all inertial frames the measured speed of light is equal in all directions (isotropy), independent of the speed of the source, and cannot be reached by massive bodies. | The rate of a clock C (= any periodic process) traveling between two synchronized clocks A and B at rest in an inertial frame is retarded with respect to the two clocks. |

Other relativistic effects, such as length contraction, the Doppler effect, and aberration, as well as the experimental predictions of relativistic theories such as the Standard Model, can also be measured.

Fundamental experiments

The effects of special relativity can phenomenologically be derived from the following three fundamental experiments:

- Michelson–Morley experiment, by which the dependence of the speed of light on the direction of the measuring device can be tested. It establishes the relation between longitudinal and transverse lengths of moving bodies.

- Kennedy–Thorndike experiment, by which the dependence of the speed of light on the velocity of the measuring device can be tested. It establishes the relation between longitudinal lengths and the duration of time of moving bodies.

- Ives–Stilwell experiment, by which time dilation can be directly tested.

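From these three experiments and by using the Poincaré–Einstein synchronization, the complete Lorentz transformation follows, with $\gamma = 1/\sqrt{1 - v^2/c^2}$ being the Lorentz factor:

$$t' = \gamma\left(t - \frac{vx}{c^2}\right), \quad x' = \gamma\left(x - vt\right), \quad y' = y, \quad z' = z$$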

Besides the derivation of the Lorentz transformation, the combination of these experiments is also important because they can be interpreted in different ways when viewed individually. For example, isotropy experiments such as Michelson–Morley can be seen as a simple consequence of the relativity principle, according to which any inertially moving observer can consider himself as at rest. Therefore, by itself, the Michelson–Morley experiment is compatible with Galilean-invariant theories like emission theory or the complete aether drag hypothesis, which also contain some sort of relativity principle. However, when other experiments that exclude the Galilean-invariant theories are considered (i.e. the Ives–Stilwell experiment, various refutations of emission theories and refutations of complete aether dragging), Lorentz-invariant theories and thus special relativity are the only theories that remain viable.
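As a small numerical illustration of the Lorentz transformation referred to above, the sketch below applies the standard boost along the x axis to a single event and checks that the spacetime interval $(ct)^2 - x^2$ is unchanged. The function names and the sample event are hypothetical choices made for this example.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact SI value)


def lorentz_factor(v: float) -> float:
    """Lorentz factor gamma for a relative speed v (|v| < c)."""
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def boost_x(t: float, x: float, v: float) -> tuple[float, float]:
    """Transform event (t, x) into a frame moving with speed v along +x."""
    gamma = lorentz_factor(v)
    t_prime = gamma * (t - v * x / C**2)
    x_prime = gamma * (x - v * t)
    return t_prime, x_prime


def interval_squared(t: float, x: float) -> float:
    """Invariant interval (ct)^2 - x^2 for an event measured from the origin."""
    return (C * t) ** 2 - x**2


# Hypothetical sample event and boost speed, chosen only for illustration.
t, x = 2.0e-6, 150.0
v = 0.6 * C
t_prime, x_prime = boost_x(t, x, v)

print("gamma:", lorentz_factor(v))
print("interval before:", interval_squared(t, x))
print("interval after: ", interval_squared(t_prime, x_prime))
```

The two printed intervals agree up to floating-point rounding, which is the numerical counterpart of Lorentz symmetry for this one event.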

Constancy of the speed of light

Interferometers, resonators

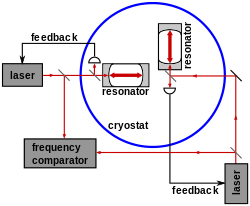

Modern variants of the Michelson–Morley and Kennedy–Thorndike experiments have been conducted in order to test the isotropy of the speed of light. In contrast to Michelson–Morley experiments, Kennedy–Thorndike experiments employ different arm lengths, and the evaluations last several months. In that way, the influence of different velocities during Earth's orbit around the Sun can be observed. Lasers, masers, and optical resonators are used, reducing the possibility of any anisotropy of the speed of light to the $10^{-17}$ level. In addition to terrestrial tests, Lunar Laser Ranging experiments have also been conducted as a variation of the Kennedy–Thorndike experiment.

Another type of isotropy experiment is the Mössbauer rotor experiments of the 1960s, by which the anisotropy of the Doppler effect on a rotating disc can be observed by using the Mössbauer effect (those experiments can also be utilized to measure time dilation, see below).

No dependence on source velocity or energy

Emission theories, according to which the speed of light depends on the velocity of the source, can conceivably explain the negative outcome of aether drift experiments. It was not until the mid-1960s that the constancy of the speed of light was definitively shown by experiment, since in 1965 J. G. Fox showed that the effects of the extinction theorem rendered the results of all earlier experiments inconclusive, and therefore compatible with both special relativity and emission theory. More recent experiments have definitely ruled out the emission model: the earliest were those of Filippas and Fox (1964), using moving sources of gamma rays, and Alväger et al. (1964), which demonstrated that photons did not acquire the speed of the high-speed decaying mesons that were their source. In addition, the de Sitter double star experiment (1913) was repeated by Brecher (1977) taking the extinction theorem into account, ruling out a source dependence as well.

Observations of gamma-ray bursts also demonstrated that the speed of light is independent of the frequency and energy of the light rays.

One-way speed of light

A series of one-way measurements were undertaken, all of them confirming the isotropy of the speed of light. However, only the two-way speed of light (from A to B back to A) can unambiguously be measured, since the one-way speed depends on the definition of simultaneity and therefore on the method of synchronization. The Einstein synchronization convention makes the one-way speed equal to the two-way speed. However, there are many models having isotropic two-way speed of light, in which the one-way speed is anisotropic by choosing different synchronization schemes. They are experimentally equivalent to special relativity because all of these models include effects like time dilation of moving clocks, that compensate any measurable anisotropy. However, of all models having isotropic two-way speed, only special relativity is acceptable for the overwhelming majority of physicists since all other synchronizations are much more complicated, and those other models (such as Lorentz ether theory) are based on extreme and implausible assumptions concerning some dynamical effects, which are aimed at hiding the "preferred frame" from observation.

Isotropy of mass, energy, and space

Clock-comparison experiments (periodic processes and frequencies can be considered as clocks) such as the Hughes–Drever experiments provide stringent tests of Lorentz invariance. They are not restricted to the photon sector, as Michelson–Morley experiments are, but directly determine any anisotropy of mass, energy, or space by measuring the ground state of nuclei. Upper limits on such anisotropies of $10^{-33}$ GeV have been established. Thus these experiments are among the most precise verifications of Lorentz invariance ever conducted.

Time dilation and length contraction

The transverse Doppler effect and consequently time dilation was directly observed for the first time in the Ives–Stilwell experiment (1938). In modern Ives–Stilwell experiments in heavy ion storage rings using saturation spectroscopy, the maximum measured deviation of time dilation from the relativistic prediction has been limited to $\leq 10^{-8}$. Other confirmations of time dilation include Mössbauer rotor experiments in which gamma rays were sent from the middle of a rotating disc to a receiver at the edge of the disc, so that the transverse Doppler effect can be evaluated by means of the Mössbauer effect. By measuring the lifetime of muons in the atmosphere and in particle accelerators, the time dilation of moving particles was also verified. On the other hand, the Hafele–Keating experiment confirmed the resolution of the twin paradox, i.e. that a clock moving from A to B and back to A is retarded with respect to the initial clock. However, in this experiment the effects of general relativity also play an essential role.
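The muon lifetime measurements mentioned above rest on the relation that a lifetime measured in the laboratory equals the Lorentz factor times the lifetime at rest. A minimal sketch follows; the rest lifetime is an approximate value and the muon speed is a hypothetical input chosen for illustration, neither taken from this article.

```python
import math

# Approximate mean lifetime of a muon at rest, in seconds (assumed input).
MUON_PROPER_LIFETIME_S = 2.197e-6


def lorentz_factor(beta: float) -> float:
    """Lorentz factor for a speed given as a fraction beta = v/c."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return 1.0 / math.sqrt(1.0 - beta**2)


def dilated_lifetime(proper_lifetime_s: float, beta: float) -> float:
    """Mean lifetime observed in the frame where the particle moves at beta."""
    return lorentz_factor(beta) * proper_lifetime_s


beta = 0.9994  # hypothetical muon speed as a fraction of c
lab_lifetime = dilated_lifetime(MUON_PROPER_LIFETIME_S, beta)
print(f"gamma = {lorentz_factor(beta):.2f}")
print(f"lifetime in the laboratory frame = {lab_lifetime * 1e6:.1f} microseconds")
```

With a speed this close to c, the laboratory lifetime is more than an order of magnitude longer than the rest lifetime, which is what allows fast muons to be observed over distances they could not otherwise cover.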

Direct confirmation of length contraction is hard to achieve in practice since the dimensions of the observed particles are vanishingly small. However, there are indirect confirmations; for example, the behavior of colliding heavy ions can only be explained if their increased density due to Lorentz contraction is considered. Contraction also leads to an increase in the intensity of the Coulomb field perpendicular to the direction of motion, the effects of which have already been observed. Consequently, both time dilation and length contraction must be considered when conducting experiments in particle accelerators.

Relativistic momentum and energy

Starting in 1901, a series of measurements was conducted with the aim of demonstrating the velocity dependence of the mass of electrons. The results did show such a dependency, but whether the precision was sufficient to distinguish between competing theories was disputed for a long time. Eventually, it was possible to definitely rule out all competing models except special relativity.

Today, special relativity's predictions are routinely confirmed in particle accelerators such as the Relativistic Heavy Ion Collider. For example, the increase of relativistic momentum and energy is not only precisely measured but also necessary to understand the behavior of cyclotrons, synchrotrons, and similar machines, by which particles are accelerated to near the speed of light.
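The increase of relativistic momentum and energy mentioned above follows from the relation $E^2 = (pc)^2 + (mc^2)^2$. The sketch below works in units of GeV, with momentum in GeV/c, and shows that the speed $v/c = pc/E$ stays below 1 however large the momentum becomes. The proton rest energy is an approximate value and the momenta are hypothetical inputs.

```python
import math

PROTON_REST_ENERGY_GEV = 0.938272  # approximate proton rest energy (assumed input)


def total_energy_gev(momentum_gev_c: float, rest_energy_gev: float) -> float:
    """Total energy from E^2 = (pc)^2 + (mc^2)^2, all quantities in GeV."""
    return math.hypot(momentum_gev_c, rest_energy_gev)


def speed_fraction(momentum_gev_c: float, rest_energy_gev: float) -> float:
    """Speed as a fraction of c, using beta = pc / E."""
    return momentum_gev_c / total_energy_gev(momentum_gev_c, rest_energy_gev)


for p in (0.1, 1.0, 10.0, 100.0):  # hypothetical proton momenta in GeV/c
    energy = total_energy_gev(p, PROTON_REST_ENERGY_GEV)
    beta = speed_fraction(p, PROTON_REST_ENERGY_GEV)
    newtonian = p / PROTON_REST_ENERGY_GEV  # v/c if momentum were simply m*v
    print(f"p={p:6.1f} GeV/c  E={energy:8.3f} GeV  v/c={beta:.6f}  Newtonian v/c={newtonian:.3f}")
```

At high momentum the Newtonian estimate exceeds the speed of light, while the relativistic value only approaches it, which is the behavior accelerator designs have to account for.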

Sagnac and Fizeau

Special relativity also predicts that two light rays traveling in opposite directions around a spinning closed path (e.g. a loop) require different flight times to come back to the moving emitter/receiver (this is a consequence of the independence of the speed of light from the velocity of the source, see above). This effect was actually observed and is called the Sagnac effect. Currently, the consideration of this effect is necessary for many experimental setups and for the correct functioning of GPS.
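For a rough sense of scale, the flight-time difference in the Sagnac effect for a loop in vacuum is, to first order in the rotation rate, $\Delta t = 4A\Omega/c^2$, where $A$ is the enclosed area and $\Omega$ the angular velocity about the loop normal. The sketch below evaluates this expression; the loop area, rotation rate, and wavelength are illustrative assumptions rather than parameters of any experiment named here.

```python
C = 299_792_458.0  # speed of light in m/s (exact SI value)


def sagnac_time_difference(area_m2: float, omega_rad_s: float) -> float:
    """First-order flight-time difference between counter-propagating beams."""
    return 4.0 * area_m2 * omega_rad_s / C**2


def sagnac_fringe_shift(area_m2: float, omega_rad_s: float, wavelength_m: float) -> float:
    """Time difference expressed as a fraction of one optical period."""
    return C * sagnac_time_difference(area_m2, omega_rad_s) / wavelength_m


# Assumed inputs: 1 m^2 loop, Earth's sidereal rotation rate, 633 nm light.
EARTH_ROTATION_RAD_S = 7.292115e-5
dt = sagnac_time_difference(area_m2=1.0, omega_rad_s=EARTH_ROTATION_RAD_S)
shift = sagnac_fringe_shift(1.0, EARTH_ROTATION_RAD_S, wavelength_m=633e-9)
print(f"time difference: {dt:.3e} s")
print(f"fringe shift:    {shift:.3e}")
```

The effect scales with the enclosed area, which is why measurements of Earth's rotation use large loops or many fiber turns.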

If such experiments are conducted in moving media (e.g. water, or glass optical fiber), it is also necessary to consider Fresnel's dragging coefficient as demonstrated by the Fizeau experiment. Although this effect was initially understood as giving evidence of a nearly stationary aether or a partial aether drag, it can easily be explained with special relativity by using the velocity composition law.
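The connection between the velocity composition law and Fresnel's dragging coefficient can be checked numerically. In the sketch below, light travels at c/n in the rest frame of a medium that itself moves at speed v; relativistic velocity addition gives $(c/n + v)/(1 + v/(nc))$, which to first order in v equals $c/n + v(1 - 1/n^2)$. The refractive index and flow speed are illustrative assumptions.

```python
C = 299_792_458.0  # speed of light in m/s (exact SI value)


def relativistic_light_speed_in_medium(n: float, v: float) -> float:
    """Lab-frame speed of light in a medium of index n moving at speed v."""
    u = C / n  # speed of light in the rest frame of the medium
    return (u + v) / (1.0 + u * v / C**2)


def fresnel_light_speed_in_medium(n: float, v: float) -> float:
    """Fresnel's first-order formula with dragging coefficient 1 - 1/n^2."""
    return C / n + v * (1.0 - 1.0 / n**2)


n_water = 1.333  # assumed refractive index of water
v_flow = 7.0     # hypothetical flow speed in m/s

u_rel = relativistic_light_speed_in_medium(n_water, v_flow)
u_fresnel = fresnel_light_speed_in_medium(n_water, v_flow)
print(f"speed gained from the flow (relativistic): {u_rel - C / n_water:.4f} m/s")
print(f"speed gained from the flow (Fresnel):      {u_fresnel - C / n_water:.4f} m/s")
```

For laboratory flow speeds the two expressions are indistinguishable, and the light gains only a fraction of the flow speed, which a simple Galilean sum would not predict.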

Test theories

Several test theories have been developed to assess a possible positive outcome in Lorentz violation experiments by adding certain parameters to the standard equations. These include the Robertson–Mansouri–Sexl framework (RMS) and the Standard-Model Extension (SME). RMS has three testable parameters with respect to length contraction and time dilation. From these, any anisotropy of the speed of light can be assessed. SME, on the other hand, includes many Lorentz violation parameters, not only for special relativity but for the Standard Model and general relativity as well; thus it has a much larger number of testable parameters.

Other modern tests

Due to developments concerning various models of quantum gravity in recent years, deviations from Lorentz invariance (possibly following from those models) are again the target of experimentalists. Because "local Lorentz invariance" (LLI) also holds in freely falling frames, experiments concerning the weak equivalence principle belong to this class of tests as well. The outcomes are analyzed by test theories (as mentioned above) like RMS or, more importantly, by SME.

- Besides the variations of the Michelson–Morley and Kennedy–Thorndike experiments already mentioned, Hughes–Drever experiments continue to be conducted for isotropy tests in the proton and neutron sector. To detect possible deviations in the electron sector, spin-polarized torsion balances are used.

- Time dilation is confirmed in heavy ion storage rings, such as the TSR at the MPIK, by observation of the Doppler effect of lithium, and those experiments are valid in the electron, proton, and photon sector.

- Other experiments use Penning traps to observe deviations of cyclotron motion and Larmor precession in electrostatic and magnetic fields.

- Possible deviations from CPT symmetry (whose violation represents a violation of Lorentz invariance as well) can be determined in experiments with neutral mesons, Penning traps and muons, see Antimatter Tests of Lorentz Violation.

- Astronomical tests are conducted in connection with the flight time of photons, where Lorentz-violating factors could cause anomalous dispersion and birefringence, making the propagation of photons depend on energy, frequency, or polarization.

- With respect to the threshold energy of distant astronomical objects, but also of terrestrial sources, Lorentz violations could lead to alterations in the standard values for the processes following from that energy, such as vacuum Cherenkov radiation, or modifications of synchrotron radiation.

- Neutrino oscillations (see Lorentz-violating neutrino oscillations) and the speed of neutrinos (see measurements of neutrino speed) are being investigated for possible Lorentz violations.

- Other candidates for astronomical observations are the Greisen–Zatsepin–Kuzmin limit and Airy disks. The latter are investigated to find possible deviations from Lorentz invariance that could drive the photons out of phase.

- Observations in the Higgs sector are under way.