In 1966, an early meta-research paper examined the statistical methods of 295 papers published in ten high-profile medical journals. It found that "in almost 73% of the reports read ... conclusions were drawn when the justification for these conclusions was invalid." Meta-research in the following decades found many methodological flaws, inefficiencies, and poor practices in research across numerous scientific fields. Many scientific studies could not be reproduced, particularly in medicine and the soft sciences. The term "replication crisis" was coined in the early 2010s as part of a growing awareness of the problem.

Measures have been implemented to address the issues revealed by metascience. These measures include the pre-registration of scientific studies and clinical trials as well as the founding of organizations such as CONSORT and the EQUATOR Network that issue guidelines for methodology and reporting. There are continuing efforts to reduce the misuse of statistics, to eliminate perverse incentives from academia, to improve the peer review process, to systematically collect data about the scholarly publication system, to combat bias in scientific literature, and to increase the overall quality and efficiency of the scientific process. Metascience is thus a major part of the methods underlying the Open Science movement.

History

In 1966, an early meta-research paper examined the statistical methods of 295 papers published in ten high-profile medical journals. It found that, "in almost 73% of the reports read ... conclusions were drawn when the justification for these conclusions was invalid." A paper in 1976 called for funding for meta-research: "Because the very nature of research on research, particularly if it is prospective, requires long periods of time, we recommend that independent, highly competent groups be established with ample, long term support to conduct and support retrospective and prospective research on the nature of scientific discovery". In 2005, John Ioannidis published a paper titled "Why Most Published Research Findings Are False", which argued that a majority of papers in the medical field produce conclusions that are wrong. The paper went on to become the most downloaded paper in the Public Library of Science and is considered foundational to the field of metascience. In a related study with Jeremy Howick and Despina Koletsi, Ioannidis showed that only a minority of medical interventions are supported by 'high quality' evidence according to the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach. Later meta-research identified widespread difficulty in replicating results in many scientific fields, including psychology and medicine. This problem was termed "the replication crisis". Metascience has grown as a reaction to the replication crisis and to concerns about waste in research.

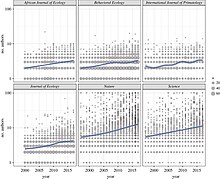

Many prominent publishers are interested in meta-research and in improving the quality of their publications. Top journals such as Science, The Lancet, and Nature provide ongoing coverage of meta-research and problems with reproducibility. In 2012, PLOS ONE launched a Reproducibility Initiative. In 2015, BioMed Central introduced a minimum-standards-of-reporting checklist to four titles.

The first international conference in the broad area of meta-research was the Research Waste/EQUATOR conference held in Edinburgh in 2015; the first international conference on peer review was the Peer Review Congress held in 1989. In 2016, the journal Research Integrity and Peer Review was launched. The journal's opening editorial called for "research that will increase our understanding and suggest potential solutions to issues related to peer review, study reporting, and research and publication ethics".

Fields and topics of meta-research

Metascience can be categorized into five major areas of interest: Methods, Reporting, Reproducibility, Evaluation, and Incentives. These correspond, respectively, with how to perform, communicate, verify, evaluate, and reward research.

Methods

Metascience seeks to identify poor research practices, such as biases in research, poor study design, and abuse of statistics, and to find methods to reduce them. Meta-research has identified numerous biases in scientific literature. Of particular note is the widespread misuse of p-values and abuse of statistical significance.
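A common way statistical significance is abused is to run many tests and report whichever one crosses p < 0.05. The Python sketch below is an illustration chosen here, not a method taken from the studies above: it computes the probability of at least one false positive among k independent tests of true null hypotheses, 1 - (1 - α)^k, and shows that the Bonferroni-adjusted per-test threshold α/k keeps that probability at or below α.

```python
def family_wise_error_rate(alpha: float, k: int) -> float:
    """Probability of at least one p < alpha among k independent tests
    when every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** k


def bonferroni_threshold(alpha: float, k: int) -> float:
    """Per-test threshold that bounds the family-wise error rate by alpha."""
    return alpha / k


if __name__ == "__main__":
    for k in (1, 5, 20, 100):
        uncorrected = family_wise_error_rate(0.05, k)
        corrected = family_wise_error_rate(bonferroni_threshold(0.05, k), k)
        print(f"k={k:3d}  uncorrected={uncorrected:.3f}  bonferroni={corrected:.3f}")
```

With 20 independent tests at α = 0.05, the chance of at least one spurious "significant" result is about 64%.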

Scientific data science

Scientific data science is the use of data science to analyse research papers. It encompasses both qualitative and quantitative methods. Research in scientific data science includes fraud detection and citation network analysis.
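As one concrete example of automated error and fraud screening (the technique is chosen here for illustration and is not named in the text above), the GRIM test checks whether a reported mean of integer-valued data, such as single-item Likert responses, is arithmetically possible for the reported sample size. A minimal Python sketch, assuming integer data and conventional round-half-up reporting:

```python
from decimal import Decimal, ROUND_HALF_UP


def round_half_up(x: Decimal, decimals: int) -> Decimal:
    quantum = Decimal(1).scaleb(-decimals)  # e.g. Decimal("0.01") for 2 decimals
    return x.quantize(quantum, rounding=ROUND_HALF_UP)


def grim_consistent(reported_mean: str, n: int) -> bool:
    """Return True if some integer total over n items rounds to the reported mean.

    The mean is passed as a string (e.g. "5.18") so that the number of
    reported decimal places is preserved exactly.
    """
    mean = Decimal(reported_mean)
    decimals = -mean.as_tuple().exponent
    # Only integer totals within one unit of mean * n can round to the mean.
    centre = int(mean * n)
    for total in range(centre - 1, centre + 2):
        if round_half_up(Decimal(total) / Decimal(n), decimals) == mean:
            return True
    return False


print(grim_consistent("5.18", 28))  # a possible mean for n = 28
print(grim_consistent("5.19", 28))  # no integer total over 28 items gives this
```

The check is only informative when the sample is smaller than 10^d for d reported decimal places; with larger samples almost every mean is attainable.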

Journalology

Journalology, also known as publication science, is the scholarly study of all aspects of the academic publishing process. The field seeks to improve the quality of scholarly research by implementing evidence-based practices in academic publishing. The term "journalology" was coined by Stephen Lock, the former editor-in-chief of The BMJ. The first Peer Review Congress, held in 1989 in Chicago, Illinois, is considered a pivotal moment in the founding of journalology as a distinct field. The field of journalology has been influential in pushing for study pre-registration in science, particularly in clinical trials. Clinical-trial registration is now expected in most countries.

Reporting

Meta-research has identified poor practices in reporting, explaining, disseminating and popularizing research, particularly within the social and health sciences. Poor reporting makes it difficult to accurately interpret the results of scientific studies, to replicate studies, and to identify biases and conflicts of interest of the authors. Solutions include the implementation of reporting standards and greater transparency in scientific studies (including better requirements for disclosure of conflicts of interest). There is an attempt to standardize reporting of data and methodology through the creation of guidelines by reporting organizations such as CONSORT and the larger EQUATOR Network.

Reproducibility

The replication crisis is an ongoing methodological crisis in which it has been found that many scientific studies are difficult or impossible to replicate. While the crisis has its roots in the meta-research of the mid- to late 20th century, the phrase "replication crisis" was not coined until the early 2010s as part of a growing awareness of the problem. The replication crisis has been closely studied in psychology (especially social psychology) and medicine, including cancer research. Replication is an essential part of the scientific process, and the widespread failure of replication puts into question the reliability of affected fields.

Moreover, replication studies, whether they succeed or fail, are considered less influential than original research and are less likely to be published in many fields. This discourages attempts to replicate studies, as well as the reporting of such attempts.

Evaluation and incentives

Metascience seeks to create a scientific foundation for peer review. Meta-research evaluates peer review systems including pre-publication peer review, post-publication peer review, and open peer review. It also seeks to develop better research funding criteria.

Metascience seeks to promote better research through better incentive systems. This includes studying the accuracy, effectiveness, costs, and benefits of different approaches to ranking and evaluating research and those who perform it. Critics argue that perverse incentives have created a publish-or-perish environment in academia which promotes the production of junk science, low quality research, and false positives. According to Brian Nosek, "The problem that we face is that the incentive system is focused almost entirely on getting research published, rather than on getting research right." Proponents of reform seek to structure the incentive system to favor higher-quality results, for example by judging quality on the basis of narrative expert evaluations ("rather than [only or mainly] indices"), institutional evaluation criteria, guaranteed transparency, and professional standards.

Contributorship

Studies have proposed machine-readable standards and (a taxonomy of) badges for science publication management systems that focus on contributorship (who has contributed what, and how much of the research labor) rather than on the traditional concept of plain authorship (who was involved in any way in the creation of a publication). A study pointed out one of the problems associated with the ongoing neglect of differentiated contributions: it found that "the number of publications has ceased to be a good metric as a result of longer author lists, shorter papers, and surging publication numbers".
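The effect of longer author lists on publication counts can be made concrete by comparing whole counting with contribution-weighted (fractional) counting, a common scientometric alternative chosen here for illustration. The Python sketch below uses a hypothetical machine-readable contributorship record in which each paper lists contributors with a self-declared share of the work; the field names and shares are invented and do not follow any particular standard.

```python
from collections import defaultdict

# Hypothetical records: contributor -> declared share of the research labor.
papers = [
    {"id": "p1", "contributions": {"ana": 0.7, "ben": 0.3}},
    {"id": "p2", "contributions": {"ana": 0.1, "ben": 0.1, "cho": 0.8}},
    {"id": "p3", "contributions": {"ben": 0.25, "cho": 0.25, "dev": 0.25, "eli": 0.25}},
]


def whole_counts(records):
    """Plain authorship: every listed contributor gets one full paper."""
    counts = defaultdict(int)
    for paper in records:
        for person in paper["contributions"]:
            counts[person] += 1
    return dict(counts)


def contribution_weighted_counts(records):
    """Contributorship: each paper distributes exactly one unit of credit
    in proportion to the declared shares."""
    credit = defaultdict(float)
    for paper in records:
        total = sum(paper["contributions"].values())
        for person, share in paper["contributions"].items():
            credit[person] += share / total
    return dict(credit)


print(whole_counts(papers))
print({k: round(v, 2) for k, v in contribution_weighted_counts(papers).items()})
```

Under whole counting "ben" appears most productive; weighting by declared contribution ranks "cho" first, and the total credit always equals the number of papers.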

Assessment factors

Factors other than a submission's merits can substantially influence peer reviewers' evaluations. Some such factors may nonetheless be legitimate, such as track records regarding the veracity of a researcher's prior publications and their alignment with public interests. Nevertheless, evaluation systems, including those of peer review, may largely lack mechanisms and criteria that are effectively oriented towards merit, real-world positive impact, progress and public usefulness, as opposed to analytical indicators such as citation counts or altmetrics, even though such indicators can partially reflect those ends. Rethinking the academic reward structure "to offer more formal recognition for intermediate products, such as data" could have positive impacts and reduce data withholding.

Recognition of training

A commentary noted that academic rankings do not consider where (country and institute) the respective researchers were trained.

Scientometrics

Scientometrics concerns itself with measuring bibliographic data in scientific publications. Major research issues include the measurement of the impact of research papers and academic journals, the understanding of scientific citations, and the use of such measurements in policy and management contexts. Studies suggest that "metrics used to measure academic success, such as the number of publications, citation number, and impact factor, have not changed for decades" and have to some degree "ceased" to be good measures, leading to issues such as "overproduction, unnecessary fragmentations, overselling, predatory journals (pay and publish), clever plagiarism, and deliberate obfuscation of scientific results so as to sell and oversell".
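As an example of one of the long-unchanged metrics mentioned above, the two-year journal impact factor for a year Y divides the citations received in Y by items the journal published in Y-1 and Y-2 by the number of citable items it published in those two years. A minimal Python sketch with made-up counts:

```python
def two_year_impact_factor(citations_in_year: dict, citable_items: dict, year: int) -> float:
    """Two-year impact factor for `year`.

    citations_in_year[y]: citations received during `year` by items published in y
    citable_items[y]: number of citable items (articles, reviews) published in y
    """
    prior_years = (year - 1, year - 2)
    cites = sum(citations_in_year.get(y, 0) for y in prior_years)
    items = sum(citable_items.get(y, 0) for y in prior_years)
    if items == 0:
        raise ValueError("no citable items in the two prior years")
    return cites / items


# Hypothetical journal: citations received in 2023 by its 2021 and 2022 papers.
citations_2023 = {2021: 450, 2022: 330}
items = {2021: 120, 2022: 140}
print(round(two_year_impact_factor(citations_2023, items, 2023), 2))  # 3.0
```

Because the numerator pools all citations, a few highly cited papers can dominate the value, which is one reason the metric is a poor proxy for the quality of any individual paper.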

Novel tools in this area include systems that quantify how much a cited node informs the citing node. This can be used to convert unweighted citation networks into weighted ones and then to assess importance, deriving "impact metrics for the various entities involved, like the publications, authors etc", as well as, among other uses, for search engines and recommendation systems.
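The two-step idea of weighting a citation network and then assessing importance can be sketched with PageRank run on weighted edges. PageRank is swapped in here as a familiar importance measure; the text does not say which algorithm such tools use. In the Python sketch below, each edge weight stands for a hypothetical score of how much the cited paper informs the citing paper; producing those scores is the hard part and is simply assumed.

```python
def weighted_pagerank(edges, damping=0.85, iterations=200, tol=1e-12):
    """edges: dict mapping (citing, cited) -> weight > 0.

    Credit flows from a citing paper to the papers it cites, in proportion
    to edge weight. Returns a dict node -> score; scores sum to 1.
    """
    nodes = sorted({node for edge in edges for node in edge})
    out_weight = {node: 0.0 for node in nodes}
    for (src, _dst), w in edges.items():
        if w <= 0:
            raise ValueError("edge weights must be positive")
        out_weight[src] += w
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Papers that cite nothing (dangling nodes) spread their score evenly.
        dangling = sum(rank[v] for v in nodes if out_weight[v] == 0)
        new = {v: (1 - damping) / n + damping * dangling / n for v in nodes}
        for (src, dst), w in edges.items():
            new[dst] += damping * rank[src] * w / out_weight[src]
        converged = sum(abs(new[v] - rank[v]) for v in nodes) < tol
        rank = new
        if converged:
            break
    return rank


# Hypothetical weights: how strongly each citing paper draws on each cited one.
citations = {
    ("C", "A"): 0.9, ("C", "B"): 0.1,
    ("D", "A"): 0.5, ("D", "B"): 0.5,
    ("E", "B"): 0.2, ("E", "C"): 0.8,
}
scores = weighted_pagerank(citations)
for paper, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(paper, round(score, 3))
```

With uniform weights (an unweighted network), B would outrank A because three papers cite it rather than two; the weights shift credit toward A because the citing papers draw on it more heavily.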

Science governance

Science funding and science governance can also be explored and informed by metascience.

Incentives

Various interventions such as prioritization can be important. For instance, the concept of differential technological development refers to deliberately developing technologies – e.g. control-, safety- and policy-technologies versus risky biotechnologies – at different precautionary paces to decrease risks, mainly global catastrophic risk, by influencing the sequence in which technologies are developed. Relying only on established forms of legislation and incentives to ensure the right outcomes may not be adequate, as these may often be too slow or inappropriate.

Other incentives to govern science and related processes, including via metascience-based reforms, may include ensuring accountability to the public (e.g. through the accessibility of research, especially publicly funded research, or through research seriously addressing topics of public interest), increasing the qualified productive scientific workforce, improving the efficiency of science to improve problem-solving in general, and ensuring that unambiguous societal needs based on solid scientific evidence, such as those concerning human physiology, are adequately prioritized and addressed. Such interventions, incentives and intervention designs can be subjects of metascience.

Science funding and awards

Scientific awards are one category of science incentives. Metascience can explore existing and hypothetical systems of science awards. For instance, one study found that work honored by Nobel prizes clusters in only a few scientific fields: of the 114 domains into which science can be divided under the DC2 classification system, only 36 have received at least one Nobel prize, and of the 849 domains under the DC3 system, only 71 have. Five of the 114 domains were shown to make up over half of the Nobel prizes awarded 1995–2017 (particle physics [14%], cell biology [12.1%], atomic physics [10.9%], neuroscience [10.1%], molecular chemistry [5.3%]).
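The proportions behind that clustering can be checked with simple arithmetic. The Python snippet below only reuses the figures given in the paragraph above, reading "36/71 ... of the 114/849 domains" as 36 of 114 DC2 domains and 71 of 849 DC3 domains.

```python
# Figures quoted above (Nobel prizes awarded 1995-2017).
domains_with_prize = {"DC2": (36, 114), "DC3": (71, 849)}
top_five_share = {
    "particle physics": 14.0,
    "cell biology": 12.1,
    "atomic physics": 10.9,
    "neuroscience": 10.1,
    "molecular chemistry": 5.3,
}

for level, (with_prize, total) in domains_with_prize.items():
    print(f"{level}: {with_prize}/{total} = {with_prize / total:.1%} of domains have a prize")

print(f"top five DC2 domains: {sum(top_five_share.values()):.1f}% of prizes")
```

Roughly a third of DC2 domains and fewer than a tenth of DC3 domains contain any Nobel-recognized work, while five domains account for about 52% of the prizes.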

A study found that a model in which policy-makers delegate responsibility for knowledge production to science (a centralized, authority-based, top-down approach) and provide appropriate funding, with science subsequently somehow delivering "reliable and useful knowledge to society", is too simple.

Measurements show that the allocation of biomedical resources can be more strongly correlated with previous allocations and research than with the burden of disease.

A study suggests that "[i]f peer review is maintained as the primary mechanism of arbitration in the competitive selection of research reports and funding, then the scientific community needs to make sure it is not arbitrary".

Studies indicate that there is a need to "reconsider how we measure success".

Funding data

Funding information from grant databases and funding acknowledgment sections can be a source of data for scientometric studies, e.g. for investigating or recognizing the impact of funding entities on the development of science and technology.

Research questions and coordination

Risk governance

Science communication and public use

It has been argued that "science has two fundamental attributes that underpin its value as a global public good: that knowledge claims and the evidence on which they are based are made openly available to scrutiny, and that the results of scientific research are communicated promptly and efficiently". Metascientific research is exploring topics of science communication such as media coverage of science, science journalism and online communication of results by science educators and scientists. A study found that the "main incentive academics are offered for using social media is amplification" and argued for "moving towards an institutional culture that focuses more on how these [or such] platforms can facilitate real engagement with research". Science communication may also involve the communication of societal needs, concerns and requests to scientists.

Alternative metrics tools

Alternative metrics tools can be used not only to help with assessment (of performance and impact) and findability, but also to aggregate many of the public discussions about a scientific paper, such as posts on social media like Reddit, citations on Wikipedia, and reports about the study in the news media, which can in turn be analyzed in metascience or provided to and used by related tools. In terms of assessment and findability, altmetrics rate publications' performance or impact by the interactions they receive through social media or other online platforms, which can, for example, be used to sort recent studies by measured impact, even before other studies have cited them. The specific procedures of established altmetrics are not transparent, and the algorithms used cannot be customized or altered by the user as open-source software can. A study has described various limitations of altmetrics and points "toward avenues for continued research and development". Altmetrics are also of limited use as a primary tool for researchers to find constructive feedback they have received.
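The transparency point can be illustrated with an attention score whose formula is fully visible and whose weights the user chooses. The Python sketch below is hypothetical: the source names, default weights and log damping are placeholders chosen for the example, not those of any established altmetrics provider.

```python
import math
from typing import Optional

# Hypothetical default weights per source; a user can override any of them.
DEFAULT_WEIGHTS = {"news": 8.0, "wikipedia": 3.0, "blogs": 5.0, "reddit": 1.0}


def attention_score(mentions: dict, weights: Optional[dict] = None) -> float:
    """Weighted sum of log-damped mention counts per source.

    log1p damping keeps a single viral source from dominating the score;
    sources without a weight contribute nothing.
    """
    w = {**DEFAULT_WEIGHTS, **(weights or {})}
    return sum(w.get(source, 0.0) * math.log1p(count) for source, count in mentions.items())


paper = {"news": 2, "wikipedia": 1, "reddit": 40}
print(round(attention_score(paper), 2))
print(round(attention_score(paper, {"reddit": 0.0}), 2))  # ignore reddit entirely
```

Because every weight is an explicit parameter, two users can rank the same set of studies differently and still reproduce each other's numbers.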

Societal implications and applications

It has been suggested that it may benefit science if "intellectual exchange—particularly regarding the societal implications and applications of science and technology—are better appreciated and incentivized in the future".

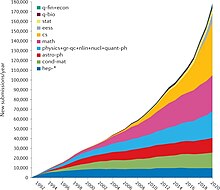

Knowledge integration

Primary studies "without context, comparison or summary are ultimately of limited value" and various types of research syntheses and summaries integrate primary studies. Progress in key social-ecological challenges of the global environmental agenda is "hampered by a lack of integration and synthesis of existing scientific evidence", with a "fast-increasing volume of data", compartmentalized information and generally unmet evidence synthesis challenges. According to Khalil, researchers are facing the problem of too many papers – e.g. in March 2014 more than 8,000 papers were submitted to arXiv – and to "keep up with the huge amount of literature, researchers use reference manager software, they make summaries and notes, and they rely on review papers to provide an overview of a particular topic". He notes that review papers are usually (only) "for topics in which many papers were written already, and they can get outdated quickly" and suggests "wiki-review papers" that are continuously updated with new studies on a topic, summarize many studies' results, and suggest future research. A study suggests that if a scientific publication is cited in a Wikipedia article, this could potentially be considered an indicator of some form of impact for that publication, for example because it may, over time, indicate that the reference has contributed to a high-level summary of the given topic.

Science journalism

Science journalists play an important role in the scientific ecosystem and in science communication to the public and need to "know how to use, relevant information when deciding whether to trust a research finding, and whether and how to report on it", vetting the findings that get transmitted to the public.

Science education

Some studies investigate science education, e.g. teaching about selected scientific controversies, the historical discovery processes behind major scientific conclusions, and common scientific misconceptions. Education can also be a topic more generally, such as how to improve the quality of scientific outputs and reduce the time needed before scientific work or how to enlarge and retain various scientific workforces.

Science misconceptions and anti-science attitudes

Many students have misconceptions about what science is and how it works. Anti-science attitudes and beliefs are also a subject of research. Hotez suggests antiscience "has emerged as a dominant and highly lethal force, and one that threatens global security", and that there is a need for "new infrastructure" that mitigates it.

Evolution of sciences

Scientific practice

Metascience can investigate how scientific processes evolve over time. A study found that teams are growing in size, "increasing by an average of 17% per decade".

It was found that prevalent forms of non-open-access publication and the prices charged by many conventional journals (even for publicly funded papers) are unwarranted, unnecessary or suboptimal, and detrimental barriers to scientific progress. Open access can save considerable financial resources, which could be used otherwise, and level the playing field for researchers in developing countries. There are substantial expenses for subscriptions, for gaining access to specific studies, and for article processing charges. Paywall: The Business of Scholarship is a documentary on such issues.

Another topic is the established styles of scientific communication (e.g. long text-form studies and reviews) and scientific publishing practices; there are concerns about a "glacial pace" of conventional publishing. The use of preprint servers to publish study drafts early is increasing, and open peer review, new tools to screen studies, and improved matching of submitted manuscripts to reviewers are among the proposals to speed up publication.

Science overall and intrafield developments

Studies have various kinds of metadata which can be utilized, complemented and made accessible in useful ways. OpenAlex is a free online index of over 200 million scientific documents that integrates and provides metadata such as sources, citations, author information, scientific fields and research topics. Its API and open source website can be used for metascience, scientometrics and novel tools that query this semantic web of papers. Another project under development, Scholia, uses metadata of scientific publications for various visualizations and aggregation features such as providing a simple user interface summarizing literature about a specific feature of the SARS-CoV-2 virus using Wikidata's "main subject" property.
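As a sketch of how OpenAlex's API could feed a metascience analysis, the Python code below builds a query against the public `https://api.openalex.org/works` endpoint and tallies works per publication year from a response. The `search`, `filter` (with `from_publication_date`) and `per-page` parameters and the `results` and `publication_year` response fields reflect our understanding of the OpenAlex API and should be checked against its current documentation. The parsing is demonstrated on a hand-made response so the example runs without network access.

```python
import json
from collections import Counter
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "https://api.openalex.org/works"


def build_query(search: str, from_year: int, per_page: int = 25) -> str:
    """Full-text search for works published on or after 1 January of from_year."""
    params = {
        "search": search,
        "filter": f"from_publication_date:{from_year}-01-01",
        "per-page": per_page,
    }
    return f"{BASE_URL}?{urlencode(params)}"


def works_per_year(response: dict) -> Counter:
    """Count the works in one page of results by publication year."""
    return Counter(work.get("publication_year") for work in response.get("results", []))


def fetch(url: str) -> dict:
    """Network call; not exercised in the offline example below."""
    with urlopen(url, timeout=30) as resp:
        return json.load(resp)


# Hand-made stand-in for one page of API results.
sample = {"results": [
    {"publication_year": 2021, "cited_by_count": 10},
    {"publication_year": 2021, "cited_by_count": 3},
    {"publication_year": 2022, "cited_by_count": 0},
]}
print(build_query("replication crisis", 2015))
print(works_per_year(sample))
```

A real analysis would page through all results (or use the API's aggregation features) rather than count a single page.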

Subject-level resolutions

Beyond metadata explicitly assigned to studies by humans, natural language processing and AI can be used to assign research publications to topics. One study investigating the impact of science awards used this approach to associate a paper's text (not just keywords) with the linguistic content of Wikipedia's scientific topic pages ("pages are created and updated by scientists and users through crowdsourcing"), creating meaningful and plausible classifications of high-fidelity scientific topics for further analysis or navigability.

Growth or stagnation of science overall

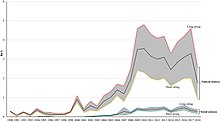

Metascience research is investigating the growth of science overall, using, e.g., data on the number of publications in bibliographic databases. A study found that segments with different growth rates appear related to phases of "economic (e.g., industrialization)" (money is considered a necessary input to the science system) "and/or political developments (e.g., Second World War)". It also confirmed a recent exponential growth in the volume of scientific literature and calculated an average doubling period of 17.3 years.
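A doubling period translates directly into an annual growth rate: if output doubles every T years, it grows by a factor of 2^(1/T) per year. The Python check below applies this to the 17.3-year figure, assuming smooth exponential growth.

```python
import math


def doubling_time(rate: float) -> float:
    """Doubling time in years implied by a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


doubling_years = 17.3
annual_growth = 2 ** (1 / doubling_years) - 1
print(f"annual growth: {annual_growth:.2%}")  # about 4.09%
print(f"round trip: {doubling_time(annual_growth):.1f} years")
```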

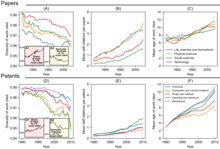

However, others have pointed out that it is difficult to measure scientific progress in meaningful ways, partly because it is hard to accurately evaluate how important any given scientific discovery is. A variety of perspectives on the trajectories of science overall (impact, number of major discoveries, etc.) have been described in books and articles, including that science is becoming harder (per dollar or hour spent), that if science is "slowing today, it is because science has remained too focused on established fields", that papers and patents are increasingly less likely to be "disruptive" in terms of breaking with the past as measured by the "CD index", and that there is a great stagnation, possibly as part of a larger trend, whereby e.g. "things haven't changed nearly as much since the 1970s" when excluding the computer and the Internet.
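The CD index scores a focal paper by how later work cites it. As it is commonly defined, each later paper i that cites the focal paper or any of its references contributes -2·f_i·b_i + f_i, where f_i = 1 if i cites the focal paper and b_i = 1 if i cites at least one of the focal paper's references; the index is the mean of these contributions over all n such papers. It ranges from -1 (consolidating: later work cites the paper together with its predecessors) to +1 (disruptive: later work cites the paper but not its predecessors). The Python sketch below implements that definition on toy data and ignores the citation time window used in published analyses.

```python
def cd_index(focal: str, references: set, citations: dict) -> float:
    """CD index of `focal`.

    references: set of papers cited by the focal paper.
    citations: mapping from each later paper to the set of papers it cites.
    """
    total = 0
    n = 0
    for paper, cited in citations.items():
        if paper == focal:
            continue
        f = 1 if focal in cited else 0          # cites the focal paper
        b = 1 if cited & references else 0      # cites any of its references
        if f or b:                              # only papers in the focal "neighborhood" count
            n += 1
            total += -2 * f * b + f
    if n == 0:
        raise ValueError("no later paper cites the focal paper or its references")
    return total / n


focal_refs = {"R1", "R2"}
later = {
    "P1": {"F"},        # cites only the focal paper: +1
    "P2": {"F", "R1"},  # cites focal paper and a reference: -1
    "P3": {"R2"},       # cites only a reference: 0
    "P4": {"F"},        # +1
    "P5": {"X"},        # unrelated, excluded from n
}
print(cd_index("F", focal_refs, later))  # 0.25
```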

A better understanding of potential slowdowns, as indicated by some measures, could be a major opportunity to improve humanity's future. For example, an emphasis on citations in the measurement of scientific productivity, information overload, reliance on a narrower set of existing knowledge (which may include narrow specialization and related contemporary practices) as measured by three "use of previous knowledge" indicators, and risk-averse funding structures may have steered research "toward incremental science and away from exploratory projects that are more likely to fail". The study that introduced the "CD index" suggests that the overall number of papers has risen while the total number of "highly disruptive" papers, as measured by the index, has not (notably, the 1998 discovery of the accelerating expansion of the universe has a CD index of 0). Its results also suggest scientists and inventors "may be struggling to keep up with the pace of knowledge expansion." Various ways of measuring the "novelty" of studies (novelty metrics) have been proposed to counterbalance a potential anti-novelty bias, such as textual analysis or measuring whether a study makes first-time-ever combinations of referenced journals, taking into account the difficulty of those combinations. Other approaches include proactively funding risky projects.
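The novelty metric based on first-time combinations of referenced journals can be sketched as follows. This simplified Python version counts the journal pairs in a paper's reference list that never appeared together in any earlier paper's references; the measure described above also takes the difficulty of each combination into account, which this sketch omits. All journal labels and papers are hypothetical.

```python
from itertools import combinations


def journal_pairs(ref_journals):
    """Unordered pairs of distinct journals cited together by one paper."""
    return {frozenset(p) for p in combinations(sorted(set(ref_journals)), 2)}


def novelty_scores(papers):
    """papers: list of (paper_id, referenced_journals) in publication order.

    Returns paper_id -> number of journal pairs combined for the first time.
    The earliest papers have no history here; a real analysis would first
    load a baseline window of earlier literature into `seen`.
    """
    seen = set()
    scores = {}
    for paper_id, refs in papers:
        pairs = journal_pairs(refs)
        scores[paper_id] = len(pairs - seen)
        seen |= pairs
    return scores


history = [
    ("p1", ["A", "B", "C"]),
    ("p2", ["B", "C"]),
    ("p3", ["C", "D"]),
    ("p4", ["A", "D", "B"]),
]
print(novelty_scores(history))
```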

Topic mapping

Science maps could show the main interrelated topics within a certain scientific domain, their change over time, and their key actors (researchers, institutions, journals). They may help find factors that determine the emergence of new scientific fields and the development of interdisciplinary areas, and could be relevant for science policy purposes. Theories of scientific change could guide "the exploration and interpretation of visualized intellectual structures and dynamic patterns". The maps can show the intellectual, social or conceptual structure of a research field. Beyond visual maps, expert survey-based studies and similar approaches could identify understudied or neglected societally important areas, topic-level problems (such as stigma or dogma), or potential misprioritizations. Examples include studies about policy in relation to public health, and studies of the social science of climate change mitigation, on which, it has been estimated, only 0.12% of all funding for climate-related research is spent, even though the most urgent puzzle at the current juncture is working out how to mitigate climate change, whereas the natural science of climate change is already well established.

There are also studies that map a scientific field or a topic such as the study of the use of research evidence in policy and practice, partly using surveys.

Controversies, current debates and disagreement

Some research investigates scientific controversies and may identify currently ongoing major debates (e.g. open questions) and disagreement between scientists or studies. One study suggests the level of disagreement was highest in the social sciences and humanities (0.61%), followed by biomedical and health sciences (0.41%), life and earth sciences (0.29%), physical sciences and engineering (0.15%), and mathematics and computer science (0.06%). Such research may also show where disagreements lie, especially if they cluster, including visually, for example with cluster diagrams.

Challenges of interpretation of pooled results

Studies about a specific research question or research topic are often reviewed in the form of higher-level overviews in which results from various studies are integrated, compared, critically analyzed and interpreted. Examples of such works are scientific reviews and meta-analyses. These and related practices face various challenges and are a subject of metascience.

Various issues with the included or available studies, such as heterogeneity in the methods used, may lead to faulty conclusions in a meta-analysis.
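Heterogeneity is usually quantified before results are pooled. The Python sketch below shows one standard approach, inverse-variance fixed-effect pooling with Cochran's Q and the I² statistic; the effect sizes and variances are invented to illustrate a case where a single pooled estimate would hide substantial disagreement between studies.

```python
def fixed_effect_meta(effects, variances):
    """Inverse-variance fixed-effect pooling with Cochran's Q and I^2."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    se = (1.0 / sum(weights)) ** 0.5
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to between-study heterogeneity.
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return {"pooled": pooled, "se": se, "Q": q, "I2": i_squared}


# Hypothetical standardized mean differences from five studies.
effects = [0.10, 0.15, 0.80, 0.05, 0.90]
variances = [0.01, 0.02, 0.02, 0.01, 0.03]
result = fixed_effect_meta(effects, variances)
print({k: round(v, 3) for k, v in result.items()})
```

Here I² comes out near 0.89, so most of the observed variation reflects real differences between studies rather than sampling error; a single pooled effect of about 0.28 would misrepresent two distinct clusters of results.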

Knowledge integration and living documents

Various problems require swift integration of new and existing science-based knowledge. Settings with a large number of loosely related projects and initiatives especially benefit from a common ground or "commons".

Evidence synthesis can be applied to important and, notably, both relatively urgent and certain global challenges: "climate change, energy transitions, biodiversity loss, antimicrobial resistance, poverty eradication and so on". It was suggested that a better system would keep summaries of research evidence up to date via living systematic reviews, e.g. as living documents. The number of scientific papers and data (or information and online knowledge) has risen substantially, and the number of published academic systematic reviews has risen from "around 6,000 in 2011 to more than 45,000 in 2021". An evidence-based approach is important for progress in science, policy, medical and other practices. For example, meta-analyses can quantify what is known and identify what is not yet known, and can place "truly innovative and highly interdisciplinary ideas" into the context of established knowledge, which may enhance their impact.
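A living review keeps its summary current as studies arrive. One simple way to support that, assumed here for illustration, is to store the running sums that an inverse-variance fixed-effect estimate needs, so that each new study updates the pooled result without recomputing from scratch. The class and data below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LivingSummary:
    """Running inverse-variance fixed-effect estimate that can be updated."""
    sum_w: float = 0.0
    sum_wy: float = 0.0
    n_studies: int = 0

    def add_study(self, effect: float, variance: float) -> None:
        if variance <= 0:
            raise ValueError("variance must be positive")
        w = 1.0 / variance
        self.sum_w += w
        self.sum_wy += w * effect
        self.n_studies += 1

    @property
    def pooled(self) -> float:
        if self.n_studies == 0:
            raise ValueError("no studies yet")
        return self.sum_wy / self.sum_w

    @property
    def standard_error(self) -> float:
        if self.n_studies == 0:
            raise ValueError("no studies yet")
        return (1.0 / self.sum_w) ** 0.5


review = LivingSummary()
for effect, variance in [(0.30, 0.04), (0.10, 0.02)]:
    review.add_study(effect, variance)
print(f"after {review.n_studies} studies: {review.pooled:.3f} (SE {review.standard_error:.3f})")

review.add_study(0.25, 0.01)  # a newly published study arrives
print(f"after {review.n_studies} studies: {review.pooled:.3f} (SE {review.standard_error:.3f})")
```

Heterogeneity statistics such as Cochran's Q can also be maintained from running sums (adding Σw·y²), which this sketch leaves out.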

Factors of success and progress

It has been hypothesized that a deeper understanding of factors behind successful science could "enhance prospects of science as a whole to more effectively address societal problems".

Novel ideas and disruptive scholarship

Two metascientists reported that "structures fostering disruptive scholarship and focusing attention on novel ideas" could be important because, in a growing scientific field, citation flows disproportionately consolidate to already well-cited papers, possibly slowing and inhibiting canonical progress. A study concluded that to enhance the impact of truly innovative and highly interdisciplinary novel ideas, they should be placed in the context of established knowledge.

Mentorship, partnerships and social factors

Other researchers reported that the most successful – in terms of "likelihood of prizewinning, National Academy of Science (NAS) induction, or superstardom" – protégés studied under mentors who published research for which they were conferred a prize after the protégés' mentorship. Studying original topics rather than these mentors' research-topics was also positively associated with success. Highly productive partnerships are also a topic of research – e.g. "super-ties" of frequent co-authorship of two individuals who can complement skills, likely also the result of other factors such as mutual trust, conviction, commitment and fun.

Study of successful scientists and processes, general skills and activities

The emergence or origin of ideas among successful scientists is also a topic of research, for example reviews of existing ideas on how Mendel made his discoveries or, more generally, of the process of discovery by scientists. Science is a "multifaceted process of appropriation, copying, extending, or combining ideas and inventions" [and other types of knowledge or information], and not an isolated process. There are also a few studies investigating scientists' habits, common modes of thinking, reading habits, use of information sources, digital literacy skills, and workflows.

Labor advantage

A study theorized that in many disciplines, larger scientific productivity or success by elite universities can be explained by their larger pool of available funded laborers. The study found that university prestige was only associated with higher productivity for faculty with group members, not for faculty publishing alone or the group members themselves. This is presented as evidence that the outsize productivity of elite researchers is not from a more rigorous selection of talent by top universities, but from labor advantages accrued through greater access to funding and the attraction of prestige to graduate and postdoctoral researchers.

Ultimate impacts

Success in science (as indicated in tenure review processes) is often measured in terms of metrics like citations, not in terms of the eventual or potential impact on lives and society, which awards sometimes do. Problems with such metrics are roughly outlined elsewhere in this article and include that reviews replace citations to primary studies. There are also proposals for changes to the academic incentives systems that increase the recognition of societal impact in the research process.

Progress studies

A proposed field of "Progress Studies" could investigate how scientists (or funders or evaluators of scientists) should be acting, "figuring out interventions" and study progress itself. The field was explicitly proposed in a 2019 essay and described as an applied science that prescribes action.

As and for acceleration of progress

A study suggests that improving the way science is done could accelerate the rate of scientific discovery and its applications, which could be useful for finding urgent solutions to humanity's problems, improving humanity's conditions, and enhancing understanding of nature. Metascientific studies can seek to identify aspects of science that need improvement, and develop ways to improve them. If science is accepted as the fundamental engine of economic growth and social progress, this could raise "the question of what we – as a society – can do to accelerate science, and to direct science toward solving society's most important problems." However, one of the authors clarified that a one-size-fits-all approach is not thought to be the right answer: for example, in funding, DARPA models, curiosity-driven methods, allowing "a single reviewer to champion a project even if his or her peers do not agree", and various other approaches all have their uses. Nevertheless, evaluation of them can help build knowledge of what works or works best.

Reforms

Meta-research identifying flaws in scientific practice has inspired reforms in science. These reforms seek to address and fix problems in scientific practice which lead to low-quality or inefficient research.

A 2015 study catalogued ongoing efforts in meta-research, which it described as "fragmented".

Pre-registration

The practice of registering a scientific study before it is conducted is called pre-registration. It arose as a means to address the replication crisis. One form of pre-registration is the registered report, which a journal accepts or rejects for publication based on its theoretical justification, experimental design, and proposed statistical analysis, before the results are known. Pre-registration of studies serves to prevent publication bias (for example, the non-publication of negative results), reduce data dredging, and increase replicability.

Reporting standards

Studies showing poor consistency and quality of reporting have demonstrated the need for reporting standards and guidelines in science, which has led to the rise of organisations that produce such standards, such as CONSORT (Consolidated Standards of Reporting Trials) and the EQUATOR Network.

The EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network is an international initiative aimed at promoting transparent and accurate reporting of health research studies to enhance the value and reliability of medical research literature. The EQUATOR Network was established with the goals of raising awareness of the importance of good reporting of research, assisting in the development, dissemination and implementation of reporting guidelines for different types of study designs, monitoring the status of the quality of reporting of research studies in the health sciences literature, and conducting research relating to issues that impact the quality of reporting of health research studies. The Network acts as an "umbrella" organisation, bringing together developers of reporting guidelines, medical journal editors and peer reviewers, research funding bodies, and other key stakeholders with a mutual interest in improving the quality of research publications and research itself.

Applications

Information and communications technologies

Metascience informs the creation and improvement of technical systems (ICTs) and standards for the evaluation, incentivization, communication, commissioning, funding, regulation, production, management, use and publication of science. Such work can be called "applied metascience" and may seek ways to increase the quantity, quality and positive impact of research. One example is the development of alternative metrics.

Study screening and feedback

Various websites and tools, such as PubPeer, Cochrane's Risk of Bias Tool and Retraction Watch, identify problematic studies or enable feedback on them. Medical and academic disputes date back to antiquity, and one study calls for research into "constructive and obsessive criticism" and into policies to "help strengthen social media into a vibrant forum for discussion, and not merely an arena for gladiator matches". Feedback on studies can be found via altmetrics, which are often integrated into the study's web page, most often as an embedded Altmetric badge. Such feedback may be incomplete, however: it may show only social media discussions that link to the study directly, not those that link to news reports about it.

Tools used, modified, extended or investigated

Tools may be developed through meta-research, or used or investigated by it. Notable examples include:

- The tool scite.ai aims to track and link citations of papers as 'Supporting', 'Mentioning' or 'Contrasting' the study.

- The Scite Reference Check bot is an extension of scite.ai that scans new article PDFs "for references to retracted papers, and posts both the citing and retracted papers on Twitter" and also "flags when new studies cite older ones that have issued corrections, errata, withdrawals, or expressions of concern". Studies have suggested that as few as 4% of citations to retracted papers clearly recognize the retraction.

- Search engines like Google Scholar are used to find studies and the notification service Google Alerts enables notifications for new studies matching specified search terms. Scholarly communication infrastructure includes search databases.

- The shadow library Sci-Hub is a topic of metascience.

- Personal knowledge management systems for research, knowledge and task management, such as multi-document text editors that save information in organized ways for future use. Such systems, along with tools such as web browsers (tabs, add-ons, etc.) and search software, could be described as part of "mind-machine partnerships" that metascience could investigate for how they might improve science.

- Scholia – efforts to open scholarly publication metadata and use it via Wikidata.

- Various software enables common metascientific practices such as bibliometric analysis.

Development

According to one study, reproducibility problems in science mean that "a simple way to check how often studies have been repeated, and whether or not the original findings are confirmed" is needed. A study has also proposed a tool for screening studies for early warning signs of research fraud.

Medicine

Clinical research in medicine is often of low quality, and many studies cannot be replicated. An estimated 85% of research funding is wasted. Additionally, the presence of bias affects research quality. The pharmaceutical industry exerts substantial influence on the design and execution of medical research. Conflicts of interest are common among authors of medical literature and among editors of medical journals. While almost all medical journals require their authors to disclose conflicts of interest, editors are not required to do so. Financial conflicts of interest have been linked to higher rates of positive study results. In antidepressant trials, pharmaceutical sponsorship is the best predictor of trial outcome.

Blinding is another focus of meta-research, as error caused by poor blinding is a source of experimental bias. Blinding is not well reported in medical literature, and widespread misunderstanding of the subject has resulted in poor implementation of blinding in clinical trials. Furthermore, failure of blinding is rarely measured or reported. Research showing the failure of blinding in antidepressant trials has led some scientists to argue that antidepressants are no better than placebo. In light of meta-research showing failures of blinding, CONSORT standards recommend that all clinical trials assess and report the quality of blinding.

Studies have shown that systematic reviews of existing research evidence are used sub-optimally when planning new research or summarizing its results. Cumulative meta-analyses of studies evaluating the effectiveness of medical interventions have shown that many clinical trials could have been avoided if a systematic review of existing evidence had been done before conducting a new trial. For example, Lau et al. analyzed 33 clinical trials (involving 36,974 patients) evaluating the effectiveness of intravenous streptokinase for acute myocardial infarction. Their cumulative meta-analysis demonstrated that 25 of the 33 trials could have been avoided if a systematic review had been conducted before each new trial. In other words, randomizing 34,542 patients was potentially unnecessary. One study analyzed 1,523 clinical trials included in 227 meta-analyses and concluded that "less than one quarter of relevant prior studies" were cited. The authors also confirmed earlier findings that most clinical trial reports do not present a systematic review to justify the research or summarize the results.
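A cumulative meta-analysis re-pools the evidence every time a new trial is added, in chronological order, and asks when the pooled confidence interval first excludes "no effect". The sketch below shows that idea under stated assumptions: it uses fixed-effect, inverse-variance pooling of log odds ratios, adds 0.5 to every cell as a simplifying continuity correction, and runs on made-up trial counts. It is not a reconstruction of Lau et al.'s data or exact method, and the names `Trial`, `cumulative_meta_analysis` and `first_conclusive` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Trial:
    """Summary counts for one two-arm trial (hypothetical data, not Lau et al.'s)."""
    name: str
    events_treat: int
    n_treat: int
    events_ctrl: int
    n_ctrl: int


def log_odds_ratio(t: Trial) -> Tuple[float, float]:
    """Return (log odds ratio, variance), adding 0.5 to every cell (a simplifying assumption)."""
    a = t.events_treat + 0.5
    b = t.n_treat - t.events_treat + 0.5
    c = t.events_ctrl + 0.5
    d = t.n_ctrl - t.events_ctrl + 0.5
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def cumulative_meta_analysis(
    trials: List[Trial], z: float = 1.96
) -> List[Tuple[str, float, float, float]]:
    """Fixed-effect, inverse-variance pooling re-run each time a trial is added.

    Trials must be in chronological order. Returns (name, pooled OR, CI low, CI high).
    """
    weight_sum = 0.0
    weighted_sum = 0.0
    results = []
    for t in trials:
        y, v = log_odds_ratio(t)
        w = 1.0 / v  # inverse-variance weight: more precise trials count more
        weight_sum += w
        weighted_sum += w * y
        pooled = weighted_sum / weight_sum
        se = math.sqrt(1.0 / weight_sum)  # shrinks with every added trial
        results.append((t.name, math.exp(pooled),
                        math.exp(pooled - z * se), math.exp(pooled + z * se)))
    return results


def first_conclusive(results) -> Optional[int]:
    """Index of the first cumulative step whose CI excludes OR = 1, or None."""
    for i, (_, _, low, high) in enumerate(results):
        if high < 1.0 or low > 1.0:
            return i
    return None


if __name__ == "__main__":
    # Hypothetical trials in publication order.
    trials = [
        Trial("A", 12, 100, 18, 100),
        Trial("B", 30, 250, 45, 250),
        Trial("C", 50, 500, 80, 500),
        Trial("D", 40, 400, 60, 400),
    ]
    results = cumulative_meta_analysis(trials)
    for name, odds_ratio, low, high in results:
        print(f"after {name}: OR={odds_ratio:.2f} (95% CI {low:.2f} to {high:.2f})")
    k = first_conclusive(results)
    if k is not None:
        print(f"conclusive after trial {trials[k].name}; "
              f"{len(trials) - k - 1} later trial(s) followed")
```

Any trials that come after the first conclusive step are the ones a cumulative meta-analysis would flag as potentially avoidable. A real analysis would usually also consider random-effects models and trial quality.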

Many treatments used in modern medicine have been proven to be ineffective, or even harmful. A 2007 study by John Ioannidis found that it took an average of ten years for the medical community to stop referencing popular practices after their efficacy was unequivocally disproven.

Psychology

Metascience has revealed significant problems in psychological research. The field suffers from high bias, low reproducibility, and widespread misuse of statistics. The replication crisis affects psychology more strongly than any other field; as many as two-thirds of highly publicized findings may be impossible to replicate. Meta-research finds that 80-95% of psychological studies support their initial hypotheses, which strongly implies the existence of publication bias.
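Why does an 80 to 95% rate of supported hypotheses point to publication bias? Without selective publication, the expected share of "positive" studies is roughly prior × power + (1 − prior) × α, where prior is the fraction of tested hypotheses that are true, power is the chance of detecting a true effect, and α is the false-positive rate. That expression is a weighted average of power and α, so it can never exceed the average power. Even if every hypothesis tested were true, an 80 to 95% positive rate would require studies with 80 to 95% power. The function below is a hypothetical helper that makes this arithmetic explicit. The input values in the usage loop are illustrative assumptions, not estimates for psychology.

```python
def expected_positive_rate(prior_true: float, power: float, alpha: float = 0.05) -> float:
    """Share of studies expected to 'support' their hypothesis with no publication bias.

    prior_true: assumed fraction of tested hypotheses that are actually true.
    power:      assumed average probability of detecting a true effect.
    alpha:      probability of a false positive when the hypothesis is false.
    """
    if not all(0.0 <= x <= 1.0 for x in (prior_true, power, alpha)):
        raise ValueError("all inputs must be probabilities in [0, 1]")
    # True hypotheses come out positive with probability `power`;
    # false ones still come out positive with probability `alpha`.
    return prior_true * power + (1.0 - prior_true) * alpha


if __name__ == "__main__":
    # Illustrative grid of assumptions, not measured values.
    for prior in (0.25, 0.5, 0.75, 1.0):
        for power in (0.5, 0.8):
            rate = expected_positive_rate(prior, power)
            print(f"prior={prior:.2f} power={power:.1f} -> {rate:.0%} positive")
```

Unless typical studies are very well powered and nearly every hypothesis tested is true, the published positive rate should sit well below 80%. A higher observed rate suggests that null results are going unpublished.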

The replication crisis has led to renewed efforts to re-test important findings. In response to concerns about publication bias and p-hacking, more than 140 psychology journals have adopted result-blind peer review, in which studies are pre-registered and published without regard for their outcome. An analysis of these reforms estimated that 61 percent of result-blind studies produce null results, in contrast with 5 to 20 percent in earlier research. This analysis shows that result-blind peer review substantially reduces publication bias.

Psychologists routinely confuse statistical significance with practical importance, enthusiastically reporting great certainty in unimportant facts. Some psychologists have responded by making more use of effect size statistics rather than relying solely on p-values.
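The gap between significance and importance is easy to demonstrate numerically. A p-value shrinks as sample size grows even when the effect stays trivial, whereas an effect size such as Cohen's d does not depend on sample size. The sketch below computes both from summary statistics. For brevity it uses a two-sample z-test (normal approximation) rather than a t-test, and the means, standard deviations and sample sizes are invented for illustration.

```python
import math
from statistics import NormalDist


def cohens_d(mean1: float, mean2: float, sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / math.sqrt(pooled_var)


def two_sided_p(mean1: float, mean2: float, sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Two-sided p-value from a z-test (normal approximation, assumed for brevity)."""
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    z = (mean1 - mean2) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


if __name__ == "__main__":
    # Invented scenarios: a trivial difference with a huge sample,
    # and a large difference with a small sample.
    scenarios = {
        "tiny effect, n=100000 per group": (100.5, 100.0, 15.0, 15.0, 100_000, 100_000),
        "large effect, n=20 per group": (112.0, 100.0, 15.0, 15.0, 20, 20),
    }
    for label, args in scenarios.items():
        print(f"{label}: d={cohens_d(*args):.3f}, p={two_sided_p(*args):.2g}")
```

The first scenario yields an extremely small p-value for an effect of about a thirtieth of a standard deviation. The second yields a much larger p-value for an effect of 0.8 standard deviations. Reporting d alongside p keeps the magnitude of the effect visible.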

Physics

Richard Feynman noted that estimates of physical constants were closer to published values than would be expected by chance. This was believed to be the result of confirmation bias: results that agreed with existing literature were more likely to be believed, and therefore published. Physicists now implement blinding to prevent this kind of bias.
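One approach to blinding used in physics is the hidden-offset blind analysis. Analysts tune their procedure on data that has been shifted by a secret amount, so they cannot steer the result toward a published value, and they remove the shift only after the procedure is frozen. The class below is a minimal, hypothetical sketch of that workflow. It assumes an additive offset and an estimator that shifts along with the data, such as the mean. Real experiments use a variety of blinding schemes.

```python
import random
from statistics import mean
from typing import Iterable, List, Optional


class BlindedMeasurement:
    """Hides the true scale of a measurement behind a secret additive offset."""

    def __init__(self, raw: Iterable[float], offset_scale: float, seed: Optional[int] = None):
        # A fixed seed is only for reproducible demos; a real blind keeps the offset secret.
        rng = random.Random(seed) if seed is not None else random.SystemRandom()
        self._offset = rng.uniform(-offset_scale, offset_scale)
        self._raw = list(raw)
        self._frozen = False

    def blinded_values(self) -> List[float]:
        """What the analysts see while choosing cuts, corrections and fits."""
        return [x + self._offset for x in self._raw]

    def freeze(self) -> None:
        """Record that the analysis procedure is final and will not be changed."""
        self._frozen = True

    def unblind(self, blinded_estimate: float) -> float:
        """Remove the offset from an estimate made on blinded data (additive estimators only)."""
        if not self._frozen:
            raise RuntimeError("freeze the analysis before unblinding")
        return blinded_estimate - self._offset


if __name__ == "__main__":
    data = [9.81, 9.79, 9.83, 9.80]  # invented readings
    m = BlindedMeasurement(data, offset_scale=1.0)
    blinded_estimate = mean(m.blinded_values())  # all analysis happens here
    m.freeze()
    print(f"unblinded estimate: {m.unblind(blinded_estimate):.4f}")
```

Refusing to unblind before `freeze` is called encodes the key discipline: the procedure cannot be adjusted after the true value is seen.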

Computer science

Web measurement studies are essential for understanding the workings of the modern Web, particularly in the fields of security and privacy. However, these studies often require custom-built or modified crawling setups, which has led to a plethora of analysis tools for similar tasks. Nurullah Demir et al. surveyed 117 recent research papers to derive best practices for Web-based measurement studies and to establish criteria for reproducibility and replicability. They found that experimental setups and other information critical for reproducing and replicating results are often missing. In a large-scale Web measurement study of 4.5 million pages using 24 different measurement setups, the authors demonstrated that slight differences in experimental setups affect the overall results, emphasizing the need for accurate and comprehensive documentation.
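One lightweight way to act on that documentation requirement is to record the crawl configuration as a machine-readable manifest published with the results. Two studies can then be compared field by field. The sketch below assumes a handful of illustrative fields (browser, headless mode, user agent, vantage point, timeout, interaction). These fields are hypothetical choices, not the criteria Demir et al. derived, and a real manifest would need many more.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class CrawlSetup:
    """Hypothetical subset of settings that can change Web measurement results."""
    browser: str
    browser_version: str
    headless: bool
    user_agent: str
    vantage_point: str  # e.g. the country or cloud region the crawl ran from
    page_load_timeout_s: int
    interacts_with_page: bool  # clicks, scrolling, consent banners, etc.

    def to_manifest(self) -> str:
        """Canonical JSON (sorted keys) suitable for publishing alongside results."""
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        """Short hash so papers can cite exactly which setup produced a dataset."""
        return hashlib.sha256(self.to_manifest().encode("utf-8")).hexdigest()[:16]


def diff_setups(a: CrawlSetup, b: CrawlSetup) -> Dict[str, Tuple[Any, Any]]:
    """Fields whose values differ between two setups."""
    da, db = asdict(a), asdict(b)
    return {k: (da[k], db[k]) for k in da if da[k] != db[k]}


if __name__ == "__main__":
    study_a = CrawlSetup("chromium", "120.0", True, "UA-1", "EU-DE", 30, False)
    study_b = CrawlSetup("chromium", "120.0", False, "UA-1", "EU-DE", 30, True)
    print(study_a.fingerprint(), study_b.fingerprint())
    print(diff_setups(study_a, study_b))
```

Hashing the canonical JSON gives a compact identifier, and the field-level diff shows which settings to suspect when two studies disagree.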

Organizations and institutes

There are several organizations and universities across the globe that work on meta-research. These include the Meta-Research Innovation Center at Berlin, the Meta-Research Innovation Center at Stanford, the Meta-Research Center at Tilburg University, the Meta-research & Evidence Synthesis Unit at The George Institute for Global Health in India, and the Center for Open Science. Organizations that develop tools for metascience include OurResearch, the Center for Scientific Integrity and altmetrics companies. There is an annual Metascience Conference hosted by the Association for Interdisciplinary Meta-Research and Open Science (AIMOS) and a biannual conference hosted by the Center for Open Science.