Limbic system

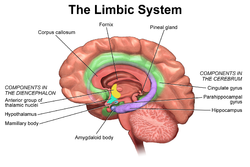

Cross section of the human brain showing parts of the limbic system from below.

Traité d'Anatomie et de Physiologie (1786)

The limbic system largely consists of what was previously known as the limbic lobe.

| Identifiers | |
|---|---|
| Latin | Systema limbicum |
| MeSH | D008032 |
| NeuroNames | 2055 |
| FMA | 242000 |

The limbic system is a set of brain structures located on both sides of the thalamus, immediately beneath the cerebrum. It has also been referred to as the paleomammalian cortex. It is not a separate system but a collection of structures from the telencephalon, diencephalon, and mesencephalon. It includes the olfactory bulbs, hippocampus, hypothalamus, amygdala, anterior thalamic nuclei, fornix, columns of fornix, mammillary body, septum pellucidum, habenular commissure, cingulate gyrus, parahippocampal gyrus, entorhinal cortex, and limbic midbrain areas.

The limbic system supports a variety of functions including emotion, behavior, motivation, long-term memory, and olfaction.[4] Emotional life is largely housed in the limbic system, which also plays a major role in the formation of memories.

The term originated only in the 1940s, yet some neuroscientists, including Joseph LeDoux, have suggested that the concept of a functionally unified limbic system should be abandoned as obsolete, because it is grounded mainly in historical concepts of brain anatomy that are no longer accepted as accurate.[5]

Structure

Anatomical components of the limbic system

The limbic system was originally defined by Paul D. MacLean as a series of cortical structures surrounding the limit between the cerebral hemispheres and the brainstem: the border, or limbus, of the brain. These structures were known together as the limbic lobe.[6] Further studies began to associate these areas with emotional and motivational processes and linked them to subcortical components that were grouped into the limbic system.[7] The idea of such a system as an isolated entity responsible for the neurological regulation of emotion has fallen into disuse, and the limbic system is currently considered one of the many parts of the brain that regulate visceral, autonomic processes.[8]

Therefore, the definition of anatomical structures considered part of the limbic system is a controversial subject. The following structures are, or have been considered, part of the limbic system:[9][10]

- Cortical areas:

  - Limbic lobe

  - Orbitofrontal cortex, a region in the frontal lobe involved in the process of decision-making.

  - Piriform cortex, part of the olfactory system.

  - Entorhinal cortex, related to memory and associative components.

  - Hippocampus and associated structures, which play a central role in the consolidation of new memories.

  - Fornix, a white matter structure connecting the hippocampus with other brain structures, particularly the mammillary bodies and septal nuclei.

- Subcortical areas:

  - Septal nuclei, a set of structures that lie in front of the lamina terminalis, considered a pleasure zone.

  - Amygdala, located deep within the temporal lobes and related to a number of emotional processes.

  - Nucleus accumbens, involved in reward, pleasure, and addiction.

- Diencephalic structures:

  - Hypothalamus, a center for the limbic system, connected with the frontal lobes, septal nuclei and the brain stem reticular formation via the medial forebrain bundle, with the hippocampus via the fornix, and with the thalamus via the mammillothalamic fasciculus. It regulates a great number of autonomic processes.

  - Mammillary bodies, part of the hypothalamus that receives signals from the hippocampus via the fornix and projects them to the thalamus.

  - Anterior nuclei of thalamus, which receive input from the mammillary bodies and are involved in memory processing.

Function

The structures of the limbic system are involved in motivation, emotion, learning, and memory. The limbic system is where the subcortical structures meet the cerebral cortex.[1] It operates by influencing the endocrine system and the autonomic nervous system. It is highly interconnected with the nucleus accumbens, which plays a role in sexual arousal and the "high" derived from certain recreational drugs. These responses are heavily modulated by dopaminergic projections from the limbic system. In 1954, Olds and Milner found that rats with metal electrodes implanted into their nucleus accumbens, as well as their septal nuclei, repeatedly pressed a lever activating this region, and did so in preference to eating and drinking, eventually dying of exhaustion.[11]

The limbic system also includes the basal ganglia, a set of subcortical structures that direct intentional movements. The basal ganglia are located near the thalamus and hypothalamus. They receive input from the cerebral cortex and send outputs to the motor centers in the brain stem. A part of the basal ganglia called the striatum controls posture and movement. Recent studies indicate that an inadequate supply of dopamine affects the striatum, which can lead to the visible behavioral symptoms of Parkinson's disease.[1]

The limbic system is also tightly connected to the prefrontal cortex. Some scientists contend that this connection is related to the pleasure obtained from solving problems. In attempts to treat severe emotional disorders, this connection was sometimes surgically severed, a psychosurgical procedure called a prefrontal lobotomy (this is actually a misnomer). Patients who underwent this procedure often became passive and lacked all motivation.

The limbic system is often classified as a “cerebral structure”. It is closely linked to olfaction, emotions, drives, autonomic regulation, and memory, and pathologically to encephalopathy, epilepsy, psychotic symptoms, and cognitive defects.[12] The limbic system has been shown to serve many different functions, including affect and emotion, memory, sensory processing, time perception, attention, consciousness, instincts, autonomic/vegetative control, and actions/motor behavior. Disorders associated with the limbic system include epilepsy and schizophrenia.[13]

Hippocampus

Various processes of cognition involve the hippocampus.

Spatial memory

The first and most widely researched area concerns memory, spatial memory in particular. Spatial memory was found to involve many sub-regions of the hippocampus, such as the dentate gyrus (DG) in the dorsal hippocampus, the left hippocampus, and the parahippocampal region. The dorsal hippocampus was found to be an important component for the generation of new neurons, called adult-born granule cells (GC), in adolescence and adulthood.[14] These new neurons contribute to pattern separation in spatial memory, increasing the firing in cell networks and overall leading to stronger memory formation.

While the dorsal hippocampus is involved in spatial memory formation, the left hippocampus participates in the recall of these spatial memories. Eichenbaum[15] and his team found, when studying hippocampal lesions in rats, that the left hippocampus is “critical for effectively combining the ‘what,’ ‘when,’ and ‘where’ qualities of each experience to compose the retrieved memory.” This makes the left hippocampus a key component in the retrieval of spatial memory. However, Spreng[16] found that the left hippocampus is, in fact, a general concentrated region for binding together bits and pieces of memory composed not only by the hippocampus but also by other areas of the brain, to be recalled at a later time. Eichenbaum’s research in 2007 also demonstrates that the parahippocampal area of the hippocampus is another specialized region for the retrieval of memories, just like the left hippocampus.

Learning

Over the decades, the hippocampus has also been found to have a large impact on learning. Curlik and Shors[17] examined neurogenesis in the hippocampus and its effects on learning. These researchers employed many different types of mental and physical training on their subjects, and found that the hippocampus is highly responsive to these tasks. They observed an upsurge of new neurons and neural circuits in the hippocampus as a result of the training, leading to an overall improvement in the learning of the task. This neurogenesis contributes to the creation of adult-born granule cells (GC), cells also described by Eichenbaum[15] in his own research on neurogenesis and its contributions to learning. These newly created cells exhibited "enhanced excitability" in the dentate gyrus (DG) of the dorsal hippocampus, affecting the hippocampus and its contribution to the learning process.[15]

Hippocampus damage

Damage to the hippocampal region of the brain has been reported to have wide-ranging effects on overall cognitive functioning, particularly on memory such as spatial memory. As previously mentioned, spatial memory is a cognitive function greatly intertwined with the hippocampus. While damage to the hippocampus may result from a brain injury or similar trauma, researchers have particularly investigated the effects that high emotional arousal and certain types of drugs have on recall ability in this specific memory type. In a study performed by Packard,[18] rats were given the task of correctly making their way through a maze. In the first condition, rats were stressed by shock or restraint, which caused high emotional arousal. When completing the maze task, these rats showed impaired hippocampal-dependent memory compared to the control group. In a second condition, a group of rats was injected with anxiogenic drugs. This condition produced similar outcomes, in that hippocampal-dependent memory was also impaired. Studies such as these reinforce the impact that the hippocampus has on memory processing, in particular the recall function of spatial memory. Furthermore, impairment of the hippocampus can occur from prolonged exposure to stress hormones such as glucocorticoids (GCs), which target the hippocampus and disrupt explicit memory.[19]

In an attempt to curtail life-threatening epileptic seizures, 27-year-old Henry Gustav Molaison underwent bilateral removal of almost all of his hippocampus in 1953. Over the course of fifty years he participated in thousands of tests and research projects that provided specific information on exactly what he had lost. Semantic and episodic events faded within minutes, having never reached his long-term memory, yet emotions, unconnected from the details of causation, were often retained. Dr. Suzanne Corkin, who worked with him for 46 years until his death, described the contribution of this tragic "experiment" in her 2013 book.[20]

Amygdala

Episodic-autobiographical memory (EAM) networks

Another integrative part of the limbic system, the amygdala is involved in many cognitive processes. Like the hippocampus, the amygdala seems to affect memory; however, the memory involved is not spatial memory, as in the hippocampus, but episodic-autobiographical memory (EAM) networks. Markowitsch's[21] amygdala research shows that it encodes, stores, and retrieves EAM memories. Examining these processes more closely, Markowitsch[21] and his team provided extensive evidence that the "amygdala's main function is to charge cues so that mnemonic events of a specific emotional significance can be successfully searched within the appropriate neural nets and re-activated." These cues for emotional events created by the amygdala encompass the EAM networks previously mentioned.

Attentional and emotional processes

Besides memory, the amygdala also seems to be an important brain region involved in attentional and emotional processes. In cognitive terms, attention is the ability to home in on some stimuli while ignoring others, and the amygdala seems to be an important structure in this ability. Historically, however, this structure was thought above all to be linked to fear, allowing the individual to take action in response to that fear. As time has gone by, researchers such as Pessoa[22] generalized this concept with the help of evidence from EEG recordings, and concluded that the amygdala helps an organism to define a stimulus and therefore respond accordingly. The initial association of the amygdala with fear nevertheless opened the way for research on the amygdala's role in emotional processes. Kheirbek[14] presented research indicating that the amygdala is involved in emotional processes, in particular in conjunction with the ventral hippocampus. He described the ventral hippocampus as having a role in neurogenesis and the creation of adult-born granule cells (GC). These cells were not only a crucial part of neurogenesis and the strengthening of spatial memory and learning in the hippocampus but also appear to be an essential component in the amygdala. A deficit of these cells, as Pessoa (2009) predicted in his studies, would result in low emotional functioning, leading to a high retention rate of mental disorders, such as anxiety disorders.

Social processing

Social processing, specifically the evaluation of faces, is an area of cognition specific to the amygdala. In a study done by Todorov,[23] fMRI tasks were performed with participants to evaluate whether the amygdala was involved in the general evaluation of faces. Todorov concluded from his fMRI results that the amygdala did indeed play a key role in the general evaluation of faces. In a study performed by Koscik[24] and his team, the trait of trustworthiness in particular was examined in the evaluation of faces. Koscik and his team demonstrated that the amygdala was involved in evaluating the trustworthiness of an individual. They investigated how damage to the amygdala affected judgments of trustworthiness, and found that individuals who had suffered such damage tended to confuse trust and betrayal, and thus placed trust in those who had wronged them. Furthermore, Rule,[25] along with his colleagues, expanded on the amygdala's role in judging the trustworthiness of others in a 2009 study that examined the amygdala's role in forming general first impressions and related them to real-world outcomes. Their study involved first impressions of CEOs. Rule demonstrated that while the amygdala did play a role in the evaluation of trustworthiness, as observed by Koscik in his own research two years later in 2011, the amygdala also played a generalized role in the overall evaluation of first impressions of faces. This latter conclusion, along with Todorov's study on the amygdala’s role in general evaluations of faces and Koscik’s research on trustworthiness and the amygdala, further solidified evidence that the amygdala plays a role in overall social processing.

Evolution

Paul D. MacLean, as part of his triune brain theory, hypothesized that the limbic system is older than other parts of the forebrain, and that it developed to manage circuitry attributed to the fight-or-flight response first identified by Hans Selye[26] in his report of the General Adaptation Syndrome in 1936. It may be considered a part of survival adaptation in reptiles as well as mammals (including humans). MacLean postulated that the human brain has evolved three components, which evolved successively, with more recent components developing at the top/front. These components are, respectively:

- The archipallium or primitive ("reptilian") brain, comprising the structures of the brain stem – medulla, pons, cerebellum, mesencephalon, the oldest basal nuclei – the globus pallidus and the olfactory bulbs.

- The paleopallium or intermediate ("old mammalian") brain, comprising the structures of the limbic system.

- The neopallium, also known as the superior or rational ("new mammalian") brain, comprises almost the whole of the hemispheres (made up of a more recent type of cortex, called neocortex) and some subcortical neuronal groups. It corresponds to the brain of the superior mammals, thus including the primates and, as a consequence, the human species. Similar development of the neocortex in mammalian species unrelated to humans and primates has also occurred, for example in cetaceans and elephants; thus the designation of "superior mammals" is not an evolutionary one, as it has occurred independently in different species. The evolution of higher degrees of intelligence is an example of convergent evolution, and is also seen in non-mammals such as birds.

However, while the categorization into structures is reasonable, recent studies of the limbic system of tetrapods, both living and extinct, have challenged several aspects of this hypothesis, notably the accuracy of the terms "reptilian" and "old mammalian". The common ancestors of reptiles and mammals had a well-developed limbic system in which the basic subdivisions and connections of the amygdalar nuclei were established.[27] Further, birds, which evolved from the dinosaurs, which in turn evolved separately from but around the same time as the mammals, have a well-developed limbic system. While the anatomic structures of the limbic system are different in birds and mammals, there are functional equivalents.

Clinical significance

Damage to the structures of the limbic system can result in conditions such as Alzheimer's disease, anterograde amnesia, retrograde amnesia, and Klüver-Bucy syndrome.

Society and culture

Etymology and history

The term limbic comes from the Latin limbus, for "border" or "edge", or, particularly in medical terminology, a border of an anatomical component. Paul Broca coined the term based on its physical location in the brain, sandwiched between two functionally different components.

The limbic system is a term that was introduced in 1949 by the American physician and neuroscientist Paul D. MacLean.[28][29] The French physician Paul Broca first called this part of the brain le grand lobe limbique in 1878.[6] He examined the differentiation between deeply recessed cortical tissue and underlying, subcortical nuclei.[30] However, most of its putative role in emotion was developed only in 1937, when the American physician James Papez described his anatomical model of emotion, the Papez circuit.[31]

The first evidence that the limbic system was responsible for the cortical representation of emotions was discovered in 1939 by Heinrich Klüver and Paul Bucy. Klüver and Bucy, after much research, demonstrated that the bilateral removal of the temporal lobes in monkeys created an extreme behavioral syndrome. After undergoing a temporal lobectomy, the monkeys showed a decrease in aggression. The animals revealed a reduced threshold to visual stimuli, and were thus unable to recognize objects that were once familiar.[32] MacLean expanded these ideas to include additional structures in a more dispersed "limbic system", more along the lines of the system described above.[29]

MacLean developed the intriguing theory of the "triune brain" to explain its evolution and to try to reconcile rational human behavior with its more primal and violent side. He became interested in the brain's control of emotion and behavior. After initial studies of brain activity in epileptic patients, he turned to cats, monkeys, and other models, using electrodes to stimulate different parts of the brain in conscious animals and recording their responses.[33] In the 1950s, he began to trace individual behaviors like aggression and sexual arousal to their physiological sources. He analyzed the brain's center of emotions, the limbic system, and described an area that includes structures called the hippocampus and amygdala. Developing observations made by Papez, he determined that the limbic system had evolved in early mammals to control fight-or-flight responses and react to both emotionally pleasurable and painful sensations. The concept is now broadly accepted in neuroscience.[34] Additionally, MacLean said that the idea of the limbic system leads to a recognition that its presence "represents the history of the evolution of mammals and their distinctive family way of life."

In the 1960s, Dr. MacLean enlarged his theory to address the human brain's overall structure and divided its evolution into three parts, an idea that he termed the triune brain. In addition to identifying the limbic system, he pointed to a more primitive brain called the R-complex, related to reptiles, which controls basic functions like muscle movement and breathing. The third part, the neocortex, controls speech and reasoning and is the most recent evolutionary arrival.[35] The concept of the limbic system has since been further expanded and developed by Walle Nauta, Lennart Heimer and others.