The logo of Wikipedia, a globe featuring glyphs from several writing systems

| Type of site | Online encyclopedia |
|---|---|
| Available in | 303 languages |
| Owner | Wikimedia Foundation |
| Created by | Jimmy Wales, Larry Sanger |
| Website | wikipedia.org |
| Alexa rank | |
| Commercial | No |
| Registration | Optional |
| Users | >295,987 active users and >79,803,732 registered users |
| Launched | January 15, 2001 |
| Current status | Active |
| Content license | CC Attribution / Share-Alike 3.0; most text is also dual-licensed under GFDL; media licensing varies |
| Written in | LAMP platform |
| OCLC number | 52075003 |

Wikipedia (/ˌwɪkɪˈpiːdiə/) is a multilingual, web-based, free encyclopedia based on a model of openly editable and viewable content, a wiki. It is the largest and most popular general reference work on the World Wide Web, and is one of the most popular websites by Alexa rank. It is owned and supported by the Wikimedia Foundation, a non-profit organization that operates on money it receives from donors.

Wikipedia was launched on January 15, 2001, by Jimmy Wales and Larry Sanger. Sanger coined its name as a portmanteau of wiki and "encyclopedia". Wikipedia was initially an English-language encyclopedia, but versions in other languages were quickly developed. With 5,790,638 articles, the English Wikipedia is the largest of the more than 290 Wikipedia encyclopedias. Overall, Wikipedia comprises more than 40 million articles in 301 different languages, and by February 2014 it had reached 18 billion page views and nearly 500 million unique visitors per month.

In 2005, Nature published a peer review comparing 42 science articles from Encyclopædia Britannica and Wikipedia and found that Wikipedia's level of accuracy approached that of Britannica. Time magazine stated that the open-door policy of allowing anyone to edit had made Wikipedia the biggest and possibly the best encyclopedia in the world, and was a testament to the vision of Jimmy Wales.

Wikipedia has been criticized for exhibiting systemic bias, for presenting a mixture of "truths, half truths, and some falsehoods", and for being subject to manipulation and spin in controversial topics. In 2017, Facebook announced that it would help readers detect fake news by providing links to relevant Wikipedia articles. YouTube announced a similar plan in 2018.

History

Nupedia

Wikipedia originally developed from another encyclopedia project called Nupedia

Other collaborative online encyclopedias were attempted before Wikipedia, but none were as successful.

Wikipedia began as a complementary project for Nupedia, a free online English-language encyclopedia project whose articles were written by experts and reviewed under a formal process. Nupedia was founded on March 9, 2000, under the ownership of Bomis, a web portal company. Its main figures were Bomis CEO Jimmy Wales and Larry Sanger, editor-in-chief for Nupedia and later Wikipedia. Nupedia was initially licensed under its own Nupedia Open Content License, but even before Wikipedia was founded, Nupedia switched to the GNU Free Documentation License at the urging of Richard Stallman. Wales is credited with defining the goal of making a publicly editable encyclopedia, while Sanger is credited with the strategy of using a wiki to reach that goal. On January 10, 2001, Sanger proposed on the Nupedia mailing list to create a wiki as a "feeder" project for Nupedia.

Launch and early growth

The domains wikipedia.com and wikipedia.org were registered on January 12 and January 13, 2001, respectively, and Wikipedia was launched on January 15, 2001, as a single English-language edition at www.wikipedia.com, and announced by Sanger on the Nupedia mailing list. Wikipedia's policy of "neutral point-of-view" was codified in its first months. Otherwise, there were relatively few rules initially, and Wikipedia operated independently of Nupedia. Originally, Bomis intended to make Wikipedia a for-profit business.

Wikipedia gained early contributors from Nupedia, Slashdot postings, and web search engine indexing. Language editions were also created, with a total of 161 by the end of 2004.

Nupedia and Wikipedia coexisted until the former's servers were taken down permanently in 2003, and its text was incorporated into Wikipedia.

The English Wikipedia passed the two-million-article mark on September 9, 2007, making it the largest encyclopedia ever assembled and surpassing the 1408 Yongle Encyclopedia, which had held the record for almost 600 years.

Citing fears of commercial advertising and lack of control in Wikipedia, users of the Spanish Wikipedia forked from Wikipedia to create the Enciclopedia Libre in February 2002.

The fork prompted Wales to announce that Wikipedia would not display advertisements, and to change Wikipedia's domain from wikipedia.com to wikipedia.org.

Though the English Wikipedia reached three million articles in August 2009, the growth of the edition, in terms of the numbers of new articles and of contributors, appears to have peaked around early 2007. Around 1,800 articles were added daily to the encyclopedia in 2006; by 2013 that average was roughly 800. A team at the Palo Alto Research Center attributed this slowing of growth to the project's increasing exclusivity and resistance to change. Others suggest that the growth is flattening naturally because articles that could be called "low-hanging fruit" (topics that clearly merit an article) have already been created and built up extensively.

In November 2009, a researcher at the Rey Juan Carlos University in Madrid, Spain, found that the English Wikipedia had lost 49,000 editors during the first three months of 2009; in comparison, the project lost only 4,900 editors during the same period in 2008. The Wall Street Journal cited the array of rules applied to editing and disputes related to such content among the reasons for this trend. Wales disputed these claims in 2009, denying the decline and questioning the methodology of the study.

Two years later, in 2011, Wales acknowledged the presence of a slight decline, noting a decrease from "a little more than 36,000 writers" in June 2010 to 35,800 in June 2011. In the same interview, Wales also claimed the number of editors was "stable and sustainable". A 2013 article titled "The Decline of Wikipedia" in MIT's Technology Review questioned this claim, reporting that since 2007 Wikipedia had lost a third of the volunteer editors who update and correct the online encyclopedia, and that those still there had focused increasingly on minutiae. In July 2012, The Atlantic reported that the number of administrators was also in decline. In the November 25, 2013, issue of New York magazine, Katherine Ward stated "Wikipedia, the sixth-most-used website, is facing an internal crisis".

Wikipedia blackout protest against SOPA on January 18, 2012

Milestones

In January 2007, Wikipedia entered the top-ten list of the most popular websites in the U.S. for the first time, according to comScore Networks. With 42.9 million unique visitors, Wikipedia was ranked number 9, surpassing The New York Times (#10) and Apple (#11). This marked a significant increase over January 2006, when Wikipedia was ranked number 33 with around 18.3 million unique visitors. As of March 2015, Wikipedia was ranked fifth among websites in terms of popularity according to Alexa Internet. In 2014, it received 8 billion pageviews every month. On February 9, 2014, The New York Times reported that Wikipedia had 18 billion page views and nearly 500 million unique visitors a month, "according to the ratings firm comScore."

On January 18, 2012, the English Wikipedia participated in a series of coordinated protests against two proposed laws in the United States Congress, the Stop Online Piracy Act (SOPA) and the PROTECT IP Act (PIPA), by blacking out its pages for 24 hours. More than 162 million people viewed the blackout explanation page that temporarily replaced Wikipedia content.

The Wikipedia Page on December 17, 2001

Loveland and Reagle argue that, in its process, Wikipedia follows a long tradition of historical encyclopedias that accumulated improvements piecemeal through "stigmergic accumulation".

On January 20, 2014, Subodh Varma, reporting for The Economic Times, indicated that not only had Wikipedia's growth flattened, but that it "had lost nearly 10 per cent of its page views last year. There was a decline of about 2 billion between December 2012 and December 2013. Its most popular versions are leading the slide: page-views of the English Wikipedia declined by 12 per cent, those of German version slid by 17 per cent and the Japanese version lost 9 per cent."

Varma added that, "While Wikipedia's managers think that this could be due to errors in counting, other experts feel that Google's Knowledge Graphs project launched last year may be gobbling up Wikipedia users." When contacted on this matter, Clay Shirky, associate professor at New York University and fellow at Harvard's Berkman Center for Internet & Society, said he suspected that much of the page-view decline was due to Knowledge Graph, stating, "If you can get your question answered from the search page, you don't need to click [any further]."

By the end of December 2016, Wikipedia was ranked fifth among the most popular websites globally.

Openness

Number of English Wikipedia articles

Wikipedia editors with >100 edits per month

Differences between versions of an article are highlighted as shown

Unlike traditional encyclopedias, Wikipedia follows the procrastination principle regarding the security of its content. It started almost entirely open: anyone could create articles, and any Wikipedia article could be edited by any reader, even those who did not have a Wikipedia account. Modifications to all articles would be published immediately. As a result, any article could contain inaccuracies such as errors, ideological biases, and nonsensical or irrelevant text.

Restrictions

Due to the increasing popularity of Wikipedia, some editions, including the English version, have introduced editing restrictions in some cases. For instance, on the English Wikipedia and some other language editions, only registered users may create a new article.

On the English Wikipedia, among others, some particularly controversial, sensitive, or vandalism-prone pages have been protected to some degree. A frequently vandalized article can be semi-protected or extended confirmed protected, meaning that only autoconfirmed or extended confirmed editors are able to modify it. A particularly contentious article may be locked so that only administrators are able to make changes.

In certain cases, all editors are allowed to submit modifications, but review is required for some editors, depending on certain conditions. For example, the German Wikipedia maintains "stable versions" of articles, which have passed certain reviews. Following protracted trials and community discussion, the English Wikipedia introduced the "pending changes" system in December 2012.

Under this system, new and unregistered users' edits to certain controversial or vandalism-prone articles are reviewed by established users before they are published.

The editing interface of Wikipedia

Review of changes

Although changes are not systematically reviewed, the software that powers Wikipedia provides certain tools allowing anyone to review changes made by others. The "History" page of each article links to each revision. On most articles, anyone can undo others' changes by clicking a link on the article's history page. Anyone can view the latest changes to articles, and anyone may maintain a "watchlist" of articles that interest them so they can be notified of any changes.

"New pages patrol" is a process whereby newly created articles are checked for obvious problems.

In 2003, economics PhD student Andrea Ciffolilli argued that the low transaction costs of participating in a wiki create a catalyst for collaborative development, and that features such as allowing easy access to past versions of a page favor "creative construction" over "creative destruction".

Vandalism

Any change or edit that manipulates content in a way that purposefully compromises the integrity of Wikipedia is considered vandalism. The most common and obvious types of vandalism include additions of obscenities and crude humor. Vandalism can also include advertising and other types of spam.

Sometimes editors commit vandalism by removing content or entirely blanking a given page. Less common types of vandalism, such as the deliberate addition of plausible but false information to an article, can be more difficult to detect. Vandals can introduce irrelevant formatting, modify page semantics such as the page's title or categorization, manipulate the underlying code of an article, or use images disruptively.

American journalist John Seigenthaler (1927–2014), subject of the Seigenthaler incident

Obvious vandalism is generally easy to remove from Wikipedia articles; the median time to detect and fix vandalism is a few minutes. However, some vandalism takes much longer to repair.

In the Seigenthaler biography incident, an anonymous editor introduced false information into the biography of American political figure John Seigenthaler in May 2005. Seigenthaler was falsely presented as a suspect in the assassination of John F. Kennedy. The article remained uncorrected for four months. Seigenthaler, the founding editorial director of USA Today and founder of the Freedom Forum First Amendment Center at Vanderbilt University, called Wikipedia co-founder Jimmy Wales and asked whether he had any way of knowing who contributed the misinformation. Wales replied that he did not, although the perpetrator was eventually traced. After the incident, Seigenthaler described Wikipedia as "a flawed and irresponsible research tool". This incident led to policy changes at Wikipedia, specifically targeted at tightening up the verifiability of biographical articles of living people.

Policies and laws

Content in Wikipedia is subject to the laws (in particular, copyright laws) of the United States and of the U.S. state of Virginia, where the majority of Wikipedia's servers are located. Beyond legal matters, the editorial principles of Wikipedia are embodied in the "five pillars" and in numerous policies and guidelines intended to appropriately shape content. Even these rules are stored in wiki form, and Wikipedia editors write and revise the website's policies and guidelines. Editors can enforce these rules by deleting or modifying non-compliant material. Originally, rules on the non-English editions of Wikipedia were based on a translation of the rules for the English Wikipedia. They have since diverged to some extent.

Content policies and guidelines

According to the rules on the English Wikipedia, each entry in Wikipedia must be about a topic that is encyclopedic and is not a dictionary entry or dictionary-like. A topic should also meet Wikipedia's standards of "notability", which generally means that the topic must have been covered in mainstream media or major academic journal sources that are independent of the article's subject. Further, Wikipedia intends to convey only knowledge that is already established and recognized. It must not present original research. A claim that is likely to be challenged requires a reference to a reliable source.

Among Wikipedia editors, this is often phrased as "verifiability, not truth" to express the idea that the readers, not the encyclopedia, are ultimately responsible for checking the truthfulness of the articles and making their own interpretations. This can at times lead to the removal of information that, though valid, is not properly sourced. Finally, Wikipedia must not take sides. All opinions and viewpoints, if attributable to external sources, must enjoy an appropriate share of coverage within an article. This is known as neutral point of view (NPOV).

Governance

Wikipedia's initial anarchy integrated democratic and hierarchical elements over time. An article is not considered to be owned by its creator or any other editor, nor by the subject of the article. Wikipedia's contributors avoid a tragedy of the commons (behaving contrary to the common good) by internalizing benefits. They do this by experiencing flow (i.e., energized focus, full involvement, and enjoyment) and identifying with and gaining status in the Wikipedia community.

Administrators

Editors in good standing in the community can run for one of many levels of volunteer stewardship, beginning with "administrator": privileged users who can delete pages, prevent articles from being changed in case of vandalism or editorial disputes (setting protective measures on articles), and prevent certain people from editing. Despite the name, administrators are not supposed to enjoy any special privilege in decision-making; instead, their powers are mostly limited to making edits that have project-wide effects and thus are disallowed to ordinary editors, and to implementing restrictions intended to prevent certain people from making disruptive edits (such as vandalism).

Fewer editors become administrators than in years past, in part because the process of vetting potential Wikipedia administrators has become more rigorous.

Bureaucrats appoint new administrators solely on the recommendation of the community.

Dispute resolution

Wikipedians often have disputes regarding content, which may result in repeatedly making opposite changes to an article, known as edit warring. Over time, Wikipedia has developed a semi-formal dispute resolution process to assist in such circumstances. In order to determine community consensus, editors can raise issues at appropriate community forums, or seek outside input through third opinion requests or by initiating a more general community discussion known as a request for comment.

Arbitration Committee

The Arbitration Committee presides over the ultimate dispute resolution process. Although disputes usually arise from a disagreement between two opposing views on how an article should read, the Arbitration Committee explicitly refuses to directly rule on the specific view that should be adopted. Statistical analyses suggest that the committee ignores the content of disputes and focuses instead on the way disputes are conducted, functioning not so much to resolve disputes and make peace between conflicting editors as to weed out problematic editors while allowing potentially productive editors back in to participate. Therefore, the committee does not dictate the content of articles, although it sometimes condemns content changes when it deems the new content to violate Wikipedia policies (for example, if the new content is considered biased). Its remedies include cautions and probations (used in 63% of cases) and banning editors from articles (43%), subject matters (23%), or Wikipedia (16%). Complete bans from Wikipedia are generally limited to instances of impersonation and anti-social behavior. When conduct is not impersonation or anti-social, but rather anti-consensus or in violation of editing policies, remedies tend to be limited to warnings.

Community

Wikimania 2005, an annual conference for users of Wikipedia and other projects operated by the Wikimedia Foundation, was held in Frankfurt am Main, Germany, from August 4 to 8.

Each article and each user of Wikipedia has an associated "Talk" page. These form the primary communication channel for editors to discuss, coordinate and debate.

Wikipedians and British Museum curators collaborate on the article Hoxne Hoard in June 2010

Wikipedia's community has been described as cult-like, although not always with entirely negative connotations. The project's preference for cohesiveness, even if it requires compromise that includes disregard of credentials, has been referred to as "anti-elitism".

Wikipedians sometimes award one another virtual barnstars for good work. These personalized tokens of appreciation reveal a wide range of valued work extending far beyond simple editing to include social support, administrative actions, and types of articulation work.

Wikipedia does not require that its editors and contributors provide identification. As Wikipedia grew, "Who writes Wikipedia?" became one of the questions frequently asked on the project.

Jimmy Wales once argued that only "a community ... a dedicated group of a few hundred volunteers" makes the bulk of contributions to Wikipedia and that the project is therefore "much like any traditional organization". In 2008, a Slate magazine article reported: "According to researchers in Palo Alto, 1 percent of Wikipedia users are responsible for about half of the site's edits." This method of evaluating contributions was later disputed by Aaron Swartz, who noted that several articles he sampled had large portions of their content (measured by number of characters) contributed by users with low edit counts.

The English Wikipedia has 5,790,638 articles, 35,473,236 registered editors, and 125,285 active editors. An editor is considered active if they have made one or more edits in the past thirty days.

Editors who fail to comply with Wikipedia cultural rituals, such as signing talk page comments, may implicitly signal that they are Wikipedia outsiders, increasing the odds that Wikipedia insiders may target or discount their contributions. Becoming a Wikipedia insider involves non-trivial costs: the contributor is expected to learn Wikipedia-specific technological codes, submit to a sometimes convoluted dispute resolution process, and learn a "baffling culture rich with in-jokes and insider references". Editors who do not log in are in some sense second-class citizens on Wikipedia, as "participants are accredited by members of the wiki community, who have a vested interest in preserving the quality of the work product, on the basis of their ongoing participation", but the contribution histories of anonymous unregistered editors recognized only by their IP addresses cannot be attributed to a particular editor with certainty.

Studies

A 2007 study by researchers from Dartmouth College found that "anonymous and infrequent contributors to Wikipedia [...] are as reliable a source of knowledge as those contributors who register with the site".

Jimmy Wales stated in 2009 that "[I]t turns out over 50% of all the edits are done by just .7% of the users... 524 people... And in fact the most active 2%, which is 1400 people, have done 73.4% of all the edits." However, Business Insider editor and journalist Henry Blodget showed in 2009 that in a random sample of articles, most content in Wikipedia (measured by the amount of contributed text that survives to the latest sampled edit) is created by "outsiders", while most editing and formatting is done by "insiders".

A 2008 study found that Wikipedians were less agreeable, open, and conscientious than others, although a later commentary pointed out serious flaws, including that the data showed higher openness and that the differences with the control group and the samples were small. According to a 2009 study, there is "evidence of growing resistance from the Wikipedia community to new content".

Diversity

Several studies have shown that most Wikipedia contributors are male. Notably, the results of a Wikimedia Foundation survey in 2008 showed that only 13% of Wikipedia editors were female. Because of this, universities throughout the United States tried to encourage women to become Wikipedia contributors. Many of these universities, including Yale and Brown, gave college credit to students who created or edited an article relating to women in science or technology. Andrew Lih, a professor and scientist, wrote in The New York Times that he thought male contributors outnumbered female ones so greatly because identifying as a feminist may expose oneself to "ugly, intimidating behavior." Data has shown that Africans are underrepresented among Wikipedia editors.

Language editions

There are currently 301 language editions of Wikipedia (also called language versions, or simply Wikipedias). Fifteen of these have over one million articles each (English, Cebuano, Swedish, German, Dutch, French, Russian, Italian, Spanish, Waray-Waray, Polish, Vietnamese, Japanese, Chinese and Portuguese), four more have over 500,000 articles (Ukrainian, Persian, Catalan and Arabic), 40 more have over 100,000 articles, and 78 more have over 10,000 articles. The largest, the English Wikipedia, has over 5.7 million articles. As of January 2019, according to Alexa, the English subdomain (en.wikipedia.org; English Wikipedia) receives approximately 57% of Wikipedia's cumulative traffic, with the remaining split among the other languages (Russian: 9%; Chinese: 6%; Japanese: 6%; Spanish: 5%). As of January 2019, the six largest language editions are (in order of article count) the English, Cebuano, Swedish, German, French, and Dutch Wikipedias.

A graph of pageviews of the Turkish Wikipedia shows a drop of roughly 80% immediately after the block of Wikipedia in Turkey was imposed in 2017.

Since Wikipedia is based on the Web and therefore worldwide, contributors to the same language edition may use different dialects or may come from different countries (as is the case for the English edition). These differences may lead to some conflicts over spelling differences (e.g. colour versus color) or points of view.

Though the various language editions are held to global policies such as "neutral point of view", they diverge on some points of policy and practice, most notably on whether images that are not licensed freely may be used under a claim of fair use.

Jimmy Wales has described Wikipedia as "an effort to create and distribute a free encyclopedia of the highest possible quality to every single person on the planet in their own language".

Though each language edition functions more or less independently, some efforts are made to supervise them all. They are coordinated in part by Meta-Wiki, the Wikimedia Foundation's wiki devoted to maintaining all of its projects (Wikipedia and others). For instance, Meta-Wiki provides important statistics on all language editions of Wikipedia, and it maintains a list of articles every Wikipedia should have.

The list concerns basic content by subject: biography, history, geography, society, culture, science, technology, and mathematics. It is not uncommon for articles strongly related to a particular language to lack counterparts in other editions. For example, articles about small towns in the United States might only be available in English, even when they meet the notability criteria of other language Wikipedia projects.

Estimation of contribution shares from different regions of the world to different Wikipedia editions

Translated articles represent only a small portion of articles in most editions, in part because those editions do not allow fully automated translation of articles. Articles available in more than one language may offer "interwiki links", which link to the counterpart articles in other editions.

A study published in PLoS ONE in 2012 also estimated the share of contributions to different editions of Wikipedia from different regions of the world. It reported that the proportion of the edits made from North America was 51% for the English Wikipedia, and 25% for the Simple English Wikipedia. The Wikimedia Foundation hopes to increase the share of editors from the Global South to 37% by 2015.

English Wikipedia editor decline

On March 1, 2014, The Economist, in an article titled "The Future of Wikipedia", cited a trend analysis of data published by Wikimedia stating that "The number of editors for the English-language version has fallen by a third in seven years." The Economist described the attrition rate for active editors on the English Wikipedia as standing in substantial contrast to statistics for Wikipedia in other languages (non-English Wikipedia). It reported that the number of contributors averaging five or more edits per month had been relatively constant since 2008 for Wikipedia in other languages, at approximately 42,000 editors within narrow seasonal variances of about 2,000 editors up or down. By sharp comparison, the number of active editors on the English Wikipedia was cited as peaking in 2007 at approximately 50,000 and having dropped to 30,000 by the start of 2014.

At the quoted trend rate, the English Wikipedia has lost approximately 20,000 active editors to attrition since 2007, and the documented trend indicates the loss of another 20,000 by 2021, which would leave 10,000 active editors on the English Wikipedia if left unabated. Given that the trend analysis published in The Economist presents the number of active editors for Wikipedia in other languages (non-English Wikipedia) as remaining relatively constant at approximately 42,000, the contrast points to the effectiveness of the other language editions in retaining their active editors on a sustained basis.
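The 2021 projection above can be reproduced with a simple calculation. This assumes the decline is linear, since the exact model behind The Economist's trend is not stated here. The inputs are the 2007 peak of about 50,000 active editors and the early-2014 figure of about 30,000. The symbols r (annual loss) and N₂₀₂₁ (projected active editors in 2021) are notation introduced only for this illustration:

```latex
\begin{aligned}
r &= \frac{50{,}000 - 30{,}000}{2014 - 2007} = \frac{20{,}000}{7} \approx 2{,}857 \text{ editors per year} \\
N_{2021} &= 30{,}000 - r\,(2021 - 2014) = 30{,}000 - 20{,}000 = 10{,}000
\end{aligned}
```

Under this linear reading, the seven years from 2014 to 2021 repeat the loss of the seven years from 2007 to 2014. That is where the second figure of roughly 20,000 editors comes from.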

No comment was made on which of the differing editing policies of the non-English Wikipedias might offer the English Wikipedia a way to reduce its substantial editor attrition.

Reception

Several Wikipedians have criticized Wikipedia's large and growing body of rules, which included over 50 policies and nearly 150,000 words as of 2014.

Critics have stated that Wikipedia exhibits systemic bias. In 2010, columnist and journalist Edwin Black criticized Wikipedia for being a mixture of "truth, half truth, and some falsehoods". Articles in The Chronicle of Higher Education and The Journal of Academic Librarianship have criticized Wikipedia's Undue Weight policy, concluding that because Wikipedia is explicitly not designed to provide correct information about a subject, but rather to focus on all the major viewpoints on the subject and give less attention to minor ones, it creates omissions that can lead to false beliefs based on incomplete information.

Journalists Oliver Kamm and Edwin Black noted (in 2010 and 2011, respectively) that articles are dominated by the loudest and most persistent voices, usually a group with an "ax to grind" on the topic. A 2008 article in Education Next Journal concluded that as a resource about controversial topics, Wikipedia is subject to manipulation and spin.

In 2006, the Wikipedia Watch criticism website listed dozens of examples of plagiarism in the English Wikipedia.

Accuracy of content

Articles for traditional encyclopedias such as Encyclopædia Britannica are carefully and deliberately written by experts, lending such encyclopedias a reputation for accuracy. However, a peer review in 2005 of forty-two scientific entries on both Wikipedia and Encyclopædia Britannica by the science journal Nature found few differences in accuracy, and concluded that "the average science entry in Wikipedia contained around four inaccuracies; Britannica, about three."

Reagle suggested that while the study reflects "a topical strength of Wikipedia contributors" in science articles, "Wikipedia may not have fared so well using a random sampling of articles or on humanities subjects." The findings by Nature were disputed by Encyclopædia Britannica, and in response, Nature gave a rebuttal of the points raised by Britannica.

In addition to the point-for-point disagreement between these two parties, others have examined the sample size and selection method used in the Nature effort, and suggested a "flawed study design" (in Nature's manual selection of articles, in part or in whole, for comparison), absence of statistical analysis (e.g., of reported confidence intervals), and a lack of study "statistical power" (i.e., owing to the small sample size of 42, or about 4 × 10¹, articles compared, versus set sizes greater than 10⁵ for Britannica and greater than 10⁶ for the English Wikipedia).

As a consequence of the open structure, Wikipedia "makes no guarantee of validity" of its content, since no one is ultimately responsible for any claims appearing in it. Concerns have been raised by PC World in 2009 regarding the lack of accountability that results from users' anonymity, the insertion of false information, vandalism, and similar problems.

Economist Tyler Cowen wrote: "If I had to guess whether Wikipedia or the median refereed journal article on economics was more likely to be true, after a not so long think I would opt for Wikipedia." He comments that some traditional sources of non-fiction suffer from systemic biases, that novel results are, in his opinion, over-reported in journal articles, and that relevant information is omitted from news reports. However, he also cautions that errors are frequently found on Internet sites, and that academics and experts must be vigilant in correcting them.

Critics argue that Wikipedia's open nature and a lack of proper sources for most of the information make it unreliable. Some commentators suggest that Wikipedia may be reliable, but that the reliability of any given article is not clear. Editors of traditional reference works such as the Encyclopædia Britannica have questioned the project's utility and status as an encyclopedia. Wikipedia co-founder Jimmy Wales has claimed that Wikipedia has largely avoided the problem of "fake news" because the Wikipedia community regularly debates the quality of sources in articles.

Wikipedia's open structure inherently makes it an easy target for Internet trolls, spammers, and various forms of paid advocacy seen as counterproductive to the maintenance of a neutral and verifiable online encyclopedia.

In response to paid advocacy editing and undisclosed editing issues, The Wall Street Journal reported that Wikipedia had strengthened its rules against undisclosed editing. The article stated that: "Beginning Monday [from the date of article, June 16, 2014], changes in Wikipedia's terms of use will require anyone paid to edit articles to disclose that arrangement. Katherine Maher, the nonprofit Wikimedia Foundation's chief communications officer, said the changes address a sentiment among volunteer editors that, 'we're not an advertising service; we're an encyclopedia.'" These issues, among others, had been parodied since the first decade of Wikipedia, notably by Stephen Colbert on The Colbert Report.

A Harvard law textbook, Legal Research in a Nutshell (2011), cites Wikipedia as a "general source" that "can be a real boon" in "coming up to speed in the law governing a situation" and, "while not authoritative, can provide basic facts as well as leads to more in-depth resources".

Discouragement in education

Most university lecturers discourage students from citing any encyclopedia in academic work, preferring primary sources; some specifically prohibit Wikipedia citations.

Wales stresses that encyclopedias of any type are not usually appropriate to use as citable sources, and should not be relied upon as authoritative. Wales once (2006 or earlier) said he received about ten emails weekly from students saying they got failing grades on papers because they cited Wikipedia; he told the students they got what they deserved. "For God's sake, you're in college; don't cite the encyclopedia", he said.

In February 2007, an article in The Harvard Crimson newspaper reported that a few of the professors at Harvard University were including Wikipedia articles in their syllabi, although without realizing the articles might change. In June 2007, former president of the American Library Association Michael Gorman condemned Wikipedia, along with Google, stating that academics who endorse the use of Wikipedia are "the intellectual equivalent of a dietitian who recommends a steady diet of Big Macs with everything".

Medical information

On March 5, 2014, Julie Beck, writing for The Atlantic magazine in an article titled "Doctors' #1 Source for Healthcare Information: Wikipedia", stated that "Fifty percent of physicians look up conditions on the (Wikipedia) site, and some are editing articles themselves to improve the quality of available information." Beck went on to describe new programs by Amin Azzam at the University of California, San Francisco, offering courses in which medical students learn to edit and improve Wikipedia articles on health-related issues, as well as internal quality-control programs within Wikipedia, organized by James Heilman, to bring a group of 200 health-related articles of central medical importance up to Wikipedia's highest standard through its Featured Article and Good Article peer review process.

In a May 7, 2014, follow-up article in The Atlantic titled "Can Wikipedia Ever Be a Definitive Medical Text?", Julie Beck quotes WikiProject Medicine's James Heilman as stating: "Just because a reference is peer-reviewed doesn't mean it's a high-quality reference." Beck added that: "Wikipedia has its own peer review process before articles can be classified as 'good' or 'featured.' Heilman, who has participated in that process before, says 'less than 1 percent' of Wikipedia's medical articles have passed."

Quality of writing

In 2008, researchers at Carnegie Mellon University found that the quality of a Wikipedia article would suffer rather than gain from adding more writers when the article lacked appropriate explicit or implicit coordination. For instance, when contributors rewrite small portions of an entry rather than making full-length revisions, high- and low-quality content may be intermingled within an entry.

Roy Rosenzweig, a history professor, stated that American National Biography Online outperformed Wikipedia in terms of its "clear and engaging prose", which, he said, was an important aspect of good historical writing. Contrasting Wikipedia's treatment of Abraham Lincoln with that of Civil War historian James McPherson in American National Biography Online, he said that both were essentially accurate and covered the major episodes in Lincoln's life, but praised "McPherson's richer contextualization [...] his artful use of quotations to capture Lincoln's voice [...] and [...] his ability to convey a profound message in a handful of words." By contrast, he gave an example of Wikipedia's prose that he found "both verbose and dull". Rosenzweig also criticized the "waffling—encouraged by the NPOV policy—[which] means that it is hard to discern any overall interpretive stance in Wikipedia history". While generally praising the article on William Clarke Quantrill, he quoted its conclusion, as it then stood, as an example of such "waffling": "Some historians [...] remember him as an opportunistic, bloodthirsty outlaw, while others continue to view him as a daring soldier and local folk hero."

Other critics have made similar charges that, even if Wikipedia articles are factually accurate, they are often written in a poor, almost unreadable style. Frequent Wikipedia critic Andrew Orlowski commented, "Even when a Wikipedia entry is 100 per cent factually correct, and those facts have been carefully chosen, it all too often reads as if it has been translated from one language to another then into a third, passing an illiterate translator at each stage."

A study of Wikipedia articles on cancer was conducted in 2010 by Yaacov Lawrence of the Kimmel Cancer Center at Thomas Jefferson University. The study was limited to those articles that could be found in the Physician Data Query and excluded those rated at the "start" or "stub" class level. Lawrence found the articles accurate but not very readable, and thought that "Wikipedia's lack of readability (to non-college readers) may reflect its varied origins and haphazard editing". The Economist argued that better-written articles tend to be more reliable: "inelegant or ranting prose usually reflects muddled thoughts and incomplete information".

Wikipedia articles can be assessed using various quality measures related to credibility, completeness, objectivity, readability, relevance, style and timeliness.

Coverage of topics and systemic bias

Wikipedia seeks to create a summary of all human knowledge in the form of an online encyclopedia, with each topic covered encyclopedically in one article. Since it has terabytes of disk space, it can have far more topics than can be covered by any printed encyclopedia. The exact degree and manner of coverage on Wikipedia is under constant review by its editors, and disagreements are not uncommon. Wikipedia contains materials that some people may find objectionable, offensive, or pornographic because of its policy that Wikipedia is not censored. This policy has sometimes proved controversial: in 2008, Wikipedia rejected an online petition against the inclusion of images of Muhammad in the English edition of its Muhammad article, citing this policy. The presence of politically, religiously, and pornographically sensitive materials in Wikipedia has led to the censorship of Wikipedia by national authorities in China and Pakistan, among other countries.

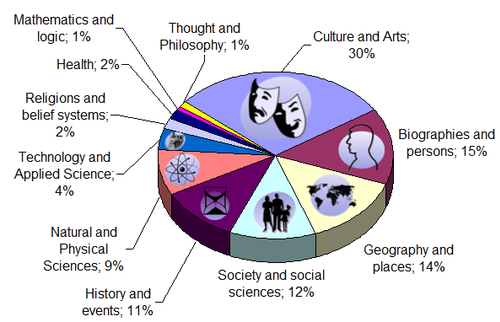

Pie chart of Wikipedia content by subject as of January 2008

A 2008 study conducted by researchers at Carnegie Mellon University and Palo Alto Research Center gave a distribution of topics as well as growth (from July 2006 to January 2008) in each field:

- Culture and the arts: 30% (210%)

- Biographies and persons: 15% (97%)

- Geography and places: 14% (52%)

- Society and social sciences: 12% (83%)

- History and events: 11% (143%)

- Natural and physical sciences: 9% (213%)

- Technology and the applied sciences: 4% (−6%)

- Religions and belief systems: 2% (38%)

- Health: 2% (42%)

- Mathematics and logic: 1% (146%)

- Thought and philosophy: 1% (160%)

These numbers refer only to the quantity of articles: it is possible for one topic to contain a large number of short articles and another to contain a small number of large ones. Through its "Wikipedia Loves Libraries" program, Wikipedia has partnered with major public libraries such as the New York Public Library for the Performing Arts to expand its coverage of underrepresented subjects and articles.

A 2011 study conducted by researchers at the University of Minnesota indicated that male and female editors focus on different coverage topics. Female editors were more concentrated in the People and Arts category, while male editors focused more on Geography and Science.

Coverage of topics and selection bias

Research conducted by Mark Graham of the Oxford Internet Institute in 2009 indicated that the geographic distribution of article topics is highly uneven, with Africa the most underrepresented.

Across 30 language editions of Wikipedia, historical articles and sections are generally Eurocentric and focused on recent events.

An editorial in The Guardian in 2014 noted, as a further example, that women porn stars are better covered than women writers.

Data has also shown that Africa-related material often faces omission, a knowledge gap that a July 2018 Wikimedia conference in Cape Town sought to address.

Systemic bias

When multiple editors contribute to one topic or set of topics, systemic bias may arise due to the demographic backgrounds of the editors. In 2011, Wales noted that the unevenness of coverage is a reflection of the demography of the editors, which predominantly consists of highly educated young males in the developed world (see previously). The October 22, 2013, essay by Tom Simonite in MIT's Technology Review titled "The Decline of Wikipedia" discussed the effect of systemic bias and policy creep on the downward trend in the number of editors.

Systemic bias on Wikipedia may follow that of culture generally, for example favoring certain nationalities, ethnicities or majority religions. It may more specifically follow the biases of Internet culture, which tends to be young, male, English-speaking, educated, technologically aware, and wealthy enough to spare time for editing. Biases of its own may include over-emphasis on topics such as pop culture, technology, and current events.

Taha Yasseri of the University of Oxford, in 2013, studied the statistical trends of systemic bias at Wikipedia introduced by editing conflicts and their resolution. His research examined the counterproductive work behavior of edit warring. Yasseri contended that simple reverts or "undo" operations were not the most significant measure of counterproductive behavior at Wikipedia, and relied instead on statistically detecting "reverting/reverted pairs" or "mutually reverting edit pairs". A mutually reverting edit pair occurs when one editor reverts the edit of another editor, who then, in turn, reverts the first editor. The results were tabulated for several language versions of Wikipedia. The English Wikipedia's three largest conflict rates belonged to the articles George W. Bush, Anarchism and Muhammad. By comparison, for the German Wikipedia, the three largest conflict rates at the time of the Oxford study were for the articles covering (i) Croatia, (ii) Scientology and (iii) 9/11 conspiracy theories.
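The idea of a mutually reverting edit pair can be made concrete with a short Python sketch. It assumes a hypothetical revert log that already records who reverted whom for a single article, oldest first (detecting the reverts themselves is left out), and it only finds the pairs; any weighting the Oxford study applied to turn pairs into a per-article conflict score is not reproduced here.

```python
from collections.abc import Iterable


def mutually_reverting_pairs(reverts: Iterable[tuple[str, str]]) -> set[frozenset[str]]:
    """Return unordered editor pairs {a, b} in which a reverted b and b later reverted a.

    `reverts` is an assumed, hypothetical input format: a chronological sequence
    of (reverting_editor, reverted_editor) tuples for one article.
    """
    earlier: set[tuple[str, str]] = set()  # (reverter, reverted) pairs seen so far
    mutual: set[frozenset[str]] = set()
    for reverter, reverted in reverts:
        if reverter == reverted:
            continue  # a self-revert is not a conflict between two editors
        # This revert answers an earlier revert made in the opposite direction.
        if (reverted, reverter) in earlier:
            mutual.add(frozenset((reverter, reverted)))
        earlier.add((reverter, reverted))
    return mutual


# Hypothetical revert log for one article, oldest first.
log = [("alice", "bob"), ("carol", "dave"), ("bob", "alice"), ("erin", "carol")]
print(mutually_reverting_pairs(log))
```

In this log only alice and bob revert each other, so they form the single mutual pair; carol is reverted by erin but never answers, which the sketch treats as an ordinary one-way revert.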

Researchers from Washington University developed a statistical model to measure systematic bias in the behavior of Wikipedia's users regarding controversial topics. The authors focused on behavioral changes of the encyclopedia's administrators after assuming the post, writing that systematic bias occurred after the fact.

Explicit content

Wikipedia has been criticized for allowing graphic content. Articles depicting what some critics have called objectionable content (such as Feces, Cadaver, Human penis, Vulva, and Nudity) contain graphic pictures and detailed information easily available to anyone with access to the internet, including children.

The site also includes sexual content such as images and videos of masturbation and ejaculation, illustrations of zoophilia, and photos from hardcore pornographic films in its articles. It also has non-sexual photographs of nude children.

The Wikipedia article about Virgin Killer, a 1976 album from the German heavy metal band Scorpions, features a picture of the album's original cover, which depicts a naked prepubescent girl. The original release cover caused controversy and was replaced in some countries. In December 2008, access to the Wikipedia article Virgin Killer was blocked for four days by most Internet service providers in the United Kingdom after the Internet Watch Foundation (IWF) decided the album cover was a potentially illegal indecent image and added the article's URL to a "blacklist" it supplies to British internet service providers.

In April 2010, Sanger wrote a letter to the Federal Bureau of Investigation, outlining his concerns that two categories of images on Wikimedia Commons contained child pornography and were in violation of US federal obscenity law. Sanger later clarified that the images, in one category related to pedophilia and in the other to lolicon, were not of real children, but said that they constituted "obscene visual representations of the sexual abuse of children", under the PROTECT Act of 2003. That law bans photographic child pornography and cartoon images and drawings of children that are obscene under American law. Sanger also expressed concerns about access to the images on Wikipedia in schools. Wikimedia Foundation spokesman Jay Walsh strongly rejected Sanger's accusation, saying that Wikipedia did not have "material we would deem to be illegal. If we did, we would remove it."

Following the complaint by Sanger, Wales deleted sexual images without consulting the community. After some editors who volunteer to maintain the site argued that the decision to delete had been made hastily, Wales voluntarily gave up some of the powers he had held up to that time as part of his co-founder status. He wrote in a message to the Wikimedia Foundation mailing-list that this action was "in the interest of encouraging this discussion to be about real philosophical/content issues, rather than be about me and how quickly I acted". Critics, including Wikipediocracy, have noticed that many of the pornographic images deleted from Wikipedia since 2010 have reappeared.

Privacy

One privacy concern in the case of Wikipedia is the right of a private citizen to remain a "private citizen" rather than a "public figure" in the eyes of the law. It is a battle between the right to be anonymous in cyberspace and the right to be anonymous in real life ("meatspace").

A particular problem occurs in the case of an individual who is relatively unimportant and for whom there exists a Wikipedia page against her or his wishes.

In January 2006, a German court ordered the German Wikipedia shut down within Germany because it stated the full name of Boris Floricic, aka "Tron", a deceased hacker. On February 9, 2006, the injunction against Wikimedia Deutschland was overturned, with the court rejecting the notion that Tron's right to privacy or that of his parents was being violated.

Wikipedia has a "Volunteer Response Team" that uses the OTRS system to handle queries without having to reveal the identities of the involved parties. This is used, for example, in confirming the permission for using individual images and other media in the project.

Sexism

Wikipedia has been described as harboring a battleground culture of sexism and harassment.

The perceived toxic attitudes and tolerance of violent and abusive language are also reasons put forth for the gender gap in Wikipedia editors.

In 2014, a female editor who requested a separate space on Wikipedia to discuss improving civility saw a male editor refer to her proposal with the words "the easiest way to avoid being called a cunt is not to act like one".

Operation

Wikimedia Foundation and Wikimedia movement affiliates

Katherine Maher is the third executive director of the Wikimedia Foundation, following the departure of Lila Tretikov in 2016.

Wikipedia is hosted and funded by the Wikimedia Foundation, a non-profit organization which also operates Wikipedia-related projects such as Wiktionary and Wikibooks. The foundation relies on public contributions and grants to fund its mission.

The foundation's 2013 IRS Form 990 shows revenue of $39.7 million and expenses of almost $29 million, with assets of $37.2 million and liabilities of about $2.3 million.

In May 2014, the Wikimedia Foundation named Lila Tretikov as its second executive director, taking over from Sue Gardner. The Wall Street Journal reported on May 1, 2014, that Tretikov's information technology background from her years at the University of California offered Wikipedia an opportunity to develop in more concentrated directions, guided by her often-repeated position statement that "Information, like air, wants to be free." The same Wall Street Journal article reported these directions of development according to an interview with Wikimedia spokesman Jay Walsh, who "said Tretikov would address that issue (paid advocacy) as a priority. 'We are really pushing toward more transparency... We are reinforcing that paid advocacy is not welcome.' Initiatives to involve greater diversity of contributors, better mobile support of Wikipedia, new geo-location tools to find local content more easily, and more tools for users in the second and third world are also priorities, Walsh said."

Following the departure of Tretikov from Wikipedia due to issues concerning the use of the "superprotection" feature, which some language versions of Wikipedia have adopted, Katherine Maher became the third executive director of the Wikimedia Foundation in June 2016.

Maher has stated that one of her priorities would be the issue of editor harassment endemic to Wikipedia, as identified by the Wikipedia board in December. Regarding the harassment issue, Maher stated: "It establishes a sense within the community that this is a priority... (and that correction requires that) it has to be more than words."

Wikipedia is also supported by many organizations and groups that are affiliated with the Wikimedia Foundation but independently run, called Wikimedia movement affiliates. These include Wikimedia chapters (which are national or sub-national organizations, such as Wikimedia Deutschland and Wikimédia France), thematic organizations (such as Amical Wikimedia for the Catalan language community), and user groups. These affiliates participate in the promotion, development, and funding of Wikipedia.

Software operations and support

The operation of Wikipedia depends on MediaWiki, a custom-made, free and open source wiki software platform written in PHP and built upon the MySQL database system. The software incorporates programming features such as a macro language, variables, a transclusion system for templates, and URL redirection. MediaWiki is licensed under the GNU General Public License and it is used by all Wikimedia projects, as well as many other wiki projects. Originally, Wikipedia ran on UseModWiki, written in Perl by Clifford Adams (Phase I), which initially required CamelCase for article hyperlinks; the present double bracket style was incorporated later. Starting in January 2002 (Phase II), Wikipedia began running on a PHP wiki engine with a MySQL database; this software was custom-made for Wikipedia by Magnus Manske. The Phase II software was repeatedly modified to accommodate the exponentially increasing demand. In July 2002 (Phase III), Wikipedia shifted to the third-generation software, MediaWiki, originally written by Lee Daniel Crocker.
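To illustrate the difference between the CamelCase and double-bracket link styles mentioned above, here is a Python sketch that extracts link targets under each convention. The regular expressions are assumed simplifications written for this example only; they are not the actual parsing rules of UseModWiki or MediaWiki.

```python
import re

# Assumed, simplified rules for illustration; not UseModWiki's or MediaWiki's parser.
# CamelCase: two or more capitalized word parts run together, e.g. "HomePage".
CAMEL_CASE_LINK = re.compile(r"\b(?:[A-Z][a-z]+){2,}\b")
# Double brackets: [[Target]] or [[Target|displayed label]].
DOUBLE_BRACKET_LINK = re.compile(r"\[\[([^\[\]|]+)(?:\|[^\[\]]*)?\]\]")


def camel_case_links(text: str) -> list[str]:
    """Return link targets implied by CamelCase words in `text`."""
    return CAMEL_CASE_LINK.findall(text)


def bracket_links(text: str) -> list[str]:
    """Return link targets written in double-bracket style in `text`."""
    return [target.strip() for target in DOUBLE_BRACKET_LINK.findall(text)]


print(camel_case_links("See HomePage and RecentChanges, not Paris."))
print(bracket_links("See [[Paris]] and [[Abraham Lincoln|Lincoln]]."))
```

Under the simplified CamelCase rule a single-word title such as "Paris" can never become a link, while the bracket form links any title, including ones containing spaces and ones displayed under a different label.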

Several MediaWiki extensions are installed to extend the functionality of the MediaWiki software.

In April 2005, a Lucene extension was added to MediaWiki's built-in search and Wikipedia switched from MySQL to Lucene for searching. The site currently uses Lucene Search 2.1, which is written in Java and based on Lucene library 2.3.

In July 2013, after extensive beta testing, a WYSIWYG (What You See Is What You Get) extension, VisualEditor, was opened to public use. It met with considerable resistance and criticism, and was described as "slow and buggy". The feature was afterward changed from opt-out to opt-in.

Automated editing

Computer programs called bots have been used widely to perform simple and repetitive tasks, such as correcting common misspellings and stylistic issues, or to start articles such as geography entries in a standard format from statistical data.

One controversial contributor who mass-created articles with his bot was reported to have created up to ten thousand articles on the Swedish Wikipedia on certain days.

There are also some bots designed to automatically notify editors when they make common editing errors (such as unmatched quotes or unmatched parentheses). Edits misidentified by a bot as the work of a banned editor can be restored by other editors. An anti-vandal bot tries to detect and revert vandalism quickly and automatically.
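A check of the kind these notification bots perform can be sketched in Python: a stack pairs each closing parenthesis or square bracket with the most recent unclosed opener, and straight double quotes are simply counted for parity. This is an assumed, simplified illustration, not the code of any actual Wikipedia bot; a real checker would also have to account for wiki markup and templates.

```python
def unmatched_brackets(text: str) -> list[int]:
    """Return sorted 0-based offsets of parentheses/square brackets with no partner."""
    closers = {")": "(", "]": "["}
    stack: list[tuple[str, int]] = []  # openers still waiting for a closer
    unmatched: list[int] = []
    for i, ch in enumerate(text):
        if ch in "([":
            stack.append((ch, i))
        elif ch in closers:
            if stack and stack[-1][0] == closers[ch]:
                stack.pop()  # properly nested pair
            else:
                unmatched.append(i)  # closer with no matching opener
    unmatched.extend(i for _, i in stack)  # openers that were never closed
    return sorted(unmatched)


def has_unbalanced_double_quotes(text: str) -> bool:
    """Return True if `text` contains an odd number of straight double quotes."""
    return text.count('"') % 2 == 1


snippet = 'The band (formed in 1965 released "Virgin Killer in 1976.'
print(unmatched_brackets(snippet), has_unbalanced_double_quotes(snippet))
```

A bot built on checks like these would report the offsets back to the editor rather than silently changing the text, since only a human can tell where the missing character belongs.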

Bots can also report edits from particular accounts or IP address ranges, as was done at the time of the MH17 jet downing incident in July 2014. Bots on Wikipedia must be approved prior to activation.
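Reporting edits from particular IP address ranges comes down to a membership test, which Python's standard `ipaddress` module supports directly. The watched ranges, the `(editor, page_title)` edit tuples, and the `flag_edits` function are all hypothetical; this sketch does not describe how any actual bot obtained its edit feed or which ranges it watched.

```python
import ipaddress
from collections.abc import Iterable

# Hypothetical watched ranges, taken from blocks reserved for documentation
# (RFC 5737 for IPv4, RFC 3849 for IPv6), so they match no real organization.
WATCHED_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def flag_edits(edits: Iterable[tuple[str, str]], ranges=WATCHED_RANGES) -> list[tuple[str, str]]:
    """Return the (editor, page_title) edits whose editor is an IP inside a watched range."""
    flagged = []
    for editor, title in edits:
        try:
            address = ipaddress.ip_address(editor)
        except ValueError:
            continue  # a registered username, not an anonymous IP editor
        # Membership is simply False when IP versions differ, so v4 and v6 ranges can be mixed.
        if any(address in network for network in ranges):
            flagged.append((editor, title))
    return flagged


recent = [
    ("192.0.2.17", "Page A"),
    ("ExampleUser", "Page B"),
    ("203.0.113.5", "Page C"),
    ("2001:db8::1", "Page D"),
]
print(flag_edits(recent))
```

Only the two IP editors that fall inside the watched networks are reported; the registered account and the IP outside the ranges pass through unflagged.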

According to Andrew Lih, the current expansion of Wikipedia to millions of articles would be difficult to envision without the use of such bots.

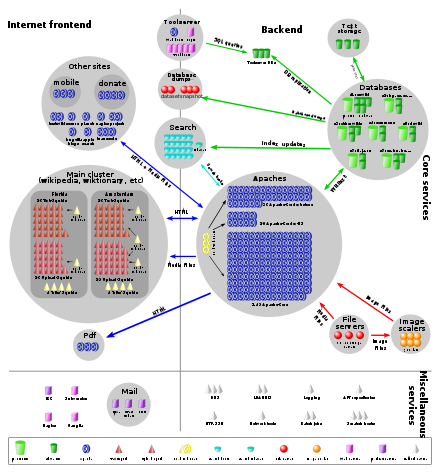

Hardware operations and support

Wikipedia receives between 25,000 and 60,000 page requests per second, depending on time of day. As of 2008, page requests are first passed to a front-end layer of Squid caching servers. Further statistics, based on a publicly available 3-month Wikipedia access trace, are available. Requests that cannot be served from the Squid cache are sent to load-balancing servers running the Linux Virtual Server software, which in turn pass them to one of the Apache web servers for page rendering from the database. The web servers deliver pages as requested, performing page rendering for all the language editions of Wikipedia. To increase speed further, rendered pages are cached in a distributed memory cache until invalidated, allowing page rendering to be skipped entirely for most common page accesses.
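The layered request path described above can be modeled in a few lines of Python. This is a toy sketch under stated assumptions: plain dictionaries stand in for the Squid layer and for the distributed memory cache, round-robin selection stands in for Linux Virtual Server load balancing, and invalidation simply clears both layers. None of these class names or policies come from Wikipedia's actual configuration.

```python
from itertools import cycle


class WebServer:
    """Stands in for one Apache server that renders pages from the database."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.renders = 0

    def render(self, title: str, database: dict[str, str]) -> str:
        self.renders += 1
        return f"<html>{database[title]}</html>"


class Cluster:
    """Toy model of the path: edge cache -> load balancer -> web server -> render cache."""

    def __init__(self, database: dict[str, str], n_servers: int = 3) -> None:
        self.database = database
        self.edge_cache: dict[str, str] = {}    # plays the role of the Squid layer
        self.render_cache: dict[str, str] = {}  # plays the role of the memory cache
        self.servers = [WebServer(f"web{i}") for i in range(n_servers)]
        self._balancer = cycle(self.servers)    # round-robin stands in for LVS

    def get(self, title: str) -> tuple[str, str]:
        """Return (html, name of the layer that answered the request)."""
        if title in self.edge_cache:
            return self.edge_cache[title], "edge-cache"
        server = next(self._balancer)  # the balancer hands the miss to a server
        html = self.render_cache.get(title)  # the server checks the shared cache first
        source = "render-cache"
        if html is None:
            html = server.render(title, self.database)  # the expensive path
            self.render_cache[title] = html
            source = "rendered"
        self.edge_cache[title] = html
        return html, source

    def invalidate(self, title: str) -> None:
        """Drop a page from both cache layers, e.g. after an edit."""
        self.edge_cache.pop(title, None)
        self.render_cache.pop(title, None)


db = {"Paris": "Capital of France"}
cluster = Cluster(db)
print(cluster.get("Paris")[1])  # rendered
print(cluster.get("Paris")[1])  # edge-cache
cluster.edge_cache.clear()      # simulate eviction from the front-end layer
print(cluster.get("Paris")[1])  # render-cache
```

The point of the layering shows up in the render counter: repeated requests for a popular page reach the expensive rendering step only once until the page is invalidated.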

Overview of system architecture as of December 2010

Wikipedia currently runs on dedicated clusters of Linux servers (mainly Ubuntu). As of December 2009, there were 300 in Florida and 44 in Amsterdam. By January 22, 2013, Wikipedia had migrated its primary data center to an Equinix facility in Ashburn, Virginia.

Internal research and operational development

With incoming donations exceeding seven digits in 2013, as recently reported, the Foundation has reached a level of assets at which the principles of industrial organization economics indicate a need to reinvest donations in the Foundation's internal research and development.

Two recent projects of such internal research and development have been the creation of a Visual Editor and a largely under-utilized "Thank" tab, both developed to ameliorate editor attrition; they have met with limited success.

Estimates of how much industrial organizations should reinvest in internal research and development were studied by Adam Jaffe, who recorded that a range of 4% to 25% annually was to be recommended, with high-end technology requiring the higher level of support for internal reinvestment.

At the 2013 level of contributions for Wikimedia, documented as 45 million dollars, the budget level recommended by Jaffe and Caballero for reinvestment into internal research and development is between 1.8 million and 11.25 million dollars annually. In 2016, the level of contributions was reported by Bloomberg News as $77 million annually, updating the Jaffe estimates for the higher level of support to between $3.08 million and $19.25 million annually.
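These budget figures follow from applying Jaffe's 4% to 25% band to each year's contributions. A short Python check reproduces them; the helper name `rd_budget_range` is hypothetical, and the upper bounds come out to exactly $11.25 million and $19.25 million before any rounding.

```python
def rd_budget_range(contributions_usd: int, low_pct: int = 4, high_pct: int = 25) -> tuple[int, int]:
    """Return (low, high) annual R&D reinvestment in dollars for a percentage band.

    Integer arithmetic avoids floating-point rounding; for these inputs the
    division by 100 is exact.
    """
    return contributions_usd * low_pct // 100, contributions_usd * high_pct // 100


for year, contributions in [(2013, 45_000_000), (2016, 77_000_000)]:
    low, high = rd_budget_range(contributions)
    print(f"{year}: ${low / 1e6:.2f}M to ${high / 1e6:.2f}M")
```

This prints a range of $1.80M to $11.25M for 2013 and $3.08M to $19.25M for 2016.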

Internal news publications

Community-produced news publications include the English Wikipedia's The Signpost, founded in 2005 by Michael Snow, an attorney, Wikipedia administrator and former chair of the Wikimedia Foundation board of trustees. It covers news and events from the site, as well as major events from other Wikimedia projects, such as Wikimedia Commons. Similar publications are the German-language Kurier and the Portuguese-language Correio da Wikipédia. Other past and present community news publications on English Wikipedia include the "Wikiworld" web comic, the Wikipedia Weekly podcast, and newsletters of specific WikiProjects like The Bugle from WikiProject Military History and the monthly newsletter from The Guild of Copy Editors. There are also a number of publications from the Wikimedia Foundation and multilingual publications such as the Wikimedia Blog and This Month in Education.

Access to content

Content licensing

When the project was started in 2001, all text in Wikipedia was covered by the GNU Free Documentation License (GFDL), a copyleft license permitting the redistribution, creation of derivative works, and commercial use of content while authors retain copyright of their work. The GFDL was created for software manuals that come with free software programs licensed under the GPL. This made it a poor choice for a general reference work: for example, the GFDL requires reprints of materials from Wikipedia to come with a full copy of the GFDL text. In December 2002, the Creative Commons license was released: it was specifically designed for creative works in general, not just for software manuals. The license gained popularity among bloggers and others distributing creative works on the Web. The Wikipedia project sought to switch to Creative Commons.

Because the two licenses, GFDL and Creative Commons, were incompatible, in November 2008, at the project's request, the Free Software Foundation (FSF) released a new version of the GFDL designed specifically to allow Wikipedia to relicense its content to CC BY-SA by August 1, 2009. (Because Wikipedia's text was licensed under the GFDL with the option of using any later version, the new version automatically covered Wikipedia's contents.) In April 2009, Wikipedia and its sister projects held a community-wide referendum that approved the switch, which took effect in June 2009.

The handling of media files (e.g. image files) varies across language editions. Some language editions, such as the English Wikipedia, include non-free image files under fair use doctrine, while others have opted not to, in part because of the lack of fair use doctrines in their home countries (e.g. in Japanese copyright law). Media files covered by free content licenses (e.g. Creative Commons' CC BY-SA) are shared across language editions via the Wikimedia Commons repository, a project operated by the Wikimedia Foundation. Wikipedia's accommodation of varying international copyright laws regarding images has led some to observe that its photographic coverage of topics lags behind the quality of the encyclopedic text.

The Wikimedia Foundation is not a licensor of content, but merely a hosting service for the contributors (and licensors) of Wikipedia. This position has been successfully defended in court.

Methods of access

Because Wikipedia content is distributed under an open license, anyone can reuse or re-distribute it at no charge. The content of Wikipedia has been published in many forms, both online and offline, outside of the Wikipedia website.

- Websites – Thousands of "mirror sites" exist that republish content from Wikipedia: two prominent ones that also include content from other reference sources are Reference.com and Answers.com. Another example is Wapedia, which began to display Wikipedia content in a mobile-device-friendly format before Wikipedia itself did.

- Mobile apps – A variety of mobile apps provide access to Wikipedia on hand-held devices, including both Android and iOS devices.

- Search engines – Some web search engines make special use of Wikipedia content when displaying search results: examples include Bing (via technology gained from Powerset) and DuckDuckGo.

- Compact discs, DVDs – Collections of Wikipedia articles have been published on optical discs. An English version, 2006 Wikipedia CD Selection, contained about 2,000 articles. The Polish-language version contains nearly 240,000 articles. There are German- and Spanish-language versions as well. Also, "Wikipedia for Schools", the Wikipedia series of CDs / DVDs produced by Wikipedians and SOS Children, is a free, hand-checked, non-commercial selection from Wikipedia designed around the UK National Curriculum and intended to be useful for much of the English-speaking world. The project is available online; an equivalent print encyclopedia would require roughly 20 volumes.

- Printed books – There are efforts to put a select subset of Wikipedia's articles into printed book form. Since 2009, tens of thousands of print-on-demand books that reproduced English, German, Russian and French Wikipedia articles have been produced by the American company Books LLC and by three Mauritian subsidiaries of the German publisher VDM.

- Semantic Web – The website DBpedia, begun in 2007, extracts data from the infoboxes and category declarations of the English-language Wikipedia. Wikimedia has created the Wikidata project with a similar objective: storing the basic facts from each page of Wikipedia and the other WMF wikis and making them available in a queryable semantic format, RDF. The project is still under development. As of February 2014 it had 15,000,000 items and 1,000 properties for describing them.

Obtaining the full contents of Wikipedia for reuse presents challenges, since direct cloning via a web crawler is discouraged. Wikipedia publishes "dumps" of its contents, but these are text-only; as of 2007 there was no dump available of Wikipedia's images.

Several language editions of Wikipedia also maintain a reference desk, where volunteers answer questions from the general public. According to a study by Pnina Shachaf in the Journal of Documentation, the quality of the Wikipedia reference desk is comparable to that of a standard library reference desk, with an accuracy of 55%.

Mobile access

The mobile version of the English Wikipedia's main page

Wikipedia's original medium was for users to read and edit content using any standard web browser through a fixed Internet connection. Although Wikipedia content has been accessible through the mobile web since July 2013, The New York Times on February 9, 2014, quoted Erik Möller, deputy director of the Wikimedia Foundation, as stating that the transition of Internet traffic from desktops to mobile devices was significant and a cause for concern and worry. The article in The New York Times reported comparison statistics for mobile edits, stating: "Only 20 percent of the readership of the English-language Wikipedia comes via mobile devices, a figure substantially lower than the percentage of mobile traffic for other media sites, many of which approach 50 percent. And the shift to mobile editing has lagged even more." The New York Times reports that Möller has assigned "a team of 10 software developers focused on mobile", out of a total of approximately 200 employees working at the Wikimedia Foundation. One principal concern cited by The New York Times as a cause for "worry" is whether Wikipedia can effectively address attrition in the number of editors it attracts to edit and maintain its content in a mobile access environment.

Bloomberg Businessweek reported in July 2014 that Google's Android mobile operating system had the largest share of global smartphone shipments for 2013, with 78.6% of the market, ahead of its closest competitor, iOS, with 15.2%. At the time of Lila Tretikov's appointment and her posted web interview with Sue Gardner in May 2014, Wikimedia representatives made a technical announcement concerning the number of mobile access systems in the market seeking access to Wikipedia. Directly after the posted web interview, the representatives stated that Wikimedia would apply an all-inclusive approach to accommodate as many mobile access systems as possible in its efforts to expand general mobile access, including BlackBerry and the Windows Phone system, making market share a secondary issue.

The latest version of the Android app for Wikipedia was released on July 23, 2014, to generally positive reviews, scoring over four out of a possible five in a poll of approximately 200,000 users downloading from Google Play. The latest version for iOS was released on April 3, 2013, to similar reviews.

Access to Wikipedia from mobile phones was possible as early as 2004, through the Wireless Application Protocol (WAP), via the Wapedia service. In June 2007 Wikipedia launched en.mobile.wikipedia.org, an official website for wireless devices. In 2009 a newer mobile service was officially released, located at en.m.wikipedia.org, which caters to more advanced mobile devices such as the iPhone, Android-based devices or WebOS-based devices. Several other methods of mobile access to Wikipedia have emerged. Many devices and applications optimize or enhance the display of Wikipedia content for mobile devices, while some also incorporate additional features such as the use of Wikipedia metadata like geoinformation.

Wikipedia Zero is an initiative of the Wikimedia Foundation to expand the reach of the encyclopedia to developing countries.

Andrew Lih and Andrew Brown both maintain that editing Wikipedia with smartphones is difficult and that this discourages potential new contributors. The number of Wikipedia editors has been falling for several years running, and Tom Simonite of MIT Technology Review claims the bureaucratic structure and rules are a factor in this. Simonite alleges that some Wikipedians use the labyrinthine rules and guidelines to dominate others, and that those editors have a vested interest in keeping the status quo.

Lih alleges there is serious disagreement among existing contributors about how to resolve this. Lih fears for Wikipedia's long-term future, while Brown fears that problems with Wikipedia will remain and that rival encyclopedias will not replace it.

Cultural impact

Trusted source to combat fake news

In 2017–18, after a barrage of false news reports, both Facebook and YouTube announced they would rely on Wikipedia to help their users evaluate reports and reject false news. Noam Cohen, writing in The Washington Post, states, "YouTube’s reliance on Wikipedia to set the record straight builds on the thinking of another fact-challenged platform, the Facebook social network, which announced last year that Wikipedia would help its users root out 'fake news'."

As a measure of how engaged visitors to the site are, Alexa records 3.10 daily page views per visitor and a daily time on site of 4.11 minutes.

Readership

Wikipedia is extremely popular. In February 2014, The New York Times reported that Wikipedia is ranked fifth globally among all websites, stating "With 18 billion page views and nearly 500 million unique visitors a month [...] Wikipedia trails just Yahoo, Facebook, Microsoft and Google, the largest with 1.2 billion unique visitors."

In addition to logistic growth in the number of its articles, Wikipedia has steadily gained status as a general reference website since its inception in 2001. About 50% of search engine traffic to Wikipedia comes from Google, a good portion of which is related to academic research. The number of readers of Wikipedia worldwide reached 365 million at the end of 2009. The Pew Internet and American Life project found that one third of US Internet users consulted Wikipedia. In 2011 Business Insider estimated that Wikipedia would be worth $4 billion if it ran advertisements.

According to the "Wikipedia Readership Survey 2011", the average age of Wikipedia readers is 36, with rough parity between genders. Almost half of Wikipedia readers visit the site more than five times a month, and a similar number of readers specifically look for Wikipedia in search engine results. About 47% of Wikipedia readers do not realize that Wikipedia is operated by a non-profit organization.

Cultural significance

Wikipedia Monument in Słubice, Poland

Wikipedia's content has also been used in academic studies, books, conferences, and court cases. The Parliament of Canada's website refers to Wikipedia's article on same-sex marriage in the "related links" section of its "further reading" list for the Civil Marriage Act. The encyclopedia's assertions are increasingly used as a source by organizations such as the US federal courts and the World Intellectual Property Organization, though mainly for supporting information rather than information decisive to a case. Content appearing on Wikipedia has also been cited as a source and referenced in some US intelligence agency reports. In December 2008, the scientific journal RNA Biology launched a new section for descriptions of families of RNA molecules, and it requires authors who contribute to the section to also submit a draft article on the RNA family for publication in Wikipedia.

Wikipedia has also been used as a source in journalism, often without attribution, and several reporters have been dismissed for plagiarizing from Wikipedia.

In 2006, Time magazine recognized Wikipedia's participation (along with YouTube, Reddit, MySpace, and Facebook) in the rapid growth of online collaboration and interaction by millions of people worldwide.

In July 2007 Wikipedia was the focus of a 30-minute documentary on BBC Radio 4, which argued that, with increased usage and awareness, the number of references to Wikipedia in popular culture is such that the word is one of a select band of 21st-century nouns that are so familiar (Google, Facebook, YouTube) that they no longer need explanation.

On September 28, 2007, Italian politician Franco Grillini raised a parliamentary question with the minister of cultural resources and activities about the necessity of freedom of panorama. He said that the lack of such freedom forced Wikipedia, "the seventh most consulted website", to forbid all images of modern Italian buildings and art, and claimed this was hugely damaging to tourist revenues.

Jimmy Wales receiving the Quadriga A Mission of Enlightenment award

On September 16, 2007, The Washington Post reported that Wikipedia had become a focal point in the 2008 US election campaign, saying: "Type a candidate's name into Google, and among the first results is a Wikipedia page, making those entries arguably as important as any ad in defining a candidate. Already, the presidential entries are being edited, dissected and debated countless times each day." An October 2007 Reuters article, titled "Wikipedia page the latest status symbol", reported on the recent phenomenon of a Wikipedia article being treated as confirmation of one's notability.

Active participation also has an impact. Law students have been assigned to write Wikipedia articles as an exercise in clear and succinct writing for an uninitiated audience.

A working group led by Peter Stone, formed as part of the Stanford-based project One Hundred Year Study on Artificial Intelligence, called Wikipedia in its report "the best-known example of crowdsourcing... that far exceeds traditionally-compiled information sources, such as encyclopedias and dictionaries, in scale and depth."

In a 2017 opinion piece for Wired, Hossein Derakhshan described Wikipedia as "one of the last remaining pillars of the open and decentralized web" and contrasted its existence as a text-based source of knowledge with social media and social networking services, the latter having "since colonized the web for television's values."

For Derakhshan, Wikipedia's goal as an encyclopedia represents the Age of Enlightenment tradition of rationality triumphing over emotions, a trend which he considers "endangered" due to the "gradual shift from a typographic culture to a photographic one, which in turn mean[s] a shift from rationality to emotions, exposition to entertainment." Rather than "sapere aude" (lit. 'dare to know'), social networks have led to a culture of "[d]are not to care to know." Meanwhile, Wikipedia faces "a more concerning problem" than funding, namely "a flattening growth rate in the number of contributors to the website." Consequently, the challenge for Wikipedia and those who use it is to "save Wikipedia and its promise of a free and open collection of all human knowledge amid the conquest of new and old television—how to collect and preserve knowledge when nobody cares to know."

Awards

Wikipedia team visiting the Parliament of Asturias

Wikipedians meeting after the Asturias awards ceremony