From Wikipedia, the free encyclopedia

Beckman DU640 UV/Vis spectrophotometer

UV spectroscopy or UV–visible spectrophotometry (UV–Vis or UV/Vis) refers to absorption spectroscopy or reflectance spectroscopy in part of the ultraviolet and the full, adjacent visible regions of the electromagnetic spectrum.

Being relatively inexpensive and easily implemented, this methodology is widely used in diverse applied and fundamental applications. The only requirement is that the sample absorb in the UV–Vis region, i.e., be a chromophore. Absorption spectroscopy is complementary to fluorescence spectroscopy.

Parameters of interest, besides the wavelength of measurement, are absorbance (A), transmittance (%T) or reflectance (%R), and their change with time.

Optical transitions

Most molecules and ions absorb energy in the ultraviolet or visible range, i.e., they are chromophores. The absorbed photon excites an electron in the chromophore to higher-energy molecular orbitals, giving rise to an excited state.

For organic chromophores, four possible types of transitions are assumed: π–π*, n–π*, σ–σ*, and n–σ*. Transition metal complexes are often colored (i.e., absorb visible light) owing to the presence of multiple electronic states associated with incompletely filled d orbitals.

Applications

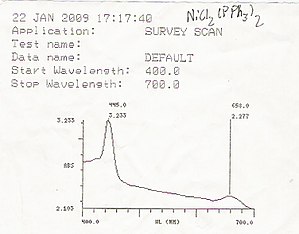

An example of a UV/Vis readout

UV/Vis spectroscopy is routinely used in analytical chemistry for the quantitative determination of diverse analytes or samples, such as transition metal ions, highly conjugated organic compounds, and biological macromolecules. Spectroscopic analysis is commonly carried out in solutions, but solids and gases may also be studied.

- Organic compounds, especially those with a high degree of conjugation, also absorb light in the UV or visible regions of the electromagnetic spectrum. The solvents for these determinations are often water for water-soluble compounds, or ethanol for organic-soluble compounds. (Organic solvents may have significant UV absorption; not all solvents are suitable for use in UV spectroscopy. Ethanol absorbs very weakly at most wavelengths.) Solvent polarity and pH can affect the absorption spectrum of an organic compound. For tyrosine, for example, both the absorption maxima and the molar extinction coefficient increase when the pH rises from 6 to 13 or when solvent polarity decreases.

- While charge transfer complexes also give rise to colours, the colours are often too intense to be used for quantitative measurement.

The Beer–Lambert law states that the absorbance of a solution is directly proportional to the concentration of the absorbing species in the solution and the path length.

Thus, for a fixed path length, UV/Vis spectroscopy can be used to determine the concentration of the absorber in a solution. It is necessary to know how quickly the absorbance changes with concentration. This can be taken from references (tables of molar extinction coefficients), or, more accurately, determined from a calibration curve.
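A minimal sketch of the calibration-curve approach, assuming the standards lie in the linear range of the Beer–Lambert law. The helper names `fit_line` and `concentration_from_absorbance` are hypothetical, the standard concentrations and absorbances are invented illustrative values, and the fit is plain ordinary least squares.

```python
# Linear calibration curve: fit A = slope * c + intercept to standards,
# then invert the line to estimate the concentration of an unknown.


def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def concentration_from_absorbance(absorbance, slope, intercept):
    """Invert the calibration line for an unknown sample."""
    return (absorbance - intercept) / slope


# Hypothetical standards (concentration in mM, absorbance in AU)
standards_c = [0.0, 1.0, 2.0, 3.0, 4.0]
standards_a = [0.01, 0.26, 0.51, 0.76, 1.01]

slope, intercept = fit_line(standards_c, standards_a)
unknown_c = concentration_from_absorbance(0.635, slope, intercept)
print(f"slope={slope:.4f} AU/mM, intercept={intercept:.4f} AU, c={unknown_c:.3f} mM")
```

The slope of the fitted line plays the role of εL; reading it from the standards rather than from a table is what makes the calibration curve the more accurate option.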

A UV/Vis spectrophotometer may be used as a detector for HPLC. The presence of an analyte gives a response assumed to be proportional to the concentration. For accurate results, the instrument's response to the analyte in the unknown should be compared with the response to a standard; this is very similar to the use of calibration curves. The response (e.g., peak height) for a particular concentration is known as the response factor.
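A sketch of single-point comparison against a standard, assuming the detector response is proportional to concentration through zero. Here the response factor is taken as response divided by concentration (one common convention); the function names and the peak heights are hypothetical.

```python
# Single-point external standard: response factor = response / concentration.


def response_factor(standard_response, standard_concentration):
    """Detector response per unit concentration, from one standard."""
    return standard_response / standard_concentration


def concentration_from_response(sample_response, rf):
    """Assumes the response is proportional to concentration (through zero)."""
    return sample_response / rf


# Hypothetical peak heights (arbitrary detector units) and concentration (mg/L)
rf = response_factor(standard_response=1200.0, standard_concentration=2.0)
unknown = concentration_from_response(sample_response=900.0, rf=rf)
print(rf, unknown)  # 600.0 1.5
```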

The wavelengths of absorption peaks can be correlated with the types of bonds in a given molecule and are valuable in determining the functional groups within a molecule. The Woodward–Fieser rules, for instance, are a set of empirical observations used to predict λmax, the wavelength of the most intense UV/Vis absorption, for conjugated organic compounds such as dienes and ketones.
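To show how such empirical rules are applied, the sketch below adds increments to a parent value for a conjugated diene. The numbers are common textbook figures covering only hydrocarbon substituents; published tables differ slightly (some list 217 nm for an acyclic parent), so treat them as assumptions. `diene_lambda_max` is a hypothetical helper.

```python
# Woodward–Fieser style estimate of lambda_max (nm) for a conjugated diene.
# Values are common textbook figures; tables vary between sources.

BASE_NM = {
    "heteroannular": 214,  # some tables give 217 nm for acyclic dienes
    "homoannular": 253,
}
INCREMENT_NM = {
    "alkyl_or_ring_residue": 5,
    "exocyclic_double_bond": 5,
    "extending_double_bond": 30,
}


def diene_lambda_max(parent, **counts):
    """Parent value plus increments; counts maps INCREMENT_NM keys to occurrences."""
    total = BASE_NM[parent]
    for name, count in counts.items():
        total += INCREMENT_NM[name] * count
    return total


# A heteroannular diene with three ring residues and one exocyclic C=C
print(diene_lambda_max("heteroannular", alkyl_or_ring_residue=3, exocyclic_double_bond=1))  # 234
```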

The spectrum alone is not, however, a specific test for any given sample. The nature of the solvent, the pH of the solution, temperature, high electrolyte concentrations, and the presence of interfering substances can influence the absorption spectrum. Experimental variations such as the slit width (effective bandwidth) of the spectrophotometer will also alter the spectrum. To apply UV/Vis spectroscopy to analysis, these variables must be controlled or accounted for in order to identify the substances present.

The method is most often used in a quantitative way to determine concentrations of an absorbing species in solution, using the Beer–Lambert law:

$$A = \log_{10}\left(\frac{I_0}{I}\right) = \varepsilon c L,$$

where A is the measured absorbance (in absorbance units (AU)), $I_0$ is the intensity of the incident light at a given wavelength, $I$ is the transmitted intensity, L the path length through the sample, and c the concentration of the absorbing species. For each species and wavelength, ε is a constant known as the molar absorptivity or extinction coefficient. This constant is a fundamental molecular property in a given solvent, at a particular temperature and pressure, and has units of $\mathrm{M^{-1}\,cm^{-1}}$.
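As a worked example of rearranging the law to c = A / (εL), the sketch below uses ε ≈ 6220 M⁻¹ cm⁻¹, the value commonly quoted for NADH at 340 nm. The intensity readings are invented for illustration and the helper names are hypothetical.

```python
import math


def absorbance(incident, transmitted):
    """A = log10(I0 / I)."""
    return math.log10(incident / transmitted)


def concentration(a, epsilon, path_cm):
    """Rearranged Beer–Lambert law: c = A / (epsilon * L)."""
    return a / (epsilon * path_cm)


EPSILON_NADH_340 = 6220.0  # M^-1 cm^-1, commonly quoted value for NADH at 340 nm

a = absorbance(incident=1000.0, transmitted=488.7)  # hypothetical intensities
c = concentration(a, EPSILON_NADH_340, path_cm=1.0)
print(f"A = {a:.3f} AU, c = {c:.2e} M")
```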

The absorbance and extinction ε are sometimes defined in terms of the natural logarithm instead of the base-10 logarithm.
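Because $\ln x = \ln(10)\,\log_{10} x$, the two conventions differ only by a constant factor. Writing $A_e$ and $\varepsilon_e$ for the natural-logarithm quantities (notation chosen here for illustration):

$$A_e = \ln\frac{I_0}{I} = \ln(10)\,\log_{10}\frac{I_0}{I} \approx 2.303\,A, \qquad \varepsilon_e = \ln(10)\,\varepsilon \approx 2.303\,\varepsilon$$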

The Beer–Lambert law is useful for characterizing many compounds but does not hold as a universal relationship for the concentration and absorption of all substances. A second-order polynomial relationship between absorption and concentration is sometimes encountered for very large, complex molecules such as organic dyes (Xylenol Orange or Neutral Red, for example).
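Where such a curved response is found, a second-order calibration can be fitted and inverted. This is a minimal pure-Python sketch, assuming least-squares fitting of A = b0 + b1·c + b2·c² and choosing the root that lies inside the calibrated concentration range; the data points and helper names are hypothetical.

```python
import math

# Second-order calibration: fit A = b0 + b1*c + b2*c**2 by least squares,
# then solve the quadratic for c given a measured absorbance.


def solve_3x3(matrix, rhs):
    """Gaussian elimination with partial pivoting for a 3x3 linear system."""
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, 3):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, 4):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (aug[r][3] - sum(aug[r][k] * x[k] for k in range(r + 1, 3))) / aug[r][r]
    return x


def fit_quadratic(conc, absorb):
    """Normal equations for the least-squares quadratic; returns [b0, b1, b2]."""
    s = [sum(c ** p for c in conc) for p in range(5)]
    t = [sum(a * c ** p for c, a in zip(conc, absorb)) for p in range(3)]
    normal = [[s[0], s[1], s[2]], [s[1], s[2], s[3]], [s[2], s[3], s[4]]]
    return solve_3x3(normal, t)


def concentration_from_quadratic(absorbance, coeffs, c_min, c_max):
    """Root of b2*c^2 + b1*c + (b0 - A) = 0 that lies inside the calibrated range."""
    b0, b1, b2 = coeffs
    if abs(b2) < 1e-12:  # effectively linear
        return (absorbance - b0) / b1
    disc = b1 * b1 - 4.0 * b2 * (b0 - absorbance)
    if disc < 0:
        raise ValueError("absorbance is outside the fitted curve")
    roots = [(-b1 + sign * math.sqrt(disc)) / (2.0 * b2) for sign in (1.0, -1.0)]
    valid = [r for r in roots if c_min <= r <= c_max]
    if not valid:
        raise ValueError("no solution inside the calibrated range")
    return valid[0]


# Hypothetical standards that follow a curved response
conc = [0.0, 1.0, 2.0, 3.0, 4.0]
absorb = [0.02, 0.49, 0.90, 1.25, 1.54]
coeffs = fit_quadratic(conc, absorb)
print(coeffs, concentration_from_quadratic(1.0825, coeffs, 0.0, 4.0))
```

Restricting the answer to the calibrated range matters because a quadratic generally has a second root that has no physical meaning for the calibration.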

UV–Vis spectroscopy is also used in the semiconductor industry to measure the thickness and optical properties of thin films on a wafer. UV–Vis spectrometers are used to measure the reflectance of light, and the reflectance data can be analyzed via the Forouhi–Bloomer dispersion equations to determine the index of refraction ($n$) and the extinction coefficient ($k$) of a given film across the measured spectral range.

Practical considerations

The Beer–Lambert law has implicit assumptions that must be met experimentally for it to apply; otherwise there is a possibility of deviations from the law.

For instance, the chemical makeup and physical environment of the sample can alter its extinction coefficient. The chemical and physical conditions of a test sample therefore must match reference measurements for conclusions to be valid. Worldwide, pharmacopoeias such as the United States Pharmacopeia (USP) and the European Pharmacopoeia (Ph. Eur.) require spectrophotometers to perform according to strict regulatory requirements encompassing factors such as stray light and wavelength accuracy.

Spectral bandwidth

It is important to have a monochromatic source of radiation for the light incident on the sample cell. Monochromaticity is measured as the width of the "triangle" formed by the intensity spike at one half of the peak intensity (the full width at half maximum). A given spectrometer has a spectral bandwidth that characterizes how monochromatic the incident light is. If this bandwidth is comparable to (or larger than) the width of the absorption line, the measured extinction coefficient will be in error. In reference measurements, the instrument bandwidth (bandwidth of the incident light) is kept below the width of the spectral lines. When a test material is being measured, the bandwidth of the incident light should also be sufficiently narrow. Reducing the spectral bandwidth reduces the energy passed to the detector and will therefore require a longer measurement time to achieve the same signal-to-noise ratio.
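The bandwidth effect can be illustrated numerically. This sketch assumes a hypothetical model: a Gaussian absorption band (10 nm wide at half maximum) and a triangular slit function. Because the detector sums light, the transmittance, not the absorbance, is averaged over the slit before converting back to absorbance.

```python
import math

# Effect of spectral bandwidth on a measured peak absorbance.
# Hypothetical model: Gaussian absorption band, triangular slit function.


def band_absorbance(wl, peak_wl, peak_abs, band_fwhm):
    """Gaussian band that falls to half of peak_abs at +/- band_fwhm / 2."""
    x = (wl - peak_wl) / band_fwhm
    return peak_abs * 2.0 ** (-4.0 * x * x)


def apparent_absorbance(center_wl, slit_fwhm, peak_wl, peak_abs, band_fwhm, n=401):
    """Average the transmittance over the slit, then convert to absorbance."""
    weighted_t = 0.0
    total_w = 0.0
    for i in range(n):
        # Triangle base spans +/- slit_fwhm, so its width at half height is slit_fwhm
        offset = -slit_fwhm + 2.0 * slit_fwhm * i / (n - 1)
        weight = 1.0 - abs(offset) / slit_fwhm
        a = band_absorbance(center_wl + offset, peak_wl, peak_abs, band_fwhm)
        weighted_t += weight * 10.0 ** (-a)
        total_w += weight
    return -math.log10(weighted_t / total_w)


for slit in (0.1, 2.0, 10.0):
    print(slit, round(apparent_absorbance(500.0, slit, 500.0, 1.0, 10.0), 3))
```

With a slit as wide as the band, the apparent peak absorbance comes out well below the true value of 1.0, which is the error described above.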

Wavelength error

In liquids, the extinction coefficient usually changes slowly with wavelength. A peak of the absorbance curve (a wavelength where the absorbance reaches a maximum) is where the rate of change in absorbance with wavelength is smallest. Measurements are therefore usually made at a peak to minimize the effect of wavelength errors in the instrument, that is, errors caused by measuring at a wavelength whose extinction coefficient differs from the one assumed.
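A small numerical comparison makes the argument concrete. Assuming a hypothetical Gaussian band centred at 500 nm with a 20 nm width at half maximum, the sketch computes the absorbance error caused by a 1 nm wavelength error when the instrument is set at the peak versus on the flank of the band.

```python
# Absorbance error from a 1 nm wavelength error, at the peak vs on the flank.
# Hypothetical Gaussian band: 500 nm centre, 20 nm full width at half maximum.


def band_absorbance(wl, peak_wl=500.0, peak_abs=1.0, band_fwhm=20.0):
    x = (wl - peak_wl) / band_fwhm
    return peak_abs * 2.0 ** (-4.0 * x * x)


def absorbance_error(nominal_wl, wavelength_error_nm):
    """Difference between the absorbance actually measured and the one assumed."""
    return abs(band_absorbance(nominal_wl + wavelength_error_nm) - band_absorbance(nominal_wl))


print(f"at peak:  {absorbance_error(500.0, 1.0):.4f} AU")  # set at the maximum
print(f"on flank: {absorbance_error(510.0, 1.0):.4f} AU")  # set at half maximum
```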

Stray light

Another important factor is the purity of the light used. The most important influence on this is the stray light level of the monochromator.

The detector used is broadband; it responds to all the light that reaches it. If a significant amount of the light passed through the sample contains wavelengths that have much lower extinction coefficients than the nominal one, the instrument will report an incorrectly low absorbance. Any instrument will reach a point where an increase in sample concentration will not result in an increase in the reported absorbance, because the detector is simply responding to the stray light. In practice the concentration of the sample or the optical path length must be adjusted to place the unknown absorbance within a range that is valid for the instrument. Sometimes an empirical calibration function is developed, using known concentrations of the sample, to allow measurements into the region where the instrument is becoming non-linear.

As a rough guide, an instrument with a single monochromator would typically have a stray light level corresponding to about 3 absorbance units (AU), which would make measurements above about 2 AU problematic. A more complex instrument with a double monochromator would have a stray light level corresponding to about 6 AU, and would therefore allow measurement over a much wider absorbance range.
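A simple model shows why the reading levels off. The sketch assumes a hypothetical stray-light fraction s of the incident intensity that reaches the detector unabsorbed (and is also present in the blank), so the reported absorbance is −log10((T + s)/(1 + s)). A level "corresponding to about 3 AU" is taken to mean s = 10⁻³, and "about 6 AU" to mean s = 10⁻⁶.

```python
import math

# Hypothetical stray-light model: a fixed fraction s of the incident intensity
# reaches the detector without passing through the absorber (blank included).


def measured_absorbance(true_absorbance, stray_fraction):
    transmittance = 10.0 ** (-true_absorbance)
    return -math.log10((transmittance + stray_fraction) / (1.0 + stray_fraction))


for label, s in (("single monochromator (~3 AU)", 1e-3), ("double monochromator (~6 AU)", 1e-6)):
    readings = [round(measured_absorbance(a, s), 2) for a in (1, 2, 3, 4, 5)]
    print(label, readings)
```

In this model the reported absorbance can never exceed roughly −log10(s), and it already falls noticeably short of the true value about one absorbance unit below that ceiling.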

Deviations from the Beer–Lambert law

At sufficiently high concentrations, the absorption bands will saturate and show absorption flattening. The absorption peak appears to flatten because close to 100% of the light is already being absorbed. The concentration at which this occurs depends on the particular compound being measured. One way to test for this effect is to vary the path length of the measurement. In the Beer–Lambert law, varying concentration and path length has an equivalent effect: diluting a solution by a factor of 10 has the same effect as shortening the path length by a factor of 10. If cells of different path lengths are available, testing whether this relationship holds is one way to judge whether absorption flattening is occurring.
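The path-length check can be sketched as follows: for one solution measured in several cells, absorbance divided by path length should be constant if the Beer–Lambert law holds. The 5 % tolerance and the readings are hypothetical choices for illustration.

```python
# Path-length test for absorption flattening: under the Beer–Lambert law,
# absorbance divided by path length should be the same in every cell.


def absorbance_per_cm(measurements):
    """measurements: list of (path_length_cm, absorbance) pairs for one solution."""
    return [absorbance / path for path, absorbance in measurements]


def flattening_suspected(measurements, rel_tol=0.05):
    """Flag when A/L varies by more than rel_tol (hypothetical threshold) across cells."""
    values = absorbance_per_cm(measurements)
    spread = (max(values) - min(values)) / max(values)
    return spread > rel_tol


print(flattening_suspected([(0.1, 0.12), (1.0, 1.20)]))  # consistent with the law
print(flattening_suspected([(0.1, 0.25), (1.0, 1.60)]))  # long cell reads too low
```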

Solutions that are not homogeneous can show deviations from the Beer–Lambert law because of the phenomenon of absorption flattening. This can happen, for instance, where the absorbing substance is located within suspended particles. The deviations will be most noticeable under conditions of low concentration and high absorbance. A way to correct for this deviation has been described in the literature.

Some solutions, like copper(II) chloride in water, change visually at a certain concentration because of changed conditions around the coloured ion (the divalent copper ion). For copper(II) chloride this means a shift from blue to green, so monochromatic measurements would deviate from the Beer–Lambert law.

Measurement uncertainty sources

The above factors contribute to the measurement uncertainty of the results obtained with UV/Vis spectrophotometry. If UV/Vis spectrophotometry is used in quantitative chemical analysis, then the results are additionally affected by uncertainty sources arising from the nature of the compounds and/or solutions that are measured. These include spectral interferences caused by absorption band overlap, fading of the color of the absorbing species (caused by decomposition or reaction) and possible composition mismatch between the sample and the calibration solution.
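As one illustration of how individual contributions combine, the sketch below propagates uncertainty through c = A/(εL) to first order, assuming the inputs are independent so that their relative uncertainties add in quadrature. It covers only A, ε and L; the chemical sources listed above (band overlap, fading, matrix mismatch) would need their own estimates. All input values are hypothetical.

```python
import math

# First-order propagation for c = A / (epsilon * L), assuming independent inputs:
# relative standard uncertainties add in quadrature.


def concentration_with_uncertainty(a, u_a, epsilon, u_epsilon, path, u_path):
    c = a / (epsilon * path)
    rel = math.sqrt((u_a / a) ** 2 + (u_epsilon / epsilon) ** 2 + (u_path / path) ** 2)
    return c, c * rel


# Hypothetical inputs: 1 % on A, 2 % on epsilon, 0.5 % on the path length
c, u_c = concentration_with_uncertainty(0.500, 0.005, 6220.0, 124.4, 1.000, 0.005)
print(f"c = {c:.3e} M +/- {u_c:.1e} M")
```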

Ultraviolet–visible spectrophotometer

The instrument used in ultraviolet–visible spectroscopy is called a UV/Vis spectrophotometer. It measures the intensity of light after passing through a sample ($I$), and compares it to the intensity of light before it passes through the sample ($I_0$). The ratio $I/I_0$ is called the transmittance, and is usually expressed as a percentage (%T). The absorbance, $A$, is based on the transmittance:

$$A = -\log_{10}\left(\frac{\%T}{100\%}\right)$$
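A minimal sketch of the conversion in both directions; the function names are hypothetical. Each absorbance unit corresponds to a tenfold drop in transmitted light, so 10 %T is 1 AU and 1 %T is 2 AU.

```python
import math

# Converting between percent transmittance and absorbance: A = -log10(%T / 100).


def absorbance_from_percent_t(percent_t):
    return -math.log10(percent_t / 100.0)


def percent_t_from_absorbance(absorbance):
    return 100.0 * 10.0 ** (-absorbance)


for pt in (100.0, 50.0, 10.0, 1.0):
    print(f"{pt:6.1f} %T -> {absorbance_from_percent_t(pt):.3f} AU")
```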

The UV–visible spectrophotometer can also be configured to measure reflectance. In this case, the spectrophotometer measures the intensity of light reflected from a sample ($I$), and compares it to the intensity of light reflected from a reference material ($I_0$) (such as a white tile). The ratio $I/I_0$ is called the reflectance, and is usually expressed as a percentage (%R).

The basic parts of a spectrophotometer are a light source, a holder for the sample, a diffraction grating in a monochromator or a prism to separate the different wavelengths of light, and a detector. The radiation source is often a tungsten filament (300–2500 nm), a deuterium arc lamp, which is continuous over the ultraviolet region (190–400 nm), a xenon arc lamp, which is continuous from 160 to 2,000 nm, or, more recently, light-emitting diodes (LEDs) for the visible wavelengths. The detector is typically a photomultiplier tube, a photodiode, a photodiode array or a charge-coupled device (CCD). Single photodiode detectors and photomultiplier tubes are used with scanning monochromators, which filter the light so that only light of a single wavelength reaches the detector at one time. The scanning monochromator moves the diffraction grating to step through each wavelength so that its intensity may be measured as a function of wavelength. Fixed monochromators are used with CCDs and photodiode arrays. As both of these devices consist of many detectors grouped into one- or two-dimensional arrays, they are able to collect light of different wavelengths on different pixels or groups of pixels simultaneously.

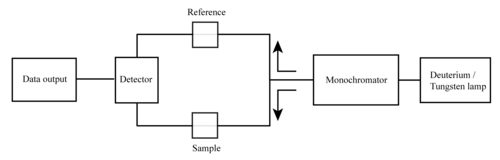

Simplified schematic of a double beam UV–visible spectrophotometer

A spectrophotometer can be either single-beam or double-beam. In a single-beam instrument (such as the Spectronic 20), all of the light passes through the sample cell. $I_0$ must be measured by removing the sample. This was the earliest design and is still in common use in both teaching and industrial labs.


In a double-beam instrument, the light is split into two beams before it reaches the sample. One beam is used as the reference; the other beam passes through the sample. The reference beam intensity is taken as 100% transmission (or 0 absorbance), and the measurement displayed is the ratio of the two beam intensities. Some double-beam instruments have two detectors (photodiodes), and the sample and reference beam are measured at the same time. In other instruments, the two beams pass through a beam chopper, which blocks one beam at a time. The detector alternates between measuring the sample beam and the reference beam in synchronism with the chopper. There may also be one or more dark intervals in the chopper cycle. In this case, the measured beam intensities may be corrected by subtracting the intensity measured in the dark interval before the ratio is taken.
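The dark correction described above amounts to subtracting the dark-interval signal from both beams before forming the ratio. A minimal sketch with hypothetical detector readings and helper names:

```python
import math

# Double-beam ratio with dark correction: subtract the dark-interval signal
# from both the sample and reference beams before taking the ratio.


def dark_corrected_transmittance(sample_signal, reference_signal, dark_signal):
    return (sample_signal - dark_signal) / (reference_signal - dark_signal)


def absorbance_from_transmittance(t):
    return -math.log10(t)


t = dark_corrected_transmittance(sample_signal=510.0, reference_signal=1010.0, dark_signal=10.0)
print(t, round(absorbance_from_transmittance(t), 4))  # 0.5 0.301
```

Without the correction the same readings would give a ratio of about 0.505, so an uncorrected dark offset biases the absorbance low.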

In a single-beam instrument, the cuvette containing only a solvent has to be measured first. Mettler Toledo developed a single-beam array spectrophotometer that allows fast and accurate measurements over the UV/Vis range. The light source consists of a xenon flash lamp for the ultraviolet (UV) as well as for the visible (VIS) and near-infrared wavelength regions, covering a spectral range from 190 up to 1100 nm. The lamp flashes are focused on a glass fiber, which guides the beam of light onto a cuvette containing the sample solution. The beam passes through the sample and specific wavelengths are absorbed by the sample components. The remaining light is collected after the cuvette by a glass fiber and guided into a spectrograph. The spectrograph consists of a diffraction grating that separates the light into its different wavelengths and a CCD sensor that records the data. The whole spectrum is thus measured simultaneously, allowing for fast recording.

Samples for UV/Vis spectrophotometry are most often liquids, although the absorbance of gases and even of solids can also be measured. Samples are typically placed in a transparent cell, known as a cuvette. Cuvettes are typically rectangular in shape, commonly with an internal width of 1 cm. (This width becomes the path length, $L$, in the Beer–Lambert law.) Test tubes can also be used as cuvettes in some instruments. The type of sample container used must allow radiation to pass over the spectral region of interest. The most widely applicable cuvettes are made of high-quality fused silica or quartz glass because these are transparent throughout the UV, visible and near-infrared regions. Glass and plastic cuvettes are also common, although glass and most plastics absorb in the UV, which limits their usefulness to visible wavelengths.


Specialized instruments have also been made. These include attaching spectrophotometers to telescopes to measure the spectra of astronomical features. UV–visible microspectrophotometers consist of a UV–visible microscope integrated with a UV–visible spectrophotometer.

A complete spectrum of the absorption at all wavelengths of interest can often be produced directly by a more sophisticated spectrophotometer. In simpler instruments the absorption is determined one wavelength at a time and then compiled into a spectrum by the operator. By removing the concentration dependence, the extinction coefficient (ε) can be determined as a function of wavelength.

Microspectrophotometry

UV–visible spectroscopy of microscopic samples is done by integrating an optical microscope with UV–visible optics, white light sources, a monochromator, and a sensitive detector such as a charge-coupled device (CCD) or photomultiplier tube (PMT). As only a single optical path is available, these are single-beam instruments. Modern instruments are capable of measuring UV–visible spectra in both reflectance and transmission of micron-scale sampling areas. The advantage of such instruments is that they can measure microscopic samples and can also measure the spectra of larger samples with high spatial resolution. As such, they are used in the forensic laboratory to analyze the dyes and pigments in individual textile fibers, microscopic paint chips and the color of glass fragments. They are also used in materials science and biological research and for determining the energy content of coal and petroleum source rock by measuring the vitrinite reflectance. Microspectrophotometers are used in the semiconductor and micro-optics industries for monitoring the thickness of thin films after they have been deposited. In the semiconductor industry, they are used because the critical dimensions of circuitry are microscopic. A typical test of a semiconductor wafer would entail the acquisition of spectra from many points on a patterned or unpatterned wafer. The thickness of the deposited films may be calculated from the interference pattern of the spectra. In addition, ultraviolet–visible spectrophotometry can be used to determine the thickness, along with the refractive index and extinction coefficient, of thin films. A map of the film thickness across the entire wafer can then be generated and used for quality control purposes.
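One simple way to get a thickness from the interference pattern is to use two adjacent extrema of the same type (two maxima or two minima), which are one interference order apart: 2nd(1/λ₂ − 1/λ₁) = 1. The sketch assumes normal incidence, a non-absorbing film, and a refractive index n that is constant between the two wavelengths; the wavelengths and index below are hypothetical.

```python
# Film thickness from two adjacent reflectance extrema of the same type.
# Assumes normal incidence, a non-absorbing film, and a refractive index n that
# is constant between the two wavelengths:
#   2*n*d*(1/lambda_short - 1/lambda_long) = 1
#   => d = lambda_long*lambda_short / (2*n*(lambda_long - lambda_short))


def thickness_nm(lambda_long_nm, lambda_short_nm, n_film):
    return lambda_long_nm * lambda_short_nm / (2.0 * n_film * (lambda_long_nm - lambda_short_nm))


# Hypothetical adjacent maxima for a film with n = 1.46 (roughly that of silica)
print(round(thickness_nm(730.0, 486.67, 1.46), 1))
```

Real films are dispersive and may absorb, which is why production tools fit the whole spectrum with a dispersion model instead of using a single pair of extrema.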

Additional applications

UV/Vis can be applied to characterize the rate of a chemical reaction. Illustrative is the conversion of the yellow-orange and blue isomers of mercury dithizonate. This method of analysis relies on the fact that absorbance is linearly proportional to concentration. The same approach allows determination of equilibria between chromophores.
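As a generic illustration (not the specific mercury dithizonate procedure), the sketch below extracts a rate constant from absorbance readings, assuming first-order (or pseudo-first-order) kinetics, absorbance linear in concentration, and a known final absorbance A∞. Then ln(Aₜ − A∞) falls linearly with time and the rate constant is minus the slope. The data are synthetic.

```python
import math

# First-order rate constant from absorbance vs time:
# ln(A_t - A_inf) = ln(A_0 - A_inf) - k*t, so k is minus the fitted slope.


def first_order_k(times, absorbances, a_inf):
    ys = [math.log(a - a_inf) for a in absorbances]
    n = len(times)
    mean_t = sum(times) / n
    mean_y = sum(ys) / n
    slope = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, ys)) / sum(
        (t - mean_t) ** 2 for t in times
    )
    return -slope


# Synthetic data generated with k = 0.05 s^-1, A_0 = 1.0, A_inf = 0.2
times = [0.0, 10.0, 20.0, 30.0, 40.0, 50.0]
absorbances = [0.2 + 0.8 * math.exp(-0.05 * t) for t in times]
print(round(first_order_k(times, absorbances, a_inf=0.2), 4))  # 0.05
```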

From the spectrum of burning gases, it is possible to determine the chemical composition of a fuel, the temperature of the gases, and the air–fuel ratio.