In particle physics, every type of particle of "ordinary" matter (as opposed to antimatter) is associated with an antiparticle with the same mass but with opposite physical charges (such as electric charge). For example, the antiparticle of the electron is the positron (also known as an antielectron). While the electron has a negative electric charge, the positron has a positive electric charge, and is produced naturally in certain types of radioactive decay. The opposite is also true: the antiparticle of the positron is the electron.

Some particles, such as the photon, are their own antiparticle. Otherwise, for each pair of antiparticle partners, one is designated as the normal particle (the one that occurs in the matter encountered in everyday life). The other (usually given the prefix "anti-") is designated the antiparticle.

Particle–antiparticle pairs can annihilate each other, producing photons; since the charges of the particle and antiparticle are opposite, total charge is conserved. For example, the positrons produced in natural radioactive decay quickly annihilate with electrons, producing pairs of gamma rays, a process exploited in positron emission tomography.
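As a rough numerical illustration of the annihilation described above, the following Python sketch computes the energy of each gamma ray when an electron–positron pair annihilates into two photons. It assumes the pair is at rest (kinetic and binding energies are neglected) and uses the rounded rest energy of 0.511 MeV; the function name `two_photon_energy_mev` is made up for this example.

```python
ELECTRON_REST_ENERGY_MEV = 0.511  # rounded rest energy of the electron and of the positron

def two_photon_energy_mev(rest_energy_mev: float) -> float:
    """Energy of each photon when a particle-antiparticle pair annihilates at rest.

    Assumption: the pair has no kinetic energy, so the total energy 2*m*c^2
    is shared equally by two back-to-back photons.
    """
    total_energy = 2.0 * rest_energy_mev
    return total_energy / 2.0

photon_energy = two_photon_energy_mev(ELECTRON_REST_ENERGY_MEV)

# Charges in units of e: electron (-1) plus positron (+1) before, two neutral photons after.
charge_before = (-1) + (+1)
charge_after = 0 + 0

print(f"each photon: {photon_energy:.3f} MeV")
print(f"charge before = {charge_before}, charge after = {charge_after}")
```

Under these assumptions each photon carries the rest energy of one electron, and the net charge is zero before and after.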

The laws of nature are very nearly symmetrical with respect to particles and antiparticles. For example, an antiproton and a positron can form an antihydrogen atom, which is believed to have the same properties as a hydrogen atom. This leads to the question of why the formation of matter after the Big Bang resulted in a universe consisting almost entirely of matter, rather than a half-and-half mixture of matter and antimatter. The discovery of charge–parity (CP) violation helped to shed light on this problem by showing that this symmetry, originally thought to be perfect, is only approximate. The question nevertheless remains unanswered, and the explanations proposed so far are not fully satisfactory.

Because charge is conserved, an antiparticle cannot be created without either destroying another particle of the same charge (as happens, for instance, when antiparticles are produced naturally via beta decay or the collision of cosmic rays with Earth's atmosphere) or simultaneously creating both a particle and its antiparticle (pair production), which can occur in particle accelerators such as the Large Hadron Collider at CERN.
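The charge bookkeeping in this argument can be sketched in a few lines of Python. The particle labels, the small charge table and the helper names below are illustrative choices, not a standard API; the beta-plus decay shown is one example of an antiparticle produced at the expense of another particle's charge.

```python
# Electric charges in units of e for a small, illustrative set of particles.
CHARGE = {
    "e-": -1, "e+": +1,
    "p": +1, "n": 0,
    "nu_e": 0, "gamma": 0,
}

def total_charge(particles):
    """Sum of the electric charges of a list of particle labels."""
    return sum(CHARGE[name] for name in particles)

def conserves_charge(initial, final):
    """True if the reaction initial -> final leaves the total charge unchanged."""
    return total_charge(initial) == total_charge(final)

# Beta-plus decay of a proton bound in a nucleus: the positron takes over the proton's charge.
beta_plus_ok = conserves_charge(["p"], ["n", "e+", "nu_e"])

# Pair production: a particle and its antiparticle appear together.
# (A nearby nucleus that absorbs recoil is a spectator and is omitted here.)
pair_ok = conserves_charge(["gamma"], ["e-", "e+"])

# A lone positron appearing from a neutral photon would violate charge conservation.
lone_positron_ok = conserves_charge(["gamma"], ["e+"])

print(beta_plus_ok, pair_ok, lone_positron_ok)
```

This check covers electric charge only; a real reaction must also respect the other conservation laws (energy, momentum, lepton number and so on).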

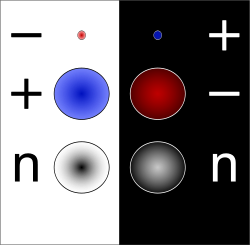

Particles and their antiparticles have equal and opposite charges, so that an uncharged particle also gives rise to an uncharged antiparticle. In many cases, the antiparticle and the particle coincide: pairs of photons, Z⁰ bosons, π⁰ mesons, and hypothetical gravitons and some hypothetical WIMPs all self-annihilate. However, electrically neutral particles need not be identical to their antiparticles: for example, the neutron and antineutron are distinct.

History

Experiment

In 1932, soon after the prediction of positrons by Paul Dirac, Carl D. Anderson found that cosmic-ray collisions produced these particles in a cloud chamber – a particle detector in which moving electrons (or positrons) leave behind trails as they move through the gas. The charge-to-mass ratio of a particle can be measured by observing the radius of curvature of its cloud-chamber track in a magnetic field. Because of the direction in which their paths curled, positrons were at first mistaken for electrons travelling in the opposite direction. A positron in a cloud chamber follows the same kind of helical path as an electron but rotates in the opposite sense about the magnetic field direction, because its charge-to-mass ratio has the same magnitude but the opposite sign.
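The geometry behind this ambiguity can be sketched numerically. The Python example below assumes a uniform magnetic field and motion perpendicular to it, so that the track radius is r = p/(|q|B). The function names, the `direction` flag (+1 or −1 for the two possible directions of travel along the track) and the numerical values are hypothetical choices for illustration.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def track_radius_m(p_perp_mev_c: float, charge_e: float, b_tesla: float) -> float:
    """Radius of curvature r = p / (|q| B) for momentum transverse to the field.

    With p in MeV/c and q in units of e, the elementary charge cancels and
    r = p * 1e6 / (c * |q| * B). Valid for charged particles in a nonzero field only.
    """
    return p_perp_mev_c * 1e6 / (SPEED_OF_LIGHT_M_S * abs(charge_e) * abs(b_tesla))

def apparent_curl(charge_e: float, direction: int, b_tesla: float) -> int:
    """Sign (+1 or -1) telling to which side the photographed track bends.

    The magnetic force is proportional to q * v x B, so reversing both the
    charge and the direction of travel leaves the curve unchanged.
    """
    product = charge_e * direction * b_tesla
    return (product > 0) - (product < 0)

# Hypothetical track: 30 MeV/c of transverse momentum in a 1 T field.
radius_positron = track_radius_m(30.0, +1, 1.0)
radius_electron = track_radius_m(30.0, -1, 1.0)

positron_up = apparent_curl(+1, +1, 1.0)
electron_up = apparent_curl(-1, +1, 1.0)
electron_down = apparent_curl(-1, -1, 1.0)

print(f"radius: {radius_positron:.3f} m for either sign of charge")
print("positron vs electron, same direction of travel:", positron_up, electron_up)
print("positron vs electron travelling the other way: ", positron_up, electron_down)
```

In this simplified picture the radius fixes only the magnitude of the momentum-to-charge ratio, while the side to which the track bends cannot by itself distinguish a positron from an electron moving the opposite way; the direction of travel has to be established independently.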

The antiproton was found by Emilio Segrè and Owen Chamberlain in 1955 at the University of California, Berkeley, and the antineutron was discovered there in 1956 by Bruce Cork and colleagues. Since then, the antiparticles of many other subatomic particles have been created in particle accelerator experiments. In recent years, complete atoms of antimatter have been assembled out of antiprotons and positrons, collected in electromagnetic traps.

Dirac hole theory

... the development of quantum field theory made the interpretation of antiparticles as holes unnecessary, even though it lingers on in many textbooks.

Solutions of the Dirac equation contain negative energy quantum states. As a result, an electron could always radiate energy and fall into a negative energy state. Even worse, it could keep radiating infinite amounts of energy because there were infinitely many negative energy states available. To prevent this unphysical situation from happening, Dirac proposed that a "sea" of negative-energy electrons fills the universe, already occupying all of the lower-energy states so that, due to the Pauli exclusion principle, no other electron could fall into them. Sometimes, however, one of these negative-energy particles could be lifted out of this Dirac sea to become a positive-energy particle. But, when lifted out, it would leave behind a hole in the sea that would act exactly like a positive-energy electron with a reversed charge. These holes were interpreted as "negative-energy electrons" by Paul Dirac and mistakenly identified with protons in his 1930 paper A Theory of Electrons and Protons. However, these "negative-energy electrons" turned out to be positrons, and not protons.

This picture implied an infinite negative charge for the universe – a problem of which Dirac was aware. Dirac tried to argue that we would perceive this as the normal state of zero charge. Another difficulty was the difference in masses of the electron and the proton. Dirac tried to argue that this was due to the electromagnetic interactions with the sea, until Hermann Weyl proved that hole theory was completely symmetric between negative and positive charges. Dirac also predicted a reaction e⁻ + p⁺ → γ + γ, where an electron and a proton annihilate to give two photons. Robert Oppenheimer and Igor Tamm, however, proved that this would cause ordinary matter to disappear too fast. A year later, in 1931, Dirac modified his theory and postulated the positron, a new particle of the same mass as the electron. The discovery of this particle the next year removed the last two objections to his theory.

Within Dirac's theory, the problem of infinite charge of the universe remains. Some bosons also have antiparticles, but since bosons do not obey the Pauli exclusion principle (only fermions do), hole theory does not work for them. A unified interpretation of antiparticles is now available in quantum field theory, which solves both these problems by describing antimatter as negative energy states of the same underlying matter field, i.e. particles moving backwards in time.

Elementary antiparticles

| Generation | Name | Symbol | Spin | Charge (e) | Mass (MeV/c²) | Observed |
|---|---|---|---|---|---|---|
| 1 | up antiquark | ū | 1⁄2 | −2⁄3 | 2.2 +0.6/−0.4 | Yes |
| 1 | down antiquark | d̄ | 1⁄2 | +1⁄3 | 4.6 +0.5/−0.4 | Yes |
| 2 | charm antiquark | c̄ | 1⁄2 | −2⁄3 | 1280±30 | Yes |
| 2 | strange antiquark | s̄ | 1⁄2 | +1⁄3 | 96 +8/−4 | Yes |
| 3 | top antiquark | t̄ | 1⁄2 | −2⁄3 | 173100±600 | Yes |
| 3 | bottom antiquark | b̄ | 1⁄2 | +1⁄3 | 4180 +40/−30 | Yes |

| Generation | Name | Symbol | Spin | Charge (e) | Mass (MeV/c²) | Observed |
|---|---|---|---|---|---|---|
| 1 | positron | e⁺ | 1⁄2 | +1 | 0.511 | Yes |
| 1 | electron antineutrino | ν̄ₑ | 1⁄2 | 0 | < 0.0000022 | Yes |
| 2 | antimuon | μ⁺ | 1⁄2 | +1 | 105.7 | Yes |
| 2 | muon antineutrino | ν̄_μ | 1⁄2 | 0 | < 0.170 | Yes |
| 3 | antitau | τ⁺ | 1⁄2 | +1 | 1776.86±0.12 | Yes |
| 3 | tau antineutrino | ν̄_τ | 1⁄2 | 0 | < 15.5 | Yes |

| Name | Symbol | Spin | Charge (e) | Mass (GeV/c²) | Interaction mediated | Observed |
|---|---|---|---|---|---|---|
| anti W boson | W⁺ | 1 | +1 | 80.385±0.015 | weak interaction | Yes |

Composite antiparticles

| Class | Subclass | Name | Symbol | Spin | Charge (e) | Mass (MeV/c²) | Mass (kg) | Observed |
|---|---|---|---|---|---|---|---|---|
| Antihadron | Antibaryon | Antiproton | p̄ | 1⁄2 | −1 | 938.27208943(29) | 1.67262192595(52)×10⁻²⁷ | Yes |
| Antihadron | Antibaryon | Antineutron | n̄ | 1⁄2 | 0 | 939.56542194(48) | ? | Yes |
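The two mass columns are related by m = E/c², with 1 eV equal to 1.602176634×10⁻¹⁹ J. A minimal Python sketch of that unit conversion follows; it uses the exact SI values of the elementary charge and the speed of light, and the function name `mev_to_kg` is an illustrative choice. The converted antineutron value is a back-of-the-envelope figure, not a quoted measurement.

```python
ELEMENTARY_CHARGE_C = 1.602176634e-19  # exact SI value; 1 eV corresponds to this many joules
SPEED_OF_LIGHT_M_S = 299_792_458.0     # exact SI value

def mev_to_kg(mass_mev_c2: float) -> float:
    """Convert a mass given in MeV/c^2 to kilograms via m = E / c^2."""
    energy_joule = mass_mev_c2 * 1e6 * ELEMENTARY_CHARGE_C
    return energy_joule / SPEED_OF_LIGHT_M_S**2

# Central values from the table above (uncertainties ignored in this sketch).
antiproton_kg = mev_to_kg(938.27208943)
antineutron_kg = mev_to_kg(939.56542194)

print(f"antiproton:  {antiproton_kg:.6e} kg")
print(f"antineutron: {antineutron_kg:.6e} kg")
```

Applied to the antiproton row, the conversion agrees with the tabulated kilogram value to the precision of a double-precision float, which serves as a sanity check on the formula.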

Particle–antiparticle annihilation

If a particle and antiparticle are in the appropriate quantum states, then they can annihilate each other and produce other particles. Reactions such as e⁻ + e⁺ → γγ (the two-photon annihilation of an electron-positron pair) are an example. The single-photon annihilation of an electron-positron pair, e⁻ + e⁺ → γ, cannot occur in free space because it is impossible to conserve energy and momentum together in this process. However, in the Coulomb field of a nucleus the translational invariance is broken and single-photon annihilation may occur. The reverse reaction (in free space, without an atomic nucleus) is also impossible for this reason. In quantum field theory, this process is allowed only as an intermediate quantum state for times short enough that the violation of energy conservation can be accommodated by the uncertainty principle. This opens the way for virtual pair production or annihilation in which a one-particle quantum state may fluctuate into a two-particle state and back. These processes are important in the vacuum state and renormalization of a quantum field theory. It also opens the way for neutral particle mixing through processes such as the one pictured here, which is a complicated example of mass renormalization.
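The kinematic obstruction to single-photon annihilation in free space can be made explicit with a short worked argument in the centre-of-momentum frame of the pair. The symbols m (electron mass), **p** (electron momentum in that frame), and E_γ and **k** (energy and momentum of the would-be photon) are introduced here only for illustration.

```latex
% Centre-of-momentum frame: the electron has momentum +p, the positron -p.
\begin{align*}
  \text{before:}\quad
    & E_{\text{tot}} = 2\sqrt{m^{2}c^{4} + |\mathbf{p}|^{2}c^{2}} \;\ge\; 2mc^{2} > 0,
      \qquad
      \mathbf{P}_{\text{tot}} = \mathbf{p} + (-\mathbf{p}) = \mathbf{0} \\
  \text{after (one photon):}\quad
    & E_{\gamma} = |\mathbf{k}|\,c \\
  \text{momentum conservation:}\quad
    & \mathbf{k} = \mathbf{P}_{\text{tot}} = \mathbf{0}
      \;\Rightarrow\; E_{\gamma} = 0 \\
  \text{energy conservation:}\quad
    & E_{\gamma} = E_{\text{tot}} \;\ge\; 2mc^{2} > 0
      \quad\text{(contradiction)}
\end{align*}
```

With two photons the contradiction disappears, since momenta **k**₁ = −**k**₂ sum to zero while the energies add. A nearby nucleus can likewise absorb the recoil momentum, which is why the single-photon process becomes possible in its Coulomb field.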

Properties

Quantum states of a particle and an antiparticle are interchanged by the combined application of charge conjugation $C$, parity $P$ and time reversal $T$. $C$ and $P$ are linear, unitary operators, $T$ is antilinear and antiunitary, $\langle \Psi | T\,\Phi\rangle = \langle \Phi | T^{-1}\,\Psi\rangle$. If $|p,\sigma,n\rangle$ denotes the quantum state of a particle $n$ with momentum $p$ and spin $J$ whose component in the z-direction is $\sigma$, then one has

$$CPT\,|p,\sigma,n\rangle = (-1)^{J-\sigma}\,|p,-\sigma,n^{c}\rangle,$$

where $n^{c}$ denotes the charge conjugate state, that is, the antiparticle. In particular a massive particle and its antiparticle transform under the same irreducible representation of the Poincaré group which means the antiparticle has the same mass and the same spin.

If $C$, $P$ and $T$ can be defined separately on the particles and antiparticles, then

$$T\,|p,\sigma,n\rangle \propto |-p,-\sigma,n\rangle,$$

$$CP\,|p,\sigma,n\rangle \propto |-p,\sigma,n^{c}\rangle,$$

$$C\,|p,\sigma,n\rangle \propto |p,\sigma,n^{c}\rangle,$$

where the proportionality sign indicates that there might be a phase on the right hand side.

As $CPT$ anticommutes with the charges, $CPT\,Q = -Q\,CPT$, particle and antiparticle have opposite electric charges $q$ and $-q$.
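The step from anticommutation to opposite charges can be written out in one line. In the sketch below, Θ is shorthand (assumed here) for the combined CPT operation, Q is a charge operator, and |ψ⟩ is a state with charge eigenvalue q; because q is real, the antilinearity of Θ does not change the result.

```latex
% Assumed notation: \Theta = CPT, Q a charge operator with real eigenvalue q.
\begin{align*}
  Q\,|\psi\rangle &= q\,|\psi\rangle,
  \qquad
  \Theta\,Q = -\,Q\,\Theta \\
  Q\,\bigl(\Theta\,|\psi\rangle\bigr)
    &= -\,\Theta\,Q\,|\psi\rangle
     = -\,\Theta\,\bigl(q\,|\psi\rangle\bigr)
     = -\,q\,\bigl(\Theta\,|\psi\rangle\bigr)
\end{align*}
```

The transformed state Θ|ψ⟩ is therefore again a charge eigenstate, with eigenvalue −q.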

Quantum field theory

- This section draws upon the ideas, language and notation of canonical quantization of a quantum field theory.

One may try to quantize an electron field without mixing the annihilation and creation operators by writing

$$\psi(x) = \sum_{k} u_k(x)\, a_k\, e^{-iE(k)t},$$

where we use the symbol $k$ to denote the quantum numbers $p$ and $\sigma$ of the previous section and the sign of the energy, $E(k)$, and $a_k$ denotes the corresponding annihilation operators. Since we are dealing with fermions, the operators must satisfy canonical anticommutation relations. However, if one now writes down the Hamiltonian

$$H = \sum_{k} E(k)\, a_k^{\dagger} a_k,$$

then one sees immediately that the expectation value of $H$ need not be positive. This is because $E(k)$ can have any sign whatsoever, and the combination of creation and annihilation operators has expectation value 1 or 0.

So one has to introduce the charge conjugate antiparticle field, with its own creation and annihilation operators satisfying the relations

$$b_{k'} = a_k^{\dagger}, \qquad b_{k'}^{\dagger} = a_k,$$

where $k'$ has the same $p$, and opposite $\sigma$ and sign of the energy. Then one can rewrite the field in the form

$$\psi(x) = \sum_{k_+} u_k(x)\, a_k\, e^{-iE(k)t} + \sum_{k_-} u_k(x)\, b_{k'}^{\dagger}\, e^{-iE(k)t},$$

where the first sum is over positive energy states and the second over those of negative energy. The energy becomes

$$H = \sum_{k_+} E(k)\, a_k^{\dagger} a_k + \sum_{k_-} |E(k)|\, b_{k'}^{\dagger} b_{k'} + E_0,$$

where $E_0$ is an infinite negative constant. The vacuum state is defined as the state with no particle or antiparticle, i.e., $a_k|0\rangle = 0$ and $b_k|0\rangle = 0$. Then the energy of the vacuum is exactly $E_0$. Since all energies are measured relative to the vacuum, $H$ is positive definite. Analysis of the properties of $a_k$ and $b_k$ shows that one is the annihilation operator for particles and the other for antiparticles. This is the case of a fermion.
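The operator identity behind this rewriting can be checked for a single negative-energy mode. The Python sketch below represents one fermionic mode by 2×2 matrices (a standard textbook representation, chosen here for illustration), defines the antiparticle operator as b = a†, and verifies that E·a†a equals |E|·b†b + E when E is negative. The matrix helpers and the value E = −2 are arbitrary choices made for the example.

```python
def matmul(x, y):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def add(x, y):
    """Element-wise sum of two 2x2 matrices."""
    return [[x[i][j] + y[i][j] for j in range(2)] for i in range(2)]

def scale(c, x):
    """Multiply a 2x2 matrix by the number c."""
    return [[c * x[i][j] for j in range(2)] for i in range(2)]

IDENTITY = [[1, 0], [0, 1]]

# Basis ordering: index 0 = mode empty, index 1 = mode occupied.
a = [[0, 1], [0, 0]]      # annihilation operator: occupied -> empty
a_dag = [[0, 0], [1, 0]]  # creation operator: empty -> occupied

# Canonical anticommutation relation {a, a_dag} = 1.
anticommutator = add(matmul(a, a_dag), matmul(a_dag, a))

energy = -2  # a negative mode energy in arbitrary units (illustrative value)

# Original form: E * a_dag a, which is not bounded below by zero.
h_original = scale(energy, matmul(a_dag, a))

# Antiparticle operators for this mode: b = a_dag, b_dag = a.
b, b_dag = a_dag, a

# Rewritten form: |E| * b_dag b plus the constant E (this mode's share of E0).
h_rewritten = add(scale(abs(energy), matmul(b_dag, b)), scale(energy, IDENTITY))

print("anticommutator is identity:", anticommutator == IDENTITY)
print("two forms of H agree:      ", h_original == h_rewritten)
```

In this toy model the state annihilated by b is the one in which the negative-energy mode is occupied. It has energy E, and creating an antiparticle on top of it costs the positive energy |E|; summing the constant over all negative-energy modes gives the infinite negative E0 of the text.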

This approach is due to Vladimir Fock, Wendell Furry and Robert Oppenheimer. If one quantizes a real scalar field, then one finds that there is only one kind of annihilation operator; therefore, real scalar fields describe neutral bosons. Since complex scalar fields admit two different kinds of annihilation operators, which are related by conjugation, such fields describe charged bosons.

Feynman–Stückelberg interpretation

By considering the propagation of the negative energy modes of the electron field backward in time, Ernst Stückelberg reached a pictorial understanding of the fact that the particle and antiparticle have equal mass m and spin J but opposite charges q. This allowed him to rewrite perturbation theory precisely in the form of diagrams. Richard Feynman later gave an independent systematic derivation of these diagrams from a particle formalism, and they are now called Feynman diagrams. Each line of a diagram represents a particle propagating either backward or forward in time. In Feynman diagrams, antiparticles are shown travelling backward in time relative to normal matter, and vice versa. This technique is the most widespread method of computing amplitudes in quantum field theory today.

Since this picture was first developed by Stückelberg, and acquired its modern form in Feynman's work, it is called the Feynman–Stückelberg interpretation of antiparticles to honor both scientists.