From Wikipedia, the free encyclopedia

Planetary habitability is the measure of a planet's or a natural satellite's potential to develop and sustain life. Life may develop directly on a planet or satellite or be transferred to it from another body, a theoretical process known as panspermia. As the existence of life beyond Earth is unknown, planetary habitability is largely an extrapolation of conditions on Earth and the characteristics of the Sun and Solar System which appear favourable to life's flourishing—in particular those factors that have sustained complex, multicellular organisms and not just simpler, unicellular creatures. Research and theory in this regard is a component of planetary science and the emerging discipline of astrobiology.

An absolute requirement for life is an energy source, and the notion of planetary habitability implies that many other geophysical, geochemical, and astrophysical criteria must be met before an astronomical body can support life. In its astrobiology roadmap, NASA has defined the principal habitability criteria as "extended regions of liquid water,[1] conditions favourable for the assembly of complex organic molecules, and energy sources to sustain metabolism."[2]

In determining the habitability potential of a body, studies focus on its bulk composition, orbital properties, atmosphere, and potential chemical interactions. Stellar characteristics of importance include mass and luminosity, stable variability, and high metallicity. Rocky, terrestrial-type planets and moons with the potential for Earth-like chemistry are a primary focus of astrobiological research, although more speculative habitability theories occasionally examine alternative biochemistries and other types of astronomical bodies.

The idea that planets beyond Earth might host life is an ancient one, though historically it was framed by philosophy as much as physical science.[a] The late 20th century saw two breakthroughs in the field. The observation and robotic spacecraft exploration of other planets and moons within the Solar System have provided critical information on defining habitability criteria and allowed for substantial geophysical comparisons between the Earth and other bodies. The discovery of extrasolar planets, beginning in the early 1990s[3][4] and accelerating thereafter, has provided further information for the study of possible extraterrestrial life. These findings confirm that the Sun is not unique among stars in hosting planets and expand the habitability research horizon beyond the Solar System.

The chemistry of life may have begun shortly after the Big Bang, 13.8 billion years ago, during a habitable epoch when the Universe was only 10–17 million years old.[5][6] According to the panspermia hypothesis, microscopic life—distributed by meteoroids, asteroids and other small Solar System bodies—may exist throughout the universe.[7] Nonetheless, Earth is the only place in the universe known to harbor life.[8][9] Estimates of habitable zones around other stars,[10][11] along with the discovery of hundreds of extrasolar planets and new insights into the extreme habitats here on Earth, suggest that there may be many more habitable places in the universe than considered possible until very recently.[12] On 4 November 2013, astronomers reported, based on Kepler space mission data, that there could be as many as 40 billion Earth-sized planets orbiting in the habitable zones of Sun-like stars and red dwarfs within the Milky Way.[13][14] 11 billion of these estimated planets may be orbiting Sun-like stars.[15] The nearest such planet may be 12 light-years away, according to the scientists.[13][14]

Suitable star systems

An understanding of planetary habitability begins with stars. While bodies that are generally Earth-like may be plentiful, it is just as important that their larger system be agreeable to life. Under the auspices of SETI's Project Phoenix, scientists Margaret Turnbull and Jill Tarter developed the "HabCat" (or Catalogue of Habitable Stellar Systems) in 2002. The catalogue was formed by winnowing the nearly 120,000 stars of the larger Hipparcos Catalogue into a core group of 17,000 "HabStars", and the selection criteria that were used provide a good starting point for understanding which astrophysical factors are necessary to habitable planets.[16] According to research published in August 2015, very large galaxies may be more favorable to the creation and development of habitable planets than smaller galaxies, like the Milky Way galaxy.[17]

Spectral class

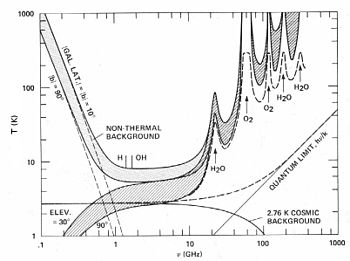

The spectral class of a star indicates its photospheric temperature, which (for main-sequence stars) correlates to overall mass. The appropriate spectral range for "HabStars" is considered to be "early F" or "G", to "mid-K". This corresponds to temperatures of a little more than 7,000 K down to a little more than 4,000 K (6,700 °C to 3,700 °C); the Sun, a G2 star at 5,777 K, is well within these bounds. "Middle-class" stars of this sort have a number of characteristics considered important to planetary habitability:

- They live at least a few billion years, allowing life a chance to evolve. More luminous main-sequence stars of the "O", "B", and "A" classes usually live less than a billion years and in exceptional cases less than 10 million.[18][b]

- They emit enough high-frequency ultraviolet radiation to trigger important atmospheric dynamics such as ozone formation, but not so much that ionisation destroys incipient life.[19]

- Liquid water may exist on the surface of planets orbiting them at a distance that does not induce tidal locking. K-type stars may be able to support life for long periods, far longer than the Sun.[20]
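The temperature window quoted for "HabStars" can be checked with a short script. This is only a minimal sketch: the 7,000 K and 4,000 K limits are rounded from the "a little more than" figures given above, and the function names are illustrative rather than anything defined by HabCat.

```python
# Approximate "HabStar" photospheric temperature window, rounded from the text.
HABSTAR_MAX_K = 7000.0  # "early F" upper bound (assumed round value)
HABSTAR_MIN_K = 4000.0  # "mid-K" lower bound (assumed round value)


def kelvin_to_celsius(temp_k: float) -> float:
    """Convert a temperature from kelvins to degrees Celsius."""
    return temp_k - 273.15


def in_habstar_range(temp_k: float) -> bool:
    """Return True if the temperature lies inside the assumed window."""
    return HABSTAR_MIN_K <= temp_k <= HABSTAR_MAX_K


if __name__ == "__main__":
    # The 9,000 K entry is a hypothetical hot star used only for contrast.
    for name, temp_k in [("Sun (G2)", 5777.0), ("hypothetical hot star", 9000.0)]:
        print(name, round(kelvin_to_celsius(temp_k)), in_habstar_range(temp_k))
```

The Sun's 5,777 K falls inside the window, while the hotter example falls outside it.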

A recent study suggests that cooler stars that emit more light in the infrared and near infrared may actually host warmer planets with less ice and a lower incidence of snowball states. These wavelengths are absorbed by the planets' ice and greenhouse gases, so the planets remain warmer.[25][26]

A stable habitable zone

The habitable zone (HZ, categorized by the Planetary Habitability Index) is a shell-shaped region of space surrounding a star in which a planet could maintain liquid water on its surface. After an energy source, liquid water is considered the most important ingredient for life, considering how integral it is to all life systems on Earth. This may reflect the known dependence of life on water; however, if life is discovered in the absence of water, the definition of an HZ may have to be greatly expanded.

A "stable" HZ implies two factors. First, the range of an HZ should not vary greatly over time. All stars increase in luminosity as they age, and a given HZ thus migrates outwards, but if this happens too quickly (for example, with a super-massive star) planets may only have a brief window inside the HZ and a correspondingly smaller chance of developing life. Calculating an HZ range and its long-term movement is never straightforward, as negative feedback loops such as the CNO cycle will tend to offset the increases in luminosity. Assumptions made about atmospheric conditions and geology thus have as great an impact on a putative HZ range as does stellar evolution: the proposed parameters of the Sun's HZ, for example, have fluctuated greatly.[27]
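The outward migration of an HZ with rising luminosity can be illustrated to first order. The sketch below assumes solar HZ limits of 0.95 and 1.37 AU (one conservative estimate; as noted above, proposed values vary widely) and scales them by the square root of the luminosity. It deliberately ignores atmospheric and geological feedbacks, and the function name and sample luminosities are hypothetical.

```python
import math

# Assumed solar HZ limits in AU; published estimates vary considerably.
SOLAR_HZ_INNER_AU = 0.95
SOLAR_HZ_OUTER_AU = 1.37


def scaled_habitable_zone(luminosity_solar: float) -> tuple[float, float]:
    """Scale the assumed solar HZ to a star of the given luminosity (solar units).

    Stellar flux falls off as 1/d^2, so the distance that receives a fixed
    flux grows as the square root of the luminosity.
    """
    if luminosity_solar <= 0:
        raise ValueError("luminosity must be positive")
    factor = math.sqrt(luminosity_solar)
    return SOLAR_HZ_INNER_AU * factor, SOLAR_HZ_OUTER_AU * factor


if __name__ == "__main__":
    for lum in (0.7, 1.0, 1.3):  # hypothetical luminosities as a star ages
        inner, outer = scaled_habitable_zone(lum)
        print(f"L = {lum:.1f} L_sun -> HZ {inner:.2f} to {outer:.2f} AU")
```

A planet on a fixed orbit stays habitable only while that orbit remains between the two scaled limits, which is why a rapidly brightening star leaves a shorter window.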

Second, no large-mass body such as a gas giant should be present in or relatively close to the HZ, since it would disrupt the formation of Earth-like bodies. The matter in the asteroid belt, for example, appears to have been unable to accrete into a planet due to orbital resonances with Jupiter; if the giant had appeared in the region that is now between the orbits of Venus and Mars, Earth would almost certainly not have developed in its present form. However, a gas giant inside the HZ might have habitable moons under the right conditions.[28]

In the Solar System, the inner planets are terrestrial, and the outer ones are gas giants, but discoveries of extrasolar planets suggest that this arrangement may not be at all common: numerous Jupiter-sized bodies have been found in close orbit about their primary, disrupting potential HZs. However, present data for extrasolar planets is likely to be skewed towards that type (large planets in close orbits) because they are far easier to identify; thus it remains to be seen which type of planetary system is the norm, or indeed if there is one.

Low stellar variation

Changes in luminosity are common to all stars, but the severity of such fluctuations covers a broad range. Most stars are relatively stable, but a significant minority of variable stars often undergo sudden and intense increases in luminosity and consequently in the amount of energy radiated toward bodies in orbit. These stars are considered poor candidates for hosting life-bearing planets, as their unpredictability and energy output changes would negatively impact organisms: living things adapted to a specific temperature range could not survive too great a temperature variation. Further, upswings in luminosity are generally accompanied by massive doses of gamma ray and X-ray radiation which might prove lethal. Atmospheres do mitigate such effects, but their atmosphere might not be retained by planets orbiting variables, because the high-frequency energy buffeting these planets would continually strip them of their protective covering.

The Sun, in this respect as in many others, is relatively benign: the variation between its maximum and minimum energy output is roughly 0.1% over its 11-year solar cycle. There is strong (though not undisputed) evidence that even minor changes in the Sun's luminosity have had significant effects on the Earth's climate well within the historical era: the Little Ice Age of the mid-second millennium, for instance, may have been caused by a relatively long-term decline in the Sun's luminosity.[29] Thus, a star does not have to be a true variable for differences in luminosity to affect habitability. Of known solar analogs, one that closely resembles the Sun is considered to be 18 Scorpii; unfortunately for the prospects of life existing in its proximity, the only significant difference between the two bodies is the amplitude of the solar cycle, which appears to be much greater for 18 Scorpii.[30]

High metallicity

While the bulk of material in any star is hydrogen and helium, there is a great variation in the amount of heavier elements (metals) that stars contain. A high proportion of metals in a star correlates to the amount of heavy material initially available in the protoplanetary disk. A smaller amount of metal makes the formation of planets much less likely, under the solar nebula theory of planetary system formation. Any planets that did form around a metal-poor star would probably be low in mass, and thus unfavorable for life. Spectroscopic studies of systems where exoplanets have been found to date confirm the relationship between high metal content and planet formation: "Stars with planets, or at least with planets similar to the ones we are finding today, are clearly more metal rich than stars without planetary companions."[31] This relationship between high metallicity and planet formation also means that habitable systems are more likely to be found around younger stars, since stars that formed early in the universe's history have low metal content.

Planetary characteristics

The chief assumption about habitable planets is that they are terrestrial. Such planets, roughly within one order of magnitude of Earth mass, are primarily composed of silicate rocks, and have not accreted the gaseous outer layers of hydrogen and helium found on gas giants. That life could evolve in the cloud tops of giant planets has not been decisively ruled out,[c] though it is considered unlikely, as they have no surface and their gravity is enormous.[35] The natural satellites of giant planets, meanwhile, remain valid candidates for hosting life.[32]

In February 2011 the Kepler Space Observatory Mission team released a list of 1235 extrasolar planet candidates, including 54 that may be in the habitable zone.[36][37] Six of the candidates in this zone are smaller than twice the size of Earth.[36] A more recent study found that one of these candidates (KOI 326.01) is much larger and hotter than first reported.[38] Based on the findings, the Kepler team estimated there to be "at least 50 billion planets in the Milky Way" of which "at least 500 million" are in the habitable zone.[39]

In analyzing which environments are likely to support life, a distinction is usually made between simple, unicellular organisms such as bacteria and archaea and complex metazoans (animals). Unicellularity necessarily precedes multicellularity in any hypothetical tree of life, and where single-celled organisms do emerge there is no assurance that greater complexity will then develop.[d] The planetary characteristics listed below are considered crucial for life generally, but in every case multicellular organisms are more demanding than unicellular life.

Mass

Low-mass planets are poor candidates for life for two reasons. First, their lesser gravity makes atmosphere retention difficult. Constituent molecules are more likely to reach escape velocity and be lost to space when buffeted by solar wind or stirred by collision. Planets without a thick atmosphere lack the matter necessary for primal biochemistry, have little insulation and poor heat transfer across their surfaces (for example, Mars, with its thin atmosphere, is colder than the Earth would be if it were at a similar distance from the Sun), and provide less protection against meteoroids and high-frequency radiation. Further, where an atmosphere is less dense than 0.006 Earth atmospheres, water cannot exist in liquid form as the required atmospheric pressure, 4.56 mm Hg (608 Pa) (0.18 inch Hg), does not occur. The temperature range at which water is liquid is smaller at low pressures generally.
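Two of the quantities in this paragraph are easy to check numerically: the pressure threshold and the effect of mass on escape velocity. The sketch below is illustrative only. The planetary masses and radii are commonly quoted approximate values that do not appear in the text, and the function names are hypothetical.

```python
import math

G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
PA_PER_ATM = 101_325.0   # pascals per standard atmosphere
PA_PER_MMHG = 133.322    # pascals per millimetre of mercury


def escape_velocity(mass_kg: float, radius_m: float) -> float:
    """Surface escape velocity in m/s: v = sqrt(2 G M / R)."""
    return math.sqrt(2.0 * G * mass_kg / radius_m)


def atm_to_pascal(pressure_atm: float) -> float:
    """Convert standard atmospheres to pascals."""
    return pressure_atm * PA_PER_ATM


if __name__ == "__main__":
    # Approximate reference values (assumed, not taken from the article).
    v_earth = escape_velocity(5.972e24, 6.371e6)
    v_mars = escape_velocity(6.417e23, 3.3895e6)
    print(f"Earth: {v_earth / 1000:.1f} km/s, Mars: {v_mars / 1000:.1f} km/s")

    threshold_pa = atm_to_pascal(0.006)
    print(f"0.006 atm = {threshold_pa:.0f} Pa = {threshold_pa / PA_PER_MMHG:.2f} mm Hg")
```

With these inputs Mars's escape velocity comes out at under half of Earth's, which is consistent with the difficulty a low-mass planet has in holding on to its atmosphere.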

Secondly, smaller planets have smaller diameters and thus higher surface-to-volume ratios than their larger cousins. Such bodies tend to lose the energy left over from their formation quickly and end up geologically dead, lacking the volcanoes, earthquakes and tectonic activity which supply the surface with life-sustaining material and the atmosphere with temperature moderators like carbon dioxide. Plate tectonics appear particularly crucial, at least on Earth: not only does the process recycle important chemicals and minerals, it also fosters bio-diversity through continent creation and increased environmental complexity and helps create the convective cells necessary to generate Earth's magnetic field.[40]
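The surface-to-volume argument follows directly from the geometry of a sphere, where the ratio reduces to 3/r. The following sketch compares Earth and Mars using approximate mean radii that are assumptions of this example rather than figures from the text.

```python
import math


def surface_to_volume_ratio(radius_km: float) -> float:
    """Surface area divided by volume for a sphere, in 1/km.

    (4 * pi * r^2) / (4/3 * pi * r^3) simplifies to 3 / r.
    """
    area = 4.0 * math.pi * radius_km ** 2
    volume = (4.0 / 3.0) * math.pi * radius_km ** 3
    return area / volume


if __name__ == "__main__":
    earth_radius_km = 6371.0  # approximate mean radius (assumed)
    mars_radius_km = 3389.5   # approximate mean radius (assumed)
    ratio = surface_to_volume_ratio(mars_radius_km) / surface_to_volume_ratio(earth_radius_km)
    print(f"Mars has about {ratio:.2f} times Earth's surface area per unit volume")
```

A higher ratio means more radiating surface per unit of heat-holding interior, so the smaller body cools faster, all else being equal.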

"Low mass" is partly a relative label: the Earth is low mass when compared to the Solar System's gas giants, but it is the largest, by diameter and mass, and the densest of all terrestrial bodies.[e] It is large enough to retain an atmosphere through gravity alone and large enough that its molten core remains a heat engine, driving the diverse geology of the surface (the decay of radioactive elements within a planet's core is the other significant component of planetary heating). Mars, by contrast, is nearly (or perhaps totally) geologically dead and has lost much of its atmosphere.[41] Thus it would be fair to infer that the lower mass limit for habitability lies somewhere between that of Mars and that of Earth or Venus: 0.3 Earth masses has been offered as a rough dividing line for habitable planets.[42] However, a 2008 study by the Harvard-Smithsonian Center for Astrophysics suggests that the dividing line may be higher. Earth may in fact lie on the lower boundary of habitability: if it were any smaller, plate tectonics would be impossible. Venus, which has 85% of Earth's mass, shows no signs of tectonic activity. Conversely, "super-Earths", terrestrial planets with higher masses than Earth, would have higher levels of plate tectonics and thus be firmly placed in the habitable range.[43]

Exceptional circumstances do offer exceptional cases: Jupiter's moon Io (which is smaller than any of the terrestrial planets) is volcanically dynamic because of the gravitational stresses induced by its orbit, and its neighbor Europa may have a liquid ocean or icy slush underneath a frozen shell also due to power generated from orbiting a gas giant.

Saturn's Titan, meanwhile, has an outside chance of harbouring life, as it has retained a thick atmosphere and has liquid methane seas on its surface. Organic-chemical reactions that only require minimum energy are possible in these seas, but whether any living system can be based on such minimal reactions is unclear, and would seem unlikely. These satellites are exceptions, but they prove that mass, as a criterion for habitability, cannot necessarily be considered definitive at this stage of our understanding.

A larger planet is likely to have a more massive atmosphere. A combination of higher escape velocity to retain lighter atoms, and extensive outgassing from enhanced plate tectonics may greatly increase the atmospheric pressure and temperature at the surface compared to Earth. The enhanced greenhouse effect of such a heavy atmosphere would tend to suggest that the habitable zone should be further out from the central star for such massive planets.

Finally, a larger planet is likely to have a large iron core. This allows for a magnetic field to protect the planet from stellar wind and cosmic radiation, which otherwise would tend to strip away planetary atmosphere and to bombard living things with ionized particles. Mass is not the only criterion for producing a magnetic field—as the planet must also rotate fast enough to produce a dynamo effect within its core[44]—but it is a significant component of the process.

Orbit and rotation

As with other criteria, stability is the critical consideration in evaluating the effect of orbital and rotational characteristics on planetary habitability. Orbital eccentricity is the difference between a planet's farthest and closest approach to its parent star divided by the sum of said distances. It is a ratio describing the shape of the elliptical orbit. The greater the eccentricity the greater the temperature fluctuation on a planet's surface. Although they are adaptive, living organisms can stand only so much variation, particularly if the fluctuations overlap both the freezing point and boiling point of the planet's main biotic solvent (e.g., water on Earth). If, for example, Earth's oceans were alternately boiling and freezing solid, it is difficult to imagine life as we know it having evolved. The more complex the organism, the greater the temperature sensitivity.[45] The Earth's orbit is almost wholly circular, with an eccentricity of less than 0.02; other planets in the Solar System (with the exception of Mercury) have eccentricities that are similarly benign.

Data collected on the orbital eccentricities of extrasolar planets has surprised most researchers: 90% have an orbital eccentricity greater than that found within the Solar System, and the average is fully 0.25.[46] This means that the vast majority of planets have highly eccentric orbits and of these, even if their average distance from their star is deemed to be within the HZ, they nonetheless would be spending only a small portion of their time within the zone.
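The definition of eccentricity given above translates directly into code, and the same quantity gives the swing in stellar flux over one orbit. This is a minimal sketch: Earth's approach distances (about 0.9833 and 1.0167 AU) are assumed reference values, and the function names are illustrative.

```python
def eccentricity(farthest: float, closest: float) -> float:
    """Eccentricity from farthest and closest approach (same units for both)."""
    return (farthest - closest) / (farthest + closest)


def flux_ratio(e: float) -> float:
    """Stellar flux at closest approach divided by flux at farthest approach.

    Distances are a(1 - e) and a(1 + e), and flux scales as 1/d^2.
    """
    if not 0.0 <= e < 1.0:
        raise ValueError("expected a bound orbit with 0 <= e < 1")
    return ((1.0 + e) / (1.0 - e)) ** 2


if __name__ == "__main__":
    e_earth = eccentricity(1.0167, 0.9833)  # AU, approximate (assumed)
    print(f"Earth: e = {e_earth:.4f}, flux ratio = {flux_ratio(e_earth):.3f}")
    print(f"e = 0.25: flux ratio = {flux_ratio(0.25):.3f}")
```

At the average exoplanet eccentricity of 0.25 quoted above, the flux at closest approach is nearly three times the flux at farthest approach, whereas for Earth the difference is only a few percent.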

A planet's movement around its rotational axis must also meet certain criteria if life is to have the opportunity to evolve. A first assumption is that the planet should have moderate seasons. If there is little or no axial tilt (or obliquity) relative to the perpendicular of the ecliptic, seasons will not occur and a main stimulant to biospheric dynamism will disappear. The planet would also be colder than it would be with a significant tilt: when the greatest intensity of radiation is always within a few degrees of the equator, warm weather cannot move poleward and a planet's climate becomes dominated by colder polar weather systems.

If a planet is radically tilted, meanwhile, seasons will be extreme and make it more difficult for a biosphere to achieve homeostasis. The axial tilt of the Earth is higher now (in the Quaternary) than it has been in the past, coinciding with reduced polar ice, warmer temperatures and less seasonal variation. Scientists do not know whether this trend will continue indefinitely with further increases in axial tilt (see Snowball Earth).

The exact effects of these changes can only be computer modelled at present, and studies have shown that even extreme tilts of up to 85 degrees do not absolutely preclude life "provided it does not occupy continental surfaces plagued seasonally by the highest temperature."[47] Not only the mean axial tilt, but also its variation over time must be considered. The Earth's tilt varies between 21.5 and 24.5 degrees over 41,000 years. A more drastic variation, or a much shorter periodicity, would induce climatic effects such as variations in seasonal severity.

Other orbital considerations include:

- The planet should rotate relatively quickly so that the day-night cycle is not overlong. If a day takes years, the temperature differential between the day and night side will be pronounced, and problems similar to those noted with extreme orbital eccentricity will come to the fore.

- The planet also should rotate quickly enough so that a magnetic dynamo may be started in its iron core to produce a magnetic field.

- Change in the direction of the axis rotation (precession) should not be pronounced. In itself, precession need not affect habitability as it changes the direction of the tilt, not its degree. However, precession tends to accentuate variations caused by other orbital deviations; see Milankovitch cycles. Precession on Earth occurs over a 26,000-year cycle.

Geochemistry

It is generally assumed that any extraterrestrial life that might exist will be based on the same fundamental biochemistry as found on Earth, as the four elements most vital for life, carbon, hydrogen, oxygen, and nitrogen, are also the most common chemically reactive elements in the universe. Indeed, simple biogenic compounds, including very simple amino acids such as glycine, have been found in meteorites and in the interstellar medium.[49] These four elements together comprise over 96% of Earth's collective biomass. Carbon has an unparalleled ability to bond with itself and to form a massive array of intricate and varied structures, making it an ideal material for the complex mechanisms that form living cells. Hydrogen and oxygen, in the form of water, compose the solvent in which biological processes take place and in which the first reactions occurred that led to life's emergence. The energy released in the formation of powerful covalent bonds between carbon and oxygen, available by oxidizing organic compounds, is the fuel of all complex life-forms. These four elements together make up amino acids, which in turn are the building blocks of proteins, the substance of living tissue. In addition, neither sulfur, required for the building of proteins, nor phosphorus, needed for the formation of DNA, RNA, and the adenosine phosphates essential to metabolism, is rare.

Relative abundance in space does not always mirror differentiated abundance within planets; of the four life elements, for instance, only oxygen is present in any abundance in the Earth's crust.[50] This can be partly explained by the fact that many of these elements, such as hydrogen and nitrogen, along with their simplest and most common compounds, such as carbon dioxide, carbon monoxide, methane, ammonia, and water, are gaseous at warm temperatures. In the hot region close to the Sun, these volatile compounds could not have played a significant role in the planets' geological formation. Instead, they were trapped as gases underneath the newly formed crusts, which were largely made of rocky, involatile compounds such as silica (a compound of silicon and oxygen, accounting for oxygen's relative abundance). Outgassing of volatile compounds through the first volcanoes would have contributed to the formation of the planets' atmospheres. The Miller–Urey experiment showed that, with the application of energy, simple inorganic compounds exposed to a primordial atmosphere can react to synthesize amino acids.[51]

Even so, volcanic outgassing could not have accounted for the amount of water in Earth's oceans.[52] The vast majority of the water (and arguably carbon) necessary for life must have come from the outer Solar System, away from the Sun's heat, where it could remain solid. Comets impacting the Earth in the Solar System's early years would have deposited vast amounts of water, along with the other volatile compounds life requires, onto the early Earth, providing a kick-start to the origin of life.

Thus, while there is reason to suspect that the four "life elements" ought to be readily available elsewhere, a habitable system probably also requires a supply of long-term orbiting bodies to seed inner planets. Without comets there is a possibility that life as we know it would not exist on Earth.

Microenvironments and extremophiles

The Atacama Desert provides an analog to Mars and an ideal environment to study the boundary between sterility and habitability.

One important qualification to habitability criteria is that only a tiny portion of a planet is required to support life. Astrobiologists often concern themselves with "micro-environments", noting that "we lack a fundamental understanding of how evolutionary forces, such as mutation, selection, and genetic drift, operate in micro-organisms that act on and respond to changing micro-environments."[53] Extremophiles are Earth organisms that live in niche environments under severe conditions generally considered inimical to life. Usually (although not always) unicellular, extremophiles include acutely alkaliphilic and acidophilic organisms and others that can survive water temperatures above 100 °C in hydrothermal vents.

The discovery of life in extreme conditions has complicated definitions of habitability, but also generated much excitement amongst researchers in greatly broadening the known range of conditions under which life can persist. For example, a planet that might otherwise be unable to support an atmosphere given the solar conditions in its vicinity, might be able to do so within a deep shadowed rift or volcanic cave.[54] Similarly, craterous terrain might offer a refuge for primitive life. The Lawn Hill crater has been studied as an astrobiological analog, with researchers suggesting rapid sediment infill created a protected microenvironment for microbial organisms; similar conditions may have occurred over the geological history of Mars.[55]

Earth environments that cannot support life are still instructive to astrobiologists in defining the limits of what organisms can endure. The heart of the Atacama desert, generally considered the driest place on Earth, appears unable to support life, but it has been subject to study by NASA and ESA for that reason: it provides a Mars analog and the moisture gradients along its edges are ideal for studying the boundary between sterility and habitability.[56] The Atacama was the subject of study in 2003 that partly replicated experiments from the Viking landings on Mars in the 1970s; no DNA could be recovered from two soil samples, and incubation experiments were also negative for biosignatures.[57]

Ecological factors

The two current ecological approaches for predicting the potential habitability use 19 or 20 environmental factors, with emphasis on water availability, temperature, presence of nutrients, an energy source, and protection from solar ultraviolet and galactic cosmic radiation.[58][59]

| Some habitability factors[59] | |
|---|---|
| Water | · Activity of liquid water · Past or future liquid (ice) inventories · Salinity, pH, and Eh of available water |
| Chemical environment | Nutrients: · C, H, N, O, P, S, essential metals, essential micronutrients · Fixed nitrogen · Availability/mineralogy Toxin abundances and lethality: · Heavy metals (e.g. Zn, Ni, Cu, Cr, As, Cd, etc.; some are essential, but toxic at high levels) · Globally distributed oxidizing soils |
| Energy for metabolism | Solar (surface and near-surface only) Geochemical (subsurface) · Oxidants · Reductants · Redox gradients |
| Conducive physical conditions | · Temperature · Extreme diurnal temperature fluctuations · Low pressure (is there a low-pressure threshold for terrestrial anaerobes?) · Strong ultraviolet germicidal irradiation · Galactic cosmic radiation and solar particle events (long-term accumulated effects) · Solar UV-induced volatile oxidants, e.g. O2−, O−, H2O2, O3 · Climate and its variability (geography, seasons, diurnal, and eventually, obliquity variations) · Substrate (soil processes, rock microenvironments, dust composition, shielding) · High CO2 concentrations in the global atmosphere · Transport (aeolian, ground water flow, surface water, glacial) |

Uninhabited habitats

An important distinction in habitability is between habitats that contain active life (inhabited habitats) and habitats that are habitable for life, but uninhabited.[60] Uninhabited (or vacant) habitats could arise on a planet where there was no origin of life (and no transfer of life to the planet from another, inhabited, planet), but where habitable environments exist. They might also occur on a planet that is inhabited, but the lack of connectivity between habitats might mean that many habitats remain uninhabited. Uninhabited habitats underline the importance of decoupling habitability and the presence of life. Charles Cockell and co-workers discuss Mars as one plausible world that might harbor uninhabited habitats. Other stellar systems might host planets that are habitable, but devoid of life.

Alternative star systems

In determining the feasibility of extraterrestrial life, astronomers had long focused their attention on stars like the Sun. However, since planetary systems that resemble the Solar System are proving to be rare, they have begun to explore the possibility that life might form in systems very unlike our own.

Binary systems

Typical estimates often suggest that 50% or more of all stellar systems are binary systems. This may be partly sample bias, as massive and bright stars tend to be in binaries and these are most easily observed and catalogued; a more precise analysis has suggested that the more common fainter stars are usually singular, and that up to two thirds of all stellar systems are therefore solitary.[61]

The separation between stars in a binary may range from less than one astronomical unit (AU, the average Earth–Sun distance) to several hundred. In the latter case, the gravitational effects will be negligible on a planet orbiting an otherwise suitable star and habitability potential will not be disrupted unless the orbit is highly eccentric (see Nemesis, for example). However, where the separation is significantly less, a stable orbit may be impossible. If a planet's distance to its primary exceeds about one fifth of the closest approach of the other star, orbital stability is not guaranteed.[62] Whether planets might form in binaries at all had long been unclear, given that gravitational forces might interfere with planet formation. Theoretical work by Alan Boss at the Carnegie Institution has shown that gas giants can form around stars in binary systems much as they do around solitary stars.[63]

One study of Alpha Centauri, the nearest star system to the Sun, suggested that binaries need not be discounted in the search for habitable planets. Centauri A and B have an 11 AU distance at closest approach (23 AU mean), and both should have stable habitable zones. A study of long-term orbital stability for simulated planets within the system shows that planets within approximately three AU of either star may remain rather stable (i.e. the semi-major axis deviating by less than 5% during 32 000 binary periods). The HZ for Centauri A is conservatively estimated at 1.2 to 1.3 AU and Centauri B at 0.73 to 0.74—well within the stable region in both cases.[64]
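The one-fifth rule of thumb for binary systems can be applied to the Alpha Centauri figures quoted above. The sketch below is a simple threshold check, not an orbital simulation; the function names and the default fraction of 0.2 are this example's reading of "about one fifth".

```python
def max_safe_orbit_au(companion_closest_approach_au: float, fraction: float = 0.2) -> float:
    """Largest planetary orbit (AU) that stays inside the rule-of-thumb limit."""
    return fraction * companion_closest_approach_au


def orbit_within_rule(planet_distance_au: float, companion_closest_approach_au: float) -> bool:
    """True if the planet's distance does not exceed one fifth of the closest approach."""
    return planet_distance_au <= max_safe_orbit_au(companion_closest_approach_au)


if __name__ == "__main__":
    closest_approach_au = 11.0  # Centauri A and B, from the text
    print(f"Rule-of-thumb limit: {max_safe_orbit_au(closest_approach_au):.1f} AU")
    # Outer edges of the conservative HZ estimates quoted in the text.
    for star, hz_outer_au in [("Centauri A", 1.3), ("Centauri B", 0.74)]:
        print(star, orbit_within_rule(hz_outer_au, closest_approach_au))
```

Both habitable zones fall inside the limit. The rule is conservative: it marks where stability is no longer guaranteed, which is compatible with the cited simulation finding orbits stable out to roughly three AU.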

Red dwarf systems

Relative star sizes and photospheric temperatures. Any planet around a red dwarf such as the one shown here (Gliese 229A) would have to huddle close to achieve Earth-like temperatures, probably inducing tidal locking. See Aurelia. Credit: MPIA/V. Joergens.

Determining the habitability of red dwarf stars could help determine how common life in the universe might be, as red dwarfs make up between 70 and 90% of all the stars in the galaxy.

Size

Astronomers for many years ruled out red dwarfs as potential abodes for life. Their small size (from 0.08 to 0.45 solar masses) means that their nuclear reactions proceed exceptionally slowly, and they emit very little light (from 3% of that produced by the Sun to as little as 0.01%). Any planet in orbit around a red dwarf would have to huddle very close to its parent star to attain Earth-like surface temperatures; from 0.3 AU (just inside the orbit of Mercury) for a star like Lacaille 8760, to as little as 0.032 AU for a star like Proxima Centauri[65] (such a world would have a year lasting just 6.3 days). At those distances, the star's gravity would cause tidal locking. One side of the planet would eternally face the star, while the other would always face away from it. The only ways in which potential life could avoid either an inferno or a deep freeze would be if the planet had an atmosphere thick enough to transfer the star's heat from the day side to the night side, or if there was a gas giant in the habitable zone, with a habitable moon, which would be locked to the planet instead of the star, allowing a more even distribution of radiation over the planet. It was long assumed that such a thick atmosphere would prevent sunlight from reaching the surface in the first place, preventing photosynthesis.
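The short "year" quoted for a planet at 0.032 AU can be approximated with Kepler's third law. The sketch below is a minimal check; the stellar mass of 0.12 solar masses for Proxima Centauri is an assumption that is not given in the text, and the planet's own mass is neglected.

```python
import math

DAYS_PER_YEAR = 365.25


def orbital_period_days(semi_major_axis_au: float, stellar_mass_solar: float) -> float:
    """Kepler's third law in solar units: P[yr]^2 = a[AU]^3 / M[solar masses].

    The planet's mass is neglected, which is reasonable for a small
    planet orbiting a star.
    """
    if semi_major_axis_au <= 0 or stellar_mass_solar <= 0:
        raise ValueError("distance and mass must be positive")
    period_years = math.sqrt(semi_major_axis_au ** 3 / stellar_mass_solar)
    return period_years * DAYS_PER_YEAR


# Assumed value, not taken from the article: Proxima Centauri's mass.
ASSUMED_PROXIMA_MASS_SOLAR = 0.12

period = orbital_period_days(0.032, ASSUMED_PROXIMA_MASS_SOLAR)
print(f"Orbital period at 0.032 AU: {period:.1f} days")
```

With the assumed mass the period comes out at about six days, close to the 6.3 days quoted; the exact figure depends on the stellar mass adopted.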

An artist's impression of GJ 667 Cc, a potentially habitable planet orbiting a red dwarf constituent in a trinary star system.

This pessimism has been tempered by research. Studies by Robert Haberle and Manoj Joshi of NASA's Ames Research Center in California have shown that a planet's atmosphere (assuming it included the greenhouse gases CO2 and H2O) need only have a pressure of 100 millibars, or about 10% of Earth's atmospheric pressure, for the star's heat to be effectively carried to the night side.[66] This is well within the levels required for photosynthesis, though water would still remain frozen on the dark side in some of their models. Martin Heath of Greenwich Community College has shown that seawater, too, could be effectively circulated without freezing solid if the ocean basins were deep enough to allow free flow beneath the night side's ice cap. Further research, including a consideration of the amount of photosynthetically active radiation, suggested that tidally locked planets in red dwarf systems might at least be habitable for higher plants.[67]

Other factors limiting habitability

Size is not the only factor in making red dwarfs potentially unsuitable for life, however. On a red dwarf planet, photosynthesis on the night side would be impossible, since it would never see the sun. On the day side, because the sun does not rise or set, areas in the shadows of mountains would remain so forever. Photosynthesis as we understand it would be complicated by the fact that a red dwarf produces most of its radiation in the infrared, and on the Earth the process depends on visible light. There are potential positives to this scenario. Numerous terrestrial ecosystems rely on chemosynthesis rather than photosynthesis, for instance, which would be possible in a red dwarf system. A static primary star position removes the need for plants to steer leaves toward the sun, deal with changing shade/sun patterns, or change from photosynthesis to stored energy during night. Because of the lack of a day-night cycle, including the weak light of morning and evening, far more energy would be available at a given radiation level.

Red dwarfs are far more variable and violent than their more stable, larger cousins. Often they are covered in starspots that can dim their emitted light by up to 40% for months at a time, while at other times they emit gigantic flares that can double their brightness in a matter of minutes.[68] Such variation would be very damaging for life, as it would not only destroy any complex organic molecules that could possibly form biological precursors but also blow off sizeable portions of the planet's atmosphere.
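To give a sense of scale for these brightness swings, the sketch below converts a change in stellar brightness into a change in a planet's equilibrium temperature. It assumes instantaneous radiative equilibrium with a fixed albedo, so that temperature scales as the fourth root of the received flux; the 255 K baseline is a hypothetical value chosen only for illustration, and none of this comes from the cited sources.

```python
def temperature_scale_factor(brightness_ratio: float) -> float:
    """Factor by which equilibrium temperature changes when stellar
    brightness is multiplied by brightness_ratio.

    Assumes radiative equilibrium with fixed albedo, so T scales as flux ** 0.25.
    """
    if brightness_ratio <= 0:
        raise ValueError("brightness ratio must be positive")
    return brightness_ratio ** 0.25


# Hypothetical baseline equilibrium temperature, for illustration only.
ASSUMED_BASELINE_K = 255.0

scenarios = [
    ("starspots (40% dimmer)", 0.6),
    ("flare (twice as bright)", 2.0),
]
for label, ratio in scenarios:
    factor = temperature_scale_factor(ratio)
    print(f"{label}: factor {factor:.2f}, {ASSUMED_BASELINE_K * factor:.0f} K")
```

Under these assumptions the dimming corresponds to a drop of roughly 12% in equilibrium temperature and the flare to a rise of roughly 19%, although a real planet with an atmosphere and oceans would not respond instantly to a flare lasting only minutes.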

For a planet around a red dwarf star to support life, it would need a magnetic field to protect it from the flares, and generating one requires rapid rotation. A tidally locked planet rotates only very slowly, and so cannot produce a geodynamo at its core. However, the violent flaring period of a red dwarf's life cycle is estimated to last only roughly the first 1.2 billion years of its existence. If a planet forms far away from a red dwarf so as to avoid tidal locking, and then migrates into the star's habitable zone after this turbulent initial period, it is possible that life may have a chance to develop.[69]

Longevity and ubiquity

There is, however, one major advantage that red dwarfs have over other stars as abodes for life: they live a long time. It took 4.5 billion years before humanity appeared on Earth, and life as we know it will see suitable conditions for 1[70] to 2.3[71] billion years more. Red dwarfs, by contrast, could live for trillions of years because their nuclear reactions are far slower than those of larger stars, meaning that life would have longer to evolve and survive.

While the odds of finding a planet in the habitable zone around any specific red dwarf are slim, the total amount of habitable zone around all red dwarfs combined is equal to the total amount around Sun-like stars given their ubiquity.[72] Furthermore, this total amount of habitable zone will last longer, because red dwarf stars live for hundreds of billions of years or even longer on the main sequence.[73]

Massive stars

Recent research suggests that very large stars, greater than ~100 solar masses, could have planetary systems consisting of hundreds of Mercury-sized planets within the habitable zone. Such systems could also contain brown dwarfs and low-mass stars (~0.1–0.3 solar masses).[74] However, the very short lifespans of stars of more than a few solar masses would scarcely allow time for a planet to cool, let alone the time needed for a stable biosphere to develop. Massive stars are thus eliminated as possible abodes for life.[75]

A massive-star system could nonetheless be a progenitor of life in another way: through the supernova explosion of the massive star in the central part of the system. This supernova will disperse heavier elements, created during the phase when the massive star has moved off the main sequence, throughout its vicinity. The systems of any low-mass stars within the former massive-star system (which are still on the main sequence) may then be enriched by the relatively large supply of heavy elements so close to a supernova explosion. This says nothing, however, about what types of planets would form as a result of the supernova material, or what their habitability potential would be.

The galactic neighborhood

Along with the characteristics of planets and their star systems, the wider galactic environment may also impact habitability. Scientists have considered the possibility that particular areas of galaxies (galactic habitable zones) are better suited to life than others; the Solar System, located in the Orion Spur on the Milky Way galaxy's edge, is considered to be in a life-favorable spot:[76]

- It is not in a globular cluster where immense star densities are inimical to life, given excessive radiation and gravitational disturbance. Globular clusters are also primarily composed of older, probably metal-poor, stars. Furthermore, in globular clusters, the great ages of the stars would mean a large amount of stellar evolution by the host or other nearby stars, which due to their proximity may cause extreme harm to life on any planets, provided that they can form.

- It is not near an active gamma ray source.

- It is not near the galactic center where once again star densities increase the likelihood of ionizing radiation (e.g., from magnetars and supernovae). A supermassive black hole is also believed to lie at the middle of the galaxy which might prove a danger to any nearby bodies.

- The circular orbit of the Sun around the galactic center keeps it out of the way of the galaxy's spiral arms where intense radiation and gravitation may again lead to disruption.[77]

While stellar crowding proves disadvantageous to habitability, so too does extreme isolation. A star as metal-rich as the Sun would probably not have formed in the very outermost regions of the Milky Way given a decline in the relative abundance of metals and a general lack of star formation. Thus, a "suburban" location, such as the Solar System enjoys, is preferable to a galaxy's center or farthest reaches.[78]

Other considerations

Alternative biochemistries

While most investigations of extraterrestrial life start with the assumption that advanced life-forms must have similar requirements for life as on Earth, the hypothesis of other types of biochemistry suggests the possibility of lifeforms evolving around a different metabolic mechanism. In Evolving the Alien, biologist Jack Cohen and mathematician Ian Stewart argue that astrobiology, based on the Rare Earth hypothesis, is restrictive and unimaginative. They suggest that Earth-like planets may be very rare, but non-carbon-based complex life could possibly emerge in other environments. The most frequently mentioned alternative to carbon is silicon-based life, while ammonia and hydrocarbons are sometimes suggested as alternative solvents to water. The astrobiologist Dirk Schulze-Makuch and other scientists have proposed a Planet Habitability Index whose criteria include "potential for holding a liquid solvent" that is not necessarily restricted to water.[79][80]

More speculative ideas have focused on bodies altogether different from Earth-like planets. Astronomer Frank Drake, a well-known proponent of the search for extraterrestrial life, imagined life on a neutron star: submicroscopic "nuclear molecules" combining to form creatures with a life cycle millions of times quicker than Earth life.[81] Called "imaginative and tongue-in-cheek", the idea gave rise to science fiction depictions.[82] Carl Sagan, another optimist with regard to extraterrestrial life, considered the possibility of organisms that are always airborne within the high atmosphere of Jupiter in a 1976 paper.[33][34] Cohen and Stewart also envisioned life in both a solar environment and in the atmosphere of a gas giant.

"Good Jupiters"

"Good Jupiters" are gas giants, like the Solar System's Jupiter, that orbit their stars in circular orbits far enough away from the habitable zone not to disturb it but close enough to "protect" terrestrial planets in closer orbit in two critical ways. First, they help to stabilize the orbits, and thereby the climates, of the inner planets. Second, they keep the inner Solar System relatively free of comets and asteroids that could cause devastating impacts.[83] Jupiter orbits the Sun at about five times the distance between the Earth and the Sun. This is the rough distance at which we should expect to find good Jupiters elsewhere. Jupiter's "caretaker" role was dramatically illustrated in 1994 when Comet Shoemaker–Levy 9 impacted the giant; had Jovian gravity not captured the comet, it might well have entered the inner Solar System.

However, the story is not quite so clear cut. Research has shown that Jupiter's role in determining the rate at which objects hit the Earth is, at the very least, significantly more complicated than once thought.[84][85][86][87] Whilst it is true that Jupiter acts as a shield against the long-period comets (which contribute only a small fraction of the impact risk to the Earth), it actually seems to increase the rate at which asteroids and short-period comets are flung towards our planet. Were Jupiter absent, it seems likely that the Earth would experience significantly fewer impacts from potentially hazardous objects. By extension, it is becoming clear that the presence of Jupiter-like planets can no longer be regarded as a prerequisite for planetary habitability. Indeed, our first searches for life beyond the Solar System might be better directed to systems where no such planet has formed, since in those systems less material will be directed to impact on the potentially inhabited planets.

The role of Jupiter in the early history of the Solar System is somewhat better established, and the source of significantly less debate. Early in the Solar System's history, Jupiter is accepted as having played an important role in the hydration of our planet: it increased the eccentricity of asteroid belt orbits and enabled many to cross Earth's orbit and supply the planet with important volatiles. Before Earth reached half its present mass, icy bodies from the Jupiter–Saturn region and small bodies from the primordial asteroid belt supplied water to the Earth due to the gravitational scattering of Jupiter and, to a lesser extent, Saturn.[88] Thus, while the gas giants are now helpful protectors, they were once suppliers of critical habitability material.

In contrast, Jupiter-sized bodies that orbit too close to the habitable zone but not in it (as in 47 Ursae Majoris), or have a highly elliptical orbit that crosses the habitable zone (like 16 Cygni B) make it very difficult for an independent Earth-like planet to exist in the system. See the discussion of a stable habitable zone above. However, during the process of migrating into a habitable zone, a Jupiter-size planet may capture a terrestrial planet as a moon. Even if such a planet is initially loosely bound and following a strongly inclined orbit, gravitational interactions with the star can stabilize the new moon into a close, circular orbit that is coplanar with the planet's orbit around the star.[89]