Microwave transmission is the transmission of information by electromagnetic waves with wavelengths in the microwave frequency range of 300 MHz to 300 GHz (1 m to 1 mm wavelength) of the electromagnetic spectrum. Microwave signals are normally limited to the line of sight, so long-distance transmission using these signals requires a series of repeaters forming a microwave relay network. It is possible to use microwave signals in over-the-horizon communications using tropospheric scatter, but such systems are expensive and generally used only in specialist roles.
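The band edges above follow from the relation λ = c/f between free-space wavelength and frequency. The short Python sketch below checks them; the function name `wavelength_m` is an illustrative choice, not taken from any library.

```python
# Minimal sketch: free-space wavelength from frequency, lambda = c / f.
SPEED_OF_LIGHT = 299_792_458.0  # m/s, exact by definition of the metre

def wavelength_m(freq_hz: float) -> float:
    """Return the free-space wavelength in metres for a frequency in hertz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT / freq_hz

if __name__ == "__main__":
    # The two edges of the microwave range as defined above.
    for label, f in (("300 MHz", 300e6), ("300 GHz", 300e9)):
        print(f"{label}: {wavelength_m(f):.4f} m")
```

The quoted "1 m" and "1 mm" are rounded values; with the exact speed of light the edges come out slightly under 1 m and 1 mm.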

Although an experimental 40-mile (64 km) microwave telecommunication link across the English Channel was demonstrated in 1931, the development of radar in World War II provided the technology for practical exploitation of microwave communication. During the war, the British Army introduced the Wireless Set No. 10, which used microwave relays to multiplex eight telephone channels over long distances. A link across the English Channel allowed General Bernard Montgomery to remain in continual contact with his group headquarters in London.

In the post-war era, microwave technology developed rapidly, leading to the construction of several transcontinental microwave relay systems in North America and Europe. In addition to carrying thousands of telephone calls at a time, these networks were also used to send television signals for cross-country broadcast and, later, computer data. Communication satellites took over the television broadcast market during the 1970s and 1980s, and the introduction of long-distance fibre-optic systems in the 1980s and especially the 1990s led to the rapid rundown of the relay networks, most of which are now abandoned.

In recent years, there has been an explosive increase in the use of the microwave spectrum by new telecommunication technologies such as wireless networks and direct-broadcast satellites, which broadcast television and radio directly into consumers' homes. Larger line-of-sight links are once again popular for handling connections between mobile telephone towers, although these are generally not organized into long relay chains.

Uses

Microwaves are widely used for point-to-point communications because their small wavelength allows conveniently sized antennas to direct them in narrow beams, which can be pointed directly at the receiving antenna. This allows nearby microwave equipment to use the same frequencies without interfering with each other, as lower-frequency radio waves would. This frequency reuse conserves scarce radio spectrum. Another advantage is that the high frequency of microwaves gives the microwave band a very large information-carrying capacity; the microwave band has a bandwidth 30 times that of all the rest of the radio spectrum below it. A disadvantage is that microwaves are limited to line-of-sight propagation; they cannot pass around hills or mountains as lower-frequency radio waves can.
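The "30 times" figure depends on where the microwave band is taken to start and end, and definitions of the band vary. The arithmetic sketch below treats the spectrum below the band as running from 0 Hz (the few kilohertz at the bottom of the radio spectrum are negligible) and compares two assumed sets of band edges: 1 to 30 GHz, which gives a ratio of about 30, and the 300 MHz to 300 GHz range given at the top of this article, which gives a much larger ratio. The function name `bandwidth_ratio` is hypothetical.

```python
def bandwidth_ratio(band_low_hz: float, band_high_hz: float) -> float:
    """Width of a band divided by all spectrum from 0 Hz up to the band's lower edge."""
    if not 0 < band_low_hz < band_high_hz:
        raise ValueError("need 0 < band_low_hz < band_high_hz")
    return (band_high_hz - band_low_hz) / band_low_hz

if __name__ == "__main__":
    # Assumed band edges; neither is presented as the only valid definition.
    print("1-30 GHz:", bandwidth_ratio(1e9, 30e9))
    print("300 MHz-300 GHz:", bandwidth_ratio(300e6, 300e9))
```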

Microwave radio transmission is commonly used in point-to-point communication systems on the surface of the Earth, in satellite communications, and in deep space radio communications. Other parts of the microwave radio band are used for radars, radio navigation systems, sensor systems, and radio astronomy.

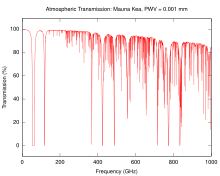

The band between 30 GHz and 300 GHz, at the top of the microwave range, is called the "millimeter wave" band because its wavelengths range from 10 mm to 1 mm. Radio waves in this band are strongly attenuated by the gases of the atmosphere. This limits their practical transmission distance to a few kilometers, so these frequencies cannot be used for long-distance communication. The electronic technologies needed in the millimeter wave band are also in an earlier state of development than those of the lower microwave band.

- Wireless transmission of information

- One-way and two-way telecommunication using communications satellites

- Terrestrial microwave relay links in telecommunications networks including backbone or backhaul carriers in cellular networks

More recently, microwaves have been used for wireless power transmission.

Microwave radio relay

Microwave radio relay is a technology, widely used in the 1950s and 1960s, for transmitting information such as long-distance telephone calls and television programs between two terrestrial points on a narrow beam of microwaves. In microwave radio relay, a microwave transmitter and directional antenna transmit a narrow beam of microwaves carrying many channels of information on a line-of-sight path to another relay station, where it is received by a directional antenna and receiver, forming a fixed radio connection between the two points. The link was often bidirectional, using a transmitter and receiver at each end to transmit data in both directions. The requirement of a line of sight limits the separation between stations to the visual horizon, about 30 to 50 miles (48 to 80 km). For longer distances, the receiving station could function as a relay, retransmitting the received information to the next station along the route. Chains of microwave relay stations were used to transmit telecommunication signals over transcontinental distances. Microwave relay stations were often located on tall buildings and mountaintops, with their antennas mounted on towers to get maximum range.
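The 30 to 50 mile station spacing can be estimated from antenna height. Over a smooth spherical Earth, the distance from an antenna at height h to the horizon is approximately sqrt(2·k·R·h), where R is the Earth's radius and k is an effective-radius factor (k = 1 for the geometric horizon; about 4/3 is the usual allowance for atmospheric refraction). The sketch below assumes smooth terrain and ignores Fresnel-zone clearance; the function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius

def horizon_distance_m(height_m: float, k: float = 1.0) -> float:
    """Distance to the horizon from an antenna height_m above a smooth sphere.

    k is the effective-Earth-radius factor (1.0 = geometric, about 4/3 = standard
    atmosphere). Uses sqrt(2*k*R*h), valid when h is much smaller than R.
    """
    if height_m < 0:
        raise ValueError("height must be non-negative")
    return math.sqrt(2.0 * k * EARTH_RADIUS_M * height_m)

def max_hop_km(h1_m: float, h2_m: float, k: float = 1.0) -> float:
    """Longest unobstructed hop between two antennas over smooth terrain, in km."""
    return (horizon_distance_m(h1_m, k) + horizon_distance_m(h2_m, k)) / 1000.0

if __name__ == "__main__":
    # Example tower heights chosen for illustration.
    for h in (50, 100):
        print(f"two {h} m towers: {max_hop_km(h, h):.0f} km geometric, "
              f"{max_hop_km(h, h, 4 / 3):.0f} km with k = 4/3")
```

With two 50 m towers the geometric limit is about 50 km, and with two 100 m towers about 71 km, which brackets the range quoted above.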

Beginning in the 1950s, networks of microwave relay links, such as the AT&T Long Lines system in the U.S., carried long-distance telephone calls and television programs between cities. The first system, dubbed TDX and built by AT&T, connected New York and Boston in 1947 with a series of eight radio relay stations. Through the 1950s, AT&T deployed a slightly improved version, known as TD2, across the U.S. These networks included long daisy-chained links that traversed mountain ranges and spanned continents. The launch of communication satellites in the 1970s provided a cheaper alternative. Much of the transcontinental traffic is now carried by satellites and optical fibers, but microwave relay remains important for shorter distances.

Planning

Because the radio waves travel in narrow beams confined to a line-of-sight path from one antenna to the other, they do not interfere with other microwave equipment, so nearby microwave links can use the same frequencies. Antennas must be highly directional (high gain); these antennas are installed in elevated locations such as large radio towers in order to be able to transmit across long distances. Typical antennas used in radio relay link installations are parabolic antennas, dielectric lens antennas, and horn-reflector antennas, with diameters of up to 4 meters. Highly directive antennas permit economical use of the available frequency spectrum, despite long transmission distances.
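How directive such an antenna is can be estimated with two standard approximations for a parabolic dish: gain G = η(πD/λ)², where D is the diameter and η the aperture efficiency, and a half-power beamwidth of roughly 70·λ/D degrees. The sketch below assumes η = 0.55, a typical textbook value rather than a property of any particular antenna, and the dish sizes and frequencies in the example are illustrative.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def parabolic_gain_dbi(diameter_m: float, freq_hz: float, efficiency: float = 0.55) -> float:
    """Approximate dish gain G = efficiency * (pi * D / lambda)^2, returned in dBi."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    gain_linear = efficiency * (math.pi * diameter_m / wavelength) ** 2
    return 10.0 * math.log10(gain_linear)

def half_power_beamwidth_deg(diameter_m: float, freq_hz: float) -> float:
    """Rule-of-thumb half-power beamwidth of about 70 * lambda / D degrees."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    return 70.0 * wavelength / diameter_m

if __name__ == "__main__":
    # Example dish sizes and frequencies chosen for illustration only.
    for d, f in ((4.0, 6e9), (0.6, 23e9)):
        print(f"{d} m dish at {f / 1e9:.0f} GHz: {parabolic_gain_dbi(d, f):.1f} dBi, "
              f"beamwidth about {half_power_beamwidth_deg(d, f):.2f} deg")
```

A 4 m dish at 6 GHz comes out at roughly 45 dBi with a beam under one degree wide, which illustrates why such links can reuse frequencies at nearby sites.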

Because of the high frequencies used, a line-of-sight path between the stations is required. Additionally, to avoid attenuation of the beam, an area around the beam called the first Fresnel zone must be free from obstacles; obstacles within it cause unwanted attenuation. High mountain peaks or ridges are therefore often ideal sites.
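The size of the first Fresnel zone at a point along the path is given by r₁ = sqrt(λ·d₁·d₂ / (d₁ + d₂)), where d₁ and d₂ are the distances from that point to the two antennas; it is widest at mid-path. The sketch below evaluates it for a hypothetical 40 km hop at 6 GHz. The 60% clearance figure in the example is a commonly used planning rule of thumb, included here as an assumption rather than a requirement stated in this article.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def first_fresnel_radius_m(d1_m: float, d2_m: float, freq_hz: float) -> float:
    """Radius of the first Fresnel zone at distances d1 and d2 from the two antennas.

    r1 = sqrt(lambda * d1 * d2 / (d1 + d2)), valid when r1 is much smaller than d1 and d2.
    """
    if d1_m <= 0 or d2_m <= 0:
        raise ValueError("the point must lie strictly between the two antennas")
    wavelength = SPEED_OF_LIGHT / freq_hz
    return math.sqrt(wavelength * d1_m * d2_m / (d1_m + d2_m))

if __name__ == "__main__":
    # Hypothetical 40 km hop at 6 GHz, evaluated at mid-path where the zone is widest.
    r1 = first_fresnel_radius_m(20_000, 20_000, 6e9)
    print(f"first Fresnel zone radius at mid-path: {r1:.1f} m")
    # Assumed rule of thumb: keep at least 60% of this radius clear.
    print(f"60% clearance: {0.6 * r1:.1f} m")
```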

In addition to conventional repeaters, which use back-to-back radios transmitting on different frequencies, obstructions in microwave paths can be dealt with by using passive repeaters or on-frequency repeaters.

Obstacles, the curvature of the Earth, the geography of the area and reception issues arising from the use of nearby land (such as in manufacturing and forestry) are important issues to consider when planning radio links. In the planning process, it is essential to produce "path profiles", which provide information about the terrain and Fresnel zones affecting the transmission path. The presence of a water surface, such as a lake or river, along the path must also be taken into consideration, since it can reflect the beam, and the direct and reflected beams can interfere at the receiving antenna, causing multipath fading. Multipath fades are usually deep only in a small spot and a narrow frequency band, so space and/or frequency diversity schemes can be applied to mitigate these effects.
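A path profile can be checked point by point: at each sampled distance, the height of the straight ray between the antennas is compared with the terrain height plus the Earth bulge d₁·d₂/(2·k·R), and the resulting clearance is compared with a fraction of the first Fresnel zone radius. The sketch below is a minimal version of that check under stated assumptions: a k-factor of 4/3, a 60% clearance criterion, and a hypothetical three-point profile. The names `ProfilePoint` and `clearance_report` are illustrative, not from any planning tool.

```python
import math
from dataclasses import dataclass

SPEED_OF_LIGHT = 299_792_458.0  # m/s
EARTH_RADIUS_M = 6_371_000.0    # mean Earth radius

@dataclass
class ProfilePoint:
    distance_m: float  # distance from station A along the path
    terrain_m: float   # terrain (plus trees or buildings) height above sea level

def clearance_report(points, path_length_m, h_a_m, h_b_m, freq_hz, k=4 / 3, fraction=0.6):
    """Return (distance, clearance, required) tuples for each profile point.

    clearance: height of the straight A-B ray above the obstacle, after adding the
    Earth bulge d1*d2/(2*k*R) to the terrain height.
    required: `fraction` of the first Fresnel zone radius at that point.
    """
    wavelength = SPEED_OF_LIGHT / freq_hz
    report = []
    for p in points:
        d1 = p.distance_m
        d2 = path_length_m - d1
        ray_m = h_a_m + (h_b_m - h_a_m) * d1 / path_length_m
        bulge_m = d1 * d2 / (2.0 * k * EARTH_RADIUS_M)
        clearance_m = ray_m - (p.terrain_m + bulge_m)
        fresnel_m = math.sqrt(wavelength * d1 * d2 / path_length_m)
        report.append((d1, clearance_m, fraction * fresnel_m))
    return report

if __name__ == "__main__":
    # Hypothetical 40 km, 6 GHz hop with antennas at 150 m and 120 m above sea level.
    profile = [ProfilePoint(10_000, 40.0), ProfilePoint(20_000, 60.0), ProfilePoint(30_000, 105.0)]
    for d1, clearance, required in clearance_report(profile, 40_000, 150.0, 120.0, 6e9):
        status = "ok" if clearance >= required else "insufficient"
        print(f"{d1 / 1000:.0f} km: clearance {clearance:.1f} m, need {required:.1f} m -> {status}")
```

In this made-up profile the ridge at 30 km clears the direct ray but not 60% of the Fresnel zone, the kind of result that would prompt higher towers or a different route.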

Atmospheric stratification typically causes the radio path to bend downward, so a longer hop is possible: the equivalent Earth radius increases from 6370 km to about 8500 km (the 4/3 equivalent-radius effect). Unusual vertical profiles of temperature, humidity and pressure may produce large deviations and distortion of the propagation and affect transmission quality. Rain fade caused by high-intensity rain and snow must also be considered as an impairment, especially at frequencies above 10 GHz. All of these factors contribute to path loss and make it necessary to compute suitable power margins in order to keep the link operating for a high percentage of the time, such as the 99.99% or 99.999% availability standard used in the "carrier class" services of most telecommunication operators.
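Availability targets translate directly into an allowed outage time per year, which is what the power margin is sized to meet. The sketch below does that conversion, assuming an average year of 365.25 days, and also checks the 4/3 equivalent radius quoted above. The function name is hypothetical.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year, 525,960 minutes

def allowed_outage_minutes(availability_percent: float) -> float:
    """Minutes per year a link may be unavailable while still meeting the target."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    return (1.0 - availability_percent / 100.0) * MINUTES_PER_YEAR

# Equivalent Earth radius with the 4/3 factor: about 8493 km.
EFFECTIVE_EARTH_RADIUS_KM = 6370.0 * 4.0 / 3.0

if __name__ == "__main__":
    for target in (99.99, 99.999):
        print(f"{target}% availability -> {allowed_outage_minutes(target):.1f} min/year of outage")
    print(f"effective Earth radius: {EFFECTIVE_EARTH_RADIUS_KM:.0f} km")
```

Each additional "nine" cuts the allowed outage by a factor of ten, from roughly 53 minutes a year at 99.99% to roughly 5 minutes at 99.999%.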

The longest known microwave radio relay hop (the hop distance being the distance between two microwave stations) crosses the Red Sea: a 360 km (224 mi) hop between Jebel Erba (2170 m a.s.l., 20°44′46.17″N 36°50′24.65″E, Sudan) and Jebel Dakka (2572 m a.s.l., 21°5′36.89″N 40°17′29.80″E, Saudi Arabia). The link was built in 1979 by Telettra to transmit 300 telephone channels and one TV signal in the 2 GHz frequency band.
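The figures for the Red Sea hop can be used as a worked example. The sketch below computes the free-space path loss, 20·log₁₀(4πd/λ), for 360 km at 2 GHz, and compares the hop length with the smooth-Earth horizon limit for the two site elevations. It is a simplification under stated assumptions: it treats the site elevations as antenna heights above a smooth sea-level sphere and ignores mast heights, terrain and Fresnel clearance. Under those assumptions the geometric limit (k = 1) is roughly 347 km, shorter than the hop, while the 4/3 factor gives roughly 401 km, suggesting the link relies on normal atmospheric refraction.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s
EARTH_RADIUS_M = 6_371_000.0    # mean Earth radius

def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss, 20*log10(4*pi*d/lambda), in dB."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    return 20.0 * math.log10(4.0 * math.pi * distance_m / wavelength)

def max_hop_km(h1_m: float, h2_m: float, k: float) -> float:
    """Sum of the two horizon distances sqrt(2*k*R*h) over a smooth sphere, in km."""
    return (math.sqrt(2 * k * EARTH_RADIUS_M * h1_m)
            + math.sqrt(2 * k * EARTH_RADIUS_M * h2_m)) / 1000.0

if __name__ == "__main__":
    hop_m, freq_hz = 360_000, 2e9       # figures quoted for the Red Sea link
    h_erba, h_dakka = 2170.0, 2572.0    # site elevations above sea level
    print(f"free-space path loss: {fspl_db(hop_m, freq_hz):.1f} dB")
    print(f"horizon limit, k = 1:   {max_hop_km(h_erba, h_dakka, 1.0):.0f} km")
    print(f"horizon limit, k = 4/3: {max_hop_km(h_erba, h_dakka, 4 / 3):.0f} km")
```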

The considerations above are typical of terrestrial microwave links in so-called backbone networks. Until the 1990s, such links mostly used hops of a few tens of kilometers (typically 10 to 60 km) and frequency bands below 10 GHz, and, above all, the information to be transmitted was a stream containing a fixed-capacity block. The target was to supply the requested availability for the whole block (plesiochronous digital hierarchy, PDH, or synchronous digital hierarchy, SDH). Fading and multipath affecting the link for short periods during the day had to be counteracted by the diversity architecture. During the 1990s, microwave radio links began to be widely used for urban links in cellular networks. Link distances became shorter (less than 10 km, typically 3 to 5 km), and frequencies increased to bands between 11 and 43 GHz and, more recently, up to 86 GHz (E-band). Furthermore, link planning now deals more with intense rainfall and less with multipath, so diversity schemes have become less common. Another big change during the last decade was an evolution toward packet radio transmission, for which new countermeasures, such as adaptive modulation, have been adopted.
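Adaptive modulation lets a packet link trade capacity for robustness instead of dropping entirely during a rain fade: as the received signal-to-noise ratio (SNR) falls, the radio steps down to a less dense modulation. The sketch below shows the selection logic only. The modulation table, its SNR thresholds and the margin are hypothetical placeholders, not values from any standard or product, and real radios also add hysteresis over time to avoid rapid switching.

```python
# Hypothetical modulation table: (name, bits per symbol, minimum SNR in dB).
# The thresholds are illustrative placeholders only.
MODULATIONS = [
    ("QPSK", 2, 10.0),
    ("16-QAM", 4, 17.0),
    ("64-QAM", 6, 23.0),
    ("256-QAM", 8, 29.0),
    ("1024-QAM", 10, 35.0),
]

def select_modulation(snr_db: float, margin_db: float = 1.0):
    """Pick the densest modulation whose threshold plus margin the SNR meets.

    Returns (name, bits_per_symbol), or None if even the most robust scheme
    cannot be supported (the link is in outage).
    """
    best = None
    for name, bits, threshold_db in MODULATIONS:  # ordered from robust to dense
        if snr_db >= threshold_db + margin_db:
            best = (name, bits)
    return best

def throughput_mbps(symbol_rate_mbaud: float, snr_db: float) -> float:
    """Raw throughput for a given symbol rate at the selected modulation."""
    choice = select_modulation(snr_db)
    return 0.0 if choice is None else symbol_rate_mbaud * choice[1]

if __name__ == "__main__":
    # A made-up SNR sequence as a rain cell crosses the path.
    for snr in (38.0, 30.0, 21.0, 12.0, 8.0):
        choice = select_modulation(snr)
        label = choice[0] if choice else "outage"
        print(f"SNR {snr:.0f} dB -> {label}, {throughput_mbps(50.0, snr):.0f} Mbit/s")
```

Capacity degrades in steps as the rain intensifies, and the link only drops when the SNR falls below the most robust scheme, which is why packet links with adaptive modulation need less fixed margin than a fixed-capacity PDH or SDH block.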

The emitted power is regulated for cellular and microwave systems. These microwave transmissions typically use an emitted power of 0.03 to 0.30 W, radiated by a parabolic antenna in a narrow beam diverging by a few degrees (typically 1 to 3 or 4 degrees). The microwave channel arrangement is regulated by the International Telecommunication Union (ITU-R) and by regional and national regulations (ETSI, FCC). In the last decade the dedicated spectrum for each microwave band has become extremely crowded, motivating the use of techniques that increase transmission capacity, such as frequency reuse, polarization-division multiplexing, cross-polarization interference cancellation (XPIC), and MIMO.
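Link budgets usually express these powers in dBm (decibels relative to 1 mW), and the effective isotropic radiated power (EIRP) adds the antenna gain and subtracts feeder losses. The sketch below converts the quoted 0.03 to 0.30 W range; the 38 dBi antenna gain and 2 dB feeder loss in the example are assumed values for illustration only.

```python
import math

def watts_to_dbm(power_w: float) -> float:
    """Convert power in watts to dBm (decibels relative to 1 mW)."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return 10.0 * math.log10(power_w * 1000.0)

def eirp_dbm(tx_power_w: float, antenna_gain_dbi: float, feeder_loss_db: float = 0.0) -> float:
    """Effective isotropic radiated power: transmitter power + antenna gain - feeder loss."""
    return watts_to_dbm(tx_power_w) + antenna_gain_dbi - feeder_loss_db

if __name__ == "__main__":
    for p in (0.03, 0.30):
        # Assumed example values: 38 dBi antenna gain, 2 dB feeder loss.
        print(f"{p} W = {watts_to_dbm(p):.1f} dBm, EIRP = {eirp_dbm(p, 38.0, 2.0):.1f} dBm")
```

The transmitter itself spans only about 15 to 25 dBm; the narrow beam, through the antenna gain, accounts for most of the radiated power in the direction of the far station.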

History

The history of radio relay communication began in 1898 with a publication by Johann Mattausch in the Austrian journal Zeitschrift für Electrotechnik, but his proposal was primitive and not suitable for practical use. The first experiments with radio repeater stations to relay radio signals were done in 1899 by Emile Guarini-Foresio. However, the low-frequency and medium-frequency radio waves used during the first 40 years of radio proved able to travel long distances by ground wave and skywave propagation. The need for radio relay did not really arise until the exploitation of microwaves in the 1940s; microwaves travel by line of sight and so were limited by the visual horizon to a propagation distance of about 40 miles (64 km).

In 1931 an Anglo-French consortium headed by Andre C. Clavier demonstrated an experimental microwave relay link across the English Channel using 10-foot (3 m) dishes. Telephony, telegraph, and facsimile data were transmitted over the bidirectional 1.7 GHz beams across the 40 miles (64 km) between Dover, UK, and Calais, France. The radiated power, produced by a miniature Barkhausen–Kurz tube located at the dish's focus, was one-half watt. A 1933 military microwave link between airports at St. Inglevert, France, and Lympne, UK, a distance of 56 km (35 miles), was followed in 1935 by a 300 MHz telecommunication link, the first commercial microwave relay system.

The development of radar during World War II provided much of the microwave technology which made practical microwave communication links possible, particularly the klystron oscillator and techniques of designing parabolic antennas. Though not commonly known, the British Army used the Wireless Set Number 10 in this role during World War II.

After the war, telephone companies used this technology to build large microwave radio relay networks to carry long-distance telephone calls. During the 1950s a unit of the US telephone carrier, AT&T Long Lines, built a transcontinental system of microwave relay links across the US that grew to carry the majority of US long-distance telephone traffic, as well as television network signals. The main motivation in 1946 for using microwave radio instead of cable was that a large capacity could be installed quickly and at lower cost, although at that time the annual operating costs of microwave radio were expected to be greater than those of cable. There were two main reasons that a large capacity had to be introduced suddenly: pent-up demand for long-distance telephone service after the hiatus of the war years, and the new medium of television, which needed more bandwidth than radio. The prototype, called TDX, was tested in 1946 with a connection between New York City and Murray Hill, the location of Bell Laboratories. The TDX system was set up between New York and Boston in 1947. TDX was upgraded to the TD2 system, which used the Morton tube (the 416B and later the 416C, manufactured by Western Electric) in its transmitters, and later to TD3, which used solid-state electronics.

Notable were the microwave relay links to West Berlin during the Cold War, which, because of the large distance between West Germany and Berlin, had to be built and operated at the edge of technical feasibility. In addition to the telephone network, microwave relay links were also used to distribute TV and radio broadcasts. This included connections from the studios to the broadcasting facilities distributed across the country, as well as links between radio stations, for example for program exchange.

Military microwave relay systems continued to be used into the 1960s, when many of these systems were supplanted by tropospheric scatter or communication satellite systems. When the NATO military arm was formed, much of this existing equipment was transferred to communications groups. The typical communications systems used by NATO during that period consisted of technologies that had been developed for use by the telephone carriers in host countries. One example from the USA is the RCA CW-20A, a 1–2 GHz microwave relay system that used flexible UHF cable rather than the rigid waveguide required by higher-frequency systems, making it ideal for tactical applications. The typical microwave relay installation or portable van had two radio systems (plus backup) connecting two line-of-sight sites. These radios would often carry 24 telephone channels frequency-division multiplexed on the microwave carrier (e.g. Lenkurt 33C FDM). Any channel could instead be designated to carry up to 18 teletype communications. Similar systems from Germany and other member nations were also in use.

Long-distance microwave relay networks were built in many countries until the 1980s, when the technology lost its share of fixed telecommunications to newer technologies such as fiber-optic cable and communication satellites, which offer a lower cost per bit.

During the Cold War, US intelligence agencies, such as the National Security Agency (NSA), were reportedly able to intercept Soviet microwave traffic using satellites such as Rhyolite. Much of the beam of a microwave link passes the receiving antenna and continues toward the horizon and into space. A geosynchronous satellite positioned in the path of the beam can therefore receive the microwave signal.

Around the turn of the century, microwave radio relay systems came into increasing use in portable radio applications. The technology is particularly suited to this application because of its lower operating costs, more efficient infrastructure, and provision of direct hardware access to the portable radio operator.

Microwave link

A microwave link is a communications system that uses a beam of radio waves in the microwave frequency range to transmit video, audio, or data between two locations, which can be from just a few feet or meters to several miles or kilometers apart. Microwave links are commonly used by television broadcasters to transmit programmes across a country, for instance, or from an outside broadcast back to a studio.

Mobile units can be camera-mounted, giving cameras the freedom to move around without trailing cables. These are often seen on Steadicam systems on the touchlines of sports fields.

Properties of microwave links

- Involve line of sight (LOS) communication technology

- Affected greatly by environmental constraints, including rain fade

- Have very limited penetration capabilities through obstacles such as hills, buildings and trees

- Sensitive to high pollen count

- Signals can be degraded during solar proton events

Uses of microwave links

- In communications between satellites and base stations

- As backbone carriers for cellular systems

- In short-range indoor communications

- Linking remote and regional telephone exchanges to larger (main) exchanges without the need for copper/optical fibre lines

- Measuring the intensity of rain between two locations
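Rain measurement with microwave links relies on the power-law relation between rain rate R (mm/h) and specific attenuation γ = k·R^α (dB/km), the form used in ITU-R Recommendation P.838, where k and α depend on frequency and polarization. Given the extra loss a link shows relative to dry weather, the relation can be inverted to estimate a path-averaged rain rate. The sketch below assumes the whole excess loss is due to rain and uses placeholder coefficients k = 0.12 and α = 1.1 that are hypothetical, not taken from the ITU tables; the function name is also hypothetical.

```python
def rain_rate_mm_per_h(measured_loss_db: float, baseline_loss_db: float,
                       path_km: float, k: float, alpha: float) -> float:
    """Invert gamma = k * R**alpha to estimate a path-averaged rain rate R in mm/h.

    measured_loss_db: current total loss on the link
    baseline_loss_db: loss on the same link in dry weather
    k, alpha: frequency- and polarization-dependent coefficients (caller-supplied)
    """
    if path_km <= 0 or k <= 0 or alpha <= 0:
        raise ValueError("path length and coefficients must be positive")
    excess_db = max(measured_loss_db - baseline_loss_db, 0.0)
    if excess_db == 0.0:
        return 0.0
    specific_attenuation = excess_db / path_km  # dB/km attributed to rain
    return (specific_attenuation / k) ** (1.0 / alpha)

if __name__ == "__main__":
    # Hypothetical 5 km link with placeholder coefficients k = 0.12, alpha = 1.1.
    for measured in (118.0, 121.0, 126.0):
        rate = rain_rate_mm_per_h(measured, baseline_loss_db=118.0, path_km=5.0, k=0.12, alpha=1.1)
        print(f"loss {measured:.0f} dB -> about {rate:.1f} mm/h")
```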