Gravitational-wave astronomy is a subfield of astronomy concerned with the detection and study of gravitational waves emitted by astrophysical sources.

Gravitational waves are minute distortions, or ripples, in spacetime caused by the acceleration of massive objects. They are produced by cataclysmic events such as the merger of binary black holes, the coalescence of binary neutron stars, and supernova explosions, as well as by processes in the early universe shortly after the Big Bang. Studying them offers a new way to observe the universe and provides valuable insights into the behavior of matter under extreme conditions. Just as electromagnetic radiation (such as visible light, radio waves, infrared radiation and X-rays) transports energy through propagating fluctuations of the electromagnetic field, gravitational radiation transports energy through fluctuations of the much weaker gravitational field. The existence of gravitational waves was first suggested by Oliver Heaviside in 1893 and later conjectured by Henri Poincaré in 1905 as the gravitational equivalent of electromagnetic waves, before Albert Einstein predicted them in 1916 as a consequence of his theory of general relativity.

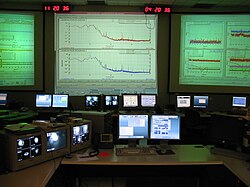

In 1978, Russell Alan Hulse and Joseph Hooton Taylor Jr. provided the first indirect evidence for the existence of gravitational waves by observing two neutron stars orbiting each other, and they won the 1993 Nobel Prize in Physics for their work. In 2015, nearly a century after Einstein's prediction, the first direct observation of gravitational waves, a signal from the merger of two black holes, confirmed the existence of these elusive phenomena and opened a new era in astronomy. Subsequent detections have included binary black hole mergers, neutron star collisions, and other violent cosmic events. Gravitational waves are now detected using laser interferometry, which measures tiny changes in the length of two perpendicular arms caused by passing waves. Observatories such as LIGO (Laser Interferometer Gravitational-wave Observatory), Virgo and KAGRA (Kamioka Gravitational Wave Detector) use this technology to capture the faint signals from distant cosmic events. LIGO co-founders Barry C. Barish, Kip S. Thorne, and Rainer Weiss were awarded the 2017 Nobel Prize in Physics for their ground-breaking contributions to gravitational-wave astronomy.

Potential and challenges

When distant astronomical objects are observed using electromagnetic waves, phenomena such as scattering, absorption, reflection and refraction cause information loss. Various regions in space are only partially penetrable by photons, such as the insides of nebulae, the dense dust clouds at the galactic core, and the regions near black holes. Gravitational-wave astronomy has the potential to be used in parallel with electromagnetic astronomy to study the universe at a better resolution. In an approach known as multi-messenger astronomy, gravitational-wave data is combined with electromagnetic and other observations to get a more complete picture of astrophysical phenomena. Gravitational-wave astronomy helps to understand the early universe, test theories of gravity, and reveal the distribution of dark matter and dark energy. In particular, it can help determine the Hubble constant, which describes the current rate of expansion of the universe. All of these open doors to physics beyond the Standard Model (BSM).

Challenges that remain in the field include noise interference, the lack of ultra-sensitive instruments, and the detection of low-frequency waves. Ground-based detectors face problems with seismic vibrations produced by environmental disturbances, and their arm length is limited by the curvature of the Earth's surface. In the future, the field of gravitational-wave astronomy will try to develop upgraded detectors and next-generation observatories, along with possible space-based detectors such as LISA (Laser Interferometer Space Antenna). LISA will be able to listen to distant sources such as compact supermassive black holes in the galactic core and primordial black holes, as well as low-frequency sources such as binary white dwarf mergers and sources from the early universe.

Gravitational waves

Gravitational waves are waves of the intensity of gravity, generated by accelerated masses such as those of an orbiting binary system, that propagate outward from their source at the speed of light. They were first proposed by Oliver Heaviside in 1893 and later by Henri Poincaré in 1905 as the gravitational equivalent of electromagnetic waves.

Gravitational waves were later predicted in 1916 by Albert Einstein, on the basis of his general theory of relativity, as ripples in spacetime. Einstein himself later doubted their existence for a time. Gravitational waves transport energy as gravitational radiation, a form of radiant energy similar to electromagnetic radiation. Newton's law of universal gravitation, part of classical mechanics, does not provide for their existence, since that law is predicated on the assumption that physical interactions propagate instantaneously (at infinite speed). This is one of the ways in which the methods of Newtonian physics are unable to explain phenomena associated with relativity.

The first indirect evidence for the existence of gravitational waves came from the observed orbital decay of the Hulse–Taylor binary pulsar, discovered in 1974, which matched the decay predicted by general relativity as energy is lost to gravitational radiation. In 1993, Russell A. Hulse and Joseph Hooton Taylor Jr. received the Nobel Prize in Physics for this discovery.
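The decay predicted by general relativity can be illustrated with the standard quadrupole (Peters) formula for the rate of change of the orbital period of an eccentric binary. The sketch below is only an illustration: the masses, period and eccentricity are approximate values assumed here for the Hulse–Taylor system, not figures taken from this article, and the function name is hypothetical.

```python
import math

# G * M_sun / c**3 in seconds (solar mass expressed as a time)
T_SUN_S = 4.925490947e-6


def orbital_period_derivative(m1_msun, m2_msun, period_s, eccentricity):
    """Dimensionless dP/dt from gravitational-wave emission (quadrupole formula)."""
    e2 = eccentricity ** 2
    # Enhancement of the emitted power caused by orbital eccentricity
    enhancement = (1 + (73 / 24) * e2 + (37 / 96) * e2 ** 2) / (1 - e2) ** 3.5
    mass_term = m1_msun * m2_msun / (m1_msun + m2_msun) ** (1 / 3)
    freq_term = (2 * math.pi * T_SUN_S / period_s) ** (5 / 3)
    return -(192 * math.pi / 5) * freq_term * mass_term * enhancement


# Assumed, approximate parameters for the Hulse-Taylor binary pulsar
pdot = orbital_period_derivative(1.44, 1.39, 27907.0, 0.617)
print(f"Predicted dP/dt: {pdot:.2e} s/s")
```

Under these assumptions the orbital period shrinks by a few trillionths of a second per second, which is the scale of decay that long-term pulsar timing can measure.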

Direct observation of gravitational waves was not made until 2015, when a signal generated by the merger of two black holes was received by the LIGO gravitational wave detectors in Livingston, Louisiana, and in Hanford, Washington. The 2017 Nobel Prize in Physics was subsequently awarded to Rainer Weiss, Kip Thorne and Barry Barish for their role in the direct detection of gravitational waves.

In gravitational-wave astronomy, observations of gravitational waves are used to infer data about the sources of gravitational waves. Sources that can be studied this way include binary star systems composed of white dwarfs, neutron stars, and black holes; events such as supernovae; and the formation of the early universe shortly after the Big Bang.

Instruments for different frequencies

Because each detector has its own specifications and sensitivity, collaboration between detectors helps to collect unique and valuable information.

High frequency

There are several ground-based laser interferometers that span several kilometers, including the two Laser Interferometer Gravitational-Wave Observatory (LIGO) detectors in Washington and Louisiana, USA; Virgo, at the European Gravitational Observatory in Italy; GEO600 in Germany; and the Kamioka Gravitational Wave Detector (KAGRA) in Japan. While LIGO, Virgo, and KAGRA have made joint observations to date, GEO600 is currently used for trial and test runs because of the lower sensitivity of its instruments, and it has not participated in joint runs with the others recently.
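To give a sense of the scale these interferometers work at, a passing wave of dimensionless strain h changes an arm of length L by roughly h × L. The following minimal sketch uses the 4 km arm length of the LIGO detectors and a strain of 10⁻²¹, which is an assumed illustrative value rather than a figure from this article.

```python
def arm_length_change(strain, arm_length_m):
    """Approximate change in arm length (metres) for a given dimensionless strain."""
    return strain * arm_length_m


ARM_LENGTH_M = 4_000.0   # LIGO arm length
ASSUMED_STRAIN = 1e-21   # illustrative strain amplitude (assumption)

delta_l = arm_length_change(ASSUMED_STRAIN, ARM_LENGTH_M)
print(f"Arm length change: {delta_l:.1e} m")
```

The result is far smaller than the size of an atomic nucleus, which is why the arms are made kilometers long and isolated from vibration.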

In 2015, the LIGO project was the first to directly observe gravitational waves using laser interferometers. The LIGO detectors observed gravitational waves from the merger of two stellar-mass black holes, matching predictions of general relativity. These observations demonstrated the existence of binary stellar-mass black hole systems, and were the first direct detection of gravitational waves and the first observation of a binary black hole merger. This finding has been characterized as revolutionary for science, because it verified that gravitational-wave astronomy can be used to advance the search for and exploration of dark matter and the Big Bang.

Detection of an event by three or more detectors allows the celestial location to be estimated from the relative delays.
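The role of the relative delays can be sketched with simple geometry: for a plane wave travelling at the speed of light, the arrival-time difference between two detectors depends on the angle between the propagation direction and the line joining them. The example below is a simplified illustration; the 3,000 km baseline is an assumed round figure for the separation of the two LIGO sites, and the function name is hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def arrival_delay_s(baseline_m, angle_deg):
    """Arrival-time difference between two detectors for a plane wave.

    angle_deg is the angle between the wave's propagation direction
    and the straight line joining the two detectors.
    """
    return baseline_m * math.cos(math.radians(angle_deg)) / SPEED_OF_LIGHT_M_S


ASSUMED_BASELINE_M = 3.0e6  # roughly the Hanford-Livingston separation (assumption)

max_delay = arrival_delay_s(ASSUMED_BASELINE_M, 0.0)    # wave travels along the baseline
zero_delay = arrival_delay_s(ASSUMED_BASELINE_M, 90.0)  # wave arrives broadside
print(f"Maximum delay: {max_delay * 1e3:.1f} ms")
```

A single measured delay fixes only this angle, so it confines the source to a ring on the sky. A third detector adds further independent delays, and the intersection of the resulting rings narrows the possible location.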

Low frequency

An alternative means of observation is using pulsar timing arrays (PTAs). There are three consortia, the European Pulsar Timing Array (EPTA), the North American Nanohertz Observatory for Gravitational Waves (NANOGrav), and the Parkes Pulsar Timing Array (PPTA), which co-operate as the International Pulsar Timing Array. These use existing radio telescopes, but since they are sensitive to frequencies in the nanohertz range, many years of observation are needed to detect a signal and detector sensitivity improves gradually. Current bounds are approaching those expected for astrophysical sources.
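The link between nanohertz frequencies and long observing campaigns follows from a rule of thumb: the lowest frequency a timing data set can resolve is roughly the inverse of its total time span. The short sketch below assumes a 15-year data set purely for illustration.

```python
SECONDS_PER_YEAR = 365.25 * 86_400


def lowest_resolvable_frequency_hz(span_years):
    """Rough lowest frequency probed by a data set of the given length (1 / T)."""
    return 1.0 / (span_years * SECONDS_PER_YEAR)


ASSUMED_SPAN_YEARS = 15.0  # illustrative observing span (assumption)
f_min = lowest_resolvable_frequency_hz(ASSUMED_SPAN_YEARS)
print(f"Lowest frequency: {f_min * 1e9:.1f} nHz")
```

With this assumed span the result is a few nanohertz, so each additional year of data extends the array's reach to slightly lower frequencies.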

In June 2023, four PTA collaborations, the three mentioned above and the Chinese Pulsar Timing Array, delivered independent but similar evidence for a stochastic background of nanohertz gravitational waves. Each provided an independent first measurement of the theoretical Hellings-Downs curve, i.e., the quadrupolar correlation between two pulsars as a function of their angular separation in the sky, which is a telltale sign of the gravitational wave origin of the observed background. The sources of this background remain to be identified, although binaries of supermassive black holes are the most likely candidates.
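The Hellings-Downs curve has a closed form for an isotropic, unpolarized background in general relativity. The sketch below evaluates it in one common normalization, in which the correlation tends to 0.5 as the angular separation of two distinct pulsars goes to zero; the function name is chosen here for illustration.

```python
import math


def hellings_downs(theta_rad):
    """Expected timing-residual correlation for two pulsars separated by theta."""
    x = (1.0 - math.cos(theta_rad)) / 2.0
    if x == 0.0:
        return 0.5  # limit of the expression as the separation goes to zero
    return 0.5 - x / 4.0 + 1.5 * x * math.log(x)


for degrees in (0, 30, 60, 82, 120, 180):
    value = hellings_downs(math.radians(degrees))
    print(f"{degrees:3d} deg: {value:+.3f}")
```

The curve starts positive, crosses zero at a separation of roughly 50 degrees, reaches its most negative value near 82 degrees, and rises again to 0.25 for pulsars on opposite sides of the sky.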

Intermediate frequencies

Further in the future, there is the possibility of space-borne detectors. The European Space Agency has selected a gravitational-wave mission as its L3 mission, due to launch in 2034; the current concept is the evolved Laser Interferometer Space Antenna (eLISA). Also in development is the Japanese Deci-hertz Interferometer Gravitational wave Observatory (DECIGO).

Scientific value

Astronomy has traditionally relied on electromagnetic radiation. Observation began in the visible band; as technology advanced, it became possible to observe other parts of the electromagnetic spectrum, from radio to gamma rays. Each new frequency band gave a new perspective on the Universe and heralded new discoveries. During the 20th century, indirect and later direct measurements of high-energy, massive particles provided an additional window into the cosmos. Late in the 20th century, the detection of solar neutrinos founded the field of neutrino astronomy, giving an insight into previously inaccessible phenomena, such as the inner workings of the Sun. The observation of gravitational waves provides a further means of making astrophysical observations.

Russell Hulse and Joseph Taylor were awarded the 1993 Nobel Prize in Physics for showing that the orbital decay of a pair of neutron stars, one of them a pulsar, fits general relativity's predictions of gravitational radiation. Subsequently, many other binary pulsars (including one double pulsar system) have been observed, all fitting gravitational-wave predictions. In 2017, the Nobel Prize in Physics was awarded to Rainer Weiss, Kip Thorne and Barry Barish for their role in the first detection of gravitational waves.

Gravitational waves provide complementary information to that provided by other means. By combining observations of a single event made using different means, it is possible to gain a more complete understanding of the source's properties. This is known as multi-messenger astronomy. Gravitational waves can also be used to observe systems that are invisible (or almost impossible to detect) by any other means. For example, they provide a unique method of measuring the properties of black holes.

Gravitational waves can be emitted by many systems, but, to produce detectable signals, the source must consist of extremely massive objects moving at a significant fraction of the speed of light. The main source is a binary of two compact objects. Example systems include:

- Compact binaries made up of two closely orbiting stellar-mass objects, such as white dwarfs, neutron stars or black holes. Wider binaries, which have lower orbital frequencies, are a source for detectors like LISA. Closer binaries produce a signal for ground-based detectors like LIGO. Ground-based detectors could potentially detect binaries containing an intermediate mass black hole of several hundred solar masses.

- Supermassive black hole binaries, consisting of two black holes with masses of 10⁵–10⁹ solar masses. Supermassive black holes are found at the centre of galaxies. When galaxies merge, it is expected that their central supermassive black holes merge too. These are potentially the loudest gravitational-wave signals. The most massive binaries are a source for PTAs. Less massive binaries (about a million solar masses) are a source for space-borne detectors like LISA.

- Extreme-mass-ratio systems of a stellar-mass compact object orbiting a supermassive black hole. These are sources for detectors like LISA. Systems with highly eccentric orbits produce a burst of gravitational radiation as they pass through the point of closest approach; systems with near-circular orbits, which are expected towards the end of the inspiral, emit continuously within LISA's frequency band. Extreme-mass-ratio inspirals can be observed over many orbits. This makes them excellent probes of the background spacetime geometry, allowing for precision tests of general relativity.
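The connection between orbital separation and detector band in the list above can be sketched with Kepler's third law: a circular binary emits gravitational waves mainly at twice its orbital frequency. The example below is a Newtonian approximation, and the masses and separations are assumed values chosen only to contrast a wide white dwarf binary with a close neutron star binary.

```python
import math

G_SI = 6.674e-11         # gravitational constant, m^3 kg^-1 s^-2
SOLAR_MASS_KG = 1.989e30


def gw_frequency_hz(total_mass_msun, separation_m):
    """Dominant gravitational-wave frequency of a circular binary (Newtonian)."""
    orbital_angular_freq = math.sqrt(
        G_SI * total_mass_msun * SOLAR_MASS_KG / separation_m ** 3
    )
    # Gravitational waves are emitted at twice the orbital frequency
    return orbital_angular_freq / math.pi


# Assumed illustrative systems
wide_binary_hz = gw_frequency_hz(1.2, 1.0e8)   # white dwarfs 100,000 km apart
close_binary_hz = gw_frequency_hz(2.8, 1.0e5)  # neutron stars 100 km apart
print(f"Wide binary:  {wide_binary_hz * 1e3:.1f} mHz")
print(f"Close binary: {close_binary_hz:.0f} Hz")
```

Under these assumptions the wide binary radiates at a few millihertz, in the range targeted by space-borne detectors, while the close binary radiates at a few hundred hertz, where ground-based detectors operate.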

In addition to binaries, there are other potential sources:

- Supernovae generate high-frequency bursts of gravitational waves that could be detected with LIGO or Virgo.

- Rotating neutron stars are a source of continuous high-frequency waves if they possess axial asymmetry.

- Early universe processes, such as inflation or a phase transition.

- Cosmic strings, if they exist, could also emit gravitational radiation. Discovery of these gravitational waves would confirm the existence of cosmic strings.

Gravitational waves interact only weakly with matter. This is what makes them difficult to detect. It also means that they can travel freely through the Universe, and are not absorbed or scattered like electromagnetic radiation. It is therefore possible to see to the center of dense systems, like the cores of supernovae or the Galactic Center. It is also possible to see further back in time than with electromagnetic radiation, as the early universe was opaque to light prior to recombination, but transparent to gravitational waves.

The ability of gravitational waves to move freely through matter also means that gravitational-wave detectors, unlike telescopes, are not pointed to observe a single field of view but observe the entire sky. Detectors are more sensitive in some directions than others, which is one reason why it is beneficial to have a network of detectors. Sky localization is also poor, due to the small number of detectors.

In cosmic inflation

Cosmic inflation, a hypothesized period when the universe rapidly expanded during the first 10⁻³⁶ seconds after the Big Bang, would have given rise to gravitational waves, which would have left a characteristic imprint in the polarization of the CMB radiation.

It is possible to calculate the properties of the primordial gravitational waves from measurements of the patterns in the microwave radiation, and use those calculations to learn about the early universe.

Development

As a young area of research, gravitational-wave astronomy is still in development; however, there is consensus within the astrophysics community that this field will evolve to become an established component of 21st century multi-messenger astronomy.

Gravitational-wave observations complement observations in the electromagnetic spectrum. These waves also promise to yield information in ways not possible via detection and analysis of electromagnetic waves. Electromagnetic waves can be absorbed and re-radiated in ways that make extracting information about the source difficult. Gravitational waves, however, only interact weakly with matter, meaning that they are not scattered or absorbed. This should allow astronomers to view the center of a supernova, stellar nebulae, and even colliding galactic cores in new ways.

Ground-based detectors have yielded new information about the inspiral phase and mergers of binary systems of two stellar mass black holes, and merger of two neutron stars. They could also detect signals from core-collapse supernovae, and from periodic sources such as pulsars with small deformations. If there is truth to speculation about certain kinds of phase transitions or kink bursts from long cosmic strings in the very early universe (at cosmic times around 10⁻²⁵ seconds), these could also be detectable. Space-based detectors like LISA should detect objects such as binaries consisting of two white dwarfs, and AM CVn stars (a white dwarf accreting matter from its binary partner, a low-mass helium star), and also observe the mergers of supermassive black holes and the inspiral of smaller objects (between one and a thousand solar masses) into such black holes. LISA should also be able to listen to the same kind of sources from the early universe as ground-based detectors, but at even lower frequencies and with greatly increased sensitivity.

Detecting emitted gravitational waves is a difficult endeavor. It involves ultra-stable high-quality lasers and detectors calibrated with a sensitivity of at least 2×10⁻²²/√Hz, as shown at the ground-based detector GEO600. It has also been proposed that even from large astronomical events, such as supernova explosions, these waves are likely to degrade to vibrations as small as an atomic diameter.

Pinpointing where gravitational waves come from is also a challenge. However, waves deflected by gravitational lensing, combined with machine learning, could make localization easier and more accurate. Just as the light from the SN Refsdal supernova was detected a second time almost a year after it was first discovered, because gravitational lensing sent some of the light on a different path through the universe, the same approach could be used for gravitational waves. While still at an early stage, a technique similar to the triangulation that cell phones use to determine their location relative to GPS satellites will help astronomers track down the origin of the waves.