One of the major ambitions of my life is to promote science and critical thinking, which I do under the related banners of scientific skepticism and science-based medicine. This is a huge endeavor, with many layers of complexity. For that reason it is tempting to keep one’s head down, focus on small manageable problems and goals, and not worry too much about the big picture. Worrying about the big picture causes stress and anxiety.

I have been doing this too long to keep my head down, however. I have to worry about the big picture: are we making progress, are we doing it right, how should we alter our strategy, is there anything we are missing?

The answers to these questions are different for each topic we face. While we are involved in one large meta-goal, it is composed of hundreds of sub-goals, each of which may pose its own challenges. Creationism, for example, is one specific topic that we confront within our broader mission of promoting science.

Over my life the defenders of science have won every major battle against creationism, in the form of major court battles, many at the Supreme Court level. The most recent was Kitzmiller vs Dover, which effectively killed Intelligent Design as a strategy for pushing creationism into public schools. The courts are a great venue for the side of science, because of the separation clause in the Constitution and the way it has been interpreted by the courts. Creationism is a religious belief, pure and simple, and it has no place in a science classroom. Evolution, meanwhile, is an established scientific theory with overwhelming support in the scientific community. It is the exact kind of consensus science that should be taught in the classroom. When we have this debate in the courtroom, where there are rules of evidence and logic, it’s no contest. Logic, facts, and the law are clearly on the side of evolution.

Despite the consistent legal defeat of creationism, over the last 30 years Gallup’s poll of American public belief in creationism has not changed. In 1982 44% of Americans endorsed the statement: “God created humans in their present form.” In 2014 the figure was 42%; in between the figure fluctuated from 40-47% without any trend.

There has been a trend in the number of people willing to endorse the statement that humans evolved without any involvement from God, with an increase from 9 to 19%. This likely reflects a general trend, especially in younger people, away from religious affiliation – but apparently not penetrating the fundamentalist Christian segments of society.

Meanwhile creationism has become, if anything, more of an issue for the Republican party. It seems that any Republican primary candidate must either endorse creationism or at least pander with evasive answers such as, “I am not a scientist” or “teach the controversy” or something similar.

Further, in many parts of the country with a strong fundamentalist Christian population, they are simply ignoring the law with impunity and teaching outright creationism, or at least the made-up “weaknesses” of evolutionary theory.

They are receiving cover from pandering or believing politicians. This is the latest creationist strategy – use “academic freedom” laws to provide cover for teachers who want to introduce creationist propaganda into their science classrooms.

Louisiana is the model for this. Zack Kopplin was a high school student when Bobby Jindal signed the law that allows teachers to introduce creationist material into Louisiana classrooms. He has since made it his mission to oppose such laws, and he writes about his frustrations in trying to make any progress. Creationists are simply too politically powerful in the Bible Belt.

This brings me back to my core question – how are we doing (at least with respect to the creationism issue)? The battles we have fought needed to be fought and it is definitely a good thing that science and reason won. There are now powerful legal precedents defending the teaching of evolution and opposing the teaching of creationism in public schools, and I don’t mean to diminish the meaning of these victories.

But we have not penetrated in the slightest the creationist culture and political power, which remains solid at around 42% of the US population. It seems to me that the problem is self-perpetuating. Students raised in schools that teach creationism or watered-down evolution and live in families and go to churches that preach creationism are very likely to grow up to be creationists. Some of them will be teachers and politicians.

From one perspective we might say that we held the line defensively against a creationist offense, but that is all – we held the line. Perhaps we need to now figure out a way to go on offense, rather than just waiting to defend against the next creationist offense. The creationists have think tanks that spend their time thinking about the next strategy. At best we have people and organizations (like the excellent National Center for Science Education) who spend their time trying to anticipate the next strategy.

The NCSE’s own description of their mission is, “Defending the teaching of evolution and climate science.” They are in a defensive posture. Again, to be clear, they do excellent and much needed work and I have nothing but praise for them. But looking at the big picture, perhaps we need to add some offensive strategies to our defensive strategies.

I don’t know exactly what form those offensive strategies would take. This would be a great conversation for skeptics to have, however. Rather than just fighting against creationist laws, for example, perhaps we could craft a model pro-science law that will make it more difficult for science teachers to hide their teaching of creationism.

Perhaps we need a federal law to trump any pro-creationist state laws. It’s worth thinking about.

I also think we need a cultural change within the fundamentalist Christian community. This will be a tougher nut to crack. We should, however, be having a conversation with them about how Christian faith can be compatible with science. Faith does not have to directly conflict with the current findings of science. Modeling ways in which Christians can accommodate their faith to science may be helpful. And to be clear – I am not saying that science should accommodate itself to faith; that is exactly what we are fighting against.

Conclusion

As the skeptical movement grows and evolves, I would like to see it mature in the direction where high-level strategizing on major issues can occur. It is still very much a grassroots movement without any real organization. At best there is networking going on, and perhaps that is enough. At the very least we should parlay those networks into goal-oriented strategies on specific issues.

Creationism is one such issue that needs some high-level think tank attention.

Reading further into the articles where the case is laid out, a few caveats appear, but the chain of events seems strong.

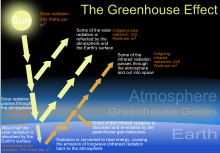

The mechanism? An extreme drought in the Fertile Crescent region—one that a new study finds was made worse by human greenhouse gas emissions—added a spark to the tinderbox of tensions that had been amassing in Syria for a number of years under the Assad regime (including poor water management policies).

It is not until you dig pretty deep into the technical scientific literature that you find out that the anthropogenic climate change impact on drought conditions in the Fertile Crescent is extremely minimal and tenuous, so much so that it is debatable whether it is detectable at all.

This is not to say that a strong and prolonged drought didn’t play some role in Syria’s pre-war unrest (perhaps it did, perhaps it didn’t; that is a debate we leave up to folks much more qualified than we are on the topic), but that the human-influenced climate change impact on the drought conditions was almost certainly too small to have mattered.

In other words, the violence would almost certainly have occurred anyway.

Several tidbits buried in the scientific literature are relevant to assessing the human impact on the meteorology behind recent drought conditions there.

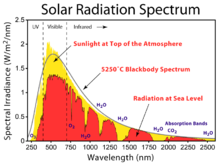

It is true that climate models do project a general drying trend in the Mediterranean region (including the Fertile Crescent region in the Eastern Mediterranean) as the climate warms under increasing greenhouse gas concentrations. There are two components to the projected drying. The first is a northward expansion of the subtropical high pressure system that typically dominates the southern portion of the region. This poleward expansion of the high pressure system would act to shunt wintertime storm systems northward, increasing precipitation over Europe but decreasing precipitation across the Mediterranean. The second component is an increase in the temperature which would lead to increased evaporation and enhanced drying.

Our analysis will show that the connection between this drought and human-induced climate change is tenuous at best, and tendentious at worst.

An analysis in the new headline-generating paper by Colin Kelley and colleagues that just appeared in the Proceedings of the National Academy of Sciences shows the observed trend in the sea level pressure across the eastern Mediterranean as well as the trend projected to have taken place there by a collection of climate models. We reproduce this graphic as Figure 1. If the subtropical high is expanding northward over the region, the sea level pressure ought to be on the rise. Indeed, the climate models (bottom panel) project a rise in the surface pressure over the 20th century (blue portion of the curve) and predict even more of a rise into the future (red portion of the curve).

However, the observations (top panel, green line) do not corroborate the model hypothesis under the normative rules of science. Ignoring the confusing horizontal lines included by the authors, several things are obvious. First, the level of natural variability is such that no overall trend is readily apparent.

[Note: The authors identify an upwards trend in the observations and describe it as being “marginally significant (P < 0.14)”. In nobody’s book (except, we guess, these authors) is a P-value of 0.14 “marginally significant.” It is widely accepted in the scientific literature that P-values must be less than 0.05 to be considered statistically significant (i.e., there is a less than 1 in 20 chance that chance alone would produce a similar result). That’s normative science. We’ve seen some rather rare cases where authors attached the term “marginally significant” to P-values up to 0.10, but 0.14 (about a 1 in 7 chance that chance alone would produce a similar result) is taking things a bit far, hence our previous usage of the word “tendentious.”]
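To make the thresholds in the note above concrete, here is a minimal Python sketch. The function names `one_in_n` and `classify` and the sample P-values 0.03 and 0.08 are our own illustrative choices, not anything taken from Kelley et al.; only the cutoffs 0.05 and 0.10 and the reported value 0.14 come from the discussion above.

```python
def one_in_n(p_value: float) -> float:
    """Express a P-value as 'about 1 in N' odds that chance alone
    would produce a result at least this extreme."""
    if not 0.0 < p_value <= 1.0:
        raise ValueError("a P-value must lie in (0, 1]")
    return 1.0 / p_value


def classify(p_value: float, strict: float = 0.05, lenient: float = 0.10) -> str:
    """Label a P-value using the conventional 0.05 cutoff and the
    occasional, more lenient 0.10 'marginal' usage described in the note."""
    if p_value < strict:
        return "significant"
    if p_value < lenient:
        return "marginal (lenient usage)"
    return "not significant"


# 0.03 and 0.08 are hypothetical values for contrast; 0.14 is the reported one.
for p in (0.03, 0.08, 0.14):
    print(f"P = {p:.2f}: about 1 in {one_in_n(p):.1f}, {classify(p)}")
```

Under these assumed cutoffs, the reported value of 0.14 falls outside even the lenient band.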

Whether or not there is an identifiable overall upwards trend, the barometric pressure in the region during the last decade of the record (when the Syrian drought took place) is not at all unusual when compared to other periods in the region’s pressure history—including periods that took place long before large-scale greenhouse gas emissions were taking place.

Consequently, there is little in the pressure record to lend credence to the notion that human-induced climate change played a significant role in the region’s recent drought.

Figure 1. Observed (top) and modeled (bottom) sea level pressure for the Eastern Mediterranean region (figure adapted from Kelley et al., 2015).

Another clue that the human impact on the recent drought was minimal (at best) comes from a 2012 paper in the Journal of Climate by Martin Hoerling and colleagues. In that paper, Hoerling et al. concluded that about half of the trend towards late-20th century dry conditions in the Mediterranean region was potentially attributable to human emissions of greenhouse gases and aerosols. They found that climate models run with increasing concentrations of greenhouse gases and aerosols produce drying across the Mediterranean region in general. However, the subregional patterns of the drying are sensitive to the patterns of sea surface temperature (SST) variability and change. Alas, the patterns of SST changes are quite different in reality than they were projected to be by the climate models. Hoerling et al. describe the differences this way: “In general, the observed SST differences have stronger meridional [North-South] contrast between the tropics and NH extratropics and also a stronger zonal [East-West] contrast between the Indian Ocean and the tropical Pacific Ocean.”

Figure 2 shows visually what Hoerling was describing—the observed SST change (top) along with the model projected changes (bottom) for the period 1971-2010 minus 1902-1970. Note the complexity that accompanies reality.

Figure 2. Cold season (November–April) sea surface temperature departures (°C) for the period 1971–2010 minus 1902–70: (top) observed and (bottom) mean from climate model projections (from Hoerling et al., 2012).

Hoerling et al. show that in the Fertile Crescent region, the drying produced by climate models is particularly enhanced (by some 2-3 times) if the observed patterns of sea surface temperatures are incorporated into the models rather than patterns that would otherwise be projected by the models (i.e., the top portion of Figure 2 is used to drive the model output rather than the bottom portion).

Let’s be clear here. The models were unable to accurately reproduce the patterns of SST that have been observed as greenhouse gas concentrations increased. So the observed data were substituted for the predicted value, and then that was used to generate forecasts of changed rainfall. We can’t emphasize this enough: what was not supposed to happen from climate change was forced into the models that then synthesized rainfall.

Figure 3 shows these results and Figure 4 shows what has been observed. Note that even using the prescribed SST, the model-predicted changes in Figure 3 (lower panel) are only about half as large as the changes observed in the region around Syria (Figure 4, note scale difference). This leaves the other half of the moisture decline largely unexplained. From Figure 3 (top), you can also see that only about 10mm out of more than 60mm of observed precipitation decline around Syria during the cold season is “consistent with” human-caused climate change as predicted by climate models left to their own devices.

Nor does “consistent with” mean “caused by.”
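The share of the observed decline that the unforced models account for can be checked with a few lines of Python. This is only a sketch: the names are ours, and because the text says “more than 60mm”, plugging in 60 mm for the observed decline makes the result an upper bound on the share.

```python
def share_explained(modeled_mm: float, observed_mm: float) -> float:
    """Fraction of an observed precipitation decline matched by a modeled decline."""
    if observed_mm <= 0:
        raise ValueError("observed decline must be positive")
    return modeled_mm / observed_mm


# Values quoted in the text; 60 mm is a lower bound on the observed decline,
# so the computed share is an upper bound.
MODEL_ONLY_DECLINE_MM = 10.0
OBSERVED_DECLINE_MM = 60.0

share = share_explained(MODEL_ONLY_DECLINE_MM, OBSERVED_DECLINE_MM)
print(f"Models alone match at most about {share:.0%} of the observed decline")
```

Any observed decline larger than 60 mm would push that share lower still.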

Figure 3. Simulated change in cold season precipitation (mm) over the Mediterranean region based on the ensemble average (top) of 22 IPCC models run with observed emissions of greenhouse gases and aerosols and (bottom) of 40 models run with observed emissions of greenhouse gases and aerosols with prescribed sea surface temperatures. The difference plots in the panels are for the period 1971–2010 minus 1902–70 (source: Hoerling et al., 2012).

For comparative purposes, according to the University of East Anglia climate history, the average cold-season rainfall in Syria is 261mm (10.28 inches). Climate models, when left to their own devices, predict a decline averaging about 10mm, or 3.8 per cent of the total. When “prescribed” (some would use the word “fudged”) sea surface temperatures are substituted for their wrong numbers, the decline in rainfall goes up to a whopping 24mm, or 9.2 per cent of the total. For additional comparative purposes, population has roughly tripled in the last three decades.
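For readers who want to check the arithmetic, the following Python sketch recomputes the percentages and the unit conversion from the figures quoted above. The constant and function names are our own; the inputs (261 mm, 10 mm, 24 mm) are the values given in the text.

```python
MM_PER_INCH = 25.4  # exact by definition of the inch

BASELINE_COLD_SEASON_MM = 261.0  # average cold-season rainfall quoted above

# Modeled declines quoted above, in millimetres.
DECLINES_MM = {
    "models alone": 10.0,
    "models with prescribed SST": 24.0,
}


def percent_of_baseline(decline_mm: float, baseline_mm: float = BASELINE_COLD_SEASON_MM) -> float:
    """Express a rainfall decline as a percentage of the seasonal baseline."""
    return 100.0 * decline_mm / baseline_mm


def mm_to_inches(mm: float) -> float:
    """Convert millimetres to inches."""
    return mm / MM_PER_INCH


print(f"Baseline: {BASELINE_COLD_SEASON_MM:.0f} mm = {mm_to_inches(BASELINE_COLD_SEASON_MM):.2f} in")
for label, decline in DECLINES_MM.items():
    print(f"{label}: {decline:.0f} mm = {percent_of_baseline(decline):.1f}% of the seasonal total")
```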

Figure 4. Observed change in cold season precipitation for the period 1971–2010 minus 1902–70. Anomalies (mm) are relative to the 1902–2010 period (source: Hoerling et al., 2012).

So what you are left with, after carefully comparing the patterns of observed changes in the meteorology and climatology of Syria and the Fertile Crescent region to those produced by climate models, is that the lion’s share of the observed changes is left unexplained by the models run with increasing greenhouse gases. Lacking a better explanation, these unexplained changes get chalked up to “natural variability,” and natural variability dominates the observed climate history.

You wouldn’t come to this conclusion from the cursory treatment of climate that is afforded in the mainstream press. It requires an examination of scientific literature and a good background and understanding of the rather technical research being discussed. Like all issues related to climate change, the devil is in the details, and, in the haste to produce attention-grabbing headlines, the details often get glossed over or dismissed.

Our bottom line: the identifiable influence of human-caused climate change on recent drought conditions in the Fertile Crescent was almost certainly not the so-called straw that broke the camel’s back and led to the outbreak of conflict in Syria. The pre-existing (political) climate in the region was plenty hot enough for a conflict to ignite, perhaps partly fuelled by recent drought conditions. Those conditions are part and parcel of the region’s climate, and their intensity and frequency remain dominated by natural variability, even in this era of increasing greenhouse gas emissions from human activities.

References:

Hoerling, M., et al., 2012. On the increased frequency of Mediterranean drought. Journal of Climate, 25, 2146-2161.

Kelley, C. P., et al., 2015. Climate change in the Fertile Crescent and implications of the recent Syrian drought. Proceedings of the National Academy of Sciences, doi:10.1073/pnas.1421533112

The Current Wisdom is a series of monthly articles in which Patrick J. Michaels, director of the Center for the Study of Science, reviews interesting items on global warming in the scientific literature that may not have received the media attention that they deserved, or have been misinterpreted in the popular press.