Accumulated cyclone energy (ACE) is a measure used by various agencies, including the National Oceanic and Atmospheric Administration (NOAA) and the India Meteorological Department, to express the activity of individual tropical cyclones and entire tropical cyclone seasons. It uses an approximation of the wind energy used by a tropical system over its lifetime and is calculated every six hours. The ACE of a season is the sum of the ACEs for each storm and takes into account the number, strength, and duration of all the tropical storms in the season. The highest ACE calculated for a single storm is 82, for Hurricane/Typhoon Ioke in 2006.

Calculation

The ACE of a season is calculated by summing the squares of the estimated maximum sustained velocity of every active tropical storm (wind speed 35 knots [65 km/h, 40 mph] or higher), at six-hour intervals. Since the calculation is sensitive to the starting point of the six-hour intervals, the convention is to use 00:00, 06:00, 12:00, and 18:00 UTC. If any storms of a season happen to cross years, the storm's ACE counts for the previous year. The numbers are usually divided by 10,000 to make them more manageable. One unit of ACE equals 10⁴ kn², and for use as an index the unit is assumed. Thus:

$$\text{ACE} = 10^{-4} \sum v_{\max}^2$$

where $v_{\max}$ is the estimated sustained wind speed in knots.
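The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not an official implementation: the function names `storm_ace` and `season_ace` and the sample wind values are hypothetical, and the input is assumed to already be sampled at the conventional 00:00, 06:00, 12:00, and 18:00 UTC times.

```python
from typing import Iterable

# Threshold from the text: only readings of 35 knots or higher count.
TROPICAL_STORM_KT = 35


def storm_ace(six_hourly_vmax_kt: Iterable[float]) -> float:
    """ACE of one storm from its six-hourly maximum sustained winds (knots)."""
    total = sum(v * v for v in six_hourly_vmax_kt if v >= TROPICAL_STORM_KT)
    return total / 1e4  # divide by 10,000 to express the result in ACE units


def season_ace(storms: Iterable[Iterable[float]]) -> float:
    """ACE of a season: the sum of the ACE of each storm."""
    return sum(storm_ace(storm) for storm in storms)


# Hypothetical storm: the 30-knot readings fall below the threshold and are skipped.
example_storm = [30, 35, 50, 65, 80, 60, 40, 30]
print(storm_ace(example_storm))  # prints 1.955
```

Because each qualifying six-hour reading adds a term to the sum, a long-lived storm of moderate strength can end up with a higher value than a short-lived intense one.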

Kinetic energy is proportional to the square of velocity, and by adding together the energy per some interval of time, the accumulated energy is found. As the duration of a storm increases, more values are summed and the ACE also increases, such that longer-duration storms may accumulate a larger ACE than more-powerful storms of lesser duration. Although ACE is a value proportional to the energy of the system, it is not a direct calculation of energy (the mass of the moved air and therefore the size of the storm would show up in a real energy calculation).

A related quantity is hurricane destruction potential (HDP), which is ACE but only calculated for the time during which the system is a hurricane.
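As a rough sketch of how HDP differs from ACE, the same sum can be restricted to readings at hurricane strength. The function name `hdp` and the sample data are hypothetical, and the 64-knot hurricane threshold is an assumption that does not come from this article.

```python
from typing import Iterable

# Assumption: hurricane strength is taken as 64 knots or higher;
# this threshold is not stated in the article.
HURRICANE_KT = 64


def hdp(six_hourly_vmax_kt: Iterable[float]) -> float:
    """Sum of squared six-hourly winds (knots) at hurricane strength, / 10,000."""
    total = sum(v * v for v in six_hourly_vmax_kt if v >= HURRICANE_KT)
    return total / 1e4


# Hypothetical storm: only the 65- and 80-knot readings contribute.
example_storm = [30, 35, 50, 65, 80, 60, 40, 30]
print(hdp(example_storm))  # prints 1.0625
```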

Atlantic basin ACE

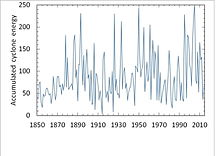

Atlantic basin cyclone intensity by Accumulated cyclone energy, timeseries 1850-2014

A season's ACE is used by NOAA and others to categorize the hurricane season into three groups by its activity. Measured over the period 1951–2000 for the Atlantic basin, the median annual index was 87.5 and the mean annual index was 93.2. The NOAA categorization system divides seasons into:

- Above-normal season: An ACE value above 111 (120% of the 1981–2010 median), provided at least two of the following three parameters are also exceeded: number of tropical storms: 12, hurricanes: 6, and major hurricanes: 2.

- Near-normal season: neither above-normal nor below-normal

- Below-normal season: An ACE value below 66 (71.4% of the 1981–2010 median), or none of the following three parameters are exceeded: number of tropical storms: 9, hurricanes: 4, and major hurricanes: 1.
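The three categories above can be expressed as a small decision function. This is a sketch under stated assumptions rather than NOAA's own procedure: the function name `classify_atlantic_season` and the example inputs are hypothetical, "exceeded" is read as strictly greater than, and the above-normal test is checked first.

```python
def classify_atlantic_season(
    ace: float, tropical_storms: int, hurricanes: int, major_hurricanes: int
) -> str:
    """Classify a season using the thresholds listed in the text."""
    # Number of the three count parameters that exceed each set of limits.
    above_exceeded = sum(
        [tropical_storms > 12, hurricanes > 6, major_hurricanes > 2]
    )
    below_exceeded = sum(
        [tropical_storms > 9, hurricanes > 4, major_hurricanes > 1]
    )

    # Above-normal: ACE above 111 and at least two of three parameters exceeded.
    if ace > 111 and above_exceeded >= 2:
        return "above-normal"
    # Below-normal: ACE below 66, or none of the three parameters exceeded.
    if ace < 66 or below_exceeded == 0:
        return "below-normal"
    return "near-normal"


# Hypothetical seasons, for illustration only.
print(classify_atlantic_season(130, 15, 8, 2))  # prints above-normal
print(classify_atlantic_season(80, 10, 4, 1))   # prints near-normal
print(classify_atlantic_season(50, 15, 8, 4))   # prints below-normal
```

Note that a high ACE alone is not sufficient for an above-normal rating under these rules; the storm counts must also exceed at least two of the three limits.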

According to the NOAA categorization system for the Atlantic, the most recent above-normal season is the 2018 season, the most recent near-normal season is the 2014 season, and the most recent below-normal season is the 2015 season.

Hyperactivity

The term hyperactive is used by Goldenberg et al. (2001) based on a different weighting algorithm, which places more weight on major hurricanes, but typically equating to an ACE of about 153 (175% of the 1951–2000 median) or more.
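As a quick check of that figure, taking the 1951–2000 median annual index of 87.5 quoted earlier in this article:

$$1.75 \times 87.5 = 153.125 \approx 153$$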

Individual storms in the Atlantic

The highest ever ACE estimated for a single storm in the Atlantic is 73.6, for the San Ciriaco hurricane in 1899. This single storm had an ACE higher than many whole Atlantic storm seasons. Other Atlantic storms with high ACEs include Hurricane Ivan in 2004, with an ACE of 70.4, Hurricane Irma in 2017, with an ACE of 64.9, the Great Charleston Hurricane in 1893, with an ACE of 63.5, Hurricane Isabel in 2003, with an ACE of 63.3, and the 1932 Cuba hurricane, with an ACE of 59.8.

Since 1950, the highest ACE of a tropical storm was that of Tropical Storm Laura in 1971, which attained an ACE of 8.6. The highest ACE of a Category 1 hurricane was that of Hurricane Nadine in 2012, which attained an ACE of 26.3. The lowest ACE of a tropical storm was that of 2000's Tropical Storm Chris and 2017's Tropical Storm Philippe, both of which were tropical storms for only six hours and had an ACE of just 0.1. The lowest ACE of any hurricane was that of 2005's Hurricane Cindy, which was a hurricane for only six hours, and 2007's Hurricane Lorenzo, which was a hurricane for twelve hours; both had an ACE of just 1.5. The lowest ACE of a major hurricane (Category 3 or higher) was that of Hurricane Gerda in 1969, with an ACE of 5.3. The only years since 1950 to feature two storms with an ACE index of over 40 points have been 1966, 2003, and 2004, and the only year to feature three such storms is 2017.

The following table shows those storms in the Atlantic basin from 1950–2019 that have attained over 40 points of ACE.

| Storm | Year | ACE | Duration |
|---|---|---|---|
| Hurricane Ivan | 2004 | 70.4 | 23 days |
| Hurricane Irma | 2017 | 64.9 | 13 days |
| Hurricane Isabel | 2003 | 63.3 | 14 days |
| Hurricane Donna | 1960 | 57.6 | 16 days |
| Hurricane Carrie | 1957 | 55.8 | 21 days |
| Hurricane Inez | 1966 | 54.6 | 21 days |
| Hurricane Luis | 1995 | 53.5 | 16 days |
| Hurricane Allen | 1980 | 52.3 | 12 days |
| Hurricane Esther | 1961 | 52.2 | 18 days |
| Hurricane Matthew | 2016 | 50.9 | 12 days |
| Hurricane Flora | 1963 | 49.4 | 16 days |
| Hurricane Edouard | 1996 | 49.3 | 14 days |
| Hurricane Beulah | 1967 | 47.9 | 17 days |
| Hurricane Dorian | 2019 | 47.8 | 15 days |
| Hurricane Dog | 1950 | 47.5 | 13 days |
| Hurricane Betsy | 1965 | 47.0 | 18 days |
| Hurricane Frances | 2004 | 45.9 | 15 days |
| Hurricane Faith | 1966 | 45.4 | 17 days |
| Hurricane Maria | 2017 | 44.8 | 14 days |
| Hurricane Ginger | 1971 | 44.2 | 28 days |
| Hurricane David | 1979 | 44.0 | 12 days |
| Hurricane Jose | 2017 | 43.3 | 17 days |
| Hurricane Fabian | 2003 | 43.2 | 14 days |
| Hurricane Hugo | 1989 | 42.7 | 12 days |
| Hurricane Gert | 1999 | 42.3 | 12 days |
| Hurricane Igor | 2010 | 41.9 | 14 days |

Atlantic hurricane seasons, 1851–2019

Due to the scarcity and imprecision of early offshore measurements, ACE data for the Atlantic hurricane season is less reliable prior to the modern satellite era, but NOAA has analyzed the best available information dating back to 1851. The 1933 Atlantic hurricane season has the highest ACE on record, with a total of 259. For the current season or the season that just ended, the ACE is preliminary, based on National Hurricane Center bulletins, and may later be revised.

Eastern Pacific ACE

Individual storms in the Eastern Pacific (east of 180°W)

The highest ever ACE estimated for a single storm in the Eastern or Central Pacific, while located east of the International Date Line, is 62.8, for Hurricane Fico of 1978. Other Eastern Pacific storms with high ACEs include Hurricane John in 1994, with an ACE of 54.0, Hurricane Kevin in 1991, with an ACE of 52.1, and Hurricane Hector of 2018, with an ACE of 50.5.

The following table shows those storms in the Eastern and Central Pacific basins from 1971–2018 that have attained over 30 points of ACE.

| Storm | Year | ACE | Duration |
|---|---|---|---|
| Hurricane Fico | 1978 | 62.8 | 20 days |
| Hurricane John | 1994 | 54.0 | 19 days |
| Hurricane Kevin | 1991 | 52.1 | 17 days |
| Hurricane Hector | 2018 | 50.5 | 13 days |
| Hurricane Tina | 1992 | 47.7 | 22 days |
| Hurricane Trudy | 1990 | 45.8 | 16 days |
| Hurricane Lane | 2018 | 44.2 | 13 days |
| Hurricane Dora | 1999 | 41.4 | 13 days |
| Hurricane Jimena | 2015 | 40.0 | 15 days |
| Hurricane Guillermo | 1997 | 40.0 | 16 days |
| Hurricane Norbert | 1984 | 39.6 | 12 days |
| Hurricane Norman | 2018 | 36.6 | 12 days |
| Hurricane Celeste | 1972 | 36.3 | 16 days |
| Hurricane Sergio | 2018 | 35.5 | 13 days |
| Hurricane Lester | 2016 | 35.4 | 14 days |
| Hurricane Olaf | 2015 | 34.6 | 12 days |
| Hurricane Jimena | 1991 | 34.5 | 12 days |
| Hurricane Doreen | 1973 | 34.3 | 16 days |
| Hurricane Ioke | 2006 | 34.2 | 7 days |
| Hurricane Marie | 1990 | 33.1 | 14 days |
| Hurricane Orlene | 1992 | 32.4 | 12 days |
| Hurricane Greg | 1993 | 32.3 | 13 days |
| Hurricane Hilary | 2011 | 31.2 | 9 days |

Eastern Pacific hurricane seasons, 1971–2019

Accumulated cyclone energy is also used in the eastern and central Pacific Ocean. Data on ACE is considered reliable starting with the 1971 season. The season with the highest ACE since 1971 is the 2018 season. The 1977 season has the lowest ACE. The most recent above-normal season is the 2018 season, the most recent near-normal season is the 2017 season, and the most recent below-normal season is the 2013 season. The 35-year median for 1971–2005 is 115 × 10⁴ kn² (100 in the EPAC zone east of 140°W, 13 in the CPAC zone); the mean is 130 (112 + 18).