Metallurgy is a domain of materials science and engineering that studies the physical and chemical behavior of metallic elements, their intermetallic compounds, and their mixtures, which are called alloys. Metallurgy encompasses both the science and the technology of metals; that is, the way in which science is applied to the production of metals, and the engineering of metal components used in products for both consumers and manufacturers. Metallurgy is distinct from the craft of metalworking. Metalworking relies on metallurgy in much the same way that medicine relies on medical science for technical advancement. A specialist practitioner of metallurgy is known as a metallurgist.

The science of metallurgy is subdivided into two broad categories: chemical metallurgy and physical metallurgy. Chemical metallurgy is chiefly concerned with the reduction and oxidation of metals, and the chemical performance of metals. Subjects of study in chemical metallurgy include mineral processing, the extraction of metals, thermodynamics, electrochemistry, and chemical degradation (corrosion). In contrast, physical metallurgy focuses on the mechanical properties of metals, the physical properties of metals, and the physical performance of metals. Topics studied in physical metallurgy include crystallography, material characterization, mechanical metallurgy, phase transformations, and failure mechanisms.

Historically, metallurgy has predominantly focused on the production of metals. Metal production begins with the processing of ores to extract the metal, and includes the mixing of metals to make alloys. Metal alloys are often a blend of at least two different metallic elements. However, non-metallic elements are often added to alloys in order to achieve properties suitable for an application. The study of metal production is subdivided into ferrous metallurgy (also known as black metallurgy) and non-ferrous metallurgy (also known as colored metallurgy). Ferrous metallurgy involves processes and alloys based on iron, while non-ferrous metallurgy involves processes and alloys based on other metals. The production of ferrous metals accounts for 95% of world metal production.

Modern metallurgists work in both emerging and traditional areas as part of an interdisciplinary team alongside materials scientists and other engineers. Some traditional areas include mineral processing, metal production, heat treatment, failure analysis, and the joining of metals (including welding, brazing, and soldering). Emerging areas for metallurgists include nanotechnology, superconductors, composites, biomedical materials, electronic materials (semiconductors) and surface engineering.

Etymology and pronunciation

Metallurgy derives from the Ancient Greek μεταλλουργός, metallourgós, "worker in metal", from μέταλλον, métallon, "mine, metal" + ἔργον, érgon, "work". The word was originally an alchemist's term for the extraction of metals from minerals, the ending -urgy signifying a process, especially manufacturing; it was discussed in this sense in the 1797 Encyclopædia Britannica. In the late 19th century, it was extended to the more general scientific study of metals, alloys, and related processes. In English, the /mɛˈtælərdʒi/ pronunciation is the more common one in the UK and Commonwealth. The /ˈmɛtəlɜːrdʒi/ pronunciation is the more common one in the US and is the first-listed variant in various American dictionaries (e.g., Merriam-Webster Collegiate, American Heritage).

History

The earliest recorded metal employed by humans appears to be gold, which can be found free or "native". Small amounts of natural gold have been found in Spanish caves dating to the late Paleolithic period, 40,000 BC. Silver, copper, tin and meteoric iron can also be found in native form, allowing a limited amount of metalworking in early cultures. Egyptian weapons made from meteoric iron in about 3,000 BC were highly prized as "daggers from heaven". Certain metals, notably tin, lead, and, at a higher temperature, copper, can be recovered from their ores by simply heating the rocks in a fire or blast furnace, a process known as smelting. The first evidence of this extractive metallurgy, dating from the 5th and 6th millennia BC, has been found at archaeological sites in Majdanpek, Jarmovac near Priboj and Pločnik, in present-day Serbia. To date, the earliest evidence of copper smelting is found at the Belovode site near Pločnik. This site produced a copper axe from 5,500 BC, belonging to the Vinča culture.

The earliest use of lead is documented from the Late Neolithic settlement of Yarim Tepe in Iraq:

"The earliest lead (Pb) finds in the ancient Near East are a 6th millennium BC bangle from Yarim Tepe in northern Iraq and a slightly later conical lead piece from Halaf period Arpachiyah, near Mosul. As native lead is extremely rare, such artifacts raise the possibility that lead smelting may have begun even before copper smelting."

Copper smelting is also documented at Yarim Tepe at about the same time period (soon after 6,000 BC), although the use of lead seems to precede copper smelting. Early metallurgy is also documented at the nearby site of Tell Maghzaliyah, which seems to be dated even earlier and completely lacks pottery. The Balkans were the site of major Neolithic cultures, including Butmir, Vinča, Varna, Karanovo, and Hamangia.

The Varna Necropolis, Bulgaria, is a burial site in the western industrial zone of Varna (approximately 4 km from the city centre), internationally considered one of the key archaeological sites in world prehistory. The oldest gold treasure in the world, dating from 4,600 BC to 4,200 BC, was discovered at the site. The gold piece dating from 4,500 BC, recently found in Durankulak, near Varna, is another important example. Other signs of early metals are found from the third millennium BC in places like Palmela (Portugal), Los Millares (Spain), and Stonehenge (United Kingdom). However, the ultimate beginnings cannot be clearly ascertained, and new discoveries continue to be made.

In the Near East, about 3,500 BC, it was discovered that by combining copper and tin, a superior metal could be made, an alloy called bronze. This represented a major technological shift known as the Bronze Age.

The extraction of iron from its ore into a workable metal is much more difficult than for copper or tin. The process appears to have been invented by the Hittites in about 1200 BC, beginning the Iron Age. The secret of extracting and working iron was a key factor in the success of the Philistines.

Historical developments in ferrous metallurgy can be found in a wide variety of past cultures and civilizations. These include the ancient and medieval kingdoms and empires of the Middle East and Near East, ancient Iran, ancient Egypt, ancient Nubia, and Anatolia (Turkey), Ancient Nok, Carthage, the Greeks and Romans of ancient Europe, medieval Europe, ancient and medieval China, ancient and medieval India, and ancient and medieval Japan, amongst others. Many applications, practices, and devices associated with or involved in metallurgy were established in ancient China, such as the innovation of the blast furnace, cast iron, hydraulic-powered trip hammers, and double-acting piston bellows.

A 16th-century book by Georg Agricola called De re metallica describes the highly developed and complex processes of mining metal ores, metal extraction and metallurgy of the time. Agricola has been described as the "father of metallurgy".

Extraction

Extractive metallurgy is the practice of removing valuable metals from an ore and refining the extracted raw metals into a purer form. In order to convert a metal oxide or sulphide to a purer metal, the ore must be reduced physically, chemically, or electrolytically. Extractive metallurgists are interested in three primary streams: feed, concentrate (metal oxide/sulphide) and tailings (waste).
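The relationship between the three streams can be illustrated with a simple mass balance. The sketch below is not taken from the text above: it assumes a single separation step with one feed, one concentrate and one tailings stream, steady-state operation, and known metal grades (assays) for each stream. The function name `two_product_balance` and the example grades are hypothetical and chosen only for illustration.

```python
def two_product_balance(feed_grade, conc_grade, tail_grade):
    """Return (mass_pull, recovery) for one feed split into concentrate and tailings.

    Grades are metal contents in any consistent unit (for example weight %).
    mass_pull is the fraction of the feed mass that reports to the concentrate;
    recovery is the fraction of the feed metal that reports to the concentrate.
    """
    if not (conc_grade > feed_grade > tail_grade >= 0):
        raise ValueError("expected conc_grade > feed_grade > tail_grade >= 0")
    # Total mass balance:  F = C + T
    # Metal balance:       F*f = C*c + T*t
    # Eliminating T gives  C/F = (f - t) / (c - t)
    mass_pull = (feed_grade - tail_grade) / (conc_grade - tail_grade)
    # Metal in the concentrate divided by metal in the feed
    recovery = mass_pull * conc_grade / feed_grade
    return mass_pull, recovery


if __name__ == "__main__":
    # Hypothetical assays: 2.0 % metal in feed, 25.0 % in concentrate, 0.2 % in tailings
    mass_pull, recovery = two_product_balance(2.0, 25.0, 0.2)
    print(f"mass pull: {mass_pull:.1%}, recovery: {recovery:.1%}")
```

With these assumed grades, only a small fraction of the feed mass ends up in the concentrate, yet most of the metal is recovered there, which is the purpose of concentration.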

After mining, large pieces of the ore feed are broken down by crushing or grinding until the particles are small enough that each one is either mostly valuable or mostly waste. Concentrating the particles of value in a form supporting separation enables the desired metal to be removed from waste products.

Mining may not be necessary if the ore body and physical environment are conducive to leaching. Leaching dissolves minerals in an ore body and results in an enriched solution. The solution is collected and processed to extract valuable metals. Ore bodies often contain more than one valuable metal.

Tailings of a previous process may be used as a feed in another process to extract a secondary product from the original ore. Additionally, a concentrate may contain more than one valuable metal. That concentrate would then be processed to separate the valuable metals into individual constituents.

Metal and its alloys

Common engineering metals include aluminium, chromium, copper, iron, magnesium, nickel, titanium, zinc, and silicon. These metals are most often used as alloys, with the notable exception of silicon.

Much effort has been placed on understanding the iron-carbon alloy system, which includes steels and cast irons. Plain carbon steels (those that contain essentially only carbon as an alloying element) are used in low-cost, high-strength applications, where neither weight nor corrosion is a major concern. Cast irons, including ductile iron, are also part of the iron-carbon system. Iron-manganese-chromium alloys (Hadfield-type steels) are also used in non-magnetic applications such as directional drilling.

Stainless steels (particularly austenitic stainless steels), galvanized steel, nickel alloys, titanium alloys, or occasionally copper alloys are used where resistance to corrosion is important.

Aluminium alloys and magnesium alloys are commonly used when a lightweight, strong part is required, such as in automotive and aerospace applications.

Copper-nickel alloys (such as Monel) are used in highly corrosive environments and for non-magnetic applications.

Nickel-based superalloys like Inconel are used in high-temperature applications such as gas turbines, turbochargers, pressure vessels, and heat exchangers.

For extremely high temperatures, single-crystal alloys are used to minimize creep. In modern electronics, high-purity single-crystal silicon is essential for metal-oxide-semiconductor (MOS) transistors and integrated circuits.

Production

In production engineering, metallurgy is concerned with the production of metallic components for use in consumer or engineering products. This involves production of alloys, shaping, heat treatment and surface treatment of product.

Determining the hardness of the metal using the Rockwell, Vickers, and Brinell hardness scales is a commonly used practice that helps better understand the metal's elasticity and plasticity for different applications and production processes.

The task of the metallurgist is to achieve a balance between material properties, such as cost, weight, strength, toughness, hardness, corrosion resistance, fatigue resistance and performance in temperature extremes. To achieve this goal, the operating environment must be carefully considered.

In a saltwater environment, most ferrous metals and some non-ferrous alloys corrode quickly. Metals exposed to cold or cryogenic conditions may undergo a ductile-to-brittle transition and lose their toughness, becoming more brittle and prone to cracking. Metals under continual cyclic loading can suffer from metal fatigue. Metals under constant stress at elevated temperatures can creep.

Metalworking processes

Metals are shaped by processes such as:

- Casting – molten metal is poured into a shaped mold.

- Forging – a red-hot billet is hammered into shape.

- Rolling – a billet is passed through successively narrower rollers to create a sheet.

- Extrusion – a hot and malleable metal is forced under pressure through a die, which shapes it before it cools.

- Machining – lathes, milling machines and drills cut the cold metal to shape.

- Sintering – a powdered metal is heated in a non-oxidizing environment after being compressed into a die.

- Fabrication – sheets of metal are cut with guillotines or gas cutters and bent and welded into structural shape.

- Laser cladding – metallic powder is blown through a movable laser beam (e.g. mounted on an NC 5-axis machine). The resulting melted metal reaches a substrate to form a melt pool. By moving the laser head, it is possible to stack the tracks and build up a three-dimensional piece.

- 3D printing – amorphous powder metal is sintered or melted in a 3D space to build an object of any shape.

Cold-working processes, in which the product's shape is altered by rolling, fabrication or other processes while the product is cold, can increase the strength of the product through work hardening. Work hardening creates microscopic defects in the metal, which resist further changes of shape.

Various forms of casting exist in industry and academia. These include sand casting, investment casting (also called the lost wax process), die casting, and continuous casting. Each of these forms has advantages for certain metals and applications, considering factors like magnetism and corrosion.

Heat treatment

Metals can be heat-treated to alter the properties of strength, ductility, toughness, hardness and resistance to corrosion. Common heat treatment processes include annealing, precipitation strengthening, quenching, and tempering.

Annealing softens the metal by heating it and then allowing it to cool very slowly. This relieves stresses in the metal and makes the grain structure large and soft-edged, so that when the metal is hit or stressed it dents or bends rather than breaking. Annealed metal is also easier to sand, grind, or cut.

Quenching is the process of cooling metal very quickly after heating, thus "freezing" the metal's atoms in the very hard martensite form, which makes the metal harder.

Tempering relieves stresses in the metal that were caused by the hardening process; tempering makes the metal less hard while making it better able to sustain impacts without breaking.

Often, mechanical and thermal treatments are combined in what are known as thermo-mechanical treatments for better properties and more efficient processing of materials. These processes are common to high-alloy special steels, superalloys and titanium alloys.

Plating

Electroplating is a chemical surface-treatment technique. It involves bonding a thin layer of another metal such as gold, silver, chromium or zinc to the surface of the product. This is done by selecting an electrolyte solution containing the coating material, that is, the material that is going to coat the workpiece (gold, silver, zinc). Two electrodes of different materials are needed: one made of the same material as the coating and one that receives the coating (the workpiece). The two electrodes are electrically charged, and the coating material is deposited onto the workpiece. Electroplating is used to reduce corrosion as well as to improve the product's aesthetic appearance. It is also used to make inexpensive metals look like more expensive ones (gold, silver).
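The amount of metal deposited during electroplating can be estimated from the charge passed through the cell using Faraday's law of electrolysis, which the text above does not mention by name. The following is a minimal sketch under stated assumptions: a constant current, a uniform coating over the plated area, and a current efficiency supplied by the user (100% by default, which real baths rarely reach). The function names and the example current, time and area are hypothetical; the zinc values used are its molar mass (65.38 g/mol), valence in solution (2) and density (7.14 g/cm³).

```python
FARADAY_C_PER_MOL = 96485.332  # Faraday constant, coulombs per mole of electrons


def plated_mass_g(current_a, time_s, molar_mass_g_mol, valence, efficiency=1.0):
    """Estimate deposited mass (g) from Faraday's law: m = eff * I * t * M / (z * F)."""
    if min(current_a, time_s, molar_mass_g_mol, valence) <= 0:
        raise ValueError("current, time, molar mass and valence must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    charge_c = current_a * time_s  # total charge passed, in coulombs
    return efficiency * charge_c * molar_mass_g_mol / (valence * FARADAY_C_PER_MOL)


def coating_thickness_um(mass_g, density_g_cm3, area_cm2):
    """Convert a deposited mass to an average thickness in micrometres."""
    if density_g_cm3 <= 0 or area_cm2 <= 0:
        raise ValueError("density and area must be positive")
    thickness_cm = mass_g / (density_g_cm3 * area_cm2)
    return thickness_cm * 1e4  # 1 cm = 10,000 micrometres


if __name__ == "__main__":
    # Hypothetical job: zinc plated at 2 A for 30 minutes onto 100 cm^2
    mass = plated_mass_g(current_a=2.0, time_s=1800.0, molar_mass_g_mol=65.38, valence=2)
    thickness = coating_thickness_um(mass, density_g_cm3=7.14, area_cm2=100.0)
    print(f"deposited mass: {mass:.3f} g, average thickness: {thickness:.1f} um")
```

The estimate is an upper bound when the efficiency is left at its default, because side reactions at the workpiece consume part of the charge.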

Shot peening

Shot peening is a cold-working process used to finish metal parts. In the process of shot peening, small round shot is blasted against the surface of the part to be finished. This process is used to prolong the product life of the part, prevent stress corrosion failures, and also prevent fatigue. The shot leaves small dimples on the surface, as a peen hammer does, which cause compressive stress under each dimple. As the shot media strikes the material over and over, it forms many overlapping dimples throughout the piece being treated. The compressive stress in the surface of the material strengthens the part and makes it more resistant to fatigue failure, stress failures, corrosion failure, and cracking.

Thermal spraying

Thermal spraying techniques are another popular finishing option, and the resulting coatings often have better high-temperature properties than electroplated coatings. Thermal spraying, also known as a spray welding process, is an industrial coating process that consists of a heat source (flame or other) and a coating material in powder or wire form, which is melted and then sprayed at high velocity onto the surface of the material being treated. The spray treating process is known by many different names, such as HVOF (High Velocity Oxygen Fuel), plasma spray, flame spray, arc spray and metalizing.

Characterization

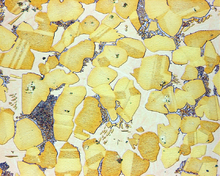

Metallurgists study the microscopic and macroscopic structure of metals using metallography, a technique invented by Henry Clifton Sorby.

In metallography, an alloy of interest is ground flat and polished to a mirror finish. The sample can then be etched to reveal the microstructure and macrostructure of the metal. The sample is then examined in an optical or electron microscope, and the image contrast provides details on the composition, mechanical properties, and processing history.

Crystallography, often using diffraction of X-rays or electrons, is another valuable tool available to the modern metallurgist. Crystallography allows identification of unknown materials and reveals the crystal structure of the sample. Quantitative crystallography can be used to calculate the amounts of the phases present as well as the degree of strain to which a sample has been subjected.
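One relation commonly used when interpreting diffraction data is Bragg's law, nλ = 2d sin θ, which links the angle of a diffraction peak to the spacing d between crystal planes. The text above does not name it, so the sketch below is only an illustration of the kind of calculation involved. It assumes copper Kα1 radiation (wavelength 1.5406 Å), a peak position given as 2θ in degrees, and, for the second function, a cubic crystal. The function names and the example peak position are hypothetical.

```python
import math

CU_K_ALPHA1_ANGSTROM = 1.5406  # assumed X-ray wavelength (copper K-alpha1), in angstroms


def d_spacing(two_theta_deg, wavelength=CU_K_ALPHA1_ANGSTROM, order=1):
    """Interplanar spacing from Bragg's law, n * wavelength = 2 * d * sin(theta)."""
    if not 0 < two_theta_deg < 180:
        raise ValueError("two_theta_deg must be between 0 and 180 degrees")
    theta = math.radians(two_theta_deg / 2.0)  # diffractometers report 2*theta
    return order * wavelength / (2.0 * math.sin(theta))


def cubic_lattice_parameter(d, h, k, l):
    """Lattice parameter a of a cubic crystal: a = d * sqrt(h^2 + k^2 + l^2)."""
    if (h, k, l) == (0, 0, 0):
        raise ValueError("Miller indices cannot all be zero")
    return d * math.sqrt(h * h + k * k + l * l)


if __name__ == "__main__":
    # Hypothetical peak at 2*theta = 44.67 degrees, indexed as (110) of a cubic phase
    d = d_spacing(44.67)
    a = cubic_lattice_parameter(d, 1, 1, 0)
    print(f"d-spacing: {d:.3f} angstrom, lattice parameter: {a:.3f} angstrom")
```

A shift in the measured d-spacing relative to an unstrained reference is one way lattice strain can show up in such data, although a full strain analysis involves more than this single relation.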