In mathematics, particularly in linear algebra, matrix multiplication is a binary operation that produces a matrix from two matrices. For matrix multiplication, the number of columns in the first matrix must be equal to the number of rows in the second matrix. The resulting matrix, known as the matrix product, has the number of rows of the first and the number of columns of the second matrix. The product of matrices A and B is denoted as AB.

Matrix multiplication was first described by the French mathematician Jacques Philippe Marie Binet in 1812, to represent the composition of linear maps that are represented by matrices. Matrix multiplication is thus a basic tool of linear algebra, and as such has numerous applications in many areas of mathematics, as well as in applied mathematics, statistics, physics, economics, and engineering. Computing matrix products is a central operation in all computational applications of linear algebra.

Notation

This article will use the following notational conventions: matrices are represented by capital letters in bold, e.g. $\mathbf{A}$; vectors in lowercase bold, e.g. $\mathbf{a}$; and entries of vectors and matrices are italic (they are numbers from a field), e.g. $A$ and $a$. Index notation is often the clearest way to express definitions, and is used as standard in the literature. The entry in row $i$, column $j$ of matrix $\mathbf{A}$ is indicated by $(\mathbf{A})_{ij}$, $A_{ij}$ or $a_{ij}$. In contrast, a single subscript, e.g. $\mathbf{A}_1$, $\mathbf{A}_2$, is used to select a matrix (not a matrix entry) from a collection of matrices.

Definition

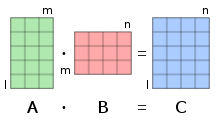

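If $\mathbf{A}$ is an $m \times n$ matrix and $\mathbf{B}$ is an $n \times p$ matrix,

$$\mathbf{A} = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{pmatrix}, \quad \mathbf{B} = \begin{pmatrix} b_{11} & b_{12} & \cdots & b_{1p} \\ b_{21} & b_{22} & \cdots & b_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ b_{n1} & b_{n2} & \cdots & b_{np} \end{pmatrix},$$

the matrix product $\mathbf{C} = \mathbf{AB}$ (denoted without multiplication signs or dots) is defined to be the $m \times p$ matrix

$$\mathbf{C} = \begin{pmatrix} c_{11} & c_{12} & \cdots & c_{1p} \\ c_{21} & c_{22} & \cdots & c_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ c_{m1} & c_{m2} & \cdots & c_{mp} \end{pmatrix}$$

such that

$$c_{ij} = a_{i1}b_{1j} + a_{i2}b_{2j} + \cdots + a_{in}b_{nj} = \sum_{k=1}^{n} a_{ik}b_{kj},$$

for $i = 1, \ldots, m$ and $j = 1, \ldots, p$.

That is, the entry $c_{ij}$ of the product is obtained by multiplying term-by-term the entries of the $i$th row of $\mathbf{A}$ and the $j$th column of $\mathbf{B}$, and summing these $n$ products. In other words, $c_{ij}$ is the dot product of the $i$th row of $\mathbf{A}$ and the $j$th column of $\mathbf{B}$.

Therefore, $\mathbf{AB}$ can also be written as

$$\mathbf{C} = \begin{pmatrix} a_{11}b_{11} + \cdots + a_{1n}b_{n1} & a_{11}b_{12} + \cdots + a_{1n}b_{n2} & \cdots & a_{11}b_{1p} + \cdots + a_{1n}b_{np} \\ a_{21}b_{11} + \cdots + a_{2n}b_{n1} & a_{21}b_{12} + \cdots + a_{2n}b_{n2} & \cdots & a_{21}b_{1p} + \cdots + a_{2n}b_{np} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1}b_{11} + \cdots + a_{mn}b_{n1} & a_{m1}b_{12} + \cdots + a_{mn}b_{n2} & \cdots & a_{m1}b_{1p} + \cdots + a_{mn}b_{np} \end{pmatrix}$$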

Thus the product AB is defined if and only if the number of columns in A equals the number of rows in B, in this case n.
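The definition translates almost literally into code. The following is a minimal sketch in plain Python, assuming matrices are stored as non-empty lists of rows; the function name `matmul` and the sample matrices are illustrative choices, not part of the definition.

```python
def matmul(a, b):
    """Multiply an m x n matrix by an n x p matrix (lists of rows)."""
    m, n, p = len(a), len(b), len(b[0])
    # The product is defined only if columns of a == rows of b.
    if any(len(row) != n for row in a):
        raise ValueError("number of columns of a must equal number of rows of b")
    # c[i][j] is the dot product of row i of a and column j of b.
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(p)]
            for i in range(m)]


A = [[1, 2, 3],
     [4, 5, 6]]      # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]       # 3 x 2
C = matmul(A, B)     # 2 x 2
print(C)             # [[58, 64], [139, 154]]
```

Swapping the arguments gives a 3 × 3 result instead, and passing two 2 × 3 matrices raises the `ValueError`, which mirrors the size condition stated above.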

In most scenarios, the entries are numbers, but they may be any kind of mathematical objects for which an addition and a multiplication are defined, that are associative, and such that the addition is commutative, and the multiplication is distributive with respect to the addition. In particular, the entries may be matrices themselves (see block matrix).

Illustration

The figure to the right illustrates diagrammatically the product of two matrices A and B, showing how each intersection in the product matrix corresponds to a row of A and a column of B.

The values at the intersections marked with circles are:

Fundamental applications

Historically, matrix multiplication was introduced to facilitate and clarify computations in linear algebra. This strong relationship between matrix multiplication and linear algebra remains fundamental in all mathematics, as well as in physics, chemistry, engineering and computer science.

Linear maps

If a vector space has a finite basis, its vectors are each uniquely represented by a finite sequence of scalars, called a coordinate vector, whose elements are the coordinates of the vector on the basis. These coordinate vectors form another vector space, which is isomorphic to the original vector space. A coordinate vector is commonly organized as a column matrix (also called column vector), which is a matrix with only one column. So, a column vector represents both a coordinate vector, and a vector of the original vector space.

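A linear map $A$ from a vector space of dimension $n$ into a vector space of dimension $m$ maps a column vector

$$\mathbf{x} = \begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{pmatrix}$$

onto the column vector

$$\mathbf{y} = A(\mathbf{x}) = \begin{pmatrix} a_{11}x_1 + \cdots + a_{1n}x_n \\ a_{21}x_1 + \cdots + a_{2n}x_n \\ \vdots \\ a_{m1}x_1 + \cdots + a_{mn}x_n \end{pmatrix}.$$

The linear map $A$ is thus defined by the matrix

$$\mathbf{A} = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{pmatrix},$$

and maps the column vector $\mathbf{x}$ to the matrix product

$$\mathbf{y} = \mathbf{A}\mathbf{x}.$$

If $B$ is another linear map from the preceding vector space of dimension $m$ into a vector space of dimension $p$, it is represented by a $p \times m$ matrix $\mathbf{B}$. A straightforward computation shows that the matrix of the composite map $B \circ A$ is the matrix product $\mathbf{BA}$. The general formula $(B \circ A)(\mathbf{x}) = B(A(\mathbf{x}))$ that defines function composition appears here as a specific case of the associativity of the matrix product (see § Associativity below):

$$(\mathbf{BA})\mathbf{x} = \mathbf{B}(\mathbf{A}\mathbf{x}).$$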
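As a small numerical illustration of this paragraph, the sketch below checks on one example that applying the matrix of the first map and then the matrix of the second gives the same column vector as applying their product once. The matrices, the vector and the helper `matmul` are hypothetical values chosen for the example.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


A = [[1, 2],
     [0, 1],
     [3, 0]]          # 3 x 2: maps dimension n = 2 into dimension m = 3
B = [[1, 0, 2],
     [0, 1, 1]]       # 2 x 3: maps dimension m = 3 into dimension p = 2
x = [[1],
     [2]]             # column vector of dimension 2

step_by_step = matmul(B, matmul(A, x))   # B(Ax)
composite = matmul(matmul(B, A), x)      # (BA)x
print(step_by_step, composite)           # [[11], [5]] [[11], [5]]
```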

Geometric rotations

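Using a Cartesian coordinate system in a Euclidean plane, the rotation by an angle $\alpha$ around the origin is a linear map. More precisely,

$$\begin{pmatrix} x' \\ y' \end{pmatrix} = \begin{pmatrix} \cos\alpha & -\sin\alpha \\ \sin\alpha & \cos\alpha \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix},$$

where the source point $(x, y)$ and its image $(x', y')$ are written as column vectors.

The composition of the rotation by $\alpha$ and that by $\beta$ then corresponds to the matrix product

$$\begin{pmatrix} \cos\beta & -\sin\beta \\ \sin\beta & \cos\beta \end{pmatrix} \begin{pmatrix} \cos\alpha & -\sin\alpha \\ \sin\alpha & \cos\alpha \end{pmatrix} = \begin{pmatrix} \cos\beta\cos\alpha - \sin\beta\sin\alpha & -\cos\beta\sin\alpha - \sin\beta\cos\alpha \\ \sin\beta\cos\alpha + \cos\beta\sin\alpha & -\sin\beta\sin\alpha + \cos\beta\cos\alpha \end{pmatrix} = \begin{pmatrix} \cos(\alpha+\beta) & -\sin(\alpha+\beta) \\ \sin(\alpha+\beta) & \cos(\alpha+\beta) \end{pmatrix},$$

where appropriate trigonometric identities are employed for the second equality. That is, the composition corresponds to the rotation by angle $\alpha + \beta$, as expected.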
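The same fact can be checked numerically. The following sketch builds the standard 2 × 2 rotation matrix for two arbitrarily chosen angles (an assumption of the example) and compares the product of the two matrices with the rotation matrix for the sum of the angles, up to floating-point rounding.

```python
import math


def rotation(theta):
    """Matrix of the rotation by angle theta (radians) around the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s],
            [s, c]]


def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


alpha, beta = 0.3, 1.1                              # illustrative angles
composed = matmul(rotation(beta), rotation(alpha))  # rotate by alpha, then by beta
direct = rotation(alpha + beta)

same = all(math.isclose(composed[i][j], direct[i][j], abs_tol=1e-12)
           for i in range(2) for j in range(2))
print(same)  # True
```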

Resource allocation in economics

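A factory uses 4 kinds of basic commodities, $b_1, b_2, b_3, b_4$, to produce 3 kinds of intermediate goods, $m_1, m_2, m_3$, which in turn are used to produce 3 kinds of final products, $f_1, f_2, f_3$. The matrices

$$\mathbf{A} = \begin{pmatrix} 1 & 0 & 1 \\ 2 & 1 & 1 \\ 0 & 1 & 1 \\ 1 & 1 & 2 \end{pmatrix} \quad \text{and} \quad \mathbf{B} = \begin{pmatrix} 1 & 2 & 1 \\ 2 & 3 & 1 \\ 4 & 2 & 2 \end{pmatrix}$$

provide the amount of basic commodities needed for a given amount of intermediate goods, and the amount of intermediate goods needed for a given amount of final products, respectively. For example, to produce one unit of intermediate good $m_1$, one unit of basic commodity $b_1$, two units of $b_2$, no units of $b_3$, and one unit of $b_4$ are needed, corresponding to the first column of $\mathbf{A}$.

Using matrix multiplication, compute

$$\mathbf{AB} = \begin{pmatrix} 5 & 4 & 3 \\ 8 & 9 & 5 \\ 6 & 5 & 3 \\ 11 & 9 & 6 \end{pmatrix};$$

this matrix directly provides the amounts of basic commodities needed for given amounts of final goods. For example, the bottom left entry of $\mathbf{AB}$ is computed as $1 \cdot 1 + 1 \cdot 2 + 2 \cdot 4 = 11$, reflecting that $11$ units of $b_4$ are needed to produce one unit of $f_1$. Indeed, one $b_4$ unit is needed for the single $m_1$ unit, 2 for the two $m_2$ units, and 8 for the four $m_3$ units that go into the $f_1$ unit (see picture).

In order to produce e.g. 100 units of the final product $f_1$, 80 units of $f_2$, and 60 units of $f_3$, the necessary amounts of basic goods can be computed as

$$(\mathbf{AB}) \begin{pmatrix} 100 \\ 80 \\ 60 \end{pmatrix} = \begin{pmatrix} 1000 \\ 1820 \\ 1180 \\ 2180 \end{pmatrix},$$

that is, $1000$ units of $b_1$, $1820$ units of $b_2$, $1180$ units of $b_3$, and $2180$ units of $b_4$ are needed. Similarly, the product matrix $\mathbf{AB}$ can be used to compute the needed amounts of basic goods for other final-good amount data.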
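The two-stage computation can be sketched in a few lines of Python. The requirement matrices hard-coded below are assumed illustrative values (4 basic commodities by 3 intermediate goods, and 3 intermediate goods by 3 final products); with other data only the two literals change.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


# Assumed data: column j of A lists the basic commodities per unit of
# intermediate good j; column j of B lists the intermediate goods per
# unit of final product j.
A = [[1, 0, 1],
     [2, 1, 1],
     [0, 1, 1],
     [1, 1, 2]]
B = [[1, 2, 1],
     [2, 3, 1],
     [4, 2, 2]]

AB = matmul(A, B)                 # basic commodities per unit of final product
demand = [[100], [80], [60]]      # requested units of the three final products
needs = matmul(AB, demand)        # total basic commodities required

print(AB)     # [[5, 4, 3], [8, 9, 5], [6, 5, 3], [11, 9, 6]]
print(needs)  # [[1000], [1820], [1180], [2180]]
```

Computing `AB` once and reusing it is the point of the example: each new demand vector then costs a single matrix-vector product.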

System of linear equations

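The general form of a system of linear equations is

$$\begin{matrix} a_{11}x_1 + \cdots + a_{1n}x_n = b_1, \\ a_{21}x_1 + \cdots + a_{2n}x_n = b_2, \\ \vdots \\ a_{m1}x_1 + \cdots + a_{mn}x_n = b_m. \end{matrix}$$

Using the same notation as above, such a system is equivalent to the single matrix equation

$$\mathbf{A}\mathbf{x} = \mathbf{b},$$

where $\mathbf{b}$ is the column vector with entries $b_1, \ldots, b_m$.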
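As a tiny illustration, the system $2x_1 + x_2 = 5$, $x_1 + 3x_2 = 10$ (an example chosen here, not taken from the text) can be written with a coefficient matrix and a right-hand side, and a candidate solution is verified by a single matrix-vector product. The helper name `matvec` is hypothetical.

```python
def matvec(a, x):
    """Product of a matrix (list of rows) with a vector (flat list)."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


A = [[2, 1],
     [1, 3]]      # coefficients of the illustrative system
b = [5, 10]       # right-hand sides
x = [1, 3]        # candidate solution

print(matvec(A, x) == b)  # True: x satisfies every equation at once
```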

Dot product, bilinear form and inner product

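The dot product of two column vectors $\mathbf{x}$ and $\mathbf{y}$ is the matrix product

$$\mathbf{x}^{\mathsf{T}}\mathbf{y},$$

where $\mathbf{x}^{\mathsf{T}}$ is the row vector obtained by transposing $\mathbf{x}$, and the resulting 1×1 matrix is identified with its unique entry.

More generally, any bilinear form over a vector space of finite dimension may be expressed as a matrix product

$$\mathbf{x}^{\mathsf{T}}\mathbf{A}\mathbf{y},$$

and any inner product may be expressed as

$$\mathbf{x}^{\dagger}\mathbf{A}\mathbf{y},$$

where $\mathbf{x}^{\dagger}$ denotes the conjugate transpose of $\mathbf{x}$ (conjugate of the transpose, or equivalently transpose of the conjugate).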

General properties

Matrix multiplication shares some properties with usual multiplication. However, matrix multiplication is not defined if the number of columns of the first factor differs from the number of rows of the second factor, and it is non-commutative, even when the product remains defined after changing the order of the factors.

Non-commutativity

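An operation is commutative if, given two elements $\mathbf{A}$ and $\mathbf{B}$ such that the product $\mathbf{AB}$ is defined, then $\mathbf{BA}$ is also defined, and

$$\mathbf{AB} = \mathbf{BA}.$$

If $\mathbf{A}$ and $\mathbf{B}$ are matrices of respective sizes $m \times n$ and $p \times q$, then $\mathbf{AB}$ is defined if $n = p$, and $\mathbf{BA}$ is defined if $m = q$. Therefore, if one of the products is defined, the other is not defined in general. If $m = q \neq n = p$, the two products are defined, but have different sizes; thus they cannot be equal. Only if $m = q = n = p$, that is, if $\mathbf{A}$ and $\mathbf{B}$ are square matrices of the same size, are both products defined and of the same size. Even in this case, one has in general

$$\mathbf{AB} \neq \mathbf{BA}.$$

For example

$$\begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix} \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix},$$

but

$$\begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix} \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix} = \begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix}.$$

This example may be expanded for showing that, if $\mathbf{A}$ is an $n \times n$ matrix with entries in a field $F$, then $\mathbf{AB} = \mathbf{BA}$ for every $n \times n$ matrix $\mathbf{B}$ with entries in $F$, if and only if $\mathbf{A} = c\,\mathbf{I}$ where $c \in F$, and $\mathbf{I}$ is the $n \times n$ identity matrix. If, instead of a field, the entries are supposed to belong to a ring, then one must add the condition that $c$ belongs to the center of the ring.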

One special case where commutativity does occur is when D and E are two (square) diagonal matrices (of the same size); then DE = ED. Again, if the matrices are over a general ring rather than a field, the corresponding entries in each must also commute with each other for this to hold.
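Both observations are easy to reproduce. The sketch below multiplies one pair of 2 × 2 matrices in both orders to exhibit a case where the results differ, and then does the same for two diagonal matrices; all matrices are small examples chosen for illustration.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


A = [[0, 1],
     [0, 0]]
B = [[0, 0],
     [1, 0]]
print(matmul(A, B))  # [[1, 0], [0, 0]]
print(matmul(B, A))  # [[0, 0], [0, 1]]

# Diagonal matrices of the same size commute (entries here are integers).
D = [[2, 0],
     [0, 3]]
E = [[5, 0],
     [0, 7]]
print(matmul(D, E) == matmul(E, D))  # True
```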

Distributivity

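The matrix product is distributive with respect to matrix addition. That is, if $\mathbf{A}$, $\mathbf{B}$, $\mathbf{C}$, $\mathbf{D}$ are matrices of respective sizes $m \times n$, $n \times p$, $n \times p$, and $p \times q$, one has (left distributivity)

$$\mathbf{A}(\mathbf{B} + \mathbf{C}) = \mathbf{AB} + \mathbf{AC},$$

and (right distributivity)

$$(\mathbf{B} + \mathbf{C})\mathbf{D} = \mathbf{BD} + \mathbf{CD}.$$

This results from the distributivity for coefficients by

$$\sum_k a_{ik}(b_{kj} + c_{kj}) = \sum_k a_{ik}b_{kj} + \sum_k a_{ik}c_{kj},$$

$$\sum_k (b_{ik} + c_{ik})d_{kj} = \sum_k b_{ik}d_{kj} + \sum_k c_{ik}d_{kj}.$$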

Product with a scalar

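If $\mathbf{A}$ is a matrix and $c$ a scalar, then the matrices $c\mathbf{A}$ and $\mathbf{A}c$ are obtained by left or right multiplying all entries of $\mathbf{A}$ by $c$. If the scalars have the commutative property, then

$$c\mathbf{A} = \mathbf{A}c.$$

If the product $\mathbf{AB}$ is defined (that is, the number of columns of $\mathbf{A}$ equals the number of rows of $\mathbf{B}$), then

$$c(\mathbf{AB}) = (c\mathbf{A})\mathbf{B} \quad \text{and} \quad (\mathbf{AB})c = \mathbf{A}(\mathbf{B}c).$$

If the scalars have the commutative property, then all four matrices are equal. More generally, all four are equal if $c$ belongs to the center of a ring containing the entries of the matrices, because in this case, $c\mathbf{X} = \mathbf{X}c$ for all matrices $\mathbf{X}$.

These properties result from the bilinearity of the product of scalars:

$$c\left(\sum_k a_{ik}b_{kj}\right) = \sum_k (c\,a_{ik})b_{kj},$$

$$\left(\sum_k a_{ik}b_{kj}\right)c = \sum_k a_{ik}(b_{kj}\,c).$$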

Transpose

If the scalars have the commutative property, the transpose of a product of matrices is the product, in the reverse order, of the transposes of the factors. That is

$$(\mathbf{AB})^{\mathsf{T}} = \mathbf{B}^{\mathsf{T}}\mathbf{A}^{\mathsf{T}},$$

where T denotes the transpose, that is the interchange of rows and columns.

This identity does not hold for noncommutative entries, since the order between the entries of A and B is reversed, when one expands the definition of the matrix product.
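For matrices with commuting entries, the reversal of order under transposition can be checked on a small example. The sketch below uses integer matrices chosen for illustration; `transpose` and `matmul` are hypothetical helper names.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    """Interchange rows and columns."""
    return [list(col) for col in zip(*m)]


A = [[1, 2, 3],
     [4, 5, 6]]      # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]       # 3 x 2

lhs = transpose(matmul(A, B))              # transpose of the product
rhs = matmul(transpose(B), transpose(A))   # product of transposes, reversed
print(lhs == rhs)  # True
```

Note that the product of the transposes in the original order is a 3 × 3 matrix here, so it cannot equal the 2 × 2 transpose of the product.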

Complex conjugate

If $\mathbf{A}$ and $\mathbf{B}$ have complex entries, then

$$(\mathbf{AB})^{*} = \mathbf{A}^{*}\mathbf{B}^{*},$$

where * denotes the entry-wise complex conjugate of a matrix.

This results from applying to the definition of matrix product the fact that the conjugate of a sum is the sum of the conjugates of the summands and the conjugate of a product is the product of the conjugates of the factors.

Transposition acts on the indices of the entries, while conjugation acts independently on the entries themselves. It results that, if $\mathbf{A}$ and $\mathbf{B}$ have complex entries, one has

$$(\mathbf{AB})^{\dagger} = \mathbf{B}^{\dagger}\mathbf{A}^{\dagger},$$

where † denotes the conjugate transpose (conjugate of the transpose, or equivalently transpose of the conjugate).

Associativity

Given three matrices $\mathbf{A}$, $\mathbf{B}$ and $\mathbf{C}$, the products $(\mathbf{AB})\mathbf{C}$ and $\mathbf{A}(\mathbf{BC})$ are defined if and only if the number of columns of $\mathbf{A}$ equals the number of rows of $\mathbf{B}$, and the number of columns of $\mathbf{B}$ equals the number of rows of $\mathbf{C}$ (in particular, if one of the products is defined, then the other is also defined). In this case, one has the associative property

$$(\mathbf{AB})\mathbf{C} = \mathbf{A}(\mathbf{BC}).$$

As for any associative operation, this allows omitting parentheses, and writing the above products as

$$\mathbf{ABC}.$$

This extends naturally to the product of any number of matrices provided that the dimensions match. That is, if $\mathbf{A}_1, \mathbf{A}_2, \ldots, \mathbf{A}_n$ are matrices such that the number of columns of $\mathbf{A}_i$ equals the number of rows of $\mathbf{A}_{i+1}$ for $i = 1, \ldots, n - 1$, then the product

$$\prod_{i=1}^{n} \mathbf{A}_i = \mathbf{A}_1\mathbf{A}_2 \cdots \mathbf{A}_n$$

is defined and does not depend on the order of the multiplications, if the order of the matrices is kept fixed.

These properties may be proved by straightforward but complicated summation manipulations. This result also follows from the fact that matrices represent linear maps. Therefore, the associative property of matrices is simply a specific case of the associative property of function composition.

Computational complexity depends on parenthesization

Although the result of a sequence of matrix products does not depend on the order of operation (provided that the order of the matrices is not changed), the computational complexity may depend dramatically on this order.

For example, if A, B and C are matrices of respective sizes 10×30, 30×5, 5×60, computing (AB)C needs 10×30×5 + 10×5×60 = 4,500 multiplications, while computing A(BC) needs 30×5×60 + 10×30×60 = 27,000 multiplications.

Algorithms have been designed for choosing the best order of products; see Matrix chain multiplication. When the number $n$ of matrices increases, it has been shown that the choice of the best order has a complexity of $O(n \log n)$.
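To make the cost comparison concrete, the sketch below computes the minimum number of scalar multiplications for a chain of matrices using the classical dynamic-programming recurrence. This is the simple cubic-time textbook method, used here only for illustration; it is not the faster algorithm alluded to above. The function name and the `dims` encoding (matrix $i$ has size `dims[i]` × `dims[i + 1]`) are assumptions of the example.

```python
def matrix_chain_cost(dims):
    """Minimum scalar multiplications to multiply a chain of matrices.

    Matrix i (0-based) has size dims[i] x dims[i + 1].
    """
    n = len(dims) - 1                       # number of matrices
    cost = [[0] * n for _ in range(n)]      # cost[i][j]: best cost for A_i..A_j
    for length in range(2, n + 1):          # length of the sub-chain
        for i in range(n - length + 1):
            j = i + length - 1
            # Try every position k of the last multiplication:
            # (A_i..A_k)(A_{k+1}..A_j).
            cost[i][j] = min(
                cost[i][k] + cost[k + 1][j] + dims[i] * dims[k + 1] * dims[j + 1]
                for k in range(i, j)
            )
    return cost[0][n - 1]


# Sizes 10x30, 30x5, 5x60 from the example above.
print(matrix_chain_cost([10, 30, 5, 60]))  # 4500, i.e. the cost of (AB)C
```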

Application to similarity

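Any invertible matrix $\mathbf{P}$ defines a similarity transformation (on square matrices of the same size as $\mathbf{P}$)

$$S_{\mathbf{P}}(\mathbf{A}) = \mathbf{P}^{-1}\mathbf{A}\mathbf{P}.$$

Similarity transformations map products to products, that is

$$S_{\mathbf{P}}(\mathbf{AB}) = S_{\mathbf{P}}(\mathbf{A})\,S_{\mathbf{P}}(\mathbf{B}).$$

In fact, one has

$$\mathbf{P}^{-1}(\mathbf{AB})\mathbf{P} = \mathbf{P}^{-1}\mathbf{A}(\mathbf{P}\mathbf{P}^{-1})\mathbf{B}\mathbf{P} = (\mathbf{P}^{-1}\mathbf{A}\mathbf{P})(\mathbf{P}^{-1}\mathbf{B}\mathbf{P}).$$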

Square matrices

Let us denote by $\mathcal{M}_n(R)$ the set of $n \times n$ square matrices with entries in a ring $R$, which, in practice, is often a field.

In $\mathcal{M}_n(R)$, the product is defined for every pair of matrices. This makes $\mathcal{M}_n(R)$ a ring, which has the identity matrix $\mathbf{I}$ as identity element (the matrix whose diagonal entries are equal to 1 and all other entries are 0). This ring is also an associative $R$-algebra.

If $n > 1$, many matrices do not have a multiplicative inverse. For example, a matrix such that all entries of a row (or a column) are 0 does not have an inverse. If it exists, the inverse of a matrix $\mathbf{A}$ is denoted $\mathbf{A}^{-1}$, and thus satisfies

$$\mathbf{A}\mathbf{A}^{-1} = \mathbf{A}^{-1}\mathbf{A} = \mathbf{I}.$$

A matrix that has an inverse is an invertible matrix. Otherwise, it is a singular matrix.

A product of matrices is invertible if and only if each factor is invertible. In this case, one has

$$(\mathbf{AB})^{-1} = \mathbf{B}^{-1}\mathbf{A}^{-1}.$$

When $R$ is commutative, and, in particular, when it is a field, the determinant of a product is the product of the determinants. As determinants are scalars, and scalars commute, one has thus

$$\det(\mathbf{AB}) = \det(\mathbf{BA}) = \det(\mathbf{A})\det(\mathbf{B}).$$

The other matrix invariants do not behave as well with products. Nevertheless, if R is commutative, AB and BA have the same trace, the same characteristic polynomial, and the same eigenvalues with the same multiplicities. However, the eigenvectors are generally different if AB ≠ BA.
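A quick check on 2 × 2 integer matrices illustrates these statements: the two products differ, yet they share the same determinant and the same trace. The matrices and the helpers `det2` and `trace` are illustrative assumptions; `det2` only handles the 2 × 2 case.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def det2(m):
    """Determinant of a 2 x 2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def trace(m):
    """Sum of the diagonal entries of a square matrix."""
    return sum(m[i][i] for i in range(len(m)))


A = [[1, 2],
     [3, 4]]
B = [[0, 1],
     [5, 6]]
AB, BA = matmul(A, B), matmul(B, A)

print(AB != BA)                                # True: the products differ
print(det2(AB), det2(BA), det2(A) * det2(B))   # 10 10 10
print(trace(AB), trace(BA))                    # 37 37
```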

Powers of a matrix

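One may raise a square matrix to any nonnegative integer power by multiplying it by itself repeatedly, in the same way as for ordinary numbers. That is,

$$\mathbf{A}^0 = \mathbf{I}, \quad \mathbf{A}^1 = \mathbf{A}, \quad \mathbf{A}^k = \underbrace{\mathbf{A}\mathbf{A}\cdots\mathbf{A}}_{k \text{ times}}.$$

Computing the $k$th power of a matrix needs $k - 1$ times the time of a single matrix multiplication, if it is done with the trivial algorithm (repeated multiplication). As this may be very time consuming, one generally prefers using exponentiation by squaring, which requires less than $2\log_2 k$ matrix multiplications, and is therefore much more efficient.

An easy case for exponentiation is that of a diagonal matrix. Since the product of diagonal matrices amounts to simply multiplying corresponding diagonal elements together, the $k$th power of a diagonal matrix is obtained by raising the entries to the power $k$:

$$\begin{pmatrix} a_{11} & 0 & \cdots & 0 \\ 0 & a_{22} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & a_{nn} \end{pmatrix}^{k} = \begin{pmatrix} a_{11}^k & 0 & \cdots & 0 \\ 0 & a_{22}^k & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & a_{nn}^k \end{pmatrix}.$$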
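Exponentiation by squaring can be sketched as follows. This is one straightforward binary variant written for clarity, which starts from the identity matrix and therefore performs a few more multiplications than the tightest bound; the function names and test matrices are illustrative.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def identity(n):
    """n x n identity matrix."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matpow(a, k):
    """Raise the square matrix a to the nonnegative integer power k."""
    result = identity(len(a))
    base = a
    while k > 0:
        if k & 1:                     # current binary digit of k is 1
            result = matmul(result, base)
        base = matmul(base, base)     # square for the next binary digit
        k >>= 1
    return result


# Powers of this matrix contain consecutive Fibonacci numbers.
print(matpow([[1, 1], [1, 0]], 10))   # [[89, 55], [55, 34]]
# For a diagonal matrix, each diagonal entry is raised to the power k.
print(matpow([[2, 0], [0, 3]], 5))    # [[32, 0], [0, 243]]
```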

Abstract algebra

The definition of matrix product requires that the entries belong to a semiring, and does not require multiplication of elements of the semiring to be commutative. In many applications, the matrix elements belong to a field, although the tropical semiring is also a common choice for graph shortest path problems. Even in the case of matrices over fields, the product is not commutative in general, although it is associative and is distributive over matrix addition. The identity matrices (which are the square matrices whose entries are zero outside of the main diagonal and 1 on the main diagonal) are identity elements of the matrix product. It follows that the n × n matrices over a ring form a ring, which is noncommutative except if n = 1 and the ground ring is commutative.
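As an illustration of a product over a semiring that is not a ring, the sketch below replaces addition by `min` and multiplication by `+` (the tropical, or min-plus, semiring). With a weight matrix whose diagonal is 0 and whose missing edges are infinity, the min-plus square gives shortest path lengths using at most two edges. The 3-vertex graph is a made-up example.

```python
INF = float("inf")


def min_plus(a, b):
    """Matrix product over the tropical semiring: (min, +) replaces (+, *)."""
    return [[min(a[i][k] + b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


# W[i][j] is the weight of the edge i -> j (INF if absent, 0 on the diagonal).
W = [[0,   3,   INF],
     [INF, 0,   1],
     [2,   INF, 0]]

D2 = min_plus(W, W)   # shortest paths using at most 2 edges
print(D2)             # [[0, 3, 4], [3, 0, 1], [2, 5, 0]]
```

Since this graph has only three vertices and no negative cycle, every shortest path uses at most two edges, so `D2` already holds all shortest distances.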

A square matrix may have a multiplicative inverse, called an inverse matrix. In the common case where the entries belong to a commutative ring R, a matrix has an inverse if and only if its determinant has a multiplicative inverse in R. The determinant of a product of square matrices is the product of the determinants of the factors. The n × n matrices that have an inverse form a group under matrix multiplication, the subgroups of which are called matrix groups. Many classical groups (including all finite groups) are isomorphic to matrix groups; this is the starting point of the theory of group representations.

Computational complexity

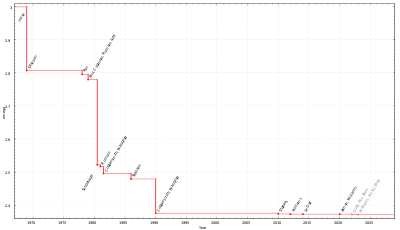

The matrix multiplication algorithm that results from the definition requires, in the worst case, $n^3$ multiplications and $(n - 1)n^2$ additions of scalars to compute the product of two square $n \times n$ matrices. Its computational complexity is therefore $O(n^3)$, in a model of computation for which the scalar operations take constant time (in practice, this is the case for floating point numbers, but not for integers).

Rather surprisingly, this complexity is not optimal, as shown in 1969 by Volker Strassen, who provided an algorithm, now called Strassen's algorithm, with a complexity of $O(n^{\log_2 7}) \approx O(n^{2.8074})$. Strassen's algorithm can be parallelized to further improve the performance. As of December 2020, the asymptotically fastest known matrix multiplication algorithm is by Josh Alman and Virginia Vassilevska Williams and has complexity $O(n^{2.3728596})$. It is not known whether matrix multiplication can be performed in $O(n^{2 + o(1)})$ time. This would be optimal, since one must read the $n^2$ elements of a matrix in order to multiply it with another matrix.
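The idea behind Strassen's algorithm is to split each matrix into four blocks and form the product with seven block multiplications instead of eight. The following is a minimal recursive sketch, assuming square matrices whose size is a power of two and recursing all the way down to 1 × 1 blocks; practical implementations switch to the ordinary algorithm below some block size, and the helper names are illustrative.

```python
def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def split(m):
    """Split a 2h x 2h matrix into its four h x h blocks."""
    h = len(m) // 2
    return ([r[:h] for r in m[:h]], [r[h:] for r in m[:h]],
            [r[:h] for r in m[h:]], [r[h:] for r in m[h:]])


def strassen(a, b):
    """Product of two n x n matrices, n a power of two (assumption)."""
    if len(a) == 1:
        return [[a[0][0] * b[0][0]]]
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # Seven recursive block products.
    m1 = strassen(add(a11, a22), add(b11, b22))
    m2 = strassen(add(a21, a22), b11)
    m3 = strassen(a11, sub(b12, b22))
    m4 = strassen(a22, sub(b21, b11))
    m5 = strassen(add(a11, a12), b22)
    m6 = strassen(sub(a21, a11), add(b11, b12))
    m7 = strassen(sub(a12, a22), add(b21, b22))
    # Recombine into the four blocks of the result.
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    return ([r1 + r2 for r1, r2 in zip(c11, c12)] +
            [r1 + r2 for r1, r2 in zip(c21, c22)])


def naive(a, b):
    """Definition-based product, used only as a reference for comparison."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
B = [[2, 0, 1, 3], [1, 1, 0, 2], [4, 2, 2, 0], [0, 3, 1, 1]]
print(strassen(A, B) == naive(A, B))  # True
```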

Since matrix multiplication forms the basis for many algorithms, and many operations on matrices even have the same complexity as matrix multiplication (up to a multiplicative constant), the computational complexity of matrix multiplication appears throughout numerical linear algebra and theoretical computer science.

Generalizations

Other types of products of matrices include:

- Block matrix multiplication

- Cracovian product, defined as $\mathbf{A} \wedge \mathbf{B} = \mathbf{B}^{\mathsf{T}}\mathbf{A}$

- Frobenius inner product, the dot product of matrices considered as vectors, or, equivalently the sum of the entries of the Hadamard product

- Hadamard product of two matrices of the same size, resulting in a matrix of the same size, which is the product entry-by-entry

- Kronecker product or tensor product, the generalization to matrices of any size of the outer product

- Khatri-Rao product and Face-splitting product

- Outer product, also called dyadic product or tensor product of two column matrices $\mathbf{a}$ and $\mathbf{b}$, which is $\mathbf{a}\mathbf{b}^{\mathsf{T}}$

- Scalar multiplication
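Several of the products listed above are short to write down for small matrices. The sketch below gives plain-Python versions of the Hadamard, Frobenius inner, Kronecker and outer products using their standard definitions; the function names and sample inputs are illustrative, and vectors are passed to `outer` as flat lists.

```python
def hadamard(a, b):
    """Entry-by-entry product of two matrices of the same size."""
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def frobenius(a, b):
    """Sum of the entries of the Hadamard product."""
    return sum(sum(row) for row in hadamard(a, b))


def kronecker(a, b):
    """Block matrix whose (i, j) block is a[i][j] times b."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]


def outer(u, v):
    """Outer product of two vectors: entry (i, j) is u[i] * v[j]."""
    return [[x * y for y in v] for x in u]


A = [[1, 2],
     [3, 4]]
B = [[0, 5],
     [6, 7]]

print(hadamard(A, B))          # [[0, 10], [18, 28]]
print(frobenius(A, B))         # 56
print(kronecker(A, B))
# [[0, 5, 0, 10], [6, 7, 12, 14], [0, 15, 0, 20], [18, 21, 24, 28]]
print(outer([1, 2], [3, 4, 5]))  # [[3, 4, 5], [6, 8, 10]]
```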
