Anthropogenic hazards are hazards caused by human action or inaction. They are contrasted with natural hazards. Anthropogenic hazards may adversely affect humans, other organisms, biomes, and ecosystems, and in the extreme could even cause omnicide. The frequency and severity of hazards are key elements in some risk analysis methodologies. Hazards may also be described in relation to the impact that they have. A hazard exists only if there is a pathway to exposure. For example, the Earth's core is at extremely high temperatures and would be a severe hazard if contact were made with it. However, there is no feasible way of reaching the core, so it currently poses no hazard.

Anthropogenic hazards can be grouped into societal hazards (criminality, civil disorder, terrorism, war, industrial hazards, engineering hazards, power outage, and fire), hazards caused by transportation, and environmental hazards.

Societal hazards

Some societal hazards arise because a hazard is inadvertently overlooked or goes unnoticed, or through deliberate inaction or neglect; their consequences stem from taking little or no preemptive action to prevent the hazard from occurring. Although not everything is within human control, some anti-social behaviour and crimes committed by individuals or groups can be prevented where there is a reasonable apprehension of injury or death. People commonly report dangerous circumstances, suspicious behaviour, or criminal intentions to the police so that the authorities can investigate or intervene.

Criminality

Behavior that puts others at risk of injury or death is widely regarded as criminal and is a breach of the law for which the appropriate legal authority may impose a penalty, such as imprisonment, a fine, or even execution. Understanding what makes individuals act in ways that put others at risk has been the subject of much research in many developed countries. Mitigating the hazard of criminality depends heavily on time and place, since some areas and times of day pose a greater risk than others.

Civil disorder

Civil disorder is a broad term that law enforcement typically uses to describe disturbances involving many people who share a common aim. Civil disorder has many causes, including large-scale criminal conspiracy, socio-economic factors (unemployment, poverty), hostility between racial and ethnic groups, and outrage over perceived moral and legal transgressions. Examples of well-known civil disorders and riots are the poll tax riots in the United Kingdom in 1990; the 1992 Los Angeles riots, in which 53 people died; the 2008 Greek riots after a 15-year-old boy was fatally shot by police; and the 2010 Thai political protests in Bangkok, during which 91 people died. Such behavior is hazardous mainly to those directly involved as participants, to those controlling the disturbance, and to those indirectly involved, such as passers-by or shopkeepers. For the great majority, staying out of the way of the disturbance avoids the hazard.

Terrorism

A common definition of terrorism is the use or threatened use of violence to create fear in order to achieve a political, religious, or ideological goal. Targets of terrorist acts can be anyone, including private citizens, government officials, military personnel, law enforcement officers, firefighters, or people serving in the interests of governments.

Definitions of terrorism may also vary geographically. In Australia, the Security Legislation Amendment (Terrorism) Act 2002 defines terrorism as "an action to advance a political, religious or ideological cause and with the intention of coercing the government or intimidating the public", while the United States Department of State operationally describes it as "premeditated, politically-motivated violence perpetrated against non-combatant targets by subnational groups or clandestine agents, usually intended to influence an audience".

War

War is a conflict between relatively large groups of people that involves physical force inflicted through the use of weapons. Warfare has destroyed entire cultures, countries, and economies, and has inflicted great suffering on humanity. Other terms for war include armed conflict, hostilities, and police action. Acts of war are normally excluded from insurance contracts and sometimes from disaster planning.

Industrial hazards

Industrial accidents resulting in releases of hazardous materials usually occur in a commercial context, as with mining accidents. They often have an environmental impact, but can also be hazardous for people living nearby. The Bhopal disaster of 1984 released methyl isocyanate into the surrounding environment, seriously affecting large numbers of people. It is probably the world's worst industrial accident to date.

Engineering hazards

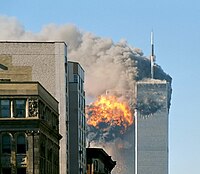

Engineering hazards occur when structures used by people fail or the materials used in their construction prove to be hazardous. The history of construction has many examples of hazards associated with structures, including bridge failures such as the Tay Bridge disaster, caused by under-design; the Silver Bridge collapse, caused by corrosion; and the collapse of the original Tacoma Narrows Bridge, caused by aerodynamic flutter of the deck. Dam failures were not infrequent during the Victorian era: the failure of the Dale Dyke Dam near Sheffield, England, in 1864 caused the Great Sheffield Flood, which killed at least 240 people. In 1889, the failure of the South Fork Dam on the Little Conemaugh River near Johnstown, Pennsylvania, produced the Johnstown Flood, which killed over 2,200 people. Other failures include balcony collapses, aerial walkway collapses such as the Hyatt Regency walkway collapse in Kansas City in 1981, and building collapses such as that of the World Trade Center in New York City during the September 11 attacks in 2001.

Power outage

A power outage is an interruption of normal sources of electrical power. Short-term power outages (up to a few hours) are common and have minor adverse effects, since most businesses and health facilities are prepared to deal with them. Extended power outages, however, can disrupt personal and business activities as well as medical and rescue services, leading to business losses and medical emergencies. Extended loss of power can also lead to civil disorder, as in the New York City blackout of 1977. Power outages only very rarely escalate to disaster proportions; however, they often accompany other types of disasters, such as hurricanes and floods, where they hamper relief efforts.

Electromagnetic pulses and voltage spikes, whatever their cause, can also damage electricity infrastructure and electrical devices.

Notable large-scale power outages include the 2005 Java–Bali blackout, which affected 100 million people; the 2009 Brazil and Paraguay blackout, which affected 60 million people; and the 2012 India blackouts, which affected 600 million people.

Fire

Bush fires, forest fires, and mine fires can be started naturally, for example by lightning, but also by human negligence or arson. They can burn thousands of square kilometers. If a fire becomes intense enough to produce its own winds and "weather", it develops into a firestorm. A well-known example of a mine fire is the one near Centralia, Pennsylvania: started in 1962, it ruined the town and continues to burn today. Some of the largest fires affecting cities and towns were the Great Chicago Fire and the Peshtigo Fire (both in 1871) and the Great Fire of London in 1666.

Casualties resulting from fires, regardless of their source or initial cause, can be aggravated by inadequate emergency preparedness. Hazards such as a lack of accessible emergency exits, poorly marked escape routes, or improperly maintained fire extinguishers or sprinkler systems may result in many more deaths and injuries than would occur if such protections were in place.

Arson is the act of setting a fire with intent to cause damage. The definition of arson was originally limited to setting fire to buildings, but was later expanded to include other objects, such as bridges, vehicles, and private property. Some human-induced fires are accidental: faulty equipment such as a kitchen stove is a major cause of accidental fires.

Hazards caused by transportation

Aviation

An aviation incident is an occurrence other than an accident, associated with the operation of an aircraft, that affects or could affect the safety of operations, passengers, or pilots. The vehicles involved can range from helicopters and airliners to the Space Shuttle.

Rail

The special hazards of traveling by rail include train crashes, which can result in substantial loss of life. Incidents involving freight traffic generally pose a greater risk to the environment. Less common hazards include geophysical events such as tsunamis: in the 2004 Sri Lanka tsunami-rail disaster, 1,700 people died when the Indian Ocean tsunami struck a passenger train.

See also the list of train accidents by death toll.

Road

Traffic collisions are a leading cause of death worldwide, particularly among children and young adults, and pollution from road traffic creates a substantial health hazard, especially in major conurbations.

Space

Space travel presents significant hazards, mostly to the direct participants (astronauts or cosmonauts and ground support personnel), but it also carries the potential for disaster to the public at large. Accidents related to space travel have killed 22 astronauts and cosmonauts, and a larger number of people on the ground.

Accidents can occur on the ground during preparation or launch, or in flight, due to equipment malfunction or the naturally hostile environment of space itself. An additional risk is posed by uncrewed satellites in low orbit, whose orbits eventually decay because of drag from the extremely thin upper atmosphere. If a satellite is large enough, massive fragments traveling at great speed can reach the ground without burning up completely, with the potential to do damage.

One of the worst crewed spaceflight accidents was the loss of the Space Shuttle Challenger in 1986, which claimed all seven lives on board. The shuttle disintegrated 73 seconds after lifting off from the launch pad at Cape Canaveral, Florida.

Another example is the Space Shuttle Columbia, which disintegrated over Texas during reentry in 2003 as it returned to land, killing all seven astronauts on board. The debris field extended from New Mexico to Mississippi.

Sea travel

Ships can sink, capsize, or crash in disasters. Perhaps the most infamous sinking was that of the Titanic, which struck an iceberg and sank in 1912 in one of the worst maritime disasters in history. Other notable incidents include the capsizing of the Costa Concordia in 2012, which killed at least 32 people and made it the largest passenger ship ever to sink, and the sinking of the MV Doña Paz in 1987, which claimed the lives of up to 4,375 people in the worst peacetime maritime disaster in history.

Environmental hazards

Environmental hazards are those whose effects are seen in biomes or ecosystems rather than directly on living organisms. Well-known examples include oil spills, water pollution, slash-and-burn deforestation, air pollution, and ground fissures.

Waste disposal

Many hazardous materials end up in the domestic and commercial waste stream. In part this is because modern technology relies on certain toxic or poisonous materials, particularly in the electronics and chemical industries. While in use or in transport, these materials are usually safely contained, encapsulated, or packaged to prevent exposure. In the waste stream, however, the outer casing or encapsulation can break or degrade, releasing hazardous materials into the environment and exposing people who work in the waste disposal industry, those living near waste disposal or landfill sites, and the environment surrounding such sites.

Hazardous materials

Organohalogens

Organohalogens are a family of organic compounds, many of them synthetic, that contain one or more halogen atoms. Such materials include PCBs, dioxins, DDT, Freon, and many others. Although many were considered harmless when first produced, a number of these compounds are now known to have profound physiological effects on many organisms, including humans. Many are also fat-soluble and become concentrated as they move up the food chain.

Toxic metals

Many metals and their salts can be toxic to humans and many other organisms. Such metals include lead, cadmium, copper, silver, mercury, and many of the transuranic metals.

Radioactive materials

Radioactive materials emit ionizing radiation, which can be very harmful to living organisms. Even a short exposure to a high dose of radiation may have long-term adverse health consequences.

Exposure may occur from nuclear fallout when nuclear weapons are detonated or nuclear containment systems are compromised. During World War II, the United States Army Air Forces dropped atomic bombs on the Japanese cities of Hiroshima and Nagasaki, leading to contamination of food, land, and water. In the Soviet Union, a tank of radioactive waste at the Mayak industrial complex (otherwise known as Chelyabinsk-40 or Chelyabinsk-65) exploded in 1957. The resulting Kyshtym disaster was kept secret for several decades and is the third most serious nuclear accident ever recorded. At least 22 villages were exposed to radiation, and at least 10,000 people were displaced. In 1992, the former Soviet Union officially acknowledged the accident. The Soviet republics of Ukraine and Belarus also suffered when a reactor at the Chernobyl Nuclear Power Plant exploded and melted down in 1986. To this day, the city of Pripyat, the town of Chernobyl, and several smaller settlements remain largely abandoned because of contamination.

The Hanford Site in Washington State is a decommissioned nuclear production complex that produced plutonium for most of the 60,000 weapons in the U.S. nuclear arsenal. Radioactive and chemical waste released from Hanford remains a serious environmental concern.

A number of military accidents involving nuclear weapons have also resulted in radioactive contamination, for example the 1966 Palomares B-52 crash and the 1968 Thule Air Base B-52 crash.

CBRNs

CBRN is a catch-all acronym for chemical, biological, radiological, and nuclear. The term is used to describe a non-conventional terror threat that, if used by a nation, would be considered use of a weapon of mass destruction. This term is used primarily in the United Kingdom. Planning for the possibility of a CBRN event may be appropriate for certain high-risk or high-value facilities and governments. Examples include Saddam Hussein's poison gas attack on Halabja, the sarin gas attack on the Tokyo subway and the earlier sarin attack in Matsumoto, Japan, and the distribution of smallpox-laden blankets to Native Americans during Pontiac's War, a tactic proposed by Lord Amherst.