Technology is the application of knowledge for achieving practical goals in a reproducible way. The word technology can also mean the products resulting from such efforts, including both tangible tools such as utensils or machines, and intangible ones such as software. Technology plays a critical role in science, engineering, and everyday life.

Technological advancements have led to significant changes in society. The earliest known technology is the stone tool, used during prehistoric times, followed by the control of fire, which contributed to the growth of the human brain and the development of language during the Ice Age. The invention of the wheel in the Bronze Age allowed greater travel and the creation of more complex machines. More recent technological inventions, including the printing press, telephone, and the Internet, have lowered barriers to communication and ushered in the knowledge economy.

While technology contributes to economic development and improves human prosperity, it can also have negative impacts like pollution and resource depletion, and can cause social harms like technological unemployment resulting from automation. As a result, there are ongoing philosophical and political debates about the role and use of technology, the ethics of technology, and ways to mitigate its downsides.

Etymology

Technology is a term dating back to the early 17th century that meant 'systematic treatment' (from Greek Τεχνολογία, from the Greek: τέχνη, romanized: tékhnē, lit. 'craft, art' and -λογία, 'study, knowledge'). It is predated in use by the Ancient Greek word tékhnē, used to mean 'knowledge of how to make things', which encompassed activities like architecture.

Starting in the 19th century, continental Europeans began using the terms Technik (German) or technique (French) to refer to a 'way of doing', which included all technical arts, such as dancing, navigation, or printing, whether or not they required tools or instruments. At the time, Technologie (German and French) referred either to the academic discipline studying the "methods of arts and crafts", or to the political discipline "intended to legislate on the functions of the arts and crafts." Since the distinction between Technik and Technologie is absent in English, both were translated as technology. The term was previously uncommon in English and mostly referred to the academic discipline, as in the Massachusetts Institute of Technology.

In the 20th century, as a result of scientific progress and the Second Industrial Revolution, technology stopped being considered a distinct academic discipline and took on its current-day meaning: the systemic use of knowledge to practical ends.

History

Prehistoric

Tools were initially developed by hominids through observation and trial and error. Around 2 Mya (million years ago), they learned to make the first stone tools by hammering flakes off a pebble, forming a sharp hand axe. This practice was refined 75 kya (thousand years ago) into pressure flaking, enabling much finer work.

The discovery of fire was described by Charles Darwin as "possibly the greatest ever made by man". Archaeological, dietary, and social evidence point to "continuous [human] fire-use" at least 1.5 Mya. Fire, fueled with wood and charcoal, allowed early humans to cook their food to increase its digestibility, improving its nutrient value and broadening the number of foods that could be eaten. The cooking hypothesis proposes that the ability to cook promoted an increase in hominid brain size, though some researchers find the evidence inconclusive. Archaeological evidence of hearths was dated to 790 kya; researchers believe this is likely to have intensified human socialization and may have contributed to the emergence of language.

Other technological advances made during the Paleolithic era include clothing and shelter. No consensus exists on the approximate time of adoption of either technology, but archaeologists have found evidence of clothing 90-120 kya and shelter 450 kya. As the Paleolithic era progressed, dwellings became more sophisticated and elaborate; as early as 380 kya, humans were constructing temporary wood huts. Clothing, adapted from the fur and hides of hunted animals, helped humanity expand into colder regions; humans began to migrate out of Africa around 200 kya, initially moving to Eurasia.

Neolithic

The Neolithic Revolution (or First Agricultural Revolution) brought about an acceleration of technological innovation, and a consequent increase in social complexity. The invention of the polished stone axe was a major advance that allowed large-scale forest clearance and farming. The use of polished stone axes increased greatly in the Neolithic, although they were first used in the preceding Mesolithic in some areas such as Ireland. Agriculture fed larger populations, and the transition to sedentism allowed for the simultaneous raising of more children, as infants no longer needed to be carried around by nomads. Additionally, children could contribute labor to the raising of crops more readily than they could participate in hunter-gatherer activities.

With this increase in population and availability of labor came an increase in labor specialization. What triggered the progression from early Neolithic villages to the first cities, such as Uruk, and the first civilizations, such as Sumer, is not specifically known; however, the emergence of increasingly hierarchical social structures and specialized labor, of trade and war amongst adjacent cultures, and the need for collective action to overcome environmental challenges such as irrigation, are all thought to have played a role.

Continuing improvements led to the furnace and bellows and provided, for the first time, the ability to smelt and forge gold, copper, silver, and lead – native metals found in relatively pure form in nature. The advantages of copper tools over stone, bone and wooden tools were quickly apparent to early humans, and native copper was probably used from near the beginning of Neolithic times (about 10 kya). Native copper does not naturally occur in large amounts, but copper ores are quite common and some of them produce metal easily when burned in wood or charcoal fires. Eventually, the working of metals led to the discovery of alloys such as bronze and brass (about 4,000 BCE). The first use of iron alloys such as steel dates to around 1,800 BCE.

Ancient

After harnessing fire, humans discovered other forms of energy. The earliest known use of wind power is the sailing ship; the earliest record of a ship under sail is that of a Nile boat dating to around 7,000 BCE. From prehistoric times, Egyptians likely used the power of the annual flooding of the Nile to irrigate their lands, gradually learning to regulate much of it through purposely built irrigation channels and "catch" basins. The ancient Sumerians in Mesopotamia used a complex system of canals and levees to divert water from the Tigris and Euphrates rivers for irrigation.

Archaeologists estimate that the wheel was invented independently and concurrently in Mesopotamia (in present-day Iraq), the Northern Caucasus (Maykop culture), and Central Europe. Time estimates range from 5,500 to 3,000 BCE with most experts putting it closer to 4,000 BCE. The oldest artifacts with drawings depicting wheeled carts date from about 3,500 BCE. More recently, the oldest-known wooden wheel in the world was found in the Ljubljana Marsh of Slovenia.

The invention of the wheel revolutionized trade and war. It did not take long to discover that wheeled wagons could be used to carry heavy loads. The ancient Sumerians used a potter's wheel and may have invented it. A stone pottery wheel found in the city-state of Ur dates to around 3,429 BCE, and even older fragments of wheel-thrown pottery have been found in the same area. Fast (rotary) potters' wheels enabled early mass production of pottery, but it was the use of the wheel as a transformer of energy (through water wheels, windmills, and even treadmills) that revolutionized the application of nonhuman power sources. The first two-wheeled carts were derived from travois and were first used in Mesopotamia and Iran in around 3,000 BCE.

The oldest known constructed roadways are the stone-paved streets of the city-state of Ur, dating to c. 4,000 BCE, and timber roads leading through the swamps of Glastonbury, England, dating to around the same period. The first long-distance road, which came into use around 3,500 BCE, spanned 2,400 km from the Persian Gulf to the Mediterranean Sea, but was not paved and was only partially maintained. In around 2,000 BCE, the Minoans on the Greek island of Crete built a 50 km road leading from the palace of Gortyn on the south side of the island, through the mountains, to the palace of Knossos on the north side of the island. Unlike the earlier road, the Minoan road was completely paved.

Ancient Minoan private homes had running water. A bathtub virtually identical to modern ones was unearthed at the Palace of Knossos. Several Minoan private homes also had toilets, which could be flushed by pouring water down the drain. The ancient Romans had many public flush toilets, which emptied into an extensive sewage system. The primary sewer in Rome was the Cloaca Maxima; construction began on it in the sixth century BCE and it is still in use today.

The ancient Romans also had a complex system of aqueducts, which were used to transport water across long distances. The first Roman aqueduct was built in 312 BCE. The eleventh and final ancient Roman aqueduct was built in 226 CE. Put together, the Roman aqueducts extended over 450 km, but less than 70 km of this was above ground and supported by arches.

Pre-modern

Innovations continued through the Middle Ages with the introduction of silk production (in Asia and later Europe), the horse collar, and horseshoes. Simple machines (such as the lever, the screw, and the pulley) were combined into more complicated tools, such as the wheelbarrow, windmills, and clocks. A system of universities, including Oxford and Cambridge, developed and spread scientific ideas and practices.

The Renaissance era produced many innovations, including the introduction of the movable type printing press to Europe, which facilitated the communication of knowledge. Technology became increasingly influenced by science, beginning a cycle of mutual advancement.

Modern

Starting in the United Kingdom in the 18th century, the discovery of steam power set off the Industrial Revolution, which saw wide-ranging technological discoveries, particularly in the areas of agriculture, manufacturing, mining, metallurgy, and transport, and the widespread application of the factory system. This was followed a century later by the Second Industrial Revolution, which led to rapid scientific discovery, standardization, and mass production. New technologies were developed, including sewage systems, electricity, light bulbs, electric motors, railroads, automobiles, and airplanes. These technological advances led to significant developments in medicine, chemistry, physics, and engineering. They brought consequential social change, with the introduction of skyscrapers accompanied by rapid urbanization. Communication improved with the invention of the telegraph, the telephone, the radio, and television.

The 20th century brought a host of innovations. In physics, the discovery of nuclear fission in the Atomic Age led to both nuclear weapons and nuclear power. Computers were invented and later shifted from analog to digital in the Digital Revolution. Information technology, particularly optical fiber and optical amplifiers, led to the birth of the Internet, which ushered in the Information Age. The Space Age began with the launch of Sputnik 1 in 1957, followed by the launch of crewed missions to the Moon in the 1960s. Organized efforts to search for extraterrestrial intelligence have used radio telescopes to detect signs of technology use, or technosignatures, given off by alien civilizations. In medicine, new technologies were developed for diagnosis (CT, PET, and MRI scanning), treatment (like the dialysis machine, defibrillator, pacemaker, and a wide array of new pharmaceutical drugs), and research (like interferon cloning and DNA microarrays).

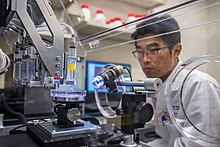

Complex manufacturing and construction techniques and organizations are needed to make and maintain more modern technologies, and entire industries have arisen to develop succeeding generations of increasingly complex tools. Modern technology increasingly relies on training and education: its designers, builders, maintainers, and users often require sophisticated general and specific training. Moreover, these technologies have become so complex that entire fields have developed to support them, including engineering, medicine, and computer science; and other fields have become more complex, such as construction, transportation, and architecture.

Impact

Technological change is the largest cause of long-term economic growth. Throughout human history, energy production was the main constraint on economic development, and new technologies allowed humans to significantly increase the amount of available energy. First came fire, which made edible a wider variety of foods, and made it less physically demanding to digest them. Fire also enabled smelting, and the use of tin, copper, and iron tools, used for hunting or tradesmanship. Then came the agricultural revolution: humans no longer needed to hunt or gather to survive, and began to settle in towns and cities, forming more complex societies, with militaries and more organized forms of religion.

Technologies have contributed to human welfare through increased prosperity, improved comfort and quality of life, and medical progress, but they can also disrupt existing social hierarchies, cause pollution, and harm individuals or groups.

Recent years have brought about a rise in social media's cultural prominence, with potential repercussions on democracy, and economic and social life. Early on, the internet was seen as a "liberation technology" that would democratize knowledge, improve access to education, and promote democracy. Modern research has turned to investigate the internet's downsides, including disinformation, polarization, hate speech, and propaganda.

Since the 1970s, technology's impact on the environment has been criticized, leading to a surge in investment in solar, wind, and other forms of clean energy.

Social

Jobs

Since the invention of the wheel, technologies have helped increase humans' economic output. Past automation has both substituted and complemented labor; machines replaced humans at some lower-paying jobs (for example in agriculture), but this was compensated by the creation of new, higher-paying jobs. Studies have found that computers did not create significant net technological unemployment. Because artificial intelligence is far more capable than computers and is still in its infancy, it is not known whether it will follow the same trend; the question has been debated at length among economists and policymakers. A 2017 survey found no clear consensus among economists on whether AI would increase long-term unemployment. According to the World Economic Forum's "The Future of Jobs Report 2020", AI is predicted to replace 85 million jobs worldwide and create 97 million new jobs by 2025. A study by MIT economist Daron Acemoglu covering the U.S. from 1990 to 2007 showed that the addition of one robot for every 1,000 workers decreased the employment-to-population ratio by 0.2%, or about 3.3 workers, and lowered wages by 0.42%. Concerns about technology replacing human labor, however, are long-standing. As US president Lyndon Johnson said in 1964 upon signing the National Commission on Technology, Automation, and Economic Progress bill, "Technology is creating both new opportunities and new obligations for us, opportunity for greater productivity and progress; obligation to be sure that no workingman, no family must pay an unjust price for progress."

Security

Growing reliance on technology has brought security and privacy concerns with it. Billions of people use online payment methods such as WeChat Pay, PayPal, Alipay, and many others to transfer money. Although security measures are in place, some criminals are able to bypass them. In March 2022, North Korea stole over $600 million worth of cryptocurrency from the owner of the game Axie Infinity and used Blender.io, a mixer that helped hide its cryptocurrency exchanges, to launder over $20.5 million of the proceeds. Because of this, the U.S. Treasury Department sanctioned Blender.io, the first time it had taken action against a mixer, in an attempt to crack down on North Korean hackers. The privacy of cryptocurrency has been debated. Although many customers like the privacy of cryptocurrency, many also argue that it needs more transparency and stability.

Environmental

Technology has had both negative and positive impacts on the environment, the positive ones usually being attempts to reverse earlier damage: the creation of pollution and the attempt to undo it, deforestation and its reversal, and oil spills. All of these have had a significant impact on the Earth's environment. As technology has advanced, so has its negative environmental impact: the release of greenhouse gases such as methane and carbon dioxide into the atmosphere intensifies the greenhouse effect, gradually heating the Earth and causing global warming.

Pollution

Pollution, the presence of contaminants in an environment that causes adverse effects, could have been present as early as the Inca Empire. The Inca used a lead sulfide flux in the smelting of ores, along with a wind-drafted clay kiln, which released lead into the atmosphere and the sediment of rivers.

Philosophy

Philosophy of technology is a branch of philosophy that studies the "practice of designing and creating artifacts", and the "nature of the things so created." It emerged as a discipline over the past two centuries, and has grown "considerably" since the 1970s. The humanities philosophy of technology is concerned with the "meaning of technology for, and its impact on, society and culture".

Initially, technology was seen as an extension of the human organism that replicated or amplified bodily and mental faculties. Marx framed it as a tool used by capitalists to oppress the proletariat, but believed that technology would be a fundamentally liberating force once it was "freed from societal deformations". Second-wave philosophers like Ortega later shifted their focus from economics and politics to "daily life and living in a techno-material culture," arguing that technology could oppress "even the members of the bourgeoisie who were its ostensible masters and possessors." Third-stage philosophers like Don Ihde and Albert Borgmann represent a turn toward de-generalization and empiricism, and consider how humans can learn to live with technology.

Early scholarship on technology was split between two arguments: technological determinism, and social construction. Technological determinism is the idea that technologies cause unavoidable social changes. It usually encompasses a related argument, technological autonomy, which asserts that technological progress follows a natural progression and cannot be prevented. Social constructivists argue that technologies follow no natural progression, and are shaped by cultural values, laws, politics, and economic incentives. Modern scholarship has shifted towards an analysis of sociotechnical systems, "assemblages of things, people, practices, and meanings", looking at the value judgments that shape technology.

Cultural critic Neil Postman distinguished tool-using societies from technological societies and from what he called "technopolies," societies that are dominated by an ideology of technological and scientific progress to the detriment of other cultural practices, values, and world views. Herbert Marcuse and John Zerzan suggest that technological society will inevitably deprive us of our freedom and psychological health.

Ethics

The ethics of technology is an interdisciplinary subfield of ethics that analyzes technology's ethical implications and explores ways to mitigate the potential negative impacts of new technologies. There is a broad range of ethical issues revolving around technology, from specific areas of focus affecting professionals working with technology to broader social, ethical, and legal issues concerning the role of technology in society and everyday life.

Prominent debates have surrounded genetically modified organisms, the use of robotic soldiers, algorithmic bias, and the issue of aligning AI behavior with human values.

Technology ethics encompasses several key fields. Bioethics looks at ethical issues surrounding biotechnologies and modern medicine, including cloning, human genetic engineering, and stem cell research. Computer ethics focuses on issues related to computing. Cyberethics explores internet-related issues like intellectual property rights, privacy, and censorship. Nanoethics examines issues surrounding the alteration of matter at the atomic and molecular level in various disciplines including computer science, engineering, and biology. Engineering ethics deals with the professional standards of engineers, including software engineers, and their moral responsibilities to the public.

A wide branch of technology ethics is concerned with the ethics of artificial intelligence: it includes robot ethics, which deals with ethical issues involved in the design, construction, use, and treatment of robots, as well as machine ethics, which is concerned with ensuring the ethical behavior of artificially intelligent agents. Within the field of AI ethics, significant yet-unsolved research problems include AI alignment (ensuring that AI behaviors are aligned with their creators' intended goals and interests) and the reduction of algorithmic bias. Some researchers have warned against the hypothetical risk of an AI takeover, and have advocated for the use of AI capability control in addition to AI alignment methods.

Other fields of ethics have had to contend with technology-related issues, including military ethics, media ethics, and educational ethics.

Futures studies

Futures studies is the systematic and interdisciplinary study of social and technological progress. It aims to quantitatively and qualitatively explore the range of plausible futures and to incorporate human values in the development of new technologies. More generally, futures researchers are interested in improving "the freedom and welfare of humankind". It relies on a thorough quantitative and qualitative analysis of past and present technological trends, and attempts to rigorously extrapolate them into the future. Science fiction is often used as a source of ideas. Futures research methodologies include survey research, modeling, statistical analysis, and computer simulations.

Existential risk

Existential risk researchers analyze risks that could lead to human extinction or civilizational collapse, and look for ways to build resilience against them. Relevant research centers include the Cambridge Centre for the Study of Existential Risk and the Stanford Existential Risk Initiative. Future technologies may contribute to the risks of artificial general intelligence, biological warfare, nuclear warfare, nanotechnology, anthropogenic climate change, global warming, or stable global totalitarianism, though technologies may also help us mitigate asteroid impacts and gamma-ray bursts. In 2019, philosopher Nick Bostrom introduced the notion of a vulnerable world, "one in which there is some level of technological development at which civilization almost certainly gets devastated by default", citing the risks of a pandemic caused by bioterrorists, or an arms race triggered by the development of novel armaments and the loss of mutual assured destruction. He invites policymakers to question the assumptions that technological progress is always beneficial, that scientific openness is always preferable, or that they can afford to wait until a dangerous technology has been invented before they prepare mitigations.

Emerging technologies

Emerging technologies are novel technologies whose development or practical applications are still largely unrealized. They include nanotechnology, biotechnology, robotics, 3D printing, blockchains, and artificial intelligence.

In 2005, futurist Ray Kurzweil claimed the next technological revolution would rest upon advances in genetics, nanotechnology, and robotics, with robotics being the most impactful of the three. Genetic engineering will allow far greater control over human biological nature through a process called directed evolution. Some thinkers believe that this may shatter our sense of self, and have called for renewed public debate exploring the issue more thoroughly; others fear that directed evolution could lead to eugenics or extreme social inequality. Nanotechnology will grant us the ability to manipulate matter "at the molecular and atomic scale", which could allow us to reshape ourselves and our environment in fundamental ways. Nanobots could be used within the human body to destroy cancer cells or form new body parts, blurring the line between biology and technology. Autonomous robots have undergone rapid progress, and are expected to replace humans at many dangerous tasks, including search and rescue, bomb disposal, firefighting, and war.

Estimates of the advent of artificial general intelligence vary, but half of machine learning experts surveyed in 2018 believed that AI will "accomplish every task better and more cheaply" than humans by 2063, and automate all human jobs by 2140. This expected technological unemployment has led to calls for increased emphasis on computer science education and debates about universal basic income. Political science experts predict that this could lead to a rise in extremism, while others see it as an opportunity to usher in a post-scarcity economy.

Movements

Appropriate technology

Some segments of the 1960s hippie counterculture grew to dislike urban living and developed a preference for locally autonomous, sustainable, and decentralized technology, termed appropriate technology. This later influenced hacker culture and technopaganism.

Technological utopianism

Technological utopianism refers to the belief that technological development is a moral good, which can and should bring about a utopia, that is, a society in which laws, governments, and social conditions serve the needs of all its citizens. Examples of techno-utopian goals include post-scarcity economics, life extension, mind uploading, cryonics, and the creation of artificial superintelligence. Major techno-utopian movements include transhumanism and singularitarianism.

The transhumanism movement is founded upon the "continued evolution of human life beyond its current human form" through science and technology, informed by "life-promoting principles and values." The movement gained wider popularity in the early 21st century.

Singularitarians believe that machine superintelligence will "accelerate technological progress" by orders of magnitude and "create even more intelligent entities ever faster", which may lead to a pace of societal and technological change that is "incomprehensible" to us. This event horizon is known as the technological singularity.

Major figures of techno-utopianism include Ray Kurzweil and Nick Bostrom. Techno-utopianism has attracted both praise and criticism from progressive, religious, and conservative thinkers.

Anti-technology backlash

Technology's central role in our lives has drawn concerns and backlash. The backlash against technology is not a uniform movement and encompasses many heterogeneous ideologies.

The earliest known revolt against technology was Luddism, a pushback against early automation in textile production. Automation had resulted in a need for fewer workers, a process known as technological unemployment.

Between the 1970s and 1990s, American terrorist Ted Kaczynski carried out a series of bombings across America and published the Unabomber Manifesto denouncing technology's negative impacts on nature and human freedom. The essay resonated with a large part of the American public. It was partly inspired by Jacques Ellul's The Technological Society.

Some subcultures, like the off-the-grid movement, advocate a withdrawal from technology and a return to nature. The ecovillage movement seeks to reestablish harmony between technology and nature.

Relation to science and engineering

Engineering is the process by which technology is developed. It often requires problem-solving under strict constraints. Technological development is "action-oriented", while scientific knowledge is fundamentally explanatory. Polish philosopher Henryk Skolimowski framed it like so: "science concerns itself with what is, technology with what is to be."

The direction of causality between scientific discovery and technological innovation has been debated by scientists, philosophers and policymakers. Because innovation is often undertaken at the edge of scientific knowledge, most technologies are not derived from scientific knowledge, but instead from engineering, tinkering and chance. For example, in the 1940s and 1950s, when knowledge of turbulent combustion or fluid dynamics was still crude, jet engines were invented through "running the device to destruction, analyzing what broke [...] and repeating the process". Scientific explanations often follow technological developments rather than preceding them. Many discoveries also arose from pure chance, like the discovery of penicillin as a result of accidental lab contamination. Since the 1960s, the assumption that government funding of basic research would lead to the discovery of marketable technologies has lost credibility. Probabilist Nassim Taleb argues that national research programs that implement the notions of serendipity and convexity through frequent trial and error are more likely to lead to useful innovations than research that aims to reach specific outcomes.

Despite this, modern technology is increasingly reliant on deep, domain-specific scientific knowledge. In 1975, there was an average of one citation of scientific literature in every three patents granted in the U.S.; by 1989, this had increased to an average of one citation per patent. The average was skewed upwards by patents related to the pharmaceutical industry, chemistry, and electronics. A 2021 analysis shows that patents that are based on scientific discoveries are on average 26% more valuable than equivalent non-science-based patents.

Other animal species

The use of basic technology is also a feature of non-human animal species. Tool use was once considered a defining characteristic of the genus Homo. This view was supplanted after the discovery of evidence of tool use among chimpanzees and other primates, dolphins, and crows. For example, researchers have observed wild chimpanzees using basic foraging tools, pestles, and levers, using leaves as sponges, and using tree bark or vines as probes to fish for termites. West African chimpanzees use stone hammers and anvils for cracking nuts, as do capuchin monkeys of Boa Vista, Brazil. Tool use is not the only form of animal technology use; for example, beaver dams, built with wooden sticks or large stones, are a technology with "dramatic" impacts on river habitats and ecosystems.

Popular culture

The relationship of humanity with technology has been explored in science-fiction literature, for example in Brave New World, A Clockwork Orange, Nineteen Eighty-Four, Isaac Asimov's essays, and movies like Minority Report, Total Recall, Gattaca, and Inception. It has spawned the dystopian and futuristic cyberpunk genre, which juxtaposes futuristic technology with societal collapse, dystopia or decay. Notable cyberpunk works include William Gibson's novel Neuromancer and movies like Blade Runner and The Matrix.