Isotopic labeling (or isotopic labelling) is a technique used to track the passage of an isotope (an atom with a detectable variation in neutron count) through a chemical reaction, a metabolic pathway, or a biological cell. The reactant is 'labeled' by replacing one or more specific atoms with their isotopes. The reactant is then allowed to undergo the reaction. The position of the isotopes in the products is measured to determine the sequence the isotopic atom followed in the reaction or the cell's metabolic pathway. The nuclides used in isotopic labeling may be stable nuclides or radionuclides. In the latter case, the labeling is called radiolabeling.

In isotopic labeling, there are multiple ways to detect the presence of labeling isotopes: through their mass, vibrational mode, or radioactive decay. Mass spectrometry detects the difference in an isotope's mass, while infrared spectroscopy detects the difference in the isotope's vibrational modes. Nuclear magnetic resonance detects atoms with different gyromagnetic ratios. Radioactive decay can be detected through an ionization chamber or autoradiographs of gels.

An example of the use of isotopic labeling is the study of phenol (C6H5OH) in water by replacing common hydrogen (protium) with deuterium (deuterium labeling). Upon adding phenol to deuterated water (water containing D2O in addition to the usual H2O), deuterium is observed to replace hydrogen in phenol's hydroxyl group (resulting in C6H5OD), indicating that phenol readily undergoes hydrogen-exchange reactions with water. Only the hydroxyl group is affected, indicating that the other five hydrogen atoms (those on the aromatic ring) do not participate in the exchange reactions.

Isotopic tracer

An isotopic tracer (also "isotopic marker" or "isotopic label") is used in chemistry and biochemistry to help understand chemical reactions and interactions. In this technique, one or more of the atoms of the molecule of interest is replaced by an atom of the same chemical element, but of a different isotope (like a radioactive isotope used in radioactive tracing). Because the labeled atom has the same number of protons, it will behave in almost exactly the same way as its unlabeled counterpart and, with few exceptions, will not interfere with the reaction under investigation. The difference in the number of neutrons, however, means that it can be detected separately from the other atoms of the same element.

Nuclear magnetic resonance (NMR) and mass spectrometry (MS) are used to investigate the mechanisms of chemical reactions. NMR and MS detect isotopic differences, which allows the position of the labeled atoms in the products' structure to be determined. With information on the positioning of the isotopic atoms in the products, the reaction pathway by which the initial metabolites are converted into the products can be determined. Radioactive isotopes can be detected using autoradiographs of gels in gel electrophoresis. The radiation emitted by compounds containing the radioactive isotopes darkens a piece of photographic film, recording the position of the labeled compounds relative to one another in the gel.

Isotope tracers are commonly used in the form of isotope ratios. By studying the ratio between two isotopes of the same element, we avoid effects involving the overall abundance of the element, which usually swamp the much smaller variations in isotopic abundances. Isotopic tracers are some of the most important tools in geology because they can be used to understand complex mixing processes in earth systems. Further discussion of the application of isotopic tracers in geology is covered under the heading of isotope geochemistry.
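Two routine calculations behind this use of isotope ratios can be sketched as follows: expressing a measured ratio in delta notation (the per mil deviation from a reference standard) and estimating the fraction contributed by one of two end members in a mixture. This is a minimal sketch; the linear mixing equation is only approximately valid and assumes both end members carry the same concentration of the element, and the delta values in the example are hypothetical.

```python
def delta_permil(r_sample: float, r_standard: float) -> float:
    """Deviation of an isotope ratio from a reference standard, in per mil."""
    return (r_sample / r_standard - 1.0) * 1000.0


def mixing_fraction(delta_mix: float, delta_a: float, delta_b: float) -> float:
    """Fraction of end member A in a two-component mixture.

    Linear in delta values; this holds (approximately) only when both end
    members contain the same concentration of the element.
    """
    if delta_a == delta_b:
        raise ValueError("end members must have different isotopic compositions")
    return (delta_mix - delta_b) / (delta_a - delta_b)


# Hypothetical example: a mixture at -10 per mil between end members at -20 and 0.
print(mixing_fraction(-10.0, -20.0, 0.0))  # 0.5
```

Working with ratios (and deltas derived from them) rather than absolute amounts is what removes the effect of the element's overall abundance mentioned above.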

Isotopic tracers are usually subdivided into two categories: stable isotope tracers and radiogenic isotope tracers. Stable isotope tracers involve only non-radiogenic isotopes, and their variations are usually mass-dependent. In theory, any element with two or more stable isotopes can be used as an isotopic tracer. However, the most commonly used stable isotope tracers involve relatively light isotopes, which readily undergo fractionation in natural systems. See also isotopic signature. A radiogenic isotope tracer involves an isotope produced by radioactive decay, which is usually in a ratio with a non-radiogenic isotope (whose abundance in the earth does not vary due to radioactive decay).

Stable isotope labeling

Stable isotope labeling involves the use of non-radioactive isotopes that can act as tracers to model several chemical and biochemical systems. The chosen isotope acts as a label on the compound that can be identified through nuclear magnetic resonance (NMR) and mass spectrometry (MS). Some of the most common stable isotopes are 2H, 13C, and 15N, which can further be incorporated into NMR solvents, amino acids, nucleic acids, lipids, common metabolites and cell growth media. The compounds produced using stable isotopes are specified either by the percentage of labeled isotopes (e.g. 30% uniformly labeled 13C glucose contains a mixture that is 30% labeled with the 13C isotope and 70% carbon at natural abundance) or by the specifically labeled carbon positions on the compound (e.g. 1-13C glucose, which is labeled at the first carbon position of glucose).

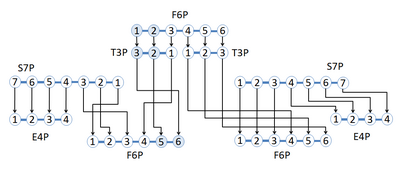

A network of reactions adapted from the glycolysis pathway and the pentose phosphate pathway is shown, in which the labeled carbon isotope rearranges to different carbon positions throughout the network. The network starts with fructose 6-phosphate (F6P), which has 6 carbon atoms with a 13C label at carbon positions 1 and 2. 1,2-13C F6P gives rise to two molecules of glyceraldehyde 3-phosphate (a triose 3-phosphate, T3P): one 2,3-13C T3P and one unlabeled T3P. The 2,3-13C T3P can then react with sedoheptulose 7-phosphate (S7P) to form an unlabeled erythrose 4-phosphate (E4P) and a 5,6-13C F6P. The unlabeled T3P reacts with S7P to form unlabeled products. The figure demonstrates how stable isotope labeling with position-specific labeled compounds reveals the rearrangement of carbon atoms through reactions.
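The carbon bookkeeping in this network can be reproduced with a small atom-mapping sketch. It assumes the standard textbook atom transitions: phosphorylation of F6P to fructose 1,6-bisphosphate moves no carbons, aldolase gives dihydroxyacetone phosphate (DHAP) from C1 to C3 and glyceraldehyde 3-phosphate from C4 to C6, triose phosphate isomerase reverses the DHAP numbering, and transaldolase transfers C1 to C3 of S7P onto glyceraldehyde 3-phosphate. The boolean-tuple representation and the function names are illustrative choices, not part of any library.

```python
from typing import List, Tuple

# Each molecule is a tuple of booleans, one per carbon (index 0 = C1);
# True marks a 13C-labeled position.
Labels = Tuple[bool, ...]


def aldolase(f6p: Labels) -> Tuple[Labels, Labels]:
    """F6P (via fructose 1,6-bisphosphate; phosphorylation moves no carbons)
    -> DHAP from C1-C3 and glyceraldehyde 3-phosphate from C4-C6."""
    return f6p[0:3], f6p[3:6]


def triose_phosphate_isomerase(dhap: Labels) -> Labels:
    """DHAP -> G3P: DHAP C1 becomes G3P C3 and DHAP C3 becomes G3P C1."""
    return dhap[::-1]


def transaldolase(s7p: Labels, g3p: Labels) -> Tuple[Labels, Labels]:
    """S7P + G3P -> E4P + F6P: C1-C3 of S7P are transferred onto G3P.
    New F6P = S7P C1-C3 + G3P C1-C3; E4P = S7P C4-C7."""
    return s7p[3:7], s7p[0:3] + g3p


def labeled_positions(molecule: Labels) -> List[int]:
    """1-based carbon positions that carry 13C."""
    return [i + 1 for i, is_13c in enumerate(molecule) if is_13c]


f6p = (True, True, False, False, False, False)  # 1,2-13C F6P
dhap, g3p_unlabeled = aldolase(f6p)
g3p_labeled = triose_phosphate_isomerase(dhap)
print(labeled_positions(g3p_labeled), labeled_positions(g3p_unlabeled))  # [2, 3] []

s7p = (False,) * 7  # unlabeled S7P
e4p, new_f6p = transaldolase(s7p, g3p_labeled)
print(labeled_positions(e4p), labeled_positions(new_f6p))  # [] [5, 6]
```

Under these assumed mappings, the sketch reproduces the 2,3-13C T3P, the unlabeled E4P and the 5,6-13C F6P described for the figure.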

Metabolic flux analysis using stable isotope labeling

Metabolic flux analysis (MFA) using stable isotope labeling is an important tool for explaining the flux of certain elements through the metabolic pathways and reactions within a cell. An isotopic label is fed to the cell, and the cell is then allowed to grow on the labeled feed. For stationary metabolic flux analysis, the cell must reach a steady state (the isotopes entering and leaving the cell remain constant with time) or a quasi-steady state (steady state is maintained for a given period of time). The isotope pattern of the output metabolite is then determined. This output isotope pattern can be used to find the magnitude of the flux, that is, the rate of conversion of reactants to products, through each reaction.

The figure demonstrates how different labels can be used to determine the flux through a certain reaction. Assume the original metabolite, a three-carbon compound, can either split into a two-carbon metabolite and a one-carbon metabolite in one reaction and then recombine, or remain a three-carbon metabolite. Suppose the reaction is provided with two isotopically distinct forms of the metabolite in equal proportion: one completely labeled (blue circles), commonly known as uniformly labeled, and one completely unlabeled (white circles). The pathway down the left side of the diagram does not change the metabolites, while the right side shows the split and recombination. As shown, if the metabolite only takes the left pathway, it remains in a 50–50 ratio of uniformly labeled to unlabeled metabolite. If the metabolite only takes the right pathway, new labeling patterns can occur, all in equal proportion. The figure shows the proportions for the case in which half of the metabolite takes the left side and half the right; other proportions arise depending on how much of the original metabolite follows each side. These patterns of labeled and unlabeled atoms in one compound represent isotopomers. By measuring the isotopomer distribution of the differently labeled metabolites, the flux through each reaction can be determined.
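The proportions in this example can be computed directly. The sketch below assumes that on the right-hand route every molecule splits into a C1–C2 fragment and a C3 fragment and that fragments recombine at random; the string encoding of isotopomers ("110" means C1 and C2 labeled) and the function names are hypothetical choices for illustration.

```python
from collections import defaultdict
from fractions import Fraction
from typing import Dict

# An isotopomer is written as a string of 0/1 per carbon (C1 first);
# "110" means C1 and C2 are 13C and C3 is unlabeled.
Distribution = Dict[str, Fraction]


def split_and_recombine(pool: Distribution) -> Distribution:
    """Right-hand route: each molecule splits into a C1-C2 and a C3 fragment,
    and fragments recombine at random (assumed independent)."""
    two_carbon: Dict[str, Fraction] = defaultdict(Fraction)
    one_carbon: Dict[str, Fraction] = defaultdict(Fraction)
    for pattern, frac in pool.items():
        two_carbon[pattern[:2]] += frac
        one_carbon[pattern[2]] += frac
    out: Dict[str, Fraction] = defaultdict(Fraction)
    for head, p_head in two_carbon.items():
        for tail, p_tail in one_carbon.items():
            out[head + tail] += p_head * p_tail
    return dict(out)


def product_distribution(pool: Distribution, f_split: Fraction) -> Distribution:
    """Mix the unchanged (left) and split-recombined (right) routes."""
    out: Dict[str, Fraction] = defaultdict(Fraction)
    for pattern, frac in pool.items():
        out[pattern] += (1 - f_split) * frac
    for pattern, frac in split_and_recombine(pool).items():
        out[pattern] += f_split * frac
    return dict(out)


feed = {"111": Fraction(1, 2), "000": Fraction(1, 2)}  # 50:50 uniformly labeled / unlabeled
for f in (Fraction(0), Fraction(1, 2), Fraction(1)):
    dist = product_distribution(feed, f)
    print(f, {k: str(v) for k, v in sorted(dist.items()) if v})
```

With an even split, "111" and "000" each make up 3/8 of the product and the new isotopomers "110" and "001" each make up 1/8. Under these assumptions the fraction of "110" equals f_split/4, which is how a measured isotopomer fraction can be turned back into a flux split.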

MFA combines the data harvested from isotope labeling with the stoichiometry of each reaction, constraints, and an optimization procedure to resolve a flux map. Irreversible reactions provide the thermodynamic constraints (their fluxes cannot be negative) needed to find the fluxes. A matrix is constructed that contains the stoichiometry of the reactions. The intracellular fluxes are estimated by an iterative method in which simulated fluxes are plugged into the stoichiometric model and the resulting simulated labeling patterns are compared with the measured ones. The fitted fluxes are displayed in a flux map, which shows the rate at which reactants are converted to products in each reaction. In most flux maps, the thicker the arrow, the larger the flux through the reaction.
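A toy version of this procedure, continuing the three-carbon example, is sketched below. The network, the "measured" labeling data and the uptake rate are all hypothetical; a grid search stands in for the nonlinear optimizers that real MFA software uses, and only the two conversion reactions are treated as irreversible.

```python
import numpy as np

# Hypothetical toy network: metabolite A is taken up at a measured rate and
# converted to product P either unchanged ("direct") or via split-and-recombine.
# Stoichiometric matrix: rows = balanced metabolites, columns = reactions
#                 v_uptake  v_direct  v_split
S = np.array([[1.0, -1.0, -1.0]])  # steady-state balance on A: S @ v = 0


def simulate_labeling(f_split: float) -> np.ndarray:
    """Simulated fractions of P isotopomers [111, 110, 001, 000] for a 50:50
    feed of uniformly labeled and unlabeled A (random recombination assumed)."""
    unchanged = np.array([0.5, 0.0, 0.0, 0.5])
    scrambled = np.array([0.25, 0.25, 0.25, 0.25])
    return (1.0 - f_split) * unchanged + f_split * scrambled


def fit_split_fraction(measured: np.ndarray, steps: int = 1001) -> float:
    """Try candidate flux splits and keep the one whose simulated labeling
    best matches the measurement (least squares)."""
    candidates = np.linspace(0.0, 1.0, steps)
    errors = [np.sum((simulate_labeling(f) - measured) ** 2) for f in candidates]
    return float(candidates[int(np.argmin(errors))])


def solve_fluxes(v_uptake: float, f_split: float) -> np.ndarray:
    """Combine the stoichiometric balance with the labeling-derived split
    v_split = f_split * (v_direct + v_split) and the measured uptake."""
    A = np.vstack([
        S,
        [0.0, -f_split, 1.0 - f_split],  # labeling constraint
        [1.0, 0.0, 0.0],                 # measured uptake rate
    ])
    b = np.array([0.0, 0.0, v_uptake])
    v = np.linalg.solve(A, b)
    if np.any(v[1:] < -1e-9):  # both conversion steps treated as irreversible
        raise ValueError("an irreversible reaction would need a negative flux")
    return v


measured = np.array([0.375, 0.125, 0.125, 0.375])  # hypothetical measurement
f = fit_split_fraction(measured)
print(f, solve_fluxes(10.0, f))
```

The flux vector returned by `solve_fluxes` is what a flux map would draw, one arrow per column of `S`.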

Isotope labeling measuring techniques

Any technique that can distinguish between isotopomers can be used. Two primary methods, nuclear magnetic resonance (NMR) and mass spectrometry (MS), have been developed for measuring isotopomers in stable isotope labeling.

Proton NMR was the first technique used for 13C-labeling experiments. Using this method, each protonated carbon position inside a particular metabolite pool can be observed separately from the other positions. This gives the fraction of molecules labeled at that specific position (the positional enrichment). The limitation of proton NMR is that a metabolite with n carbon atoms yields at most n positional enrichment values, which is only a small fraction of the total isotopomer information (such a metabolite has 2^n possible isotopomers). Although proton NMR labeling is limited in this way, pure proton NMR experiments are much easier to evaluate than experiments with more isotopomer information.
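The information loss can be shown with two hypothetical isotopomer mixtures that are clearly different yet give identical positional enrichments. This minimal sketch treats enrichment simply as the fraction of molecules with 13C at each position; the string encoding and function name are illustrative.

```python
from typing import Dict, List

# Isotopomers as 0/1 strings (C1 first) mapped to their relative amounts.
Isotopomers = Dict[str, float]


def positional_enrichment(isotopomers: Isotopomers) -> List[float]:
    """Fraction of molecules carrying 13C at each carbon position, i.e. the
    n values that position-resolved proton NMR can report."""
    total = sum(isotopomers.values())
    n = len(next(iter(isotopomers)))
    return [
        sum(amount for pattern, amount in isotopomers.items() if pattern[i] == "1") / total
        for i in range(n)
    ]


# Two different hypothetical isotopomer mixtures of a 3-carbon metabolite.
mix_a = {"111": 1.0, "000": 1.0}
mix_b = {"100": 1.0, "011": 1.0}
print(positional_enrichment(mix_a))  # [0.5, 0.5, 0.5]
print(positional_enrichment(mix_b))  # [0.5, 0.5, 0.5]
print(2 ** 3, "possible isotopomers vs", 3, "enrichment values")
```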

In addition to proton NMR, 13C NMR techniques allow a more detailed view of the distribution of the isotopomers. A labeled carbon atom produces different splitting patterns (from 13C–13C spin coupling) depending on the labeling state of its direct neighbors in the molecule. A singlet peak emerges if the neighboring carbon atoms are not labeled. A doublet peak emerges if only one neighboring carbon atom is labeled. The size of the doublet splitting depends on the functional group of the neighboring carbon atom. If two neighboring carbon atoms are labeled, a doublet of doublets appears, which may degenerate into a triplet if the two doublet splittings are equal.
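The peak patterns described above follow from first-order splitting rules: each labeled neighbor splits every existing line in two, separated by its coupling constant J. The sketch below applies that rule; the J values are illustrative only, not measured constants for any particular metabolite.

```python
from collections import Counter
from typing import Dict, List

NAMES = {1: "singlet", 2: "doublet", 3: "triplet", 4: "doublet of doublets"}


def multiplet(labeled_neighbor_j_hz: List[float]) -> Dict[float, int]:
    """First-order 13C multiplet of a labeled carbon.

    Pass the coupling constant J (Hz) of every *labeled* directly bonded
    neighbor; unlabeled neighbors do not split the signal. Returns line
    position (Hz, relative to the chemical shift) -> relative intensity.
    """
    lines = Counter({0.0: 1})
    for j in labeled_neighbor_j_hz:
        split: Counter = Counter()
        for position, intensity in lines.items():
            split[position - j / 2] += intensity
            split[position + j / 2] += intensity
        lines = split
    return dict(sorted(lines.items()))


# Illustrative coupling constants only (not measured values).
for couplings in ([], [40.0], [40.0, 55.0], [40.0, 40.0]):
    lines = multiplet(couplings)
    print(couplings, NAMES.get(len(lines), f"{len(lines)} lines"), lines)
```

With two equal couplings, the two inner lines of the doublet of doublets coincide, giving the 1:2:1 triplet.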

A drawback of using NMR techniques for metabolic flux analysis is that it is a rather specialized discipline, different from other NMR applications. An NMR spectrometer may not be directly available to all research teams. Optimizing the NMR measurement parameters and properly analyzing peak structures requires a skilled NMR specialist. Certain metabolites may also require specialized measurement procedures to obtain additional isotopomer data. In addition, specially adapted software tools are needed to determine peak areas precisely and to decompose overlapping singlet, doublet, and triplet peaks.

Mass spectrometry (MS) is another method, one that is more widely applicable and more sensitive than NMR for metabolic flux analysis experiments. MS instruments are available in different variants. Unlike two-dimensional nuclear magnetic resonance (2D-NMR), MS instruments work directly with the hydrolysate.

In gas chromatography-mass spectrometry (GC-MS), the MS is coupled to a gas chromatograph to separate the compounds of the hydrolysate. The compounds eluting from the GC column are then ionized and simultaneously fragmented. The benefit of GC-MS is that not only the mass isotopomers of the molecular ion are measured, but also the mass isotopomer spectra of several fragments, which significantly increases the measured information.

In liquid chromatography-mass spectrometry (LC-MS), the GC is replaced with a liquid chromatograph. The main difference is that chemical derivatization is not necessary. Applications of LC-MS to MFA, however, are rare.

In each case, MS instruments separate a particular isotopomer distribution by molecular weight. All isotopomers of a particular metabolite that contain the same number of labeled carbon atoms are collected in one peak signal. Because every isotopomer contributes to exactly one peak in the MS spectrum, the percentage value can then be calculated for each peak, yielding the mass isotopomer fraction. For a metabolite with n carbon atoms, n+1 measurements are produced. After normalization (the fractions sum to one), exactly n informative mass isotopomer quantities remain.
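The collapse from positional isotopomers to mass isotopomers can be sketched as follows. Only carbon labeling is considered here (natural abundance of other elements is ignored), and the isotopomer amounts in the example are hypothetical.

```python
from typing import Dict, List


def mass_isotopomer_fractions(isotopomers: Dict[str, float]) -> List[float]:
    """Collapse positional isotopomers (0/1 strings, C1 first) into the n+1
    mass isotopomer fractions M+0 ... M+n that an MS peak cluster reports.
    Only carbon labeling is considered; natural abundance of other elements
    is ignored."""
    n = len(next(iter(isotopomers)))
    peaks = [0.0] * (n + 1)
    for pattern, amount in isotopomers.items():
        peaks[pattern.count("1")] += amount  # every isotopomer lands in exactly one peak
    total = sum(peaks)
    return [p / total for p in peaks]


# Hypothetical 3-carbon metabolite: "100" and "010" fall into the same M+1 peak.
sample = {"000": 5.0, "100": 2.0, "010": 2.0, "110": 1.0}
mid = mass_isotopomer_fractions(sample)
print(mid)  # [0.5, 0.4, 0.1, 0.0]
```

The four fractions sum to one, so only three of them carry independent information, matching the n informative quantities for n = 3.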

A drawback of MS techniques is that, for gas chromatography, the sample must be prepared by chemical derivatization in order to obtain molecules volatile enough to pass through the GC column. Numerous compounds are used to derivatize samples. N,N-Dimethylformamide dimethyl acetal (DMFDMA) and N-(tert-butyldimethylsilyl)-N-methyltrifluoroacetamide (MTBSTFA) are two examples of compounds that have been used to derivatize amino acids.

In addition, isotope effects can be strong enough to affect the retention time of differently labeled isotopomers in the GC column. Overloading of the GC column must also be prevented.

Lastly, the natural abundance of isotopes of elements other than carbon also disturbs the mass isotopomer spectrum. For example, each oxygen atom in the molecule might also be present as the 17O or 18O isotope. A more significant effect comes from silicon, whose heavy isotopes 29Si and 30Si have relatively high natural abundances (roughly 4.7% and 3.1%). Si is introduced by the silylating derivatizing agents used in MS techniques.
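The size of this disturbance can be estimated by convolving the natural isotope distributions of every atom in a molecule or fragment. The sketch below uses approximate, rounded natural abundances and a hypothetical formula dictionary; it only computes the natural-abundance background and does not perform the correction itself.

```python
from typing import Dict, List

# Approximate natural isotopic abundances, listed by mass shift (M+0, M+1, M+2).
NATURAL_ABUNDANCE = {
    "C": [0.9893, 0.0107],
    "H": [0.999885, 0.000115],
    "N": [0.99636, 0.00364],
    "O": [0.99757, 0.00038, 0.00205],
    "Si": [0.92223, 0.04685, 0.03092],
}


def convolve(a: List[float], b: List[float]) -> List[float]:
    """Distribution of the summed mass shift of two independent parts."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def natural_mid(formula: Dict[str, int], length: int = 4) -> List[float]:
    """Mass isotopomer distribution (M+0, M+1, ...) of an *unlabeled* molecule
    or fragment caused by natural abundance alone."""
    dist = [1.0]
    for element, count in formula.items():
        for _ in range(count):
            dist = convolve(dist, NATURAL_ABUNDANCE[element])
    return (dist + [0.0] * length)[:length]


print("one O :", natural_mid({"O": 1}))
print("one Si:", natural_mid({"Si": 1}))
```

A correction procedure would then remove this background from the measured spectrum, for example by solving a linear system built from such distributions. That step is not shown here.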

Radioisotopic labeling

Radioisotopic labeling is a technique for tracking the passage of a sample of substance through a system. The substance is "labeled" by including radionuclides in its chemical composition. When these decay, their presence can be determined by detecting the radiation emitted by them. Radioisotopic labeling is a special case of isotopic labeling.

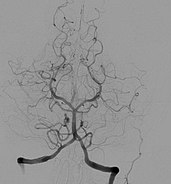

For these purposes, a particularly useful type of radioactive decay is positron emission. When a positron collides with an electron, the two annihilate and release two high-energy (511 keV) photons traveling in diametrically opposite directions. If the positron is produced within a solid object, it is likely to do this before traveling more than a millimeter. If both photons are detected, the decay event must lie on the line connecting the two detection points, and combining many such lines allows the location of the source to be determined very precisely.
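A minimal two-dimensional sketch of this localization follows. Each detected photon pair defines a line between two detectors, and for a point source the lines from different annihilation events cross at the source. The detector coordinates are hypothetical. Real positron emission tomography reconstructs images from very many events using statistical methods rather than a single intersection.

```python
from typing import Tuple

Point = Tuple[float, float]


def line_intersection(p1: Point, p2: Point, q1: Point, q2: Point) -> Point:
    """Intersection of the line through p1, p2 with the line through q1, q2."""
    (x1, y1), (x2, y2) = p1, p2
    (x3, y3), (x4, y4) = q1, q2
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(denom) < 1e-12:
        raise ValueError("lines of response are parallel")
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    x = (a * (x3 - x4) - (x1 - x2) * b) / denom
    y = (a * (y3 - y4) - (y1 - y2) * b) / denom
    return (x, y)


# Two hypothetical annihilation events from the same point source; each pair of
# coordinates is where the two back-to-back photons hit the detectors.
event_1 = ((-10.0, 2.0), (10.0, 2.0))
event_2 = ((1.0, -10.0), (1.0, 10.0))
print(line_intersection(*event_1, *event_2))  # (1.0, 2.0)
```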

Strictly speaking, radioisotopic labeling includes only cases where radioactivity is artificially introduced by experimenters, but some natural phenomena allow similar analysis to be performed. In particular, radiometric dating uses a closely related principle.

Applications

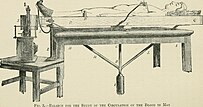

Applications in human mineral nutrition research

The use of stable isotope tracers to study mineral nutrition and metabolism in humans was first reported in the 1960s. While radioisotopes had been used in human nutrition research for several decades prior, stable isotopes presented a safer option, especially in subjects for whom there is elevated concern about radiation exposure, e.g. pregnant and lactating women and children. Other advantages offered by stable isotopes include the ability to study elements having no suitable radioisotopes and to study long-term tracer behavior. Thus the use of stable isotopes became commonplace with the increasing availability of isotopically enriched materials and inorganic mass spectrometers. The use of stable isotopes instead of radioisotopes does have several drawbacks: larger quantities of tracer are required, which can perturb the naturally existing mineral pool; analytical sample preparation is more complex and mass spectrometry instrumentation more costly; and the presence of tracer in whole bodies or particular tissues cannot be measured externally. Nonetheless, the advantages have prevailed, making stable isotopes the standard in human studies.

Most of the minerals that are essential for human health and of particular interest to nutrition researchers have stable isotopes, some well-suited as biological tracers because of their low natural abundance. Iron, zinc, calcium, copper, magnesium, selenium and molybdenum are among the essential minerals having stable isotopes to which isotope tracer methods have been applied. Iron, zinc and calcium in particular have been extensively studied.

Aspects of mineral nutrition/metabolism that are studied include absorption (from the gastrointestinal tract into the body), distribution, storage, excretion and the kinetics of these processes. Isotope tracers are administered to subjects orally (with or without food, or with a mineral supplement) and/or intravenously. Isotope enrichment is then measured in blood plasma, erythrocytes, urine and/or feces. Enrichment has also been measured in breast milk and intestinal contents. Tracer experiment design sometimes differs between minerals due to differences in their metabolism. For example, iron absorption is usually determined from incorporation of tracer in erythrocytes whereas zinc or calcium absorption is measured from tracer appearance in plasma, urine or feces. The administration of multiple isotope tracers in a single study is common, permitting the use of more reliable measurement methods and simultaneous investigations of multiple aspects of metabolism.

The measurement of mineral absorption from the diet, often conceived of as bioavailability, is the most common application of isotope tracer methods to nutrition research. Such studies investigate how absorption is influenced by type of food (e.g. plant vs animal source, breast milk vs formula), other components of the diet (e.g. phytate), disease and metabolic disorders (e.g. environmental enteric dysfunction), the reproductive cycle, quantity of mineral in diet, chronic mineral deficiency, subject age and homeostatic mechanisms. When results from such studies are available for a mineral, they may serve as a basis for estimating the human physiological and dietary requirements of the mineral.

When tracer is administered with food for the purpose of observing mineral absorption and metabolism, it may be in the form of an intrinsic or extrinsic label. An intrinsic label is an isotope that has been introduced into the food during its production, thus enriching the natural mineral content of the food, whereas extrinsic labeling refers to the addition of tracer isotope to the food during the study. Because it is a very time-consuming and expensive approach, intrinsic labeling is not routinely used. Studies comparing measurements of absorption using intrinsic and extrinsic labeling of various foods have generally demonstrated good agreement between the two labeling methods, supporting the hypothesis that extrinsic and natural minerals are handled similarly in the human gastrointestinal tract.

Enrichment is quantified from the measurement of isotope ratios, the ratio of the tracer isotope to a reference isotope, by mass spectrometry. Multiple definitions and calculations of enrichment have been adopted by different researchers. Calculations of enrichment become more complex when multiple tracers are used simultaneously. Because enriched isotope preparations are never isotopically pure, i.e. they contain all the element's isotopes in unnatural abundances, calculations of enrichment of multiple isotope tracers must account for the perturbation of each isotope ratio by the presence of the other tracers.
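One way to account for this cross-perturbation is a linear mass balance over the natural element and every tracer. The sketch below is one possible formulation, not a standard from the nutrition literature. It uses a hypothetical element with a reference isotope and two tracer isotopes, and illustrative compositions. Each tracer deliberately contains some of the other tracer isotope.

```python
import numpy as np

# Hypothetical isotopic compositions (mole fractions of isotopes [ref, t1, t2]).
# Real values come from certificates for the enriched materials and from
# natural-abundance tables; these numbers are illustrative only.
NATURAL = np.array([0.90, 0.07, 0.03])
TRACER_A = np.array([0.05, 0.93, 0.02])  # enriched in isotope t1, contains some t2
TRACER_B = np.array([0.04, 0.03, 0.93])  # enriched in isotope t2, contains some t1


def isotope_ratios(moles: np.ndarray) -> np.ndarray:
    """Ratios t1/ref and t2/ref, as a mass spectrometer would report them."""
    return moles[1:] / moles[0]


def unmix(measured_ratios: np.ndarray) -> np.ndarray:
    """Amounts of tracer A and tracer B per unit of natural element.

    For each non-reference isotope i the measured ratio R_i satisfies
    sum_k n_k * (c_ik - R_i * c_ref,k) = 0 over natural, A and B;
    fixing n_natural = 1 leaves a 2x2 linear system. Every tracer's
    contribution to every isotope is included, so cross-perturbation is
    accounted for.
    """
    tracers = np.column_stack([TRACER_A, TRACER_B])  # rows: isotopes, cols: tracers
    lhs = tracers[1:, :] - measured_ratios[:, None] * tracers[0, :][None, :]
    rhs = -(NATURAL[1:] - measured_ratios * NATURAL[0])
    return np.linalg.solve(lhs, rhs)


# Simulated sample: natural element plus 5% tracer A and 2% tracer B (relative amounts).
sample = 1.0 * NATURAL + 0.05 * TRACER_A + 0.02 * TRACER_B
print(unmix(isotope_ratios(sample)))  # approximately [0.05, 0.02]
```

The number of tracers that can be resolved this way is limited by the number of independent isotope ratios that can be measured.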

Due to the prevalence of mineral deficiencies and their critical impact on human health and well-being in resource-poor countries, the International Atomic Energy Agency has recently published detailed and comprehensive descriptions of stable isotope methods to facilitate the dissemination of this knowledge to researchers beyond western academic centers.

Applications in proteomics

In proteomics, the study of the full set of proteins expressed by a genome, identifying disease biomarkers can involve stable isotope labeling by amino acids in cell culture (SILAC), which provides isotopically labeled forms of amino acids used to estimate protein levels. In recombinant protein production, manipulated proteins are produced in large quantities, and isotope labeling is a tool to test for relevant proteins. The method traditionally involved selectively enriching nuclei with 13C or 15N, or depleting 1H from them. The recombinant protein would be expressed in E. coli grown in media containing 15N-ammonium chloride as the nitrogen source. The resulting 15N-labeled proteins are then purified by immobilized metal affinity chromatography, and their percentage of labeling is estimated. To increase the yield of labeled proteins and cut down the cost of isotope-labeled media, an alternative procedure first increases the cell mass in unlabeled media and then transfers the cells into a minimal amount of labeled media. Another application of isotope labeling is measuring DNA synthesis, that is, cell proliferation in vitro. This uses 3H-thymidine labeling to compare patterns of synthesis (or sequence) in cells.
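One way to estimate the percentage of 15N labeling is from mass spectrometry. The observed mass shift of the labeled protein is divided by the maximum possible shift, which is the number of nitrogen atoms times the 15N–14N mass difference. The sketch below assumes that both masses were measured the same way, that only nitrogen is enriched, and that natural 15N can be ignored. The peptide sequence and masses are hypothetical.

```python
# Nitrogen atoms per amino acid residue (backbone N plus any side-chain N).
NITROGENS_PER_RESIDUE = {
    "A": 1, "R": 4, "N": 2, "D": 1, "C": 1, "Q": 2, "E": 1, "G": 1, "H": 3, "I": 1,
    "L": 1, "K": 2, "M": 1, "F": 1, "P": 1, "S": 1, "T": 1, "W": 2, "Y": 1, "V": 1,
}
DELTA_15N_14N = 0.997035  # mass difference between 15N and 14N in daltons (approx.)


def nitrogen_count(sequence: str) -> int:
    """Total nitrogen atoms in a polypeptide given its one-letter sequence."""
    return sum(NITROGENS_PER_RESIDUE[residue] for residue in sequence.upper())


def n15_incorporation(sequence: str, unlabeled_mass: float, labeled_mass: float) -> float:
    """Fraction of nitrogen positions carrying 15N, estimated from the mass shift.
    Ignores natural 15N and assumes both masses were measured the same way."""
    n_atoms = nitrogen_count(sequence)
    return (labeled_mass - unlabeled_mass) / (n_atoms * DELTA_15N_14N)


# Hypothetical peptide and masses: 98% labeling of its 11 nitrogen atoms.
seq = "GAKRH"
shift = 0.98 * nitrogen_count(seq) * DELTA_15N_14N
print(nitrogen_count(seq), round(n15_incorporation(seq, 550.0, 550.0 + shift), 4))
```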

Applications for ecosystem process analysis

Isotopic tracers are used to examine processes in natural systems, especially terrestrial and aquatic environments. In soil science, 15N tracers are used extensively to study nitrogen cycling, whereas 13C and 14C, the stable and radioactive isotopes of carbon respectively, are used for studying turnover of organic compounds and fixation of CO2 by autotrophs. For example, Marsh et al. (2005) used dual-labeled (15N and 14C) urea to demonstrate utilization of the compound by ammonia oxidizers as both an energy source (ammonia oxidation) and carbon source (chemoautotrophic carbon fixation). Deuterated water is also used for tracing the fate and ages of water in a tree or in an ecosystem.

Applications for oceanography

Tracers are also used extensively in oceanography to study a wide array of processes. The isotopes used are typically naturally occurring with well-established sources and rates of formation and decay. However, anthropogenic isotopes may also be used with great success. The researchers measure the isotopic ratios at different locations and times to infer information about the physical processes of the ocean.

Particle transport

The ocean is an extensive network of particle transport. Thorium isotopes can help researchers decipher the vertical and horizontal movement of matter. 234Th has a constant, well-defined production rate in the ocean and a half-life of 24 days. This naturally occurring isotope has been shown to vary linearly with depth. Therefore, any changes in this linear pattern can be attributed to the transport of 234Th on particles. For example, low isotopic ratios in surface water with very high values a few meters down would indicate a vertical flux in the downward direction. Furthermore, the thorium isotope may be traced within a specific depth to decipher the lateral transport of particles.

Circulation

Circulation within local systems, such as bays, estuaries, and groundwater, may be examined with radium isotopes. 223Ra has a half-life of 11 days and can occur naturally at specific locations in rivers and groundwater sources. The isotopic ratio of radium will then decrease as the water from the source river enters a bay or estuary. By measuring the amount of 223Ra at a number of different locations, a circulation pattern can be deciphered. The same approach can also be used to study the movement and discharge of groundwater.
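Because 223Ra decays with a known half-life, the drop in its activity between a source and a sampling point can be converted into an apparent transit time. This is a minimal sketch that assumes the decrease is due to decay alone. Mixing with radium-poor water also lowers the activity, which is why, in practice, ratios of two radium isotopes with different half-lives are often used instead. The activities in the example are hypothetical.

```python
import math

HALF_LIFE_RA223_DAYS = 11.4  # approximately; the text rounds this to 11 days


def apparent_age_days(activity_at_source: float, activity_in_sample: float,
                      half_life_days: float = HALF_LIFE_RA223_DAYS) -> float:
    """Elapsed time since water left the source, assuming the 223Ra activity
    decreased by radioactive decay alone (no dilution by mixing)."""
    if not 0 < activity_in_sample <= activity_at_source:
        raise ValueError("sample activity must be positive and at most the source activity")
    decay_const = math.log(2) / half_life_days
    return math.log(activity_at_source / activity_in_sample) / decay_const


# Hypothetical activities (any consistent unit): an eightfold drop is three half-lives.
print(apparent_age_days(8.0, 1.0))
```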

Various isotopes of lead can be used to study circulation on a global scale. Different oceans (e.g. the Atlantic, Pacific, and Indian) have different isotopic signatures. This results from differences in the isotopic ratios of sediments and rocks within the different oceans. Because lead has a residence time of only about 50–200 years in the ocean, shorter than the time needed to mix the whole ocean, there is not enough time for the isotopic ratios to be homogenized. Therefore, precise analysis of Pb isotopic ratios can be used to study the circulation of the different oceans.

Tectonic processes and climate change

Isotopes with extremely long half-lives and their decay products can be used to study multi-million year processes, such as tectonics and extreme climate change. For example, in rubidium–strontium dating, the isotopic ratio of strontium (87Sr/86Sr) can be analyzed within ice cores to examine changes over the earth's lifetime. Differences in this ratio within the ice core would indicate significant alterations in the earth's geochemistry.
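The reason this ratio changes over geological time can be illustrated with the closed-system decay equation used in rubidium–strontium dating. In this equation, radiogenic 87Sr accumulates in proportion to the 87Rb/86Sr ratio. The sketch below uses the long-standing conventional 87Rb decay constant, although a slightly different revised value has also been proposed. The rock composition in the example is hypothetical.

```python
import math

# 87Rb decay constant in 1/yr. 1.42e-11 is the long-standing conventional value;
# a revised value near 1.397e-11 has also been proposed.
LAMBDA_RB87 = 1.42e-11


def sr_ratio_after(initial_sr_ratio: float, rb_sr_ratio: float, years: float,
                   decay_const: float = LAMBDA_RB87) -> float:
    """87Sr/86Sr of a closed system after `years` of 87Rb -> 87Sr decay.
    rb_sr_ratio is the present-day 87Rb/86Sr ratio."""
    return initial_sr_ratio + rb_sr_ratio * math.expm1(decay_const * years)


def isochron_age(slope: float, decay_const: float = LAMBDA_RB87) -> float:
    """Age (years) from the slope of an 87Sr/86Sr vs 87Rb/86Sr isochron."""
    return math.log1p(slope) / decay_const


# Hypothetical rock: initial 87Sr/86Sr = 0.704, 87Rb/86Sr = 2.0, 500 million years.
print(sr_ratio_after(0.704, 2.0, 5e8))
```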

The aforementioned processes can be measured using naturally occurring isotopes. Nevertheless, anthropogenic isotopes are also extremely useful for oceanographic measurements. Nuclear weapons tests released a plethora of uncommon isotopes into the world's oceans. 3H, 129I, and 137Cs can be found dissolved in seawater, while 241Am and 238Pu are attached to particles. The isotopes dissolved in water are particularly useful in studying global circulation. For example, differences in lateral isotopic ratios within an ocean can indicate strong water fronts or gyres. Conversely, the isotopes attached to particles can be used to study mass transport within water columns. For instance, high levels of Am or Pu can indicate downwelling when observed at great depths, or upwelling when observed at the surface.

Methods for isotopic labeling

- Chemical synthesis

- Enzyme-mediated exchange

- Recombinant protein expression in isotopically labeled media