Fake news or information disorder is false or misleading information (misinformation, including disinformation, propaganda, and hoaxes) presented as news. Fake news often has the aim of damaging the reputation of a person or entity, or making money through advertising revenue. Although false news has always been spread throughout history, the term "fake news" was first used in the 1890s when sensational reports in newspapers were common. Nevertheless, the term does not have a fixed definition and has been applied broadly to any type of false information presented as news. It has also been used by high-profile people to apply to any news unfavorable to them. Further, disinformation involves spreading false information with harmful intent and is sometimes generated and propagated by hostile foreign actors, particularly during elections. In some definitions, fake news includes satirical articles misinterpreted as genuine, and articles that employ sensationalist or clickbait headlines that are not supported in the text. Because of this diversity of types of false news, researchers are beginning to favour information disorder as a more neutral and informative term.

The prevalence of fake news has increased with the recent rise of social media, especially the Facebook News Feed, and this misinformation is gradually seeping into the mainstream media. Several factors have been implicated in the spread of fake news, such as political polarization, post-truth politics, motivated reasoning, confirmation bias, and social media algorithms.

Fake news can reduce the impact of real news by competing with it. For example, a BuzzFeed News analysis found that the top fake news stories about the 2016 U.S. presidential election received more engagement on Facebook than top stories from major media outlets. It also particularly has the potential to undermine trust in serious media coverage. The term has at times been used to cast doubt upon credible news, and former U.S. president Donald Trump has been credited with popularizing the term by using it to describe any negative press coverage of himself. It has been increasingly criticized, due in part to Trump's misuse, with the British government deciding to avoid the term, as it is "poorly-defined" and "conflates a variety of false information, from genuine error through to foreign interference".

Multiple strategies for fighting different types of fake news are being actively researched. Politicians in certain autocratic and democratic countries have demanded effective self-regulation and legally enforced regulation, in varying forms, of social media and web search engines.

On an individual scale, actively confronting false narratives and taking care when sharing information can reduce the prevalence of falsified information. However, it has been noted that this is vulnerable to the effects of confirmation bias, motivated reasoning and other cognitive biases that can seriously distort reasoning, particularly in dysfunctional and polarised societies. Inoculation theory has been proposed as a method to render individuals resistant to undesirable narratives. Because new misinformation emerges constantly, it is far more time-efficient to inoculate the population against accepting fake news in general (a process termed "prebunking") than to keep debunking the same repeated lies.

Defining fake news

Fake news is false or misleading information presented as news. The term is a neologism (a new or re-purposed expression that is entering the language, driven by culture or technology changes). Fake news, or fake news websites, have no basis in fact, but are presented as being factually accurate.

Overlapping terms are bullshit, hoax news, pseudo-news, alternative facts, false news and junk news.

The National Endowment for Democracy defined fake news as: "[M]isleading content found on the internet, especially on social media [...] Much of this content is produced by for-profit websites and Facebook pages gaming the platform for advertising revenue." It also distinguished fake news from disinformation: "[F]ake news does not meet the definition of disinformation or propaganda. Its motives are usually financial, not political, and it is usually not tied to a larger agenda."

Media scholar Nolan Higdon has defined fake news as "false or misleading content presented as news and communicated in formats spanning spoken, written, printed, electronic, and digital communication." Higdon has also argued that the definition of fake news has been applied too narrowly to select mediums and political ideologies.

While most definitions focus strictly on content accuracy and format, current research indicates that the rhetorical structure of the content might play a significant role in the perception of fake news.

Michael Radutzky, a producer of CBS 60 Minutes, said his show considers fake news to be "stories that are probably false, have enormous traction [popular appeal] in the culture, and are consumed by millions of people." These stories are found not only in politics, but also in areas like vaccination, stock values and nutrition. He did not include news that is "invoked by politicians against the media for stories that they don't like or for comments that they don't like" as fake news. Guy Campanile, also a 60 Minutes producer, said, "What we are talking about are stories that are fabricated out of thin air. By most measures, deliberately, and by any definition, that's a lie."

The intent and purpose of fake news is important. In some cases, fake news may be news satire, which uses exaggeration and introduces non-factual elements that are intended to amuse or make a point, rather than to deceive. Propaganda can also be fake news.

In the context of the United States of America and its election processes in the 2010s, fake news generated considerable controversy and argument, with some commentators defining concern over it as moral panic or mass hysteria and others worried about damage done to public trust. It particularly has the potential to undermine trust in serious media coverage generally. The term has also been used to cast doubt upon credible mainstream media.

In January 2017, the United Kingdom House of Commons commenced a parliamentary inquiry into the "growing phenomenon of fake news".

In 2016, Politifact selected fake news as its Lie of the Year. There was so much fake news during that United States election year, won by President Donald Trump, that no single lie stood out, so the generic term was chosen. Also in 2016, Oxford Dictionaries selected post-truth as its word of the year and defined it as the state of affairs when "objective facts are less influential in shaping public opinion than appeals to emotion and personal belief." Fake news is the boldest sign of a post-truth society. When we can't agree on basic facts, or even that there are such things as facts, how do we talk to each other?

Roots

The term "fake news" gained importance with the electoral context in Western Europe and North America. It is determined by fraudulent content in news format and its velocity. According to Bounegru, Gray, Venturini and Mauri, a lie becomes fake news when it "is picked up by dozens of other blogs, retransmitted by hundreds of websites, cross-posted over thousands of social media accounts and read by hundreds of thousands".

The evolving nature of online business models encourages the production of information that is "click-worthy" and independent of its accuracy.

The nature of trust depends on the assumptions that non-institutional forms of communication are freer from power and more able to report information that mainstream media are perceived as unable or unwilling to reveal. Declines in confidence in much traditional media and expert knowledge have created fertile grounds for alternative, and often obscure sources of information to appear as authoritative and credible. This ultimately leaves users confused about basic facts.

Popularity and viral spread

Fake news has become popular with various media outlets and platforms. Researchers at Pew Research Center discovered that over 60% of Americans access news through social media compared to traditional newspapers and magazines. With the popularity of social media, individuals can easily access fake news and disinformation. The rapid spread of false stories on social media during the 2012 elections in Italy has been documented, as has the diffusion of false stories on Facebook during the 2016 US election campaign.

Fake news tends to go viral. Social media platforms like Twitter make it easier for false information to diffuse quickly. Research has found that false political information tends to spread "three times" faster than other false news. On Twitter, false tweets have a much higher chance of being retweeted than truthful tweets. Moreover, it is humans, rather than bots and click-farms, who are responsible for disseminating false news and information. This tendency has to do with human behavior: according to research, humans are attracted to events and information that are surprising and new, which cause high arousal in the brain. Motivated reasoning was also found to play a role in the spread of fake news. Together, these factors lead humans to retweet or share false information, which is usually characterized by clickbait and eye-catching titles, without stopping to verify it. As a result, massive online communities form around a piece of false news without any prior fact-checking or verification of the veracity of the information.

Of particular concern regarding the viral spread of fake news is the role of super-spreaders. Brian Stelter, the anchor of Reliable Sources at CNN, has documented the systematic long-term two-way feedback that developed between President Donald Trump and Fox News presenters. The resultant conditioning of outrage in their large audience against government and the mainstream media has proved a highly successful money-spinner for the TV network.

Its damaging effects

In 2017, the inventor of the World Wide Web, Tim Berners-Lee, claimed that fake news was one of the three most significant new disturbing Internet trends that must first be resolved if the Internet is to be capable of truly "serving humanity." The other two trends were the recent surge in the use of the Internet by governments for citizen surveillance and for cyber-warfare.

Author Terry Pratchett, previously a journalist and press officer, was among the first to be concerned about the spread of fake news on the Internet. In a 1995 interview with Bill Gates, founder of Microsoft, he said "Let's say I call myself the Institute for Something-or-other and I decide to promote a spurious treatise saying the Jews were entirely responsible for the Second World War, and the Holocaust didn't happen, and it goes out there on the Internet and is available on the same terms as any piece of historical research which has undergone peer review and so on. There's a kind of parity of esteem of information on the net. It's all there: there's no way of finding out whether this stuff has any bottom to it or whether someone has just made it up". Gates was optimistic and disagreed, saying that authorities on the Net would index and check facts and reputations in a much more sophisticated way than in print. But it was Pratchett who more accurately predicted how the internet would propagate and legitimize fake news.

When the internet first became accessible for public use in the 1990s, its main purpose was the seeking and accessing of information. As fake news was introduced to the Internet, it became difficult for some people to find truthful information. The impact of fake news has become a worldwide phenomenon. Fake news is often spread through fake news websites, which specialize in creating attention-grabbing news and, in order to gain credibility, often impersonate well-known news sources. Jestin Coler, who said he does it for "fun", has indicated that he earned US$10,000 per month from advertising on his fake news websites.

Research has shown that fake news hurts social media and online-based outlets far worse than traditional print and TV outlets. A survey found that, after learning about fake news, 58% of people had less trust in social media news stories, as opposed to 24% who had less trust in mainstream media. In 2019, Christine Michel Carter, a writer who has reported on Generation Alpha for Forbes, stated that one-third of the generation can decipher false or misleading information in the media.

Types of fake news

Claire Wardle of First Draft News has identified seven types of fake news:

- satire or parody ("no intention to cause harm but has potential to fool")

- false connection ("when headlines, visuals or captions don't support the content")

- misleading content ("misleading use of information to frame an issue or an individual")

- false context ("when genuine content is shared with false contextual information")

- impostor content ("when genuine sources are impersonated" with false, made-up sources)

- manipulated content ("when genuine information or imagery is manipulated to deceive", as with a "doctored" photo)

- fabricated content ("new content is 100% false, designed to deceive and do harm")

Scientific denialism is another potential explanatory type of fake news, defined as the act of producing false or misleading facts to unconsciously support strong pre-existing beliefs.

Criticism of the term

In 2017, Wardle announced that she had rejected the phrase "fake news" and "censors it in conversation", finding it "woefully inadequate" to describe the issues. She now speaks of "information disorder" and "information pollution", and distinguishes between three overarching types of information content problems:

- Mis-information (misinformation): false information disseminated without harmful intent.

- Dis-information (disinformation): false information created and shared by people with harmful intent.

- Mal-information (malinformation): the sharing of "genuine" information with the intent to cause harm.

Disinformation attacks are the most insidious type because of the harmful intent. For example, it is sometimes generated and propagated by hostile foreign actors, particularly during elections.

Because of the manner in which former president Donald Trump has co-opted the term, The Washington Post media columnist Margaret Sullivan has warned fellow journalists that "It's time to retire the tainted term 'fake news'. Though the term hasn't been around long, its meaning already is lost." By late 2018, the term "fake news" had become verboten, and U.S. journalists, including the Poynter Institute, were asking for apologies and for product retirements from companies using the term.

In October 2018, the British government decided that the term "fake news" will no longer be used in official documents because it is "a poorly-defined and misleading term that conflates a variety of false information, from genuine error through to foreign interference in democratic processes." This followed a recommendation by the House of Commons' Digital, Culture, Media and Sport Committee to avoid the term.

However, recent reviews of fake news still regard it as a useful broad construct, equivalent in meaning to fabricated news and separate from related types of problematic news content such as hyperpartisan news, the latter being a particular source of political polarization. Even so, researchers are beginning to favour "information disorder" as a more neutral and informative term. For example, the Commission of Inquiry by the Aspen Institute (2021) has adopted the term Information Disorder in its investigative report.

Identification

According to an academic library guide, a number of specific aspects of fake news may help to identify it and thus avoid being unduly influenced. These include: clickbait, propaganda, satire/parody, sloppy journalism, misleading headings, manipulation, rumor mill, misinformation, media bias, audience bias, and content farms.

The International Federation of Library Associations and Institutions (IFLA) published a summary in diagram form to assist people in recognizing fake news. Its main points are:

- Consider the source (to understand its mission and purpose)

- Read beyond the headline (to understand the whole story)

- Check the authors (to see if they are real and credible)

- Assess the supporting sources (to ensure they support the claims)

- Check the date of publication (to see if the story is relevant and up to date)

- Ask if it is a joke (to determine if it is meant to be satire)

- Review your own biases (to see if they are affecting your judgment)

- Ask experts (to get confirmation from independent people with knowledge).

The International Fact-Checking Network (IFCN), launched in 2015, supports international collaborative efforts in fact-checking, provides training, and has published a code of principles. In 2017 it introduced an application and vetting process for journalistic organisations. One of IFCN's verified signatories, the independent, not-for-profit media journal The Conversation, created a short animation explaining its fact checking process, which involves "extra checks and balances, including blind peer review by a second academic expert, additional scrutiny and editorial oversight".

Beginning in the 2017 school year, children in Taiwan study a new curriculum designed to teach critical reading of propaganda and the evaluation of sources. Called "media literacy", the course provides training in journalism in the new information society.

Online identification

Fake news has become increasingly prevalent over the last few years, with over 100 misleading articles and rumors spread regarding the 2016 United States presidential election alone. These fake news articles tend to come from satirical news websites or individual websites with an incentive to propagate false information, either as clickbait or to serve a purpose. Since they typically hope to intentionally promote incorrect information, such articles are quite difficult to detect.

When identifying a source of information, one must look at many attributes, including but not limited to the content of the email and social media engagements. Specifically, the language is typically more inflammatory in fake news than in real articles, in part because the purpose is to confuse and generate clicks.

Furthermore, modeling techniques such as n-gram encodings and bag of words have served as other linguistic techniques to determine the legitimacy of a news source. On top of that, researchers have determined that visual-based cues also play a role in categorizing an article: some features can be designed to assess whether a picture is legitimate and whether it provides more clarity on the news. There are also many social context features that can play a role, as well as the model of how the news spreads. Websites such as "Snopes" try to detect this information manually, while certain universities are trying to build mathematical models to do this themselves.
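The bag-of-words and n-gram encodings mentioned above can be illustrated with a minimal Python sketch. It is not the method of any particular study; the function names and the sample headline are hypothetical, and a real detection system would feed such counts into a trained classifier rather than use them directly.

```python
from collections import Counter
import re


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def ngrams(tokens, n):
    """Return every contiguous run of n tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def bag_of_ngrams(text, n=1):
    """Count how often each n-gram occurs; n=1 gives a plain bag of words."""
    return Counter(ngrams(tokenize(text), n))


# Hypothetical headline used only for illustration.
headline = "You won't believe this shocking shocking secret"
unigrams = bag_of_ngrams(headline, n=1)  # word counts, order discarded
bigrams = bag_of_ngrams(headline, n=2)   # counts of adjacent word pairs
```

A bag of words discards word order entirely, whereas n-grams with n greater than 1 retain some local ordering, which is one reason the two encodings are often used together.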

Tackling and suppression strategies

Considerable research is underway regarding strategies for confronting and suppressing fake news of all types, in particular disinformation, which is the deliberate spreading of false narratives for political purposes, or for destabilising social cohesion in targeted communities. Multiple strategies need to be tailored to individual types of fake news, depending for example on whether the fake news is deliberately produced, or rather unintentionally or unconsciously produced.

Considerable resources are available to combat fake news. Regular summaries of current events and research are available on the websites and email newsletters of a number of support organisations. Particularly notable are the First Draft Archive, the Information Futures Lab, School of Public Health, Brown University and the Nieman Foundation for Journalism (Harvard University).

Journalist Bernard Keane, in his book on misinformation in Australia, classifies strategies for dealing with fake news into three categories: (1) the liar (the perpetrator of fake news), (2) the conduit (the method of carriage of the fake news), and (3) the lied-to (the recipient of the fake news).

Strategies regarding the perpetrator

Promotion of facts over emotions

American philosopher of science Lee McIntyre, who has researched the scientific attitude and post-truth, has explained the importance of factual basis of society, in preference to one in which emotions replace facts. A disturbing modern example of this is the symbiotic relationship that developed between President Donald Trump and Fox News, in which the conspiracy beliefs of Fox hosts were repeated shortly after by Trump (and vice versa) in a continuous feedback loop. This served to promote outrage, and thus to condition and radicalise conservative Republican Fox listeners into cult-like Trump supporters, and to demonise and gaslight Democrat opponents, the mainstream media, and elites generally.

A key strategy to counter fake news based on emotions rather than facts is to flood the information space, particularly social media and web browser search results, with factual news, thus drowning out misinformation. A key factor in establishing facts is the role of critical thinking, the principles of which should be embedded more comprehensively within all school and university education courses. Critical thinking is a style of thinking in which citizens, prior to subsequent problem solving and decision-making, have learned to pay attention to the content of written words, and to judge their accuracy and fairness, among other worthy attributes.

Technique rebuttal

Because content rebuttal (presenting true facts to refute false information) does not always work, Lee McIntyre suggests the better method of technique rebuttal, in which faulty reasoning by deniers is exposed, such as cherry-picking data, and relying too much on fake experts. Deniers have lots of information, but a deficit of trust in mainstream sources. McIntyre first builds trust by respectful exchange, listening carefully to their explanation without interrupting. Then he asks questions such as “What evidence would make you change your mind?” and “Why do you trust that source?” McIntyre has used his technique to talk to flat earthers, though he admits it may not work with hard-core deniers.

Individual counteraction

Individuals should confront misinformation when they spot it in online blogs, even if briefly; otherwise it festers and proliferates. The person being responded to is probably resistant to change, but many other bloggers may read and learn from an evidence-based reply. John Kerry learned this lesson brutally during the US 2004 Presidential election campaign against George W. Bush. The right-wing Swift Boat Veterans for Truth falsely claimed that Kerry showed cowardice during the Vietnam War. Kerry refused to dignify the claims with a response for two weeks, despite being pummeled in the media, and this contributed to his marginal loss to Bush. We should never assume any claim is too outrageous to be believed.

However, caution applies regarding over-zealous debunking of fake news. It is often unwise to draw attention to fake news published on a low-impact website or blog (one that has few followers). If this fake news is debunked by a journalist in a high-profile place such as The New York Times, knowledge of the false claim spreads widely, and more people overall will end up believing it, ignoring or denying the debunk.

Backfire effect

A widely reported paper by Brendan Nyhan and Jason Reifler in 2010 found that, in two of their five studies, presenting people who held a firm belief with corrective information reinforced rather than reduced their mistaken political beliefs. The researchers called this a backfire effect. However, this finding was widely misreported as showing that corrective information was the sole cause of reinforced misinformation. Later studies, including by Nyhan and colleagues, failed to support a backfire effect. Instead, Nyhan now accepts that the reinforced beliefs are largely controlled by cues from high-profile elites and media that spread misinformation.

Strategies regarding carriers

Regulation of social media

Internet companies with threatened credibility have developed new responses to limit fake news and reduce financial incentives for its proliferation.

A valid criticism of social media companies is that users are presented with content that they will like, based on previous viewing preferences. An undesirable side-effect is that confirmation bias is enhanced in users, which in turn enhances the acceptance of fake news. To reduce this bias, self-regulation and legally enforced regulation of social media (notably Facebook and Twitter) and web search engines (notably Google) need to become more effective and innovative.

Financial disincentives to tackle fake news also apply to some mainstream media. Brian Stelter, the anchor of Reliable Sources at CNN, has provided a substantial critique of the symbiotic but damaging relationship that developed between President Donald Trump and Fox News, which has proved an extraordinarily successful money-spinner for the Murdoch-owned TV network, despite this being a super-spreader of fake news.

General strategy

The general approach by these tech companies is the detection of problematic news via human fact-checking and automated artificial intelligence (machine learning, natural language processing and network analysis). Tech companies have utilized two basic counter-strategies: down-ranking fake news and warning messages.

In the first approach, problematic content is down-ranked by the search algorithm, for example, to the second or later pages on a Google search, so that users are less likely to see it (most users just scan the first page of search results). However, two problems arise. One is that truth is not black-and-white, and fact-checkers often disagree on how to classify the content included in computer training sets, running the risk of false positives and unjustified censorship. Also, fake news often evolves rapidly, and therefore identifiers of misinformation may be ineffective in the future.
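As a rough illustration of down-ranking, the sketch below discounts each result's ranking score in proportion to a classifier's estimate that the item is false. It is a toy model under stated assumptions, not a description of how any search engine or platform works: the `relevance` and `flag_prob` fields, the `penalty` parameter, and the example URLs are all hypothetical.

```python
def adjusted_score(result, penalty=0.8):
    """Discount relevance in proportion to the estimated chance the item is false."""
    return result["relevance"] * (1.0 - penalty * result["flag_prob"])


def down_rank(results, penalty=0.8):
    """Return results ordered by adjusted score, highest first."""
    return sorted(results, key=lambda r: adjusted_score(r, penalty), reverse=True)


# Hypothetical results: 'relevance' is a base ranking score and 'flag_prob'
# is an assumed classifier output between 0 and 1.
results = [
    {"url": "example.org/viral-claim", "relevance": 0.9, "flag_prob": 0.95},
    {"url": "example.org/news-report", "relevance": 0.7, "flag_prob": 0.05},
    {"url": "example.org/explainer", "relevance": 0.6, "flag_prob": 0.10},
]
ranked = down_rank(results)
```

Here the most relevant item falls to last place because its flag probability is high. The sketch also makes the false-positive risk described above concrete: an accurate article that the classifier wrongly flags would be pushed down in exactly the same way.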

The second approach involves attaching warnings to content that professional fact-checkers have found to be false. Much evidence indicates that corrections and warnings do produce reduced misperceptions and sharing. Despite some early evidence that fact-checking could backfire, recent research has shown that these backfire effects are extremely uncommon. But an important problem is that professional fact-checking is not scalable – it can take substantial time and effort to investigate each particular claim. Thus, many (if not most) false claims never get fact-checked. Also, the process is slow, and a warning may miss the period of peak viral spreading. Further, warnings are typically only attached to blatantly false news, rather than to biased coverage of events that actually occurred.

A third approach is to place more emphasis on reliable sources such as Wikipedia, as well as mainstream media (for example, The New York Times and The Wall Street Journal), and science communication publications (for example, Scientific American and The Conversation). However, this approach has led to mixed results, as hyperpartisan commentary and confirmation bias are found even in these sources (the media has both news and opinion pages). In addition, some sections of the community completely reject scientific commentary.

A fourth approach is to ban or specifically target so-called super-spreaders of fake news from social media.

Fact-checking

During the 2016 United States presidential election, the creation and coverage of fake news increased substantially. This resulted in a widespread response to combat the spread of fake news. The sheer volume of fake news websites, and their reluctance to respond to fact-checking organizations, has made it difficult to inhibit the spread of fake news through fact-checking alone. In an effort to reduce the effects of fake news, fact-checking websites, including Snopes.com and FactCheck.org, have posted guides to spotting and avoiding fake news websites. Social media sites and search engines, such as Facebook and Google, received criticism for facilitating the spread of fake news. Both of these corporations have taken measures to explicitly prevent the spread of fake news; critics, however, believe more action is needed.

After the 2016 American election and in the run-up to the German election, Facebook partnered with independent fact-checkers to label inaccurate news and to warn readers before they share it. After a story is flagged as disputed, it is reviewed by the third-party fact-checkers. Then, if it has been proven to be a fake news story, the post cannot be turned into an ad or promoted. Artificial intelligence is one of the more recent technologies being developed in the United States and Europe to recognize and eliminate fake news through algorithms. In 2017, Facebook targeted 30,000 accounts related to the spread of misinformation regarding the French presidential election.

In 2020, during the COVID-19 pandemic, Facebook found that troll farms from North Macedonia and the Philippines pushed coronavirus disinformation. The publishers that used contents from these farms were banned from the platform.

In March 2018, Google launched Google News Initiative (GNI) to fight the spread of fake news. It launched GNI under the belief that quality journalism and identifying truth online is crucial. GNI has three goals: "to elevate and strengthen quality journalism, evolve business models to drive sustainable growth and empower news organizations through technological innovation". To achieve the first goal, Google created the Disinfo Lab, which combats the spread of fake news during crucial times such as elections or breaking news. The company is also working to adjust its systems to display more trustworthy content during times of breaking news. To make it easier for users to subscribe to media publishers, Google created Subscribe with Google. Additionally, they have created a dashboard, News Consumer Insights that allows news organizations to better understand their audiences using data and analytics. Google will spend $300 million through 2021 on these efforts, among others, to combat fake news.

In November 2020, YouTube (owned by Google) suspended news outlet One America News Network (OANN) for a week for spreading misinformation on coronavirus. The outlet had violated YouTube's policy multiple times. A video that falsely promoted a guaranteed cure for the virus was deleted from the channel.

Legal and criminal sanctions in general

The use of anonymously hosted fake news websites has made it difficult to prosecute sources of fake news for libel.

Numerous countries have created laws in an attempt to regulate or prosecute harmful misinformation more generally than just with a focus on tech companies. In numerous countries, people have been arrested for allegedly spreading fake news about the COVID-19 pandemic.

Algerian lawmakers passed a law criminalising "fake news" deemed harmful to "public order and state security". The Turkish Interior Ministry has been arresting social media users whose posts were "targeting officials and spreading panic and fear by suggesting the virus had spread widely in Turkey and that officials had taken insufficient measures". Iran's military said 3600 people have been arrested for "spreading rumors" about COVID-19 in the country. In Cambodia, some individuals who expressed concerns about the spread of COVID-19 have been arrested on fake news charges. The United Arab Emirates have introduced criminal penalties for the spread of misinformation and rumours related to the outbreak.

Strategies regarding the recipient

Cognitive biases of recipient

The vast proliferation of online information, such as in blogs and tweets, has inundated the online marketplace. Because of the resulting information overload, people cannot process all of these information units (called memes), so they let confirmation bias and other cognitive biases decide which ones to pay attention to, thus enhancing the spread of fake news. Moreover, these cognitive vulnerabilities are easily exploited both by computer algorithms that present information users may like (based on their previous social media use) and by individual manipulators who create social media bots to deliberately spread disinformation.

A study by Randy Stein and colleagues shows that conservatives value personal stories (non-scientific, intuitive or experiential evidence) more than liberals (progressives) do, and therefore may be less swayed by scientific evidence. The study, however, tested only responses to apolitical messages.

Nudges as reflection prompts

People tend to react hastily and share fake news without thinking carefully about what they have read or heard, and without checking or verifying the information. "Nudging" people to consider the accuracy of incoming information has been shown to prompt people to think about it, to improve the accuracy of their judgement, and to reduce the likelihood that incorrect information is unreflectively shared. An example of a technology-based nudge is Twitter's "read before you retweet" prompt, which prompts readers to read an article and consider its contents before retweeting it.

Media critical thinking skills

Critical media literacy skills, for both printed and digital media, are essential for recipients to self-evaluate the accuracy of media content. Media scholar Nolan Higdon argues that a critical media literacy education focused on teaching critical thinking about how to detect fake news is the most effective way of mitigating the pernicious influence of propaganda. Higdon offers a ten-step guide for detecting fake news.

Mental immune health, inoculation and prebunking

American philosopher Andy Norman, in his book Mental Immunity, argues for a new science of cognitive immunology as a practical guide to resisting bad ideas (such as conspiracy theories), as well as transcending petty tribalism. He argues that reasoned argument, the scientific method, fact-checking and critical thinking skills alone are insufficient to counter the broad scope of false information. Overlooked is the power of confirmation bias, motivated reasoning and other cognitive biases that can seriously distort the many facets of mental 'immunity' (public resilience to fake news), particularly in dysfunctional societies.

The problem is that new misinformation, and its darker cousin, intentional disinformation, keeps appearing all the time. It is therefore much more efficient to inoculate the population against misinformation than to continually debunk each new claim later. Inoculation builds public resilience and creates the conditions for psychological 'herd immunity'. The general term for this process is "prebunking", defined as the process of debunking lies, tactics or sources before they strike. New research shows that free online games can provide tools to fight fake news, leading to healthy skepticism when we consume the news. Google is currently (2023) rolling out novel prebunking video adverts, which have been shown to be effective in countering misinformation during trials in Eastern Europe.

Most current research is based on inoculation theory, a social psychological and communication theory that explains how an attitude or belief can be protected against persuasion or influence in much the same way a body can be protected against disease, for example through pre-exposure to weakened versions of a stronger, future threat. The theory uses inoculation as its explanatory analogy, applied to attitudes (or beliefs) much as a vaccine is applied to an infectious disease. It has great potential for building public resilience ('immunity') against misinformation and fake news, for example, in tackling science denialism, risky health behaviours, and emotionally manipulative marketing and political messaging.

For example, John Cook and colleagues have shown that inoculation theory shows promise in countering climate change denialism. This involves a two-step process. Firstly, list and deconstruct the 50 or so most common myths about climate change, identifying the reasoning errors and logical fallacies in each one. Secondly, use the concept of parallel argumentation to explain the flaw in the argument by transplanting the same logic into a parallel situation, often an extreme or absurd one. Adding appropriate humour can be particularly effective.

History

Ancient

In the 13th century BC, Rameses the Great spread lies and propaganda portraying the Battle of Kadesh as a stunning victory for the Egyptians; he depicted scenes of himself smiting his foes during the battle on the walls of nearly all his temples. The treaty between the Egyptians and the Hittites, however, reveals that the battle was actually a stalemate.

During the first century BC, Octavian ran a campaign of misinformation against his rival Mark Antony, portraying him as a drunkard, a womanizer, and a mere puppet of the Egyptian queen Cleopatra VII. He published a document purporting to be Mark Antony's will, which claimed that Mark Antony, upon his death, wished to be entombed in the mausoleum of the Ptolemaic pharaohs. Although the document may have been forged, it provoked outrage among the Roman populace. Mark Antony ultimately killed himself after his defeat in the Battle of Actium, upon hearing false rumors propagated by Cleopatra herself claiming that she had committed suicide.

During the second and third centuries AD, false rumors were spread about Christians claiming that they engaged in ritual cannibalism and incest. In the late third century AD, the Christian apologist Lactantius invented and exaggerated stories about pagans engaging in acts of immorality and cruelty, while the anti-Christian writer Porphyry invented similar stories about Christians.

Medieval

In 1475, a fake news story in Trent claimed that the Jewish community had murdered a two-and-a-half-year-old Christian infant named Simonino. The story resulted in all the Jews in the city being arrested and tortured; 15 of them were burned at the stake. Pope Sixtus IV himself attempted to stamp out the story; however, by that point, it had already spread beyond anyone's control. Stories of this kind were known as "blood libel"; they claimed that Jews purposely killed Christians, especially Christian children, and used their blood for religious or ritual purposes.

Early modern

After the invention of the printing press in 1439, publications became widespread, but there was no standard of journalistic ethics to follow. By the 17th century, historians began the practice of citing their sources in footnotes. In 1633, when Galileo went on trial, the demand for verifiable news increased.

During the 18th century publishers of fake news were fined and banned in the Netherlands; one man, Gerard Lodewijk van der Macht, was banned four times by Dutch authorities—and four times he moved and restarted his press. In the American colonies, Benjamin Franklin wrote fake news about murderous "scalping" Indians working with King George III in an effort to sway public opinion in favor of the American Revolution.

Canards, the successors of the 16th century pasquinade, were sold in Paris on the street for two centuries, starting in the 17th century. In 1793, Marie Antoinette was executed in part because of popular hatred engendered by a canard on which her face had been printed.

During the era of slave-owning in the United States, supporters of slavery propagated fake news stories about African Americans, whom white people considered to have lower status. Violence occurred in reaction to the spread of some fake news events. In one instance, stories of African Americans spontaneously turning white spread through the south and struck fear into the hearts of many people.

Rumors and anxieties about slave rebellions were common in Virginia from the beginning of the colonial period, despite the only major uprising occurring in the 19th century. One particular instance of fake news regarding revolts occurred in 1730. The serving governor of Virginia at the time, Governor William Gooch, reported that a slave rebellion had occurred but was effectively put down—although this never happened. After Gooch discovered the falsehood, he ordered slaves found off plantations to be punished, tortured and made prisoners.

19th century

One instance of fake news was the Great Moon Hoax of 1835. The Sun newspaper of New York published articles about a real-life astronomer and a made-up colleague who, according to the hoax, had observed bizarre life on the Moon. The fictionalized articles successfully attracted new subscribers, and the penny paper suffered very little backlash after it admitted the next month that the series had been a hoax. Such stories were intended to entertain readers and not to mislead them.

From 1800 to 1810, James Cheetham made use of fictional stories to advocate politically against Aaron Burr. His stories were often defamatory and he was frequently sued for libel.

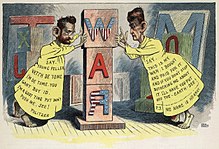

Yellow journalism, the sensationalist journalism that arose in the circulation war between Joseph Pulitzer's New York World and William Randolph Hearst's New York Journal, peaked in the mid-1890s. Pulitzer and other yellow journalism publishers goaded the United States into the Spanish–American War, which was precipitated when the USS Maine exploded in the harbor of Havana, Cuba. The term "fake news" itself was apparently first used in the 1890s during this era of sensationalist news reporting.

20th century

Fake news became popular and spread quickly in the 1900s. Media like newspapers, articles, and magazines were in high demand because of technology. Author Sarah Churchwell shows that when The New York Times reprinted the 1915 speech by Woodrow Wilson that popularized the phrase "America First," they also used the subheading "Fake News Condemned" to describe a section of his speech warning against propaganda and misinformation, although Wilson himself had not used the phrase "fake news". In his speech, Wilson warned of a growing problem with news that "turn[s] out to be falsehood," warning the country it "could not afford 'to let the rumors of irresponsible persons and origins get into the United States'" as that would undermine democracy and the principle of a free and accurate press. Following a claim by CNN that "Trump was... the first US President to deploy [the term "fake news"] against his opponents", Sarah Churchwell's work was cited to claim that "it was Woodrow Wilson who popularized the phrase 'fake news' in 1915" without reference, forcing her to counter this claim, saying that "the phrase 'fake news' was very much NOT popularized (or even used) by Wilson. The NY Times used it in passing but it didn't catch on. Trump was the first to popularize it."

During the First World War, an example of fake news was the anti-German atrocity propaganda regarding an alleged "German Corpse Factory" in which the German battlefield dead were supposedly rendered down for fats used to make nitroglycerine, candles, lubricants, human soap and boot dubbing. Unfounded rumors regarding such a factory circulated in the Allied press starting in 1915, and by 1917 the English-language publication North China Daily News presented these allegations as true at a time when Britain was trying to convince China to join the Allied war effort; this was based on new, allegedly true stories from The Times and the Daily Mail that turned out to be forgeries. These false allegations became known as such after the war, and in the Second World War Joseph Goebbels used the story in order to deny the ongoing massacre of Jews as British propaganda. According to Joachim Neander and Randal Marlin, the story also "encouraged later disbelief" when reports about the Holocaust surfaced after the liberation of Auschwitz and Dachau concentration camps.

After Hitler and the Nazi Party rose to power in Germany in 1933, they established the Reich Ministry of Public Enlightenment and Propaganda under the control of Propaganda Minister Joseph Goebbels. The Nazis used both print and broadcast journalism to promote their agendas, either by obtaining ownership of those media or exerting political influence. The expression Big lie (in German: große Lüge) was coined by Adolf Hitler, when he dictated his 1925 book Mein Kampf. Throughout World War II, both the Axis and the Allies employed fake news in the form of propaganda to persuade the public at home and in enemy countries. The British Political Warfare Executive used radio broadcasts and distributed leaflets to discourage German troops.

The Carnegie Endowment for International Peace claimed that The New York Times printed fake news "depicting Russia as a socialist paradise." During 1932–1933, The New York Times published numerous articles by its Moscow bureau chief, Walter Duranty, who won a Pulitzer Prize for a series of reports about the Soviet Union.

21st century

In the 21st century, both the impact of fake news and the use of the term became widespread.

The growth of fake news followed the increasing openness, accessibility and prevalence of the Internet. New information and stories are published constantly and at a faster rate than ever, often without verification, and may be consumed by anyone with an Internet connection. Fake news has moved from being circulated by email to spreading across social media. Besides referring to made-up stories designed to deceive readers into clicking on links, maximizing traffic and profit, the term has also referred to satirical news, whose purpose is not to mislead but rather to inform viewers and share humorous commentary about real news and the mainstream media. United States examples of satire include the newspaper The Onion, Saturday Night Live's Weekend Update, and the television shows The Daily Show, The Colbert Report, and The Late Show with Stephen Colbert.

21st-century fake news is often intended to increase the financial profits of the news outlet. In an interview with NPR, Jestin Coler, former CEO of the fake media conglomerate Disinfomedia, explained who writes fake news articles, who funds them, and why fake news creators create and distribute false information. Coler, who has since left his role as a fake news creator, said that his company employed 20 to 25 writers at a time and made $10,000 to $30,000 monthly from advertisements. Coler began his career in journalism as a magazine salesman before working as a freelance writer. He said he entered the fake news industry to prove to himself and others just how rapidly fake news can spread. Disinfomedia is not the only outlet responsible for the distribution of fake news; Facebook users play a major role in feeding into fake news stories by making sensationalized stories "trend", according to BuzzFeed media editor Craig Silverman, and the individuals behind Google AdSense effectively fund fake news websites and their content. Mark Zuckerberg, CEO of Facebook, said, "I think the idea that fake news on Facebook influenced the election in any way, I think is a pretty crazy idea", and a few days later he blogged that Facebook was looking for ways to flag fake news stories.

Many online pro-Trump fake news stories are being sourced from the city of Veles in Macedonia, where approximately seven different fake news organizations are employing hundreds of teenagers to rapidly produce and plagiarize sensationalist stories for different U.S.-based companies and parties.

Kim LaCapria of the fact checking website Snopes.com has stated that, in America, fake news is a bipartisan phenomenon, saying that "[t]here has always been a sincerely held yet erroneous belief misinformation is more red than blue in America, and that has never been true." Jeff Green of Trade Desk agrees the phenomenon affects both sides. Green's company found that affluent and well-educated persons in their 40s and 50s are the primary consumers of fake news. He told Scott Pelley of 60 Minutes that this audience tends to live in an "echo chamber" and that these are the people who vote.

In 2014, the Russian Government used disinformation via networks such as RT to create a counter-narrative after Russian-backed Ukrainian rebels shot down Malaysia Airlines Flight 17. In 2016, NATO claimed it had seen a significant rise in Russian propaganda and fake news stories since the invasion of Crimea in 2014. Fake news stories originating from Russian government officials were also circulated internationally by Reuters news agency and published in the most popular news websites in the United States.

A 2018 study at Oxford University found that Trump's supporters consumed the "largest volume of 'junk news' on Facebook and Twitter":

"On Twitter, a network of Trump supporters consumes the largest volume of junk news, and junk news is the largest proportion of news links they share," the researchers concluded. On Facebook, the skew was even greater. There, "extreme hard right pages, distinct from Republican pages, share more junk news than all the other audiences put together."

In 2018, researchers from Princeton University, Dartmouth College and the University of Exeter examined the consumption of fake news during the 2016 U.S. presidential campaign. Their findings showed that Trump supporters and older Americans (over 60) were far more likely to consume fake news than Clinton supporters. Those most likely to visit fake news websites were the 10% of Americans who consumed the most conservative information. There was a very large difference (800%) in the consumption of fake news stories as related to total news consumption between Trump supporters (6%) and Clinton supporters (1%).

The study also showed that fake pro-Trump and fake pro-Clinton news stories were read by their supporters, but with a significant difference: Trump supporters consumed far more (40%) than Clinton supporters (15%). Facebook was by far the key "gateway" website where these fake stories were spread and which led people to then go to the fake news websites. Fact checks of fake news were rarely seen by consumers, with none of those who saw a fake news story being reached by a related fact check.

Brendan Nyhan, one of the researchers, emphatically stated in an interview on NBC News: "People got vastly more misinformation from Donald Trump than they did from fake news websites—full stop."

NBC NEWS: "It feels like there's a connection between having an active portion of a party that's prone to seeking false stories and conspiracies and a president who has famously spread conspiracies and false claims. In many ways, demographically and ideologically, the president fits the profile of the fake news users that you're describing."

NYHAN: "It's worrisome if fake news websites further weaken the norm against false and misleading information in our politics, which unfortunately has eroded. But it's also important to put the content provided by fake news websites in perspective. People got vastly more misinformation from Donald Trump than they did from fake news websites—full stop."

A 2019 study by researchers at Princeton and New York University found that a person's likelihood of sharing fake-news articles correlated more strongly with age than it did with education, sex, or political views. Of users older than 65, 11% shared an article consistent with the study's definition of fake news, compared with just 3% of users aged 18 to 29.

Another issue in mainstream media is the filter bubble: a "bubble" in which social media platforms give the viewer specific pieces of information, knowing the viewer will like them. This produces fake and biased news, because only half the story is shared: the portion the viewer liked. "In 1996, Nicolas Negroponte predicted a world where information technologies become increasingly customizable."

Special topics

Deepfakes and shallowfakes

Deepfakes (a portmanteau of "deep learning" and "fake") are synthetic media (AI-generated media) in which a person in an existing image or video is replaced with someone else's likeness.

Because a picture often has a greater impact than the corresponding words, deepfakes, which leverage powerful techniques from machine learning and artificial intelligence to manipulate or generate visual and audio content, have a particularly high potential to deceive. The main machine learning methods used to create deepfakes are based on deep learning and involve training generative neural network architectures, such as autoencoders or generative adversarial networks (GANs).

Deepfakes have garnered widespread attention for their use in creating not only fake news (notably political) but also child sexual abuse material, celebrity pornographic videos, revenge porn, hoaxes, bullying, and financial fraud. This has elicited responses from both industry and government to detect and limit their use.

Deepfakes generally require specialist software or knowledge, but much the same effect can be achieved quickly by anyone using standard video editing software on most modern computers. These videos (termed "shallowfakes") have obvious flaws but can nevertheless be widely believed to be real, or at least have entertainment value that reinforces beliefs. One of the first to go viral, "The Hillary Song", watched over 3 million times by Donald Trump supporters, shows Hillary Clinton being humiliated on stage by The Rock, a former wrestling champion. The surprised creator (who hates all politicians) pasted images of Clinton into a genuine video of The Rock humiliating a wrestling official.

Bots on social media

In the mid-1990s, Nicolas Negroponte anticipated a world where news delivered through technology would become progressively personalized. In his 1996 book Being Digital he predicted a digital life where news consumption becomes an extremely personalized experience and newspapers adapt content to reader preferences. This prediction has since been reflected in present-day news and social media feeds.

Bots have the potential to increase the spread of fake news, as they use algorithms to decide which articles and information specific users like, without taking into account the authenticity of an article. Bots mass-produce and spread articles regardless of the credibility of their sources, which allows them to play an essential role in the mass spread of fake news: they can create fake accounts and personalities on the web that then gain followers, recognition, and authority. Additionally, almost 30% of the spam and content spread on the Internet originates from these software bots.

In the 21st century, the capacity to mislead was enhanced by the widespread use of social media. For example, one 21st-century website that enabled the proliferation of fake news was the Facebook News Feed. In late 2016, fake news gained notoriety following the uptick in news content by this means, and its prevalence on the micro-blogging site Twitter. In the United States, 62% of people use social media to receive news. Many people use their Facebook News Feed to get news, despite Facebook not being considered a news site. According to Craig McClain, over 66% of Facebook users obtain news from the site. This, in combination with increased political polarization and filter bubbles, led to a tendency for readers to mainly read headlines.

Numerous individuals and news outlets have stated that fake news may have influenced the outcome of the 2016 American Presidential Election. Fake news saw higher sharing on Facebook than legitimate news stories, which analysts explained was because fake news often panders to expectations or is otherwise more exciting than legitimate news. Facebook itself initially denied this characterization. A Pew Research poll conducted in December 2016 found that 64% of U.S. adults believed completely made-up news had caused "a great deal of confusion" about the basic facts of current events, while 24% claimed it had caused "some confusion" and 11% said it had caused "not much or no confusion". Additionally, 23% of those polled admitted they had personally shared fake news, whether knowingly or not. Researchers from Stanford assessed that only 8% of readers of fake news recalled and believed in the content they were reading, though the same share of readers also recalled and believed in "placebos"—stories they did not actually read, but that were produced by the authors of the study. In comparison, over 50% of the participants recalled reading and believed in true news stories.

By August 2017, Facebook had stopped using the term "fake news" and used "false news" in its place. Will Oremus of Slate wrote that because supporters of U.S. President Donald Trump had redefined the term "fake news" to refer to mainstream media opposed to them, "it makes sense for Facebook, and others, to cede the term to the right-wing trolls who have claimed it as their own."

Research from Northwestern University concluded that 30% of all fake news traffic, as opposed to only 8% of real news traffic, could be linked back to Facebook. The research concluded that fake news consumers do not exist in a filter bubble; many of them also consume real news from established news sources. The fake news audience is only 10% of the real news audience, and most fake news consumers spent about as much time on fake news as real news consumers spent on real news, with the exception of Drudge Report readers, who spent more than 11 times longer reading the website than other users.

In the wake of western events, Ren Xianling of the Cyberspace Administration of China suggested that a "reward and punish" system be implemented to avoid fake news.

Internet trolls

In Internet slang, a troll is a person who sows discord on the Internet by starting arguments or upsetting people, by posting inflammatory, extraneous, or off-topic messages in an online community (such as a newsgroup, forum, chat room, or blog) with the intent of provoking readers into an emotional response or off-topic discussion, often for the troll's amusement. Internet trolls also feed on attention.

The idea of internet trolls gained popularity in the 1990s, though its meaning shifted in 2011. Whereas it once denoted provocation, it is a term now widely used to signify the abuse and misuse of the Internet. Trolling comes in various forms, and can be dissected into abuse trolling, entertainment trolling, classical trolling, flame trolling, anonymous trolling, and kudos trolling. It is closely linked to fake news, as internet trolls are now largely interpreted as perpetrators of false information, information that can often be passed along unwittingly by reporters and the public alike.

When interacting with each other, trolls often share misleading information that contributes to the fake news circulated on sites like Twitter and Facebook. In the 2016 American election, Russia paid over 1,000 internet trolls to circulate fake news and disinformation about Hillary Clinton; they also created social media accounts that resembled voters in important swing states, spreading influential political standpoints. In February 2019, Glenn Greenwald wrote that the cybersecurity company New Knowledge "was caught just six weeks ago engaging in a massive scam to create fictitious Russian troll accounts on Facebook and Twitter in order to claim that the Kremlin was working to defeat Democratic Senate nominee Doug Jones in Alabama."

Fake news hoaxes

Paul Horner is perhaps the best known example of a person who deliberately creates fake news for a purpose. He has been referred to as a "hoax artist" by the Associated Press and the Chicago Tribune. The Huffington Post called Horner a "performance artist".

Horner was behind several widespread hoaxes, such as one claiming that the graffiti artist Banksy had been arrested. According to CBS News, his stories had an "enormous impact" on the 2016 U.S. presidential election. These stories consistently appeared in Google's top news search results, were shared widely on Facebook, and were taken seriously and shared by third parties such as Trump presidential campaign manager Corey Lewandowski, Eric Trump, ABC News and the Fox News Channel. Horner later claimed that the intention was "to make Trump's supporters look like idiots for sharing my stories".

In a November 2016 interview with The Washington Post, Horner expressed regret for the role his fake news stories played in the election and surprise at how gullible people were in treating his stories as news. In February 2017 Horner said,

I truly regret my comment that I think Donald Trump is in the White House because of me. I know all I did was attack him and his supporters and got people not to vote for him. When I said that comment it was because I was confused how this evil man got elected President and I thought maybe instead of hurting his campaign, maybe I had helped it. My intention was to get his supporters NOT to vote for him and I know for a fact that I accomplished that goal. The far right, a lot of the Bible thumpers and alt-right were going to vote him regardless, but I know I swayed so many that were on the fence.

In 2017, Horner stated that a fake story of his about a rape festival in India helped generate over $250,000 in donations to GiveIndia, a site that helps rape victims in India. Horner said he disliked being grouped with people who write fake news solely to be misleading: "They just write it just to write fake news, like there's no purpose, there's no satire, there's nothing clever."

Donald Trump and hoax news

The term "fake news" has at times been used to cast doubt upon credible news, and former U.S. president Donald Trump has been credited with popularizing and re-defining (or misusing) the term to refer to any negative press coverage of himself, regardless of veracity. Trump has claimed that the mainstream American media (which he calls the "lying press") regularly reports "fake news" or "hoax news", even though he has made a considerable number of false, inaccurate or misleading statements himself. According to The Washington Post's Fact Checker database, Trump made 30,573 false or misleading claims during his four years in office, though the number of unique false claims is much lower because many of his major false claims were repeated hundreds of times each. A searchable online database is available for each documented false claim, and a datafile is available for download for use in academic studies of misinformation and lying. An analysis of the first 16,000 false claims is available as a book.

Trump has often attacked mainstream news publications, deeming them "fake news" and the "enemy of the people". Every few days, Trump would issue a threat against the press over what he claimed was "fake news". There have been many instances in which norms that protect press freedom have been pushed or even upended during the Trump era.

According to Jeff Hemsley, a Syracuse University professor who studies social media, Trump uses this term for any news that is not favorable to him or which he simply dislikes. Trump provided a widely cited example of this interpretation in a tweet on May 9, 2018:

"The Fake News is working overtime. Just reported that, despite the tremendous success we are having with the economy & all things else, 91% of the Network News about me is negative (Fake). Why do we work so hard in working with the media when it is corrupt? Take away credentials?"

Donald J. Trump (@realDonaldTrump), May 9, 2018

Chris Cillizza described the tweet on CNN as an "accidental" revelation about Trump's "'fake news' attacks", and wrote: "The point can be summed up in these two words from Trump: 'negative (Fake).' To Trump, those words mean the same thing. Negative news coverage is fake news. Fake news is negative news coverage." Other writers made similar comments about the tweet. Dara Lind wrote in Vox: "It's nice of Trump to admit, explicitly, what many skeptics have suspected all along: When he complains about 'fake news,' he doesn't actually mean 'news that is untrue'; he means news that is personally inconvenient to Donald Trump." Jonathan Chait wrote in New York magazine: "Trump admits he calls all negative news 'fake'." "In a tweet this morning, Trump casually opened a window into the source code for his method of identifying liberal media bias. Anything that's negative is, by definition, fake." Philip Bump wrote in The Washington Post: "The important thing in that tweet ... is that he makes explicit his view of what constitutes fake news. It's negative news. Negative. (Fake.)" In an interview with Lesley Stahl, before the cameras were turned on, Trump explained why he attacks the press: "You know why I do it? I do it to discredit you all and demean you all so that when you write negative stories about me no one will believe you."

Author and literary critic Michiko Kakutani has described developments in the right-wing media and websites:

"Fox News and the planetary system of right-wing news sites that would orbit it and, later, Breitbart, were particularly adept at weaponizing such arguments and exploiting the increasingly partisan fervor animating the Republican base: They accused the media establishment of 'liberal bias', and substituted their own right-wing views as 'fair and balanced'—a redefinition of terms that was a harbinger of Trump's hijacking of 'fake news' to refer not to alt-right conspiracy theories and Russian troll posts, but to real news that he perceived as inconvenient or a threat to himself."

In September 2018, National Public Radio noted that Trump has expanded his use of the terms "fake" and "phony" to "an increasingly wide variety of things he doesn't like": "The range of things Trump is declaring fake is growing too. Last month he tweeted about 'fake books,' 'the fake dossier,' 'fake CNN,' and he added a new claim: that Google search results are 'RIGGED' to mostly show only negative stories about him." They graphed his expanding use in columns labeled "Fake news", "Fake (other)" and "Phony".

By country

Europe

Austria

Politicians in Austria dealt with the impact of fake news and its spread on social media after the 2016 presidential campaign in the country. In December 2016, a court in Austria issued an injunction against Facebook Europe, mandating that it block negative postings related to Eva Glawischnig-Piesczek, Austrian Green Party Chairwoman. According to The Washington Post, the postings to Facebook about her "appeared to have been spread via a fake profile" and directed derogatory epithets towards the Austrian politician. The derogatory postings were likely created by the same fake profile that had previously been used to attack Alexander Van der Bellen, who won the election for President of Austria.

Belgium

In 2006, French-speaking broadcaster RTBF aired a fictional breaking news special reporting that Belgium's Flemish Region had proclaimed independence. Staged footage of the royal family evacuating and the Belgian flag being lowered from a pole was made to add credence to the report. It was not until 30 minutes into the report that a sign stating "Fiction" appeared on screen. The RTBF journalist who created the hoax said the purpose was to demonstrate the magnitude of the country's situation and what might follow if a partition of Belgium really were to happen.

Czech Republic

Fake news outlets in the Czech Republic redistribute news in Czech and English originally produced by Russian sources. Czech president Miloš Zeman has been supporting media outlets accused of spreading fake news.

The Centre Against Terrorism and Hybrid Threats (CTHH) is a unit of the Ministry of the Interior of the Czech Republic primarily aimed at countering disinformation, fake news, hoaxes and foreign propaganda. The CTHH started operations on January 1, 2017. It has been criticized by Czech President Miloš Zeman, who said: "We don't need censorship. We don't need thought police. We don't need a new agency for press and information as long as we want to live in a free and democratic society."

In 2017, media activists started the website Konspiratori.cz, which maintains a list of conspiracy and fake news outlets in Czech.

European Union

In 2018, the European Commission introduced a first voluntary code of practice on disinformation. In 2022, this will become a strengthened co-regulation scheme, with responsibility shared between the regulators and the companies that are signatories to the code. It will complement the earlier Digital Services Act agreed by the 27-country European Union, which already includes a section on combating disinformation.

Finland

Officials from 11 countries met in Helsinki in November 2016 and planned the formation of a center to combat disinformation cyber-warfare, which includes the spread of fake news on social media. The center was planned to be located in Helsinki and to combine efforts from 10 countries, including Sweden, Germany, Finland and the U.S. Juha Sipilä, Prime Minister of Finland from 2015 to 2019, planned to address the topic of the center in spring 2017 with a motion before Parliament.

Deputy Secretary of State for EU Affairs Jori Arvonen said cyber-warfare, such as hybrid cyber-warfare intrusions into Finland from Russia and the Islamic State, became an increasing problem in 2016. Arvonen cited examples including online fake news, disinformation, and the "little green men" of the Russo-Ukrainian War.

France

During the ten-year period preceding 2016, France saw an increase in the popularity of far-right alternative news sources called the fachosphere ("facho" referring to fascist), known as the extreme right on the Internet. According to sociologist Antoine Bevort, citing data from Alexa Internet rankings, the most consulted political websites in France in 2016 included Égalité et Réconciliation, François Desouche, and Les Moutons Enragés. These sites increased skepticism towards mainstream media from both left and right perspectives.

In September 2016, the country faced controversy regarding fake websites providing false information about abortion. The National Assembly moved forward with intentions to ban such fake sites. Laurence Rossignol, women's minister for France, told parliament that although the fake sites look neutral, they were in fact specifically intended to give women false information.

2017 presidential election. France saw an uptick in disinformation and propaganda, primarily in the midst of election cycles. A study looking at the diffusion of political news during the 2017 presidential election cycle suggests that one in four links shared on social media came from sources that actively contest traditional media narratives. Facebook deleted 30,000 Facebook accounts in France associated with fake political information.

In April 2017, Emmanuel Macron's presidential campaign was attacked by fake news articles more than the campaigns of conservative candidate Marine Le Pen and socialist candidate Benoît Hamon. One of the fake articles even announced that Marine Le Pen had won the presidency before the people of France had voted. Macron's professional and private emails, as well as memos, contracts, and accounting documents, were posted on a file-sharing website. The leaked documents were mixed with fake ones on social media in an attempt to sway the upcoming presidential election. Macron said he would combat fake news of the sort that had been spread during his election campaign.

Initially, the leak was attributed to APT28, a group tied to Russia's GRU military intelligence directorate. However, the head of the French cyber-security agency, ANSSI, later said that there was no evidence that the hack leading to the leaks had anything to do with Russia, saying that the attack was so simple that "we can imagine that it was a person who did this alone. They could be in any country."

Germany

German Chancellor Angela Merkel lamented the problem of fraudulent news reports in a November 2016 speech, days after announcing her campaign for a fourth term as leader of her country. In a speech to the German parliament, Merkel was critical of such fake sites, saying they harmed political discussion. Merkel called attention to the need for government to deal with Internet trolls, bots, and fake news websites. She warned that such fraudulent news websites were a force increasing the power of populist extremism. Merkel called fraudulent news a growing phenomenon that might need to be regulated in the future.

Bruno Kahl, head of Germany's foreign intelligence agency, the Federal Intelligence Service, warned of the potential for cyberattacks by Russia in the 2017 German election. He said the cyberattacks would take the form of the intentional spread of disinformation. Kahl said the goal was to increase chaos in political debates. Hans-Georg Maassen, chief of Germany's domestic intelligence agency, the Federal Office for the Protection of the Constitution, said sabotage by Russian intelligence was a present threat to German information security. German government officials and security experts later said there was no Russian interference during the 2017 German federal election.

The German term Lügenpresse, or lying press, has been used since the 19th century, and specifically during World War One, as a strategy to attack news spread by political opponents.

The award-winning German journalist Claas Relotius resigned from Der Spiegel in 2018 after admitting numerous instances of journalistic fraud.

In early April 2020, during the COVID-19 pandemic, Berlin politician Andreas Geisel alleged that a shipment of 200,000 N95 masks that Berlin had ordered from American producer 3M's China facility had been intercepted in Bangkok and diverted to the United States. Berlin police president Barbara Slowik stated that she believed "this is related to the US government's export ban." However, Berlin police confirmed that the shipment had not been seized by U.S. authorities, but was said to have simply been bought at a better price, widely believed to be from a German dealer or China. This revelation outraged the Berlin opposition, whose CDU parliamentary group leader Burkard Dregger accused Geisel of "deliberately misleading Berliners" in order "to cover up its own inability to obtain protective equipment". FDP interior expert Marcel Luthe said "Big names in international politics like Berlin's senator Geisel are blaming others and telling US piracy to serve anti-American clichés." Politico Europe reported that "the Berliners are taking a page straight out of the Trump playbook and not letting facts get in the way of a good story."

Hungary

Hungary's illiberal and populist prime minister Viktor Orbán has cast George Soros, a financier, philanthropist, and Hungarian-born Holocaust survivor, as the mastermind of a plot to undermine the country's sovereignty, replace native Hungarians with immigrants, and destroy traditional values. This propaganda technique, together with the anti-Semitism still present in the country, seems to appeal to his right-wing voters: it mobilizes them by sowing fear in society and creating an enemy image, enabling Orbán to present himself as the protector of the nation against this illusory enemy.

Italy

Journalists must be registered with the Ordine Dei Giornalisti (ODG) (transl.: Order of Journalists) and respect its disciplinary and training obligations, to guarantee "correct and truthful information, intended as right of individuals and of the community".

Under certain circumstances, spreading fake news may constitute a criminal offence under the Italian penal code.

Since 2018, it has been possible to report fake news directly on the Polizia di Stato website.

The phenomenon is monitored by the DIS, supported by AISE and AISI.

Netherlands

In March 2018, the European Union's East StratCom Task Force compiled a list dubbed a "hall of shame" of articles with suspected Kremlin attempts to influence political decisions. However, controversy arose when three Dutch media outlets claimed they had been wrongfully singled out because of quotes attributed to people with non-mainstream views. The news outlets included ThePostOnline, GeenStijl, and De Gelderlander. All three were flagged for publishing articles critical of Ukrainian policies, and none received any forewarning or opportunity to appeal beforehand. This incident has contributed to the growing issue of what defines news as fake, and how freedoms of press and speech can be protected during attempts to curb the spread of false news.

Poland

Polish historian Jerzy Targalski noted fake news websites had infiltrated Poland through anti-establishment and right-wing sources that copied content from Russia Today. Targalski observed there existed about 20 specific fake news websites in Poland that spread Russian disinformation in the form of fake news. One example cited was fake news that Ukraine announced the Polish city of Przemyśl as occupied Polish land.

Poland's anti-EU Law and Justice (PiS) government has been accused of spreading "illiberal disinformation" to undermine public confidence in the European Union. Maria Snegovaya of Columbia University said: "The true origins of this phenomenon are local. The policies of Fidesz and Law and Justice have a lot in common with Putin's own policies."

Some mainstream outlets have long been accused of fabricating half-true or outright false information. In 2010, TVN, one of the popular TV stations, attributed to Jarosław Kaczyński (then an opposition leader) the words "there will be times, when true Poles will come to the power". However, Kaczyński never uttered those words in the speech in question.

Romania

On March 16, 2020, Romanian President Klaus Iohannis signed an emergency decree, giving authorities the power to remove, report or close websites spreading "fake news" about the COVID-19 pandemic, with no opportunity to appeal.

Russia