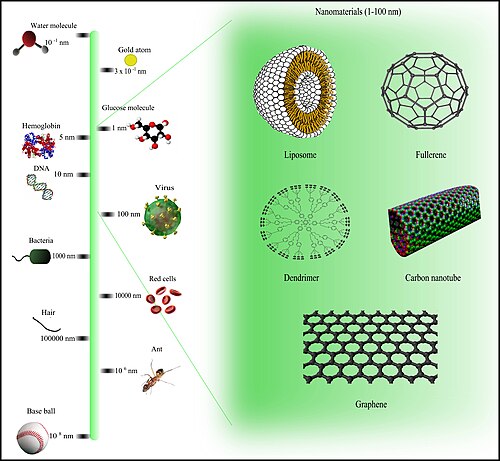

Nanotechnology is the manipulation of matter with at least one dimension sized from 1 to 100 nanometers (nm). At this scale, commonly known as the nanoscale, surface area and quantum mechanical effects become important in describing properties of matter. This definition of nanotechnology includes all types of research and technologies that deal with these special properties. It is common to see the plural form "nanotechnologies" as well as "nanoscale technologies" to refer to research and applications whose common trait is scale. An earlier understanding of nanotechnology referred to the particular technological goal of precisely manipulating atoms and molecules for fabricating macroscale products, now referred to as molecular nanotechnology.

Nanotechnology defined by scale includes fields of science such as surface science, organic chemistry, molecular biology, semiconductor physics, energy storage, engineering, microfabrication, and molecular engineering. The associated research and applications range from extensions of conventional device physics to molecular self-assembly, from developing new materials with dimensions on the nanoscale to direct control of matter on the atomic scale.

Nanotechnology may be able to create new materials and devices with diverse applications, such as in nanomedicine, nanoelectronics, agricultural sectors, biomaterials, energy production, and consumer products. However, nanotechnology raises issues, including concerns about the toxicity and environmental impact of nanomaterials, and their potential effects on global economics, as well as various doomsday scenarios. These concerns have led to a debate among advocacy groups and governments on whether special regulation of nanotechnology is warranted.

Origins

The concepts that seeded nanotechnology were first discussed in 1959 by physicist Richard Feynman in his talk There's Plenty of Room at the Bottom, in which he described the possibility of synthesis via direct manipulation of atoms.

The term "nano-technology" was first used by Norio Taniguchi in 1974, though it was not widely known. Inspired by Feynman's concepts, K. Eric Drexler used the term "nanotechnology" in his 1986 book Engines of Creation: The Coming Era of Nanotechnology, which achieved popular success and helped thrust nanotechnology into the public sphere. In it he proposed the idea of a nanoscale "assembler" that would be able to build a copy of itself and of other items of arbitrary complexity with atom-level control. Also in 1986, Drexler co-founded The Foresight Institute to increase public awareness and understanding of nanotechnology concepts and implications.

The emergence of nanotechnology as a field in the 1980s occurred through the convergence of Drexler's theoretical and public work, which developed and popularized a conceptual framework, and experimental advances that drew additional attention to the prospects. In the 1980s, two breakthroughs helped to spark the growth of nanotechnology. First, the invention of the scanning tunneling microscope in 1981 enabled visualization of individual atoms and bonds, and was successfully used to manipulate individual atoms in 1989. The microscope's developers Gerd Binnig and Heinrich Rohrer at IBM Zurich Research Laboratory received a Nobel Prize in Physics in 1986. Binnig, Quate and Gerber also invented the analogous atomic force microscope that year.

Second, fullerenes (buckyballs) were discovered in 1985 by Harry Kroto, Richard Smalley, and Robert Curl, who together won the 1996 Nobel Prize in Chemistry. C60 was not initially described as nanotechnology; the term was used regarding subsequent work with related carbon nanotubes (sometimes called graphene tubes or Bucky tubes) which suggested potential applications for nanoscale electronics and devices. The discovery of carbon nanotubes is attributed to Sumio Iijima of NEC in 1991, for which Iijima won the inaugural 2008 Kavli Prize in Nanoscience.

In the early 2000s, the field garnered increased scientific, political, and commercial attention that led to both controversy and progress. Controversies emerged regarding the definitions and potential implications of nanotechnologies, exemplified by the Royal Society's report on nanotechnology. Challenges were raised regarding the feasibility of applications envisioned by advocates of molecular nanotechnology, which culminated in a public debate between Drexler and Smalley in 2001 and 2003.

Meanwhile, commercial products based on advancements in nanoscale technologies began emerging. These products were limited to bulk applications of nanomaterials and did not involve atomic control of matter. Some examples include the Silver Nano platform for using silver nanoparticles as an antibacterial agent, nanoparticle-based sunscreens, carbon fiber strengthening using silica nanoparticles, and carbon nanotubes for stain-resistant textiles.

Governments moved to promote and fund research into nanotechnology, such as the American National Nanotechnology Initiative, which formalized a size-based definition of nanotechnology and established research funding, and in Europe via the European Framework Programmes for Research and Technological Development.

By the mid-2000s scientific attention began to flourish. Nanotechnology roadmaps centered on atomically precise manipulation of matter and discussed existing and projected capabilities, goals, and applications.

Fundamental concepts

Nanotechnology is the science and engineering of functional systems at the molecular scale. In its original sense, nanotechnology refers to the projected ability to construct items from the bottom up making complete, high-performance products.

One nanometer (nm) is one billionth, or 10⁻⁹, of a meter. By comparison, typical carbon–carbon bond lengths, or the spacing between these atoms in a molecule, are in the range 0.12–0.15 nm, and DNA's diameter is around 2 nm. On the other hand, the smallest cellular life forms, the bacteria of the genus Mycoplasma, are around 200 nm in length. By convention, nanotechnology is taken as the scale range 1 to 100 nm, following the definition used by the American National Nanotechnology Initiative. The lower limit is set by the size of atoms (hydrogen has the smallest atoms, which have an approximately 0.25 nm kinetic diameter). The upper limit is more or less arbitrary, but is around the size below which phenomena not observed in larger structures start to become apparent and can be made use of. These phenomena make nanotechnology distinct from devices that are merely miniaturized versions of an equivalent macroscopic device; such devices are on a larger scale and come under the description of microtechnology.

To put that scale in another context, the comparative size of a nanometer to a meter is the same as that of a marble to the size of the earth.
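The marble comparison can be checked with a few lines of arithmetic. The sketch below is illustrative only: the marble diameter (about 1.3 cm) and Earth's mean diameter (about 12,742 km) are assumed values that do not come from the text, and `scale_ratio` is a hypothetical helper name.

```python
# Assumed illustrative values (not from the article):
# a ~1.3 cm marble and Earth's mean diameter of ~12,742 km.
NANOMETER_M = 1e-9
METER_M = 1.0
MARBLE_DIAMETER_M = 0.013
EARTH_DIAMETER_M = 1.2742e7


def scale_ratio(small_m: float, large_m: float) -> float:
    """Return how many times smaller `small_m` is than `large_m`, as a fraction."""
    return small_m / large_m


nm_to_m = scale_ratio(NANOMETER_M, METER_M)
marble_to_earth = scale_ratio(MARBLE_DIAMETER_M, EARTH_DIAMETER_M)

# Both ratios come out on the order of one part in a billion.
print(f"nanometer : meter = {nm_to_m:.1e}")
print(f"marble    : Earth = {marble_to_earth:.1e}")
```

With these assumed sizes the two ratios agree to within a few percent, which is all the analogy is meant to convey.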

Two main approaches are used in nanotechnology. In the "bottom-up" approach, materials and devices are built from molecular components which assemble themselves chemically by principles of molecular recognition. In the "top-down" approach, nano-objects are constructed from larger entities without atomic-level control.

Areas of physics such as nanoelectronics, nanomechanics, nanophotonics and nanoionics have evolved to provide nanotechnology's scientific foundation.

Larger to smaller: a materials perspective

Several phenomena become pronounced as system size decreases. These include statistical mechanical effects, as well as quantum mechanical effects, for example, the "quantum size effect" in which the electronic properties of solids alter along with reductions in particle size. Such effects do not apply at macro or micro dimensions. However, quantum effects can become significant when nanometer scales are reached. Additionally, physical (mechanical, electrical, optical, etc.) properties change versus macroscopic systems. One example is the increase in surface area to volume ratio altering mechanical, thermal, and catalytic properties of materials. Diffusion and reactions can be different as well. Systems with fast ion transport are referred to as nanoionics. The mechanical properties of nanosystems are of interest in research.
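The growth of the surface area to volume ratio at small sizes can be illustrated with an idealized sphere, for which the ratio is 6/d for diameter d. The following is a minimal sketch under that assumption; real particles are not perfect spheres, and the function name and the chosen diameters are illustrative rather than taken from the text.

```python
import math


def sphere_surface_to_volume(diameter_m: float) -> float:
    """Surface-area-to-volume ratio (in 1/m) of an idealized sphere."""
    radius = diameter_m / 2
    area = 4 * math.pi * radius**2
    volume = (4 / 3) * math.pi * radius**3
    return area / volume  # algebraically equal to 6 / diameter_m


# Illustrative diameters: a 1 mm grain, a 1 micrometer particle, a 10 nm particle.
for label, d in [("1 mm", 1e-3), ("1 um", 1e-6), ("10 nm", 10e-9)]:
    print(f"{label:>6}: {sphere_surface_to_volume(d):.1e} per meter")
```

Because the ratio scales as 1/d, shrinking a particle from 1 mm to 10 nm raises its surface area per unit volume by a factor of 100,000, which is one reason surface-driven properties such as catalytic activity change so strongly at the nanoscale.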

Simple to complex: a molecular perspective

Modern synthetic chemistry can prepare small molecules of almost any structure. These methods are used to manufacture a wide variety of useful chemicals such as pharmaceuticals or commercial polymers. This ability raises the question of extending this kind of control to the next-larger level, seeking methods to assemble single molecules into supramolecular assemblies consisting of many molecules arranged in a well-defined manner.

These approaches use the concepts of molecular self-assembly and/or supramolecular chemistry so that components automatically arrange themselves into a useful conformation through a bottom-up approach. The concept of molecular recognition is important: molecules can be designed so that a specific configuration or arrangement is favored due to non-covalent intermolecular forces. The Watson–Crick basepairing rules are a direct result of this, as is the specificity of an enzyme targeting a single substrate, or the specific folding of a protein. Thus, components can be designed to be complementary and mutually attractive so that they make a more complex and useful whole.

Such bottom-up approaches should be capable of producing devices in parallel and be much cheaper than top-down methods, but could potentially be overwhelmed as the size and complexity of the desired assembly increases. Most useful structures require complex and thermodynamically unlikely arrangements of atoms. Nevertheless, many examples of self-assembly based on molecular recognition exist in biology, most notably Watson–Crick basepairing and enzyme-substrate interactions.

Molecular nanotechnology: a long-term view

Molecular nanotechnology, sometimes called molecular manufacturing, concerns engineered nanosystems (nanoscale machines) operating on the molecular scale. Molecular nanotechnology is especially associated with molecular assemblers, machines that can produce a desired structure or device atom-by-atom using the principles of mechanosynthesis. Manufacturing in the context of productive nanosystems is not related to conventional technologies used to manufacture nanomaterials such as carbon nanotubes and nanoparticles.

When Drexler independently coined and popularized the term "nanotechnology", he envisioned manufacturing technology based on molecular machine systems. The premise was that molecular-scale biological analogies of traditional machine components demonstrated molecular machines were possible: biology was full of examples of sophisticated, stochastically optimized biological machines.

Drexler and other researchers have proposed that advanced nanotechnology ultimately could be based on mechanical engineering principles, namely, a manufacturing technology based on the mechanical functionality of these components (such as gears, bearings, motors, and structural members) that would enable programmable, positional assembly to atomic specification. The physics and engineering performance of exemplar designs were analyzed in Drexler's book Nanosystems: Molecular Machinery, Manufacturing, and Computation.

In general, assembling devices on the atomic scale requires positioning atoms on other atoms of comparable size and stickiness. Carlo Montemagno's view is that future nanosystems will be hybrids of silicon technology and biological molecular machines. Richard Smalley argued that mechanosynthesis was impossible due to difficulties in mechanically manipulating individual molecules.

This led to an exchange of letters in the American Chemical Society publication Chemical & Engineering News in 2003. Though biology clearly demonstrates that molecular machines are possible, non-biological molecular machines remained in their infancy. Alex Zettl and colleagues at Lawrence Berkeley Laboratories and UC Berkeley constructed at least three molecular devices whose motion is controlled via changing voltage: a nanotube nanomotor, a molecular actuator, and a nanoelectromechanical relaxation oscillator.

Ho and Lee at Cornell University in 1999 used a scanning tunneling microscope to move an individual carbon monoxide molecule (CO) to an individual iron atom (Fe) sitting on a flat silver crystal and chemically bound the CO to the Fe by applying a voltage.

Research

Nanomaterials

Many areas of science develop or study materials having unique properties arising from their nanoscale dimensions.

- Interface and colloid science produced many materials that may be useful in nanotechnology, such as carbon nanotubes and other fullerenes, and various nanoparticles and nanorods. Nanomaterials with fast ion transport are related to nanoionics and nanoelectronics.

- Nanoscale materials can be used for bulk applications; most commercial applications of nanotechnology are of this flavor.

- Progress has been made in using these materials for medical applications, including tissue engineering, drug delivery, antibacterials and biosensors.

- Nanoscale materials such as nanopillars are used in solar cells.

- Semiconductor nanoparticles are incorporated in products such as display technology, lighting, solar cells and biological imaging; see quantum dots.

Bottom-up approaches

The bottom-up approach seeks to arrange smaller components into more complex assemblies.

- DNA nanotechnology utilizes Watson–Crick basepairing to construct well-defined structures out of DNA and other nucleic acids.

- Approaches from the field of "classical" chemical synthesis (inorganic and organic synthesis) aim at designing molecules with well-defined shape (e.g. bis-peptides).

- More generally, molecular self-assembly seeks to use concepts of supramolecular chemistry, and molecular recognition in particular, to cause single-molecule components to automatically arrange themselves into some useful conformation.

- Atomic force microscope tips can be used as a nanoscale "write head" to deposit a chemical upon a surface in a desired pattern in a process called dip-pen nanolithography. This technique fits into the larger subfield of nanolithography.

- Molecular-beam epitaxy allows for bottom-up assemblies of materials, most notably semiconductor materials commonly used in chip and computing applications, stacks, gating, and nanowire lasers.

Top-down approaches

These seek to create smaller devices by using larger ones to direct their assembly.

- Many technologies that descended from conventional solid-state silicon methods for fabricating microprocessors are capable of creating features smaller than 100 nm. Giant magnetoresistance-based hard drives already on the market fit this description, as do atomic layer deposition (ALD) techniques. Peter Grünberg and Albert Fert received the Nobel Prize in Physics in 2007 for their discovery of giant magnetoresistance and contributions to the field of spintronics.

- Solid-state techniques can be used to create nanoelectromechanical systems or NEMS, which are related to microelectromechanical systems or MEMS.

- Focused ion beams can directly remove material, or even deposit material when suitable precursor gases are applied at the same time. For example, this technique is used routinely to create sub-100 nm sections of material for analysis in transmission electron microscopy.

- Atomic force microscope tips can be used as a nanoscale "write head" to deposit a resist, which is then followed by an etching process to remove material in a top-down method.

Functional approaches

Functional approaches seek to develop useful components without regard to how they might be assembled.

- Magnetic assembly is used for the synthesis of anisotropic superparamagnetic materials such as magnetic nanochains.

- Molecular scale electronics seeks to develop molecules with useful electronic properties. These could be used as single-molecule components in a nanoelectronic device, such as rotaxane.

- Synthetic chemical methods can be used to create synthetic molecular motors, such as in a so-called nanocar.

Biomimetic approaches

- Bionics or biomimicry seeks to apply biological methods and systems found in nature to the study and design of engineering systems and modern technology. Biomineralization is one example of the systems studied.

- Bionanotechnology is the use of biomolecules for applications in nanotechnology, including the use of viruses and lipid assemblies. Nanocellulose, a nanopolymer often used for bulk-scale applications, has gained interest owing to its useful properties such as abundance, high aspect ratio, good mechanical properties, renewability, and biocompatibility.

Speculative

These subfields seek to anticipate what inventions nanotechnology might yield, or attempt to propose an agenda along which inquiry could progress. These often take a big-picture view, with more emphasis on societal implications than engineering details.

- Molecular nanotechnology is a proposed approach that involves manipulating single molecules in finely controlled, deterministic ways. This is more theoretical than the other subfields, and many of its proposed techniques are beyond current capabilities.

- Nanorobotics considers self-sufficient machines operating at the nanoscale. There are hopes for applying nanorobots in medicine. Nevertheless, progress on innovative materials and patented methodologies has been demonstrated.

- Productive nanosystems are "systems of nanosystems" that could produce atomically precise parts for other nanosystems, not necessarily using novel nanoscale-emergent properties, but well-understood fundamentals of manufacturing. Because of the discrete (i.e. atomic) nature of matter and the possibility of exponential growth, this stage could form the basis of another industrial revolution. Mihail Roco proposed four states of nanotechnology that seem to parallel the technical progress of the Industrial Revolution, progressing from passive nanostructures to active nanodevices to complex nanomachines and ultimately to productive nanosystems.

- Programmable matter seeks to design materials whose properties can be easily, reversibly and externally controlled through a fusion of information science and materials science.

- Due to the popularity and media exposure of the term nanotechnology, the words picotechnology and femtotechnology have been coined in analogy to it, although these are used only informally.

Dimensionality in nanomaterials

Nanomaterials can be classified as 0D, 1D, 2D and 3D nanomaterials. Dimensionality plays a major role in determining the characteristics of nanomaterials, including physical, chemical, and biological characteristics. With the decrease in dimensionality, an increase in surface-to-volume ratio is observed. This indicates that smaller dimensional nanomaterials have higher surface area compared to 3D nanomaterials. Two-dimensional (2D) nanomaterials have been extensively investigated for electronic, biomedical, drug delivery and biosensor applications.
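The text does not spell out how the 0D to 3D labels are assigned. A minimal sketch, assuming the commonly used convention that the label counts how many of a structure's three dimensions extend beyond the nanoscale (taken here as more than 100 nm), is shown below. The function name, the threshold handling, and the example sizes are all illustrative assumptions.

```python
NANOSCALE_UPPER_LIMIT_NM = 100.0  # upper bound of the 1-100 nm convention


def classify_dimensionality(size_nm: tuple[float, float, float]) -> str:
    """Label a structure 0D-3D by counting dimensions larger than the nanoscale.

    Assumed convention (not stated in the article): 0D means all three
    dimensions are nanoscale (e.g. a nanoparticle); 3D means none are.
    """
    extended = sum(1 for d in size_nm if d > NANOSCALE_UPPER_LIMIT_NM)
    return f"{extended}D"


# Hypothetical example structures (sizes in nm).
print(classify_dimensionality((5, 5, 5)))           # nanoparticle-like
print(classify_dimensionality((2, 2, 5000)))        # nanowire-like
print(classify_dimensionality((1, 5000, 5000)))     # nanosheet-like
print(classify_dimensionality((5000, 5000, 5000)))  # bulk-like
```

Under this convention the four calls yield 0D, 1D, 2D and 3D respectively; other sources may draw the boundaries slightly differently.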

Tools and techniques

Scanning microscopes

The atomic force microscope (AFM) and the scanning tunneling microscope (STM) are two versions of scanning probes that are used for nano-scale observation. Other types of scanning probe microscopy have much higher resolution, since they are not limited by the wavelengths of sound or light.

The tip of a scanning probe can also be used to manipulate nanostructures (positional assembly). Feature-oriented scanning may be a promising way to implement these nano-scale manipulations via an automatic algorithm. However, this is still a slow process because of the low velocity of the microscope.

The top-down approach anticipates nanodevices that must be built piece by piece in stages, much as manufactured items are made. Scanning probe microscopy is an important technique both for characterization and synthesis. Atomic force microscopes and scanning tunneling microscopes can be used to look at surfaces and to move atoms around. By designing different tips for these microscopes, they can be used for carving out structures on surfaces and to help guide self-assembling structures. By using, for example, a feature-oriented scanning approach, atoms or molecules can be moved around on a surface with scanning probe microscopy techniques.

Lithography

Various techniques of lithography, such as optical lithography, X-ray lithography, dip pen lithography, electron beam lithography or nanoimprint lithography offer top-down fabrication techniques where a bulk material is reduced to a nano-scale pattern.

Another group of nano-technological techniques includes those used for fabrication of nanotubes and nanowires, those used in semiconductor fabrication such as deep ultraviolet lithography, electron beam lithography, focused ion beam machining, nanoimprint lithography, atomic layer deposition, and molecular vapor deposition, and further including molecular self-assembly techniques such as those employing di-block copolymers.

Bottom-up

In contrast, bottom-up techniques build or grow larger structures atom by atom or molecule by molecule. These techniques include chemical synthesis, self-assembly and positional assembly. Dual-polarization interferometry is one tool suitable for characterization of self-assembled thin films. Another variation of the bottom-up approach is molecular-beam epitaxy or MBE. Researchers at Bell Telephone Laboratories including John R. Arthur, Alfred Y. Cho, and Art C. Gossard developed and implemented MBE as a research tool in the late 1960s and 1970s. Samples made by MBE were key to the discovery of the fractional quantum Hall effect for which the 1998 Nobel Prize in Physics was awarded. MBE lays down atomically precise layers of atoms and, in the process, builds up complex structures. Important for research on semiconductors, MBE is also widely used to make samples and devices for the newly emerging field of spintronics.

Therapeutic products based on responsive nanomaterials, such as the highly deformable, stress-sensitive transfersome vesicles, are approved for human use in some countries.

Applications

As of August 21, 2008, the Project on Emerging Nanotechnologies estimated that over 800 manufacturer-identified nanotech products were publicly available, with new ones hitting the market at a pace of 3–4 per week. Most applications are "first generation" passive nanomaterials that include titanium dioxide in sunscreen, cosmetics, surface coatings, and some food products; carbon allotropes used to produce gecko tape; silver in food packaging, clothing, disinfectants, and household appliances; zinc oxide in sunscreens and cosmetics, surface coatings, paints and outdoor furniture varnishes; and cerium oxide as a fuel catalyst.

In the electric car industry, single wall carbon nanotubes (SWCNTs) address key lithium-ion battery challenges, including energy density, charge rate, service life, and cost. SWCNTs connect electrode particles during the charge/discharge process, preventing premature battery degradation. Their exceptional ability to wrap active material particles enhances electrical conductivity and physical properties, setting them apart from multi-walled carbon nanotubes and carbon black.

Further applications allow tennis balls to last longer, golf balls to fly straighter, and bowling balls to become more durable. Trousers and socks have been infused with nanotechnology to last longer and stay cooler in the summer. Bandages are infused with silver nanoparticles to heal cuts faster. Video game consoles and personal computers may become cheaper, faster, and contain more memory thanks to nanotechnology. Nanotechnology is also used to build structures for on-chip computing with light, for example on-chip optical quantum information processing, and picosecond transmission of information.

Nanotechnology may have the ability to make existing medical applications cheaper and easier to use in places like doctors' offices and homes. Cars use nanomaterials in ways that allow car parts to require fewer metals during manufacturing and less fuel to operate in the future.

Nanoencapsulation involves the enclosure of active substances within carriers. Typically, these carriers offer advantages, such as enhanced bioavailability, controlled release, targeted delivery, and protection of the encapsulated substances. In the medical field, nanoencapsulation plays a significant role in drug delivery. It facilitates more efficient drug administration, reduces side effects, and increases treatment effectiveness. Nanoencapsulation is particularly useful for improving the bioavailability of poorly water-soluble drugs, enabling controlled and sustained drug release, and supporting the development of targeted therapies. These features collectively contribute to advancements in medical treatments and patient care.

Nanotechnology may play a role in tissue engineering. When designing scaffolds, researchers attempt to mimic the nanoscale features of a cell's microenvironment to direct its differentiation down a suitable lineage. For example, when creating scaffolds to support bone growth, researchers may mimic osteoclast resorption pits.

Researchers used DNA origami-based nanobots capable of carrying out logic functions to target drug delivery in cockroaches.

A nano bible (a 0.5 mm² silicon chip) was created by the Technion in order to increase youth interest in nanotechnology.

Implications

One concern is the effect that industrial-scale manufacturing and use of nanomaterials will have on human health and the environment, as suggested by nanotoxicology research. For these reasons, some groups advocate that nanotechnology be regulated. However, regulation might stifle scientific research and the development of beneficial innovations. Public health research agencies, such as the National Institute for Occupational Safety and Health, research potential health effects stemming from exposures to nanoparticles.

Nanoparticle products may have unintended consequences. Researchers have discovered that bacteriostatic silver nanoparticles used in socks to reduce foot odor are released in the wash. These particles are then flushed into the wastewater stream and may destroy bacteria that are critical components of natural ecosystems, farms, and waste treatment processes.

Public deliberations on risk perception in the US and UK carried out by the Center for Nanotechnology in Society found that participants were more positive about nanotechnologies for energy applications than for health applications, with health applications raising moral and ethical dilemmas such as cost and availability.

Experts, including director of the Woodrow Wilson Center's Project on Emerging Nanotechnologies David Rejeski, testified that commercialization depends on adequate oversight, risk research strategy, and public engagement. As of 206 Berkeley, California was the only US city to regulate nanotechnology.

Health and environmental concerns

Inhaling airborne nanoparticles and nanofibers may contribute to pulmonary diseases, e.g. fibrosis. Researchers found that when rats breathed in nanoparticles, the particles settled in the brain and lungs, which led to significant increases in biomarkers for inflammation and stress response. Researchers also found that nanoparticles induce skin aging through oxidative stress in hairless mice.

A two-year study at UCLA's School of Public Health found lab mice consuming nano-titanium dioxide showed DNA and chromosome damage to a degree "linked to all the big killers of man, namely cancer, heart disease, neurological disease and aging".

A Nature Nanotechnology study suggested that some forms of carbon nanotubes could be as harmful as asbestos if inhaled in sufficient quantities. Anthony Seaton of the Institute of Occupational Medicine in Edinburgh, Scotland, who contributed to the article on carbon nanotubes, said "We know that some of them probably have the potential to cause mesothelioma. So those sorts of materials need to be handled very carefully." In the absence of specific regulation forthcoming from governments, Paull and Lyons (2008) have called for an exclusion of engineered nanoparticles in food. A newspaper article reports that workers in a paint factory developed serious lung disease and nanoparticles were found in their lungs.

Regulation

Calls for tighter regulation of nanotechnology have accompanied a debate related to human health and safety risks. Some regulatory agencies cover some nanotechnology products and processes – by "bolting on" nanotechnology to existing regulations – leaving clear gaps. Davies proposed a road map describing steps to deal with these shortcomings.

Andrew Maynard, chief science advisor to the Woodrow Wilson Center's Project on Emerging Nanotechnologies, reported insufficient funding for human health and safety research, and as a result inadequate understanding of human health and safety risks. Some academics called for stricter application of the precautionary principle, slowing marketing approval, enhanced labelling and additional safety data.

A Royal Society report identified a risk of nanoparticles or nanotubes being released during disposal, destruction and recycling, and recommended that "manufacturers of products that fall under extended producer responsibility regimes such as end-of-life regulations publish procedures outlining how these materials will be managed to minimize possible human and environmental exposure".