Artificial life (often abbreviated ALife or A-Life) is a field of study wherein researchers examine systems related to natural life, its processes, and its evolution, through the use of simulations with computer models, robotics, and biochemistry.[1] The discipline was named by Christopher Langton, an American theoretical biologist, in 1986.[2] There are three main kinds of alife,[3] named for their approaches: soft,[4] from software; hard,[5] from hardware; and wet, from biochemistry. Artificial life researchers study traditional biology by trying to recreate aspects of biological phenomena.

A Braitenberg vehicle simulation, programmed in breve, an artificial life simulator

Overview

Artificial life studies the fundamental processes of living systems in artificial environments in order to gain a deeper understanding of the complex information processing that defines such systems. These topics are broad, but often include evolutionary dynamics, emergent properties of collective systems, and biomimicry, as well as related issues about the philosophy of the nature of life and the use of lifelike properties in artistic works.

Philosophy

The modeling philosophy of artificial life strongly differs from traditional modeling by studying not only "life-as-we-know-it" but also "life-as-it-might-be".[8] A traditional model of a biological system will focus on capturing its most important parameters. In contrast, an alife modeling approach will generally seek to decipher the simplest and most general principles underlying life and implement them in a simulation. The simulation then offers the possibility to analyse new and different lifelike systems.

Vladimir Georgievich Red'ko proposed to generalize this distinction to the modeling of any process, leading to the more general distinction of "processes-as-we-know-them" and "processes-as-they-could-be".[9]

At present, the commonly accepted definition of life does not consider any current alife simulations or software to be alive, and they do not constitute part of the evolutionary process of any ecosystem. However, different opinions about artificial life's potential have arisen:

- The strong alife (cf. Strong AI) position states that "life is a process which can be abstracted away from any particular medium" (John von Neumann)[citation needed]. Notably, Tom Ray declared that his program Tierra is not simulating life in a computer but synthesizing it.[10]

- The weak alife position denies the possibility of generating a "living process" outside of a chemical solution. Its researchers try instead to simulate life processes to understand the underlying mechanics of biological phenomena.

Organizations

Software-based ("soft")

Techniques

- Cellular automata were used in the early days of artificial life, and are still often used for ease of scalability and parallelization. Alife and cellular automata share a closely tied history.

- Artificial neural networks are sometimes used to model the brain of an agent. Although traditionally more of an artificial intelligence technique, neural nets can be important for simulating population dynamics of organisms that can learn. The symbiosis between learning and evolution is central to theories about the development of instincts in organisms with higher neurological complexity, as in, for instance, the Baldwin effect.
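As an illustration of the cellular automaton technique listed above, here is a minimal sketch of Conway's Game of Life, a well-known two-dimensional cellular automaton. The function name `life_step` and the set-of-coordinates representation are choices made for this example only; they are not taken from any simulator mentioned in this article.

```python
from collections import Counter


def life_step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (x, y) coordinates of live cells on an unbounded grid.
    """
    # Count, for every cell adjacent to a live cell, how many live neighbors it has.
    counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A dead cell with exactly 3 live neighbors is born;
    # a live cell with 2 or 3 live neighbors survives.
    return {
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# A "glider": a five-cell pattern that travels diagonally across the grid.
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
state = glider
for _ in range(4):
    state = life_step(state)
```

After four generations the glider reappears in its original shape, shifted by one cell along each axis. Every cell is updated from purely local information, which is part of why such models parallelize and scale easily.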

Notable simulators

This is a list of artificial life/digital organism simulators, organized by the method of creature definition.| Name | Driven By | Started | Ended |

|---|---|---|---|

| Avida | executable DNA | 1993 | ongoing |

| Neurokernel | Geppetto | 2014 | ongoing |

| Creatures | neural net/simulated biochemistry | 1996-2001 | Fandom still active to this day, some abortive attempts at new products |

| Critterding | neural net | 2005 | ongoing |

| Darwinbots | executable DNA | 2003 | ongoing |

| DigiHive | executable DNA | 2006 | 2009 |

| DOSE | executable DNA | 2012 | ongoing |

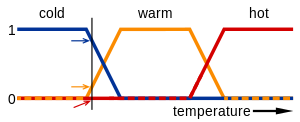

| EcoSim | Fuzzy Cognitive Map | 2009 | ongoing |

| Evolve 4.0 | executable DNA | 1996 | Prior to Nov. 2014 |

| Framsticks | executable DNA | 1996 | ongoing |

| Noble Ape | neural net | 1996 | ongoing |

| OpenWorm | Geppetto | 2011 | ongoing |

| Polyworld | neural net | 1990 | ongoing |

| Primordial Life | executable DNA | 1994 | 2003 |

| ScriptBots | executable DNA | 2010 | ongoing |

| TechnoSphere | modules | 1995 | |

| Tierra | executable DNA | 1991 | 2004 |

| 3D Virtual Creature Evolution | neural net | 2008 | NA |

Program-based

Program-based simulations contain organisms with a complex DNA language, usually Turing complete. This language is more often in the form of a computer program than actual biological DNA. Assembly derivatives are the most common languages used. An organism "lives" when its code is executed, and there are usually various methods allowing self-replication. Mutations are generally implemented as random changes to the code. Use of cellular automata is common but not required. Another example could be an artificial intelligence and multi-agent system/program.

Module-based

Individual modules are added to a creature. These modules modify the creature's behaviors and characteristics either directly, by hard coding into the simulation (leg type A increases speed and metabolism), or indirectly, through the emergent interactions between a creature's modules (leg type A moves up and down with a frequency of X, which interacts with other legs to create motion). Generally these are simulators which emphasize user creation and accessibility over mutation and evolution.

Parameter-based

Organisms are generally constructed with pre-defined and fixed behaviors that are controlled by various parameters that mutate. That is, each organism contains a collection of numbers or other finite parameters. Each parameter controls one or several aspects of an organism in a well-defined way.

Neural net–based

These simulations have creatures that learn and grow using neural nets or a close derivative. Emphasis is often, although not always, more on learning than on natural selection.

Complex systems modelling

Mathematical models of complex systems are of three types: black-box (phenomenological), white-box (mechanistic, based on first principles) and grey-box (mixtures of phenomenological and mechanistic models).[11][12] In black-box models, the individual-based (mechanistic) mechanisms of a complex dynamic system remain hidden.

Mathematical models for complex systems

Black-box models are completely nonmechanistic. They are phenomenological and ignore the composition and internal structure of a complex system. Because such a model is not transparent, the interactions of its subsystems cannot be investigated. A white-box model of a complex dynamic system has ‘transparent walls’ and directly shows the underlying mechanisms. All events at the micro-, meso- and macro-levels of a dynamic system are directly visible at every stage of the white-box model's evolution. In most cases, mathematical modelers use heavy black-box mathematical methods, which cannot produce mechanistic models of complex dynamic systems. Grey-box models are intermediate and combine black-box and white-box approaches.

Logical deterministic individual-based cellular automata model of single species population growth

Creating a white-box model of a complex system requires a priori basic knowledge of the subject being modeled. Deterministic logical cellular automata are a necessary but not sufficient condition for a white-box model. The second necessary prerequisite is a physical ontology of the object under study. White-box modeling represents an automatic hyper-logical inference from first principles, because it is based entirely on deterministic logic and an axiomatic theory of the subject. Its purpose is to derive from the basic axioms more detailed, more concrete mechanistic knowledge about the dynamics of the object under study. The need to formulate an intrinsic axiomatic system of the subject before creating its white-box model distinguishes cellular automata models of the white-box type from cellular automata models based on arbitrary logical rules. If the cellular automata rules have not been formulated from the first principles of the subject, the model may have only weak relevance to the real problem.[12]
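To make the idea of a deterministic, individual-based cellular automaton more concrete, the following is a minimal sketch of single-species spread on a lattice. The three site states, the transition rules, and the names `FREE`, `OCCUPIED`, `REGENERATING`, `step`, and `population` are illustrative assumptions for this example; they are not the axioms or rules of the cited model.

```python
# Hypothetical site states for this sketch.
FREE, OCCUPIED, REGENERATING = 0, 1, 2


def step(grid):
    """Apply one synchronous, fully deterministic update to a square torus lattice.

    Assumed rules (illustrative only):
      * an occupied site becomes regenerating (the individual lives one step);
      * a regenerating site becomes free;
      * a free site becomes occupied if any von Neumann neighbor is occupied.
    """
    n = len(grid)
    new = [[FREE] * n for _ in range(n)]
    for r in range(n):
        for c in range(n):
            state = grid[r][c]
            if state == OCCUPIED:
                new[r][c] = REGENERATING
            elif state == REGENERATING:
                new[r][c] = FREE
            else:
                # Up, down, left, right neighbors with wrap-around edges.
                neighbors = (
                    grid[(r - 1) % n][c],
                    grid[(r + 1) % n][c],
                    grid[r][(c - 1) % n],
                    grid[r][(c + 1) % n],
                )
                new[r][c] = OCCUPIED if OCCUPIED in neighbors else FREE
    return new


def population(grid):
    """Count the occupied sites, i.e. the living individuals."""
    return sum(row.count(OCCUPIED) for row in grid)


# Start from a single individual in the middle of an 11 x 11 lattice.
n = 11
grid = [[FREE] * n for _ in range(n)]
grid[n // 2][n // 2] = OCCUPIED

history = [population(grid)]
for _ in range(5):
    grid = step(grid)
    history.append(population(grid))
```

Because every rule is explicit and no randomness is involved, the state of each site can be inspected at every step, which is the sense in which such a model has "transparent walls". Whether the model is relevant to a real population still depends on deriving the rules from first principles of the subject, as the paragraph above stresses.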

Logical deterministic individual-based cellular automata model of interspecific competition for a single limited resource

Hardware-based ("hard")

Hardware-based artificial life mainly consists of robots, that is, automatically guided machines able to do tasks on their own.

Biochemical-based ("wet")

Biochemical-based life is studied in the field of synthetic biology. It involves, for example, the creation of synthetic DNA. The term "wet" is an extension of the term "wetware".

Open problems

- Generate a molecular proto-organism in vitro.

- Achieve the transition to life in an artificial chemistry in silico.

- Determine whether fundamentally novel living organizations can exist.

- Simulate a unicellular organism over its entire life cycle.

- Explain how rules and symbols are generated from physical dynamics in living systems.

- What are the potentials and limits of living systems?

- Determine what is inevitable in the open-ended evolution of life.

- Determine minimal conditions for evolutionary transitions from specific to generic response systems.

- Create a formal framework for synthesizing dynamical hierarchies at all scales.

- Determine the predictability of evolutionary consequences of manipulating organisms and ecosystems.

- Develop a theory of information processing, information flow, and information generation for evolving systems.

- How is life related to mind, machines, and culture?

- Demonstrate the emergence of intelligence and mind in an artificial living system.

- Evaluate the influence of machines on the next major evolutionary transition of life.

- Provide a quantitative model of the interplay between cultural and biological evolution.

- Establish ethical principles for artificial life.

Related subjects

- Artificial intelligence has traditionally used a top-down approach, while alife generally works from the bottom up.[15]

- Artificial chemistry started as a method within the alife community to abstract the processes of chemical reactions.

- Evolutionary algorithms are a practical application of the weak alife principle applied to optimization problems. Many optimization algorithms have been crafted which borrow from or closely mirror alife techniques. The primary difference lies in explicitly defining the fitness of an agent by its ability to solve a problem, instead of its ability to find food, reproduce, or avoid death.[citation needed]

- Multi-agent system – A multi-agent system is a computerized system composed of multiple interacting intelligent agents within an environment.

- Evolutionary art uses techniques and methods from artificial life to create new forms of art.

- Evolutionary music uses similar techniques, but applied to music instead of visual art.

- Research on abiogenesis and the origin of life sometimes employs alife methodologies as well.
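The evolutionary-algorithm item in the list above notes that fitness is defined explicitly by an agent's ability to solve a problem. The sketch below shows what that can look like in a very small evolutionary algorithm. The toy problem (maximize the number of 1-bits in a genome), the function names `fitness`, `mutate`, and `evolve`, and all parameter values are assumptions chosen for illustration, not a method described in this article.

```python
import random


def fitness(genome):
    """Explicit problem-solving fitness: the number of 1-bits in the genome."""
    return sum(genome)


def mutate(genome, rate, rng):
    """Return a copy of the genome with each bit flipped with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(pop_size=20, length=16, generations=40, rate=0.05, seed=0):
    """Run a simple mutation-only evolutionary loop with truncation selection."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [
        [rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)
    ]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        # Keep the fitter half unchanged and refill with mutated copies of it.
        parents = population[: pop_size // 2]
        children = [
            mutate(rng.choice(parents), rate, rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    population.sort(key=fitness, reverse=True)
    best_per_generation.append(fitness(population[0]))
    return population[0], best_per_generation


best, history = evolve()
```

Selection here depends only on the externally defined `fitness` function. In an alife simulation, by contrast, reproduction would typically depend on what happens to the agent in its environment, such as whether it finds food or avoids death. Because the fitter half of the population is carried over unchanged, the best fitness in `history` never decreases from one generation to the next.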