Productivity in general is a ratio of output to input in the production of goods and services. Productivity is increased by lowering the amount of labor, capital, energy or materials that go into producing any given amount of economic goods and services. Increases in productivity are largely responsible for the increase in per capita living standards.
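As a minimal illustration of the ratio definition, the Python sketch below assumes a single input measured in labor hours. The numbers are hypothetical and are not drawn from any historical source.

```python
def productivity(output: float, inputs: float) -> float:
    """Productivity as a simple ratio of output to input."""
    if inputs <= 0:
        raise ValueError("input must be positive")
    return output / inputs

# Hypothetical illustration: 100 units of output from 50 hours of labor.
before = productivity(100, 50)   # 2.0 units per hour
# The same output produced with less labor raises productivity.
after = productivity(100, 40)    # 2.5 units per hour
print(f"productivity gain: {after / before - 1:.0%}")
```

Producing the same output with 40 instead of 50 hours yields a 25% productivity gain, which is the sense in which lowering inputs increases productivity.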

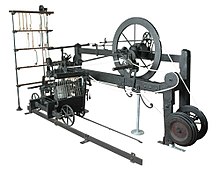

The spinning jenny and spinning mule (shown) greatly increased the productivity of thread manufacturing compared to the spinning wheel.

History

Productivity-improving technologies date back to antiquity, with rather slow progress until the late Middle Ages. Important examples of early to medieval European technology include the water wheel, the horse collar, the spinning wheel, the three-field system (after 1500, the four-field system) and the blast furnace. All of these technologies had been in use in China, some for centuries, before being introduced to Europe.

Technological progress was aided by literacy and the diffusion of knowledge, which accelerated after the spinning wheel spread to Western Europe in the 13th century. The spinning wheel increased the supply of rags used for pulp in papermaking, a technology that reached Sicily sometime in the 12th century. Cheap paper was a factor in the development of the movable type printing press, which led to a large increase in the number of books and titles published. Books on science and technology eventually began to appear, such as the mining technical manual De Re Metallica, which was the most important technology book of the 16th century and remained the standard chemistry text for the next 180 years.

Francis Bacon (1561–1626) is known for his advocacy of the scientific method, a key factor in the scientific revolution. Bacon stated that the technologies that distinguished the Europe of his day from the Middle Ages were paper and printing, gunpowder and the magnetic compass, known collectively as the four great inventions. All four were of Chinese origin. Other Chinese inventions included the horse collar, cast iron, an improved plow and the seed drill.

Mining and metal refining technologies played a key role in technological progress. Much of our understanding of fundamental chemistry evolved from ore smelting and refining, with De Re Metallica being the leading chemistry text for 180 years. Railroads evolved from mine carts, and the first steam engines were designed specifically for pumping water from mines. The significance of the blast furnace goes far beyond its capacity for large-scale production of cast iron. The blast furnace was the first example of continuous production and is a countercurrent exchange process, various types of which are also used today in chemical and petroleum refining. Hot blast, which recycled what would otherwise have been waste heat, was one of engineering's key technologies. It had the immediate effect of dramatically reducing the energy required to produce pig iron, and the reuse of heat was eventually applied to a variety of industries, particularly steam boilers, chemicals, petroleum refining and pulp and paper.

Before the 17th century, scientific knowledge tended to stay within the intellectual community, but by the 17th century it had become accessible to the public in what is called "open science". Near the beginning of the Industrial Revolution came the publication of the Encyclopédie, written by numerous contributors and edited by Denis Diderot and Jean le Rond d'Alembert (1751–72). It contained many articles on science and was the first general encyclopedia to provide in-depth coverage of the mechanical arts, but it is far better known for its presentation of Enlightenment thought.

Economic historians generally agree that, with certain exceptions such as the steam engine, there is no strong linkage between the 17th-century scientific revolution (Descartes, Newton, etc.) and the Industrial Revolution. However, scientific societies, such as the Royal Society of London for Improving Natural Knowledge (better known as the Royal Society) and the Académie des Sciences, were an important mechanism for the transfer of technical knowledge. There were also technical colleges, such as the École Polytechnique. Scotland was the first place where science was taught (in the 18th century). It was there that Joseph Black discovered heat capacity and latent heat, and that his friend James Watt used knowledge of heat to conceive the separate condenser as a means of improving the efficiency of the steam engine.

The British Agricultural Revolution of the 18th century was probably the first period in history in which economic progress was observable within a single generation. However, technological and economic progress did not proceed at a significant rate until the English Industrial Revolution in the late 18th century, and even then productivity grew only about 0.5% annually. High productivity growth began in the late 19th century, in what is sometimes called the Second Industrial Revolution. Most of its major innovations were based on the modern scientific understanding of chemistry, electromagnetic theory and thermodynamics, and on other principles known to the engineering profession.
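To put the 0.5% figure in perspective, the compound-growth arithmetic below estimates how long productivity takes to double at that rate and how much it rises over one generation. The 25-year generation length is an assumption for illustration, not a figure from the text.

```python
import math

def doubling_time(annual_rate: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)

def growth_over(years: float, annual_rate: float) -> float:
    """Cumulative growth factor after `years` of compounding."""
    return (1 + annual_rate) ** years

rate = 0.005  # about 0.5% per year, the Industrial Revolution figure cited above
print(f"doubling time: {doubling_time(rate):.0f} years")  # about 139
# Assumption: a generation is taken to be 25 years.
print(f"gain over a 25-year generation: {growth_over(25, rate) - 1:.1%}")  # about 13.3%
```

At that pace, productivity would take well over a century to double, and a single generation would see a gain of only about 13%.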

Major sources of productivity growth in economic history

1900s photograph of barge pullers on the Volga River. Barges were pushed with poles or pulled by hand using overhanging tree branches. Horses were also used.

New forms of energy and power

Before the Industrial Revolution, the only sources of power were water, wind and muscle. Most good water power sites in Europe (those not requiring massive modern dams) were developed during the medieval period. In the 1750s John Smeaton, the "father of civil engineering," significantly improved the efficiency of the water wheel by applying scientific principles, thereby adding badly needed power for the Industrial Revolution. However, water wheels remained costly, relatively inefficient and not well suited to very large power dams. Benoît Fourneyron's highly efficient turbine, developed in the late 1820s, eventually replaced water wheels. Fourneyron-type turbines can operate at 95% efficiency and are used in today's large hydropower installations. Because of its abundant sites, the United States relied on hydropower as its leading source of industrial power until after the mid-19th century, but steam power had overtaken water power in the UK decades earlier.

In 1711 a Newcomen steam engine was installed for pumping water from a mine, a job typically done by large teams of horses; some mines used as many as 500. Animals convert feed to work at an efficiency of about 5%, much more than the less than 1% efficiency of the early Newcomen engine, but coal mines had low-quality coal with little market value available to fuel the engines. Fossil fuel energy first exceeded all animal and water power in 1870. The role of energy and machines in replacing physical work is discussed in Ayres-Warr (2004, 2009).

While steamboats were used in some areas, as recently as the late 19th century thousands of workers still pulled barges. Until the late 19th century most coal and other minerals were mined with picks and shovels, and crops were harvested and grain threshed by hand or with animal power. Heavy loads such as 382-pound bales of cotton were handled on hand trucks until the early 20th century.

A young "drawer" pulling a coal tub along a mine gallery. Minecarts were more common than the skid shown. Railroads descended from minecarts. In Britain, laws passed in 1842 and 1844 improved working conditions in mines.

Excavation was done with shovels until the late 19th century, when steam shovels came into use. It was reported that a laborer on the western division of the Erie Canal was expected to dig 5 cubic yards per day in 1860; by 1890, however, only 3½ cubic yards per day were expected. Today's large electric shovels have buckets that can hold 168 cubic meters and consume as much power as a city of 100,000.

Dynamite, a safe-to-handle blend of nitroglycerin and diatomaceous earth, was patented by Alfred Nobel in 1867. Dynamite increased the productivity of mining, tunneling, road building, construction and demolition, and made projects such as the Panama Canal possible.

Steam power was applied to threshing machines in the late 19th century. Steam engines that moved on wheels under their own power, called road engines, supplied temporary power to stationary farm equipment such as threshing machines; seeing one as a boy inspired Henry Ford to build an automobile. Steam tractors were used but never became popular.

With internal combustion came the first mass-produced tractors (Fordson, c. 1917). Tractors replaced horses and mules for pulling reapers and combine harvesters, although self-powered combines were developed in the 1930s. Output per man-hour in growing wheat rose by a factor of about 10 from the end of World War II until about 1985, largely because of powered machinery but also because of increased crop yields. Labor productivity in corn production rose in a similar way, but by even more.

One of the greatest periods of productivity growth coincided with the electrification of factories, which took place in the U.S. between 1900 and 1930.

Energy efficiency

In engineering and economic history, the most important types of energy efficiency were in the conversion of heat to work, the reuse of heat and the reduction of friction. There was also a dramatic reduction in the energy required to transmit electronic signals, both voice and data.

Conversion of heat to work

The early Newcomen steam engine was about 0.5% efficient and was improved to slightly over 1% by John Smeaton before Watt's improvements, which increased thermal efficiency to 2%. In 1900 it took 7 lb of coal to generate one kilowatt-hour.

Electrical generation was the sector with the highest productivity growth in the U.S. in the early 20th century. After the turn of the century, large central stations with high-pressure boilers and efficient steam turbines replaced reciprocating steam engines, and by 1960 it took 0.9 lb of coal per kilowatt-hour. Counting the improvements in mining and transportation, the total improvement was by a factor greater than 10. Today's steam turbines have efficiencies in the 40% range. Most electricity today is produced by thermal power stations using steam turbines.
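The coal figures above can be checked with a short calculation. The ratio of 7 lb to 0.9 lb per kilowatt-hour uses only the numbers in the text; the implied thermal efficiencies additionally assume a coal heating value of 12,000 Btu/lb, a rough typical figure for bituminous coal that the source does not give, so they are only indicative.

```python
BTU_PER_KWH = 3412.14  # energy content of one kilowatt-hour, in Btu

def coal_rate_ratio(old_lb_per_kwh: float, new_lb_per_kwh: float) -> float:
    """How many times less coal is needed per kWh."""
    return old_lb_per_kwh / new_lb_per_kwh

def implied_efficiency(lb_per_kwh: float, btu_per_lb: float) -> float:
    """Thermal efficiency implied by a coal rate, given an assumed coal heating value."""
    return BTU_PER_KWH / (lb_per_kwh * btu_per_lb)

ASSUMED_BTU_PER_LB = 12_000  # assumption: typical bituminous coal, not from the text

print(f"coal per kWh fell by a factor of {coal_rate_ratio(7, 0.9):.1f}")  # 7.8
for year, rate in [(1900, 7), (1960, 0.9)]:
    eff = implied_efficiency(rate, ASSUMED_BTU_PER_LB)
    print(f"{year}: about {eff:.0%} efficient")
```

Under that assumption, the 1900 rate corresponds to roughly 4% efficiency and the 1960 rate to roughly 32%, consistent in magnitude with the turbine efficiencies mentioned above.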

The Newcomen and Watt engines operated near atmospheric pressure and used atmospheric pressure, acting against a vacuum created by condensing steam, to do work. Higher-pressure engines were light enough and efficient enough to power ships and locomotives. Multiple-expansion (multi-stage) engines, developed in the 1870s, were the first efficient enough to allow ships to carry more freight than coal, leading to great increases in international trade.

The first important diesel ship was the MS Selandia, launched in 1912. By 1950 one-third of merchant shipping was diesel powered. Today the most efficient prime mover is the two-stroke marine diesel engine, developed in the 1920s, which now ranges in size to over 100,000 horsepower with a thermal efficiency of 50%.

Steam locomotives, which used up to 20% of U.S. coal production, were replaced by diesel locomotives after World War II, saving a great deal of energy and reducing the manpower needed for handling coal and boiler water and for mechanical maintenance.

Improvements in steam engine efficiency caused a large increase in the number of steam engines and the amount of coal used, as noted by William Stanley Jevons in The Coal Question. This is called the Jevons paradox.
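One common way to see how the Jevons paradox can arise is a constant-elasticity demand model, sketched below; the model and its parameter values are illustrative assumptions, not Jevons's own analysis. If a unit of useful work costs fuel_price / efficiency and demand for work has price elasticity ε, fuel use scales as efficiency^(ε − 1), so an efficiency gain raises total fuel use whenever ε > 1.

```python
def fuel_use(efficiency: float, elasticity: float,
             fuel_price: float = 1.0, scale: float = 1.0) -> float:
    """Fuel burned when demand for useful work has constant price elasticity.

    Assumed model: the cost of one unit of work is fuel_price / efficiency,
    demand for work is scale * cost ** (-elasticity), and fuel burned is
    work / efficiency.
    """
    cost_of_work = fuel_price / efficiency
    work_demanded = scale * cost_of_work ** (-elasticity)
    return work_demanded / efficiency

# Doubling engine efficiency (e.g. 1% -> 2%) under two hypothetical elasticities.
for elasticity in (0.5, 1.5):
    ratio = fuel_use(0.02, elasticity) / fuel_use(0.01, elasticity)
    print(f"elasticity {elasticity}: fuel use changes by a factor of {ratio:.2f}")
```

In this sketch, with an elasticity of 0.5 doubling efficiency cuts fuel use to about 71% of its former level, while with an elasticity of 1.5 fuel use rises by about 41%, which is the pattern Jevons observed for coal.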

Electrification and the pre-electric transmission of power

Electricity consumption and economic growth are strongly correlated; per capita electricity consumption correlates almost perfectly with economic development. Electrification was the first technology to enable long-distance transmission of power with minimal losses. Electric motors did away with line shafts for distributing power and dramatically increased the productivity of factories. Very large central power stations created economies of scale and were much more efficient at producing power than reciprocating steam engines. Electric motors also greatly reduced the capital cost of power compared with steam engines.

The main forms of pre-electric power transmission were line shafts, hydraulic power networks, and pneumatic and wire rope systems. Line shafts were the common form of power transmission in factories from the earliest industrial steam engines until factory electrification; they limited factory layout and suffered from high power losses. Hydraulic power came into use in the mid-19th century. It was used extensively in the Bessemer process and for cranes at ports, especially in the UK. London and a few other cities had hydraulic utilities that supplied pressurized water for industrial use over a wide area.

Pneumatic power began to be used in industry, mining and tunneling in the last quarter of the 19th century. Common applications included rock drills and jackhammers. Wire ropes supported by large grooved wheels could transmit power with low losses over distances of a few miles or kilometers. Wire rope systems appeared shortly before electrification.

Reuse of heat

Heat recovery for industrial processes was first widely used in 1828, as hot blast in blast furnaces making pig iron. Later examples of heat reuse include the Siemens-Martin process, which was first used for making glass and later for steel in the open hearth furnace (see Iron and steel below). Today heat is reused in many basic industries, such as chemicals, oil refining and pulp and paper, by a variety of methods such as heat exchangers. Multiple-effect evaporators use vapor from a high-temperature effect to evaporate a fluid boiling at a lower temperature. In the recovery of kraft pulping chemicals, the spent black liquor can be evaporated five or six times by reusing the vapor from one effect to boil the liquor in the preceding effect. Cogeneration uses high-pressure steam to generate electricity and then uses the resulting low-pressure steam for process or building heat.

Industrial processes have also undergone numerous minor improvements, which collectively made significant reductions in energy consumption per unit of production.

Reducing friction

Reducing friction was one of the major reasons for the success of railroads compared with wagons. This was demonstrated on an iron-plate-covered wooden tramway at Croydon, U.K., in 1805:

“A good horse on an ordinary turnpike road can draw two thousand pounds, or one ton. A party of gentlemen were invited to witness the experiment, that the superiority of the new road might be established by ocular demonstration. Twelve wagons were loaded with stones, till each wagon weighed three tons, and the wagons were fastened together. A horse was then attached, which drew the wagons with ease, six miles in two hours, having stopped four times, in order to show he had the power of starting, as well as drawing his great load.”

Better lubrication, such as from petroleum oils, reduced friction losses in mills and factories. Anti-friction bearings were developed using the alloy steels and precision machining techniques available in the last quarter of the 19th century, and they were widely used on bicycles by the 1880s. Such bearings began to be used on line shafts in the decades before factory electrification; it was the earlier shafts without anti-friction bearings that were largely responsible for the high power losses of line shaft systems, which were commonly 25 to 30% and often as much as 50%.
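A quick check of the figures in the Croydon account and the line-shaft loss range, using only numbers stated in the paragraph. Note that the tramway figure is gross weight, since each loaded wagon weighed three tons; the function names are illustrative.

```python
def tramway_load_ratio(wagons: int, tons_per_wagon: float, road_load_tons: float) -> float:
    """Load one horse drew on the tramway relative to an ordinary turnpike road."""
    return wagons * tons_per_wagon / road_load_tons

def delivered_fraction(loss_fraction: float) -> float:
    """Share of engine output reaching the machines after line-shaft losses."""
    return 1.0 - loss_fraction

# Croydon, 1805: 12 wagons of 3 tons each vs. 1 ton on an ordinary road.
print(f"tramway load: {tramway_load_ratio(12, 3, 1):.0f}x the road load")
# Line-shaft losses of 25%, 30% and 50% quoted in the text.
for loss in (0.25, 0.30, 0.50):
    print(f"{loss:.0%} loss -> {delivered_fraction(loss):.0%} of engine power delivered")
```

The same horse moved 36 times the load on the tramway, while a factory losing half its power in shafting delivered only half of what its engine produced.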

Lighting efficiency

Electric lights were far more efficient than oil or gas lighting and produced no smoke or fumes and less heat. Electric light extended the working day, making factories, businesses and homes more productive, and, unlike oil and gas lighting, it was not a serious fire hazard.

The efficiency of electric lights has continuously improved, from the first incandescent lamps to tungsten filament lamps. The fluorescent lamp, which became commercial in the late 1930s, is much more efficient than incandescent lighting. Light-emitting diodes (LEDs) are highly efficient and long lasting.

Infrastructures

The relative energy required to transport one tonne-km by various modes of transport is: pipelines 1 (basis), water 2, rail 3, road 10, air 100.

Roads

Travel on unimproved roads was extremely slow, costly and dangerous. In the 18th century layered gravel began to be used increasingly, with the three-layer Macadam coming into use in the early 19th century. These roads were crowned to shed water and had drainage ditches along the sides. The top layer of stones eventually crushed to fines and smoothed the surface somewhat. The lower layers were of small stones that allowed good drainage. Importantly, these roads offered less resistance to wagon wheels, and hooves and feet did not sink into the mud. Plank roads also came into use in the U.S. in the 1810s–1820s. Improved roads were costly, and although they cut the cost of land transportation in half or more, they were soon overtaken by railroads as the major transportation infrastructure.

Ocean shipping and inland waterways

Sailing ships could transport goods over 3,000 miles for the cost of 30 miles by wagon. A horse that could pull a one-ton wagon could pull a 30-ton barge. During the English, or First, Industrial Revolution, supplying coal to the furnaces at Manchester was difficult because there were few roads and wagon transport was costly. Canal barges, however, were known to be workable, as was demonstrated by the Bridgewater Canal, which opened in 1761 and brought coal from Worsley to Manchester. The Bridgewater Canal's success started a frenzy of canal building that lasted until the appearance of railroads in the 1830s.

Railroads

Railroads greatly reduced the cost of overland transportation. It is estimated that by 1890 wagon freight cost 24.5 U.S. cents per ton-mile, versus 0.875 cents per ton-mile by railroad, a decline of 96%.

Electric street railways (trams, trolleys or streetcars) were the final phase of railroad building, from the late 1890s through the first two decades of the 20th century. Street railways were soon displaced by motor buses and automobiles after 1920.
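The 96% decline follows directly from the two 1890 freight rates; the short sketch below reproduces it and expresses the same gap as a cost multiple.

```python
def percent_decline(old: float, new: float) -> float:
    """Percentage fall from `old` to `new`."""
    return (old - new) / old * 100

wagon = 24.5    # U.S. cents per ton-mile by wagon, c. 1890
rail = 0.875    # U.S. cents per ton-mile by railroad, c. 1890
print(f"decline: {percent_decline(wagon, rail):.1f}%")           # 96.4%
print(f"wagon freight cost {wagon / rail:.0f} times as much")    # 28
```

Put the other way round, moving a ton a mile by wagon cost 28 times as much as moving it by rail.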

Motorways

Highways with internal-combustion-powered vehicles completed the mechanization of overland transportation. When trucks appeared c. 1920, the cost of transporting farm goods to market or to rail stations was greatly reduced. Motorized highway transport also reduced inventories.

The high productivity growth in the U.S. during the 1930s was in large part due to the highway building program of that decade.

Pipelines

Pipelines are the most energy-efficient means of transportation. Iron and steel pipelines came into use during the latter part of the 19th century, but only became a major infrastructure during the 20th century. Centrifugal pumps and centrifugal compressors are efficient means of pumping liquids and natural gas.

Mechanization

Adriance reaper, late 19th century

Threshing machine from 1881. Steam engines were also used instead of horses. Today both threshing and reaping are done with a combine harvester.

Mechanized agriculture

The seed drill is a mechanical device for spacing seeds and planting them at the appropriate depth. It originated in ancient China before the 1st century BC. Saving seed was extremely important at a time when yields were measured in seeds harvested per seed planted, typically between 3 and 5. The seed drill also saved planting labor. Most importantly, it meant crops were grown in rows, which reduced competition among plants and increased yields. It was reinvented in 16th-century Europe based on verbal descriptions and crude drawings brought back from China. Jethro Tull patented a version in 1700; however, it was expensive and unreliable. Reliable seed drills appeared in the mid-19th century.

Since the beginning of agriculture, threshing had been done by hand with a flail, requiring a great deal of labor. The threshing machine (ca. 1794) simplified the operation and allowed it to use animal power. By the 1860s threshing machines were widely introduced, and they ultimately displaced as much as a quarter of agricultural labor. In Europe, many of the displaced workers were driven to the brink of starvation.

Harvesting oats in a Claas Lexion 570 combine with enclosed, air-conditioned cab with rotary thresher and laser-guided hydraulic steering

Before c. 1790 a worker could harvest 1/4 acre per day with a scythe. In the early 1800s the grain cradle was introduced, significantly increasing the productivity of hand labor. It was estimated that each of Cyrus McCormick's horse-drawn reapers (patented 1834) freed up five men for military service in the U.S. Civil War. By 1890 two men and two horses could cut, rake and bind 20 acres of wheat per day. In the 1880s the reaper and threshing machine were combined into the combine harvester; these machines required large teams of horses or mules to pull them. Over the entire 19th century, output per man-hour rose by about 500% for wheat production and about 250% for corn.

Farm machinery and higher crop yields reduced the labor to produce 100 bushels of corn from 35 to 40 hours in 1900 to 2 hours 45 minutes in 1999. The conversion of agricultural mechanization to internal combustion power began after 1915. The horse population began to decline in the 1920s after the conversion of agriculture and transportation to internal combustion. In addition to saving labor, this freed up much land previously used for supporting draft animals.
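The labor figures in the two paragraphs above translate into simple productivity ratios. This sketch counts human labor only: the 1890 harvesting figure also used two horses, whose input is ignored here, and the 1900 corn figure is given as a 35 to 40 hour range, so both ends are shown.

```python
def labor_ratio(old_hours: float, new_hours: float) -> float:
    """How many times less labor the newer method needs for the same output."""
    return old_hours / new_hours

# Corn: hours of labor per 100 bushels.
new_corn = 2 + 45 / 60  # 2 hours 45 minutes in 1999
for old_corn in (35, 40):  # 1900 range
    print(f"corn, {old_corn} h in 1900: {labor_ratio(old_corn, new_corn):.1f}x less labor")

# Grain harvesting: acres cut per worker per day (horses not counted).
scythe_acres = 0.25      # one worker with a scythe, before c. 1790
machine_acres = 20 / 2   # two men (with two horses) in 1890
print(f"harvesting: {machine_acres / scythe_acres:.0f}x more acres per worker-day")
```

On these numbers, corn required roughly 13 to 15 times less labor per 100 bushels in 1999 than in 1900, and a harvest worker in 1890 covered about 40 times the acreage of a worker with a scythe.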

Tractor sales in the U.S. peaked in the 1950s, a decade that also saw a large surge in the horsepower of farm machinery.

Industrial machinery

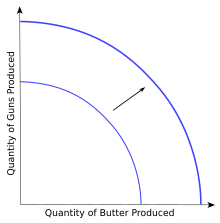

The most important mechanical devices before the Industrial Revolution were water mills and windmills. Water wheels date to Roman times and windmills somewhat later. Water and wind power were first used for grinding grain into flour, but were later adapted to power trip hammers for pounding rags into pulp for making paper and for crushing ore. Just before the Industrial Revolution, water power was applied to bellows for iron smelting in Europe. (Water-powered blast bellows were used in ancient China.) Wind and water power were also used in sawmills. The technology of building mills and mechanical clocks was important to the development of the machines of the Industrial Revolution.

The spinning wheel was a medieval invention that increased thread-making productivity by a factor greater than ten. One of the early developments that preceded the Industrial Revolution was the stocking frame (c. 1589), a knitting machine. Later in the Industrial Revolution came the flying shuttle, a simple device that doubled the productivity of weaving. Spinning thread had been a limiting factor in cloth making, requiring 10 spinners using spinning wheels to supply one weaver. With the spinning jenny, a spinner could spin eight threads at once. The water frame (patented 1768) adapted water power to spinning, but it could only spin one thread at a time. The water frame was easy to operate, and many could be located in a single building. The spinning mule (1779) allowed a large number of threads to be spun by a single machine using water power. A shift in consumer preference toward cotton, at a time of increased cloth production, resulted in the invention of the cotton gin (patented 1794). Steam power was eventually used as a supplement to water power during the Industrial Revolution, and both were used until electrification. A graph of the productivity of spinning technologies can be found in Ayres (1989), along with much other data related to this article.

With a cotton gin (1792), one man could remove in a day the seed from as much upland cotton as a woman using a roller gin, at one pound per day, would previously have taken two months to process.

An early example of a large productivity increase by special purpose machines is the c. 1803 Portsmouth Block Mills. With these machines 10 men could produce as many blocks as 110 skilled craftsmen.

In the 1830s several technologies came together to allow an important shift in wooden building construction. The circular saw (1777), cut nail machines (1794), and steam engine allowed slender pieces of lumber such as 2"x4"s to be efficiently produced and then nailed together in what became known as balloon framing (1832). This was the beginning of the decline of the ancient method of timber frame construction with wooden joinery.

Following mechanization in the textile industry was mechanization of the shoe industry.

The sewing machine, invented and improved during the early 19th century and produced in large numbers by the 1870s, increased productivity by more than 500%. The sewing machine was an important productivity tool for mechanized shoe production.

With the widespread availability of machine tools, improved steam engines and inexpensive transportation provided by railroads, the machinery industry became the largest sector (by value added) of the U.S. economy by the last quarter of the 19th century, leading to an industrial economy.

The first commercially successful glass bottle blowing machine was introduced in 1905. The machine, operated by a two-man crew working 12-hour shifts, could produce 17,280 bottles in 24 hours, compared with 2,880 bottles made in a day by a crew of six men and boys working in a shop. The cost of making bottles by machine was 10 to 12 cents per gross, compared with $1.80 per gross by manual glassblowers and helpers.
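The bottle-machine comparison can be restated as an output ratio and a percentage cost saving. The sketch compares output per 24-hour machine day with output per manual shop day, as the text does, rather than output per worker, because the number of crews per machine day is not stated; a gross is 144 bottles.

```python
GROSS = 144  # bottles in a gross

machine_per_day = 17_280   # one machine, 24 hours
manual_per_day = 2_880     # shop crew of six men and boys, one day
print(f"output ratio: {machine_per_day / manual_per_day:.0f}x")      # 6x
print(f"machine output: {machine_per_day // GROSS} gross per day")  # 120

manual_cost = 180  # cents per gross ($1.80)
for machine_cost in (10, 12):  # cents per gross
    saving = 1 - machine_cost / manual_cost
    print(f"{machine_cost} cents/gross: {saving:.1%} cheaper")
```

The machine produced six times the daily output of a manual shop, at a cost per gross roughly 93 to 94% lower.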

Machine tools

Machine tools, which cut, grind and shape metal parts, were another important mechanical innovation of the Industrial Revolution. Before machine tools, it was prohibitively expensive to make precision parts, an essential requirement for many machines and for interchangeable parts. Historically important machine tools are the screw-cutting lathe, the milling machine and the metal planer, which all came into use between 1800 and 1840. However, around 1900, it was the combination of small electric motors, specialty steels and new cutting and grinding materials that allowed machine tools to mass-produce steel parts. Production of the Ford Model T required 32,000 machine tools.

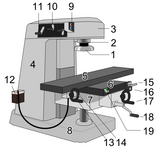

Vertical milling machine, an important machine tool. 1: milling cutter 2: spindle 3: top slide or overarm 4: column 5: table 6: Y-axis slide 7: knee 8: base

Modern manufacturing began around 1900 when machines, aided by electric, hydraulic and pneumatic power, began to replace hand methods in industry. An early example is the Owens automatic glass bottle blowing machine, which reduced labor in making bottles by over 80%.

Mining

Large mining machines, such as steam shovels, appeared in the mid-19th century but were restricted to rails until the widespread introduction of continuous track and pneumatic tires in the late 19th and early 20th centuries. Until then, much mining work was done with pneumatic drills, jackhammers, picks and shovels.

Coal seam undercutting machines appeared around 1890 and were used for 75% of coal production by 1934. Coal loading was still being done manually with shovels around 1930, but mechanical pick-up and loading machines were coming into use. The coal boring machine improved the productivity of underground coal mining by a factor of three between 1949 and 1969.

A transition is currently under way from labor-intensive mining methods to greater mechanization and even automated mining.

Mechanized materials handling

Bulk materials handling

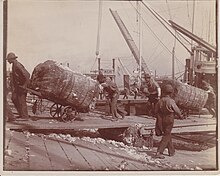

P & H 4100 XPB cable loading shovel, a type of mobile crane

Unloading cotton c. 1900.

Hydraulic cranes were in use in the U.K. for loading ships by the 1840s but were little used in the U.S. Steam-powered conveyors and cranes were used in the U.S. by the 1880s.

In the early 20th century, electrically operated cranes and motorized mobile loaders such as forklifts were used. Today non-bulk freight is containerized.

A U.S. airman operating a forklift. Pallets placed in the rear of a truck are moved around inside with a pallet jack (below). Where available, pallets are loaded at loading docks, which allow forklifts to drive on board.

Dry bulk materials handling systems use a variety of stationary equipment such as conveyors, stackers, reclaimers and mobile equipment such as power shovels and loaders to handle high volumes of ores, coal, grains, sand, gravel, crushed stone, etc. Bulk materials handling systems are used at mines, for loading and unloading ships and at factories that process bulk materials into finished goods, such as steel and paper mills.

The handle on this pallet jack is the lever for a hydraulic jack, which can easily lift loads of up to 2½ tonnes, depending on its rating. Pallet jacks are commonly used in warehouses and retail stores.

Mechanical stokers for feeding coal to locomotives were in use in the 1920s. A completely mechanized and automated coal handling and stoking system was first used to feed pulverized coal to an electric utility boiler in 1921.

Liquids and gases are handled with centrifugal pumps and compressors, respectively.

Conversion to powered materials handling increased during World War I, as shortages of unskilled labor developed and unskilled wages rose relative to those of skilled labor.

A noteworthy use of conveyors was Oliver Evans's automatic flour mill built in 1785.

Around 1900 various types of conveyors (belt, slat, bucket, screw or auger), overhead cranes and industrial trucks began being used for handling materials and goods in various stages of production in factories.

A well-known application of conveyors is Ford Motor Co.'s assembly line (c. 1913), although Ford also used various industrial trucks, overhead cranes, slides and whatever other devices were necessary to minimize the labor of handling parts throughout the factory.

Cranes

Cranes are an ancient technology, but they became widespread following the Industrial Revolution. Industrial cranes were used to handle heavy machinery at Nasmyth, Gaskell and Company (the Bridgewater Foundry) in the late 1830s. Hydraulic cranes became widely used in the late 19th century, especially at British ports. Some cities, such as London, had public utility hydraulic service networks to power them. Steam cranes were also used in the late 19th century. Electric cranes, especially the overhead type, were introduced in factories at the end of the 19th century. Steam cranes were usually restricted to rails. Continuous track (caterpillar tread) was developed in the late 19th century.

The important categories of cranes are:

- Overhead cranes (bridge cranes) travel on rails and have trolleys that move the hoist to any position within the crane frame. They are widely used in factories.

- Mobile cranes are usually gasoline or diesel powered and travel on wheels (on or off road), on rails or on continuous track. They are widely used in construction, mining, excavation and bulk materials handling.

- Fixed cranes stay in a fixed position but can usually rotate a full circle. The most familiar example is the tower crane used to erect tall buildings.

Palletization

Handling goods on pallets was a significant improvement over using hand trucks or carrying sacks or boxes by hand, and it greatly sped up the loading and unloading of trucks, rail cars and ships. Pallets can be handled with pallet jacks or forklift trucks, which came into industrial use in the 1930s and became widespread by the 1950s. Loading docks built to architectural standards allow trucks or rail cars to be loaded and unloaded at the same elevation as the warehouse floor.

Piggyback rail

Piggyback is the transport of trailers or entire trucks on rail cars, which is a more fuel-efficient means of shipping and saves loading, unloading and sorting labor. Wagons had been carried on rail cars in the 19th century, with the horses in separate cars. Trailers began to be carried on rail cars in the U.S. in 1956. Piggyback accounted for 1% of freight in 1958, rising to 15% in 1986.

Containerization

Loading or unloading break bulk cargo on and off ships typically took several days. It was strenuous and somewhat dangerous work. Losses from damage and theft were high. The work was erratic, and most longshoremen had a lot of unpaid idle time. Sorting and keeping track of break bulk cargo was also time-consuming, and holding it in warehouses tied up capital. Old-style ports with warehouses were congested, and many lacked efficient transportation infrastructure, adding to costs and delays in port.

By handling freight in standardized containers on compartmentalized ships, loading or unloading could typically be accomplished in one day. Container ships can also be loaded more fully than break bulk ships because containers can be stacked several high, doubling the freight capacity for a given size of ship.

Loading and unloading labor for containers is a fraction of break bulk, and damage and theft are much lower. Also, many items shipped in containers require less packaging.

Containerization with small boxes was used in both world wars, particularly WW II, but became commercial in the late 1950s. Containerization left large numbers of warehouses at wharves in port cities vacant, freeing up land for other development. See also: Intermodal freight transport

Work practices and processes

Division of labor

Before the factory system, much production, such as spinning and weaving, took place in the household and was for household consumption. This was partly due to the lack of transportation infrastructure, especially in America.

Division of labor was practiced in antiquity but became increasingly specialized during the Industrial Revolution, so that instead of a shoemaker cutting out leather as part of the operation of making a shoe, a worker would do nothing but cut out leather. In Adam Smith's famous example of a pin factory, workers each doing a single task were far more productive than a craftsman making an entire pin.

Starting before and continuing into the Industrial Revolution, much work was subcontracted under the putting-out system (also called the domestic system), whereby work was done at home. Putting-out work included spinning, weaving, leather cutting and, less commonly, specialty items such as firearms parts. Merchant capitalists or master craftsmen typically provided the materials and collected the work pieces, which were made into finished products in a central workshop.

Factory system

During the Industrial Revolution much production took place in workshops, which were typically located in the rear or upper level of the same building where the finished goods were sold. These workshops used tools and sometimes simple machinery, which was usually hand or animal powered. The master craftsman, foreman or merchant capitalist supervised the work and maintained quality. Workshops grew in size but were displaced by the factory system in the early 19th century. Under the factory system, capitalists hired workers, provided the buildings, machinery and supplies, and handled the sale of the finished products.

Interchangeable parts

Changes to traditional work processes, made after analyzing the work and making it more systematic, greatly increased the productivity of labor and capital. This was the changeover from the European system of craftsmanship, in which a craftsman made a whole item, to the American system of manufacturing, which used special-purpose machines and machine tools that made parts precisely enough to be interchangeable. The process took decades to perfect, at great expense, because interchangeable parts were more costly at first. Interchangeable parts were achieved by using fixtures to hold and precisely align parts being machined, jigs to guide the machine tools, and gauges to measure critical dimensions of finished parts.

Scientific management

Other changes to work processes involved minimizing the number of steps in individual tasks, such as bricklaying, by performing time and motion studies to determine the one best method. The system became known as Taylorism, after Frederick Winslow Taylor, its best-known developer, and also as scientific management, after his work The Principles of Scientific Management.

Standardization

Standardization and interchangeability are considered to be among the main reasons for U.S. exceptionality. Standardization was part of the change to interchangeable parts, but it was also facilitated by the railroad industry and mass-produced goods. Railroad track gauge standardization and standards for rail cars allowed railroads to interconnect. Railway time formalized time zones. Industrial standards included screw sizes and threads and, later, electrical standards. Shipping container standards were loosely adopted in the late 1960s and formally adopted ca. 1970. Today there are vast numbers of technical standards. Commercial standards include such things as bed sizes. Architectural standards cover numerous dimensions, including stairs, doors and counter heights, and other aspects of design that make buildings safe and functional and, in some cases, allow a degree of interchangeability.

Rationalized factory layout

Electrification allowed machinery such as machine tools to be placed in a systematic arrangement along the flow of the work. Electrification also provided a practical way to motorize conveyors that transferred parts and assemblies to workers, a key step toward mass production and the assembly line.

Modern business management

Business administration, which includes management practices and accounting systems, is another important form of work practice. As businesses grew in size in the second half of the 19th century, they began to be organized by departments and managed by professional managers rather than run by sole proprietors or partners. Business administration as we know it was developed by railroads, which had to keep track of trains, railcars, equipment, personnel and freight over large territories.

Modern business enterprise (MBE) is the organization and management of businesses, particularly large ones. MBEs employ professionals who use knowledge-based techniques in such areas as engineering, research and development, information technology, business administration, finance and accounting. MBEs typically benefit from economies of scale.

“Before railroad accounting we were moles burrowing in the dark.” Andrew Carnegie

Continuous production

Continuous production is a method by which a process operates without interruption for long periods, perhaps even years. Continuous production began with blast furnaces in ancient times and became popular with mechanized processes following the invention of the Fourdrinier paper machine during the Industrial Revolution, which was the inspiration for continuous rolling. It came into wide use in the chemical and petroleum refining industries in the late nineteenth and early twentieth centuries. It was later applied to direct strip casting of steel and other metals.

Early steam engines did not supply power steadily enough for many continuous applications, ranging from cotton spinning to rolling mills, so those applications were restricted to water power. Advances in steam engines such as the Corliss steam engine and the development of control theory led to more constant engine speeds, which made steam power useful for sensitive tasks such as cotton spinning. AC motors, which run at constant speed even with load variations, were well suited to such processes.

Scientific agriculture

Reducing losses of agricultural products to spoilage, insects and rats contributed greatly to productivity. Much hay stored outdoors was lost to spoilage before indoor storage or some means of covering it became common. Pasteurization of milk allowed it to be shipped by railroad.

Keeping livestock indoors in winter reduces the amount of feed needed. Also, feeding chopped hay and ground grains, particularly corn (maize), was found to improve digestibility. The amount of feed required to produce a kilogram of live-weight chicken fell from 5 kg in 1930 to 2 kg by the late 1990s, and the time required fell from three months to six weeks.

Wheat yields in developing countries, 1950 to 2004 (kg/ha, baseline 500). The steep rise in crop yields in the U.S. began in the 1940s. The percentage of growth was fastest in the early rapid growth stage. In developing countries maize yields are still rapidly rising.

The Green Revolution increased crop yields by a factor of 3 for soybeans and by a factor of 4 to 5 for corn (maize), wheat, rice and some other crops. Using data for corn (maize) in the U.S., yields increased by about 1.7 bushels per acre per year from the early 1940s until the first decade of the 21st century, when concern was being expressed about reaching the limits of photosynthesis. Because the absolute annual increase has been roughly constant, the annual percentage increase has declined from over 5% in the 1940s to 1% today, so while yields for a while outpaced population growth, yield growth now lags population growth.
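The arithmetic behind the falling percentage can be sketched in Python. Only the 1.7 bushel-per-acre annual increment comes from the paragraph above; the starting yield of 30 bushels per acre in 1940 is a hypothetical round number chosen for illustration, not a figure from the source.

```python
def linear_yields(start: float, increment: float, years: int) -> list:
    """Yield series that rises by the same absolute amount every year."""
    return [start + increment * t for t in range(years + 1)]


def annual_pct_growth(series: list) -> list:
    """Year-over-year change expressed as a percentage of the previous year."""
    return [100.0 * (curr - prev) / prev for prev, curr in zip(series, series[1:])]


# Hypothetical starting point: 30 bu/acre in 1940, +1.7 bu/acre every year to 2010.
yields = linear_yields(start=30.0, increment=1.7, years=70)
growth = annual_pct_growth(yields)
print(f"1940->1941: {growth[0]:.1f}%   2009->2010: {growth[-1]:.1f}%")
```

Because the numerator (the fixed annual gain) never changes while the denominator (the current yield) keeps growing, the percentage rate must fall even though the absolute gain per acre is unchanged.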

High yields would not be possible without significant applications of fertilizer, particularly nitrogen fertilizer, which was made affordable by the Haber-Bosch ammonia process. In many parts of Asia nitrogen fertilizer is applied in amounts at which returns are diminishing, although additional application still gives a slight increase in yield. Crops in Africa are in general starved of NPK (nitrogen, phosphorus and potassium), and much of the world's soil is deficient in zinc, which leads to zinc deficiency in humans.

The greatest period of agricultural productivity growth in the U.S. occurred from World War II until the 1970s.

Land is considered a form of capital, but otherwise has received little attention relative to its importance as a factor of productivity by modern economists, although it was important in classical economics. However, higher crop yields effectively multiplied the amount of land.

New materials, processes and de-materialization

Iron and steel

The process of making cast iron was known in China before the 3rd century AD. Cast iron production reached Europe in the 14th century and Britain around 1500. Cast iron was useful for casting into pots and other implements but was too brittle for making most tools. However, cast iron had a lower melting temperature than wrought iron and was much easier to make with primitive technology. Wrought iron was the material used for making many hardware items, tools and other implements. Before cast iron was made in Europe, wrought iron was made in small batches by the bloomery process, which was never used in China. Wrought iron could be made from cast iron more cheaply than it could be made with a bloomery.

The inexpensive process for making good-quality wrought iron was puddling, which became widespread after 1800. Puddling involved stirring molten cast iron until small globs were sufficiently decarburized to form hot wrought iron, which was then removed and hammered into shapes. Puddling was extremely labor-intensive. It was used until the introduction of the Bessemer and open hearth processes in the mid- and late 19th century, respectively.

Blister steel was made from wrought iron by packing it in charcoal and heating it for several days (see cementation process). The blister steel could be heated and hammered with wrought iron to make shear steel, which was used for cutting edges like scissors, knives and axes. Shear steel was of non-uniform quality, and a better process was needed for producing watch springs, a popular luxury item in the 18th century. The successful process was crucible steel, which was made by melting wrought iron and blister steel in a crucible.

Production of steel and other metals was hampered by the difficulty of producing sufficiently high temperatures for melting. An understanding of thermodynamic principles, such as recapturing heat from flue gas by preheating combustion air (known as hot blast), resulted in much higher energy efficiency and higher temperatures. Preheated combustion air was used in iron production and in the open hearth furnace. In 1780, before the introduction of hot blast in 1829, producing pig iron required seven times its weight in coke. The coke required per short ton of pig iron was 35 hundredweight in 1900, falling to 13 hundredweight in 1950. By 1970 the most efficient blast furnaces used 10 hundredweight of coke per short ton of pig iron.
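The coke figures above mix a weight ratio (1780) with hundredweight per short ton (1900 onward), so a short conversion makes them comparable. This sketch assumes the U.S. (short) hundredweight of 100 lb and the 2,000 lb short ton; the text does not say which hundredweight is meant.

```python
LB_PER_SHORT_TON = 2000.0
LB_PER_HUNDREDWEIGHT = 100.0  # assumption: U.S. short hundredweight


def coke_to_iron_ratio(cwt_per_short_ton: float) -> float:
    """Pounds of coke consumed per pound of pig iron produced."""
    return cwt_per_short_ton * LB_PER_HUNDREDWEIGHT / LB_PER_SHORT_TON


RATIO_1780 = 7.0  # coke weight / pig iron weight in 1780, from the text
for year, cwt in [(1900, 35), (1950, 13), (1970, 10)]:
    ratio = coke_to_iron_ratio(cwt)
    print(f"{year}: {ratio:.2f} lb coke per lb iron "
          f"({RATIO_1780 / ratio:.0f}x less than in 1780)")
```

Under that assumption, the most efficient furnaces of 1970 used about half a pound of coke per pound of iron, roughly a fourteenfold reduction from 1780.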

Steel has much higher strength than wrought iron and allowed long span bridges, high rise buildings, automobiles and other items. Steel also made superior threaded fasteners (screws, nuts, bolts), nails, wire and other hardware items. Steel rails lasted over 10 times longer than wrought iron rails.

The Bessemer and open hearth processes were much more efficient than making steel by puddling because they used the carbon in the pig iron as a source of heat. The Bessemer (patented in 1855) and the Siemens-Martin (c. 1865) processes greatly reduced the cost of steel. By the end of the 19th century, the Gilchrist-Thomas “basic” process had reduced production costs by 90% compared to the puddling process of the mid-century.

Today a variety of alloy steels are available that have superior properties for special applications like automobiles, pipelines and drill bits. High speed or tool steels, whose development began in the late 19th century, allowed machine tools to cut steel at much higher speeds. High speed steel and even harder materials were an essential component of mass production of automobiles.

Some of the most important specialty materials are those used for steam turbine and gas turbine blades, which have to withstand extreme mechanical stress and high temperatures.

The size of blast furnaces grew greatly over the 20th century, and innovations such as additional heat recovery and pulverized coal, which displaced coke, increased energy efficiency.

Bessemer steel became brittle with age because nitrogen was introduced when air was blown in. The Bessemer process was also restricted to certain ores (low phosphate hematite). By the end of the 19th century the Bessemer process was displaced by the open hearth furnace (OHF). After World War II the OHF was displaced by the basic oxygen furnace (BOF), which used oxygen instead of air and required about 35–40 minutes to produce a batch of steel compared to 8 to 9 hours for the OHF. The BOF also was more energy efficient.

By 1913, 80% of steel was being made from molten pig iron directly from the blast furnace, eliminating the step of casting the "pigs" (ingots) and remelting.

The continuous wide strip rolling mill, developed by ARMCO in 1928, was the most important development in the steel industry during the inter-war years. Continuous wide strip rolling started with a thick, coarse ingot. It produced a smoother sheet with more uniform thickness, which was better for stamping and gave a good painted surface. It was well suited to automotive body steel and appliances. It used only a fraction of the labor of the discontinuous process and was safer because it did not require continuous handling. Continuous rolling was made possible by improved sectional speed control.

After 1950, continuous casting contributed to the productivity of converting steel to structural shapes by eliminating the intermittent step of making slabs, billets (square cross-section) or blooms (rectangular), which then usually have to be reheated before rolling into shapes. Thin slab casting, introduced in 1989, reduced labor to less than one hour per ton. Continuous thin slab casting and the BOF were the two most important productivity advancements in 20th-century steel making.

As a result of these innovations, between 1920 and 2000 labor requirements in the steel industry decreased by a factor of 1,000, from more than 3 worker-hours per tonne to just 0.003.

Sodium compounds (carbonate, bicarbonate and hydroxide) are important industrial chemicals used in making products such as glass and soap. Until the invention of the Leblanc process in 1791, sodium carbonate was made, at high cost, from the ashes of seaweed and the plant barilla. The Leblanc process was replaced by the Solvay process beginning in the 1860s. With the widespread availability of inexpensive electricity, much sodium is produced along with chlorine by electrochemical processes.

Cement

Cement is the binder for concrete, which is one of the most widely used construction materials today because of its low cost, versatility and durability. Portland cement, invented in 1824–25, is made by calcining limestone and other naturally occurring minerals in a kiln. A great advance was the perfection of rotary cement kilns in the 1890s, a method still used today. Reinforced concrete, which is suitable for structures, began to be used in the early 20th century.

Paper

Paper was made one sheet at a time by hand until the development of the Fourdrinier paper machine (c. 1801), which made a continuous sheet. Paper making was severely limited by the supply of cotton and linen rags from the time of the invention of the printing press until the development of wood pulp (c. 1850s) in response to a shortage of rags. The sulfite process for making wood pulp started operation in Sweden in 1874. Paper made from sulfite pulp had better strength properties than the previously used ground wood pulp (c. 1840). The kraft (Swedish for "strength") pulping process was commercialized in the 1930s. Pulping chemicals are recovered and internally recycled in the kraft process, also saving energy and reducing pollution. Kraft paperboard is the material from which the outer layers of corrugated boxes are made. Until kraft corrugated boxes were available, packaging consisted of poor-quality paper and paperboard boxes along with wooden boxes and crates. Corrugated boxes require much less labor to manufacture than wooden boxes and offer good protection to their contents. Shipping containers reduce the need for packaging.

Rubber and plastics

Vulcanized rubber made the pneumatic tire possible, which in turn enabled the development of on- and off-road vehicles as we know them. Synthetic rubber became important during the Second World War, when supplies of natural rubber were cut off. Rubber inspired a class of chemicals known as elastomers, some of which are used by themselves or in blends with rubber and other compounds for seals and gaskets, shock-absorbing bumpers and a variety of other applications.

Plastics can be inexpensively made into everyday items and have significantly lowered the cost of a variety of goods including packaging, containers, parts and household piping.

Optical fiber

Optical fiber began to replace copper wire in the telephone network during the 1980s. Optical fibers have a very small diameter, allowing many to be bundled in a cable or conduit. Optical fiber is also an energy-efficient means of transmitting signals.

Oil and gas

Seismic exploration, beginning in the 1920s, uses reflected sound waves to map subsurface geology to help locate potential oil reservoirs. This was a great improvement over previous methods, which relied mostly on luck and a good knowledge of geology, although luck continued to be important in several major discoveries. Rotary drilling was a faster and more efficient way of drilling oil and water wells. It became popular after being used for the initial discovery of the East Texas field in 1930.

Hard materials for cutting

Numerous new hard materials were developed for cutting edges, such as those used in machining. Mushet steel, developed in 1868, was a forerunner of high speed steel, which was developed by a team led by Frederick Winslow Taylor at Bethlehem Steel Company around 1900. High speed steel held its hardness even when it became red hot. It was followed by a number of modern alloys.

From 1935 to 1955, machining cutting speeds increased from 120–200 ft/min to 1000 ft/min due to harder cutting edges, causing machining costs to fall by 75%.

One of the most important new hard materials for cutting is tungsten carbide.

Dematerialization

Dematerialization is the reduction in the use of materials in manufacturing, construction, packaging or other uses. In the U.S. the quantity of raw materials per unit of output has decreased by approximately 60% since 1900. In Japan the reduction has been 40% since 1973.

Dematerialization is made possible by substitution with better materials and by engineering to reduce weight while maintaining function. Modern examples are plastic beverage containers replacing glass and paperboard, plastic shrink wrap used in shipping, and lightweight plastic packing materials. Dematerialization has been occurring in the U.S. steel industry, where consumption peaked in 1973 on both an absolute and a per capita basis. At the same time, per capita steel consumption grew globally through outsourcing. Cumulative global GDP or wealth has grown in direct proportion to energy consumption since 1970, while the Jevons paradox posits that efficiency improvement leads to increased energy consumption. Access to energy globally constrains dematerialization.

Communications

Telegraphy

The telegraph appeared around the beginning of the railroad era, and railroads typically installed telegraph lines along their routes for communicating with trains. Teleprinters appeared in 1910 and had replaced between 80 and 90% of Morse code operators by 1929. It is estimated that one teletypist replaced 15 Morse code operators.

Telephone

The early use of telephones was primarily for business. Monthly service cost about one third of the average worker's earnings. The telephone, along with trucks and the new road networks, allowed businesses to reduce inventory sharply during the 1920s.

Telephone calls were handled by operators using switchboards until the automatic switchboard was introduced in 1892. By 1929, 31.9% of the Bell system was automatic.

Automatic telephone switching originally used electro-mechanical switches controlled by vacuum tube devices, which consumed a large amount of electricity. Call volume eventually grew so fast that it was feared the telephone system would consume all electricity production, prompting Bell Labs to begin research on the transistor.

Radio frequency transmission

After World War II, microwave transmission came into use for long distance telephony and for transmitting television programming to local stations for rebroadcast.

Fiber optics

The diffusion of telephony to households was mature by the arrival of fiber optic communications in the late 1970s. Fiber optics greatly increased the transmission capacity of information over previous copper wires and further lowered the cost of long distance communication.

Communications satellites

Communications satellites came into use in the 1960s and today carry a variety of information including credit card transaction data, radio, television and telephone calls. The Global Positioning System (GPS) operates on signals from satellites.

Facsimile (FAX)

Fax (short for facsimile) machines of various types had existed since the early 1900s but became widespread beginning in the mid-1970s.

Home economics: public water supply, household gas supply and appliances

Before public water was supplied to households, someone in the average household had to haul up to 10,000 gallons of water a year.

Natural gas began to be supplied to households in the late 19th century.

Household appliances followed household electrification in the 1920s, with consumers buying electric ranges, toasters, refrigerators and washing machines. As a result of appliances and convenience foods, time spent on meal preparation and clean up, laundry and cleaning decreased from 58 hours/week in 1900 to 18 hours/week by 1975. Less time spent on housework allowed more women to enter the labor force.

Automation, process control and servomechanisms

Automation means automatic control: a process is run with minimal operator intervention. Levels of automation include mechanical methods, electrical relays, feedback control with a controller, and computer control. Common applications of automation are controlling temperature, flow and pressure. Automatic speed control is important in many industrial applications, especially in sectional drives such as those found in metal rolling and paper drying.

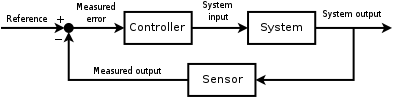

The concept of the feedback loop to control the dynamic behavior of the system: this is negative feedback, because the sensed value is subtracted from the desired value to create the error signal, which is processed by the controller, which provides proper corrective action. A typical example would be to control the opening of a valve to hold a liquid level in a tank. Process control is a widely used form of automation. See also: PID controller

The earliest applications of process control were mechanisms that adjusted the gap between mill stones for grinding grain and kept windmills facing into the wind. The centrifugal governor used for adjusting the mill stones was copied by James Watt for controlling the speed of steam engines in response to changes in heat load to the boiler; however, if the load on the engine changed, the governor only held the speed steady at the new rate. It took much development work to achieve the degree of steadiness necessary to operate textile machinery. A mathematical analysis of control theory was first developed by James Clerk Maxwell. Control theory was developed to its "classical" form by the 1950s.
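The governor's behavior matches what control theory now calls proportional-only control: the corrective action is proportional to the present speed error, so a sustained change in load can only be balanced by a sustained error. The sketch below uses a hypothetical, normalized engine model (torque equal to valve opening, arbitrary inertia, gain and bias) chosen only to show that offset; it is not a model of any real engine.

```python
def simulate_governor(load: float, kp: float = 4.0, setpoint: float = 1.0,
                      bias: float = 0.5, inertia: float = 2.0,
                      dt: float = 0.01, steps: int = 20_000) -> float:
    """Return the speed an engine settles at under proportional-only control.

    Hypothetical normalized model: engine torque equals the valve opening,
    and inertia * d(speed)/dt = torque - load. The bias is the valve
    opening that exactly balances a load of 0.5 at the setpoint speed.
    """
    speed = setpoint
    for _ in range(steps):
        valve = bias + kp * (setpoint - speed)  # proportional action only
        valve = min(max(valve, 0.0), 1.0)       # valve travel is limited to 0..1
        speed += (valve - load) * dt / inertia  # Euler step of the speed equation
    return speed


for load in (0.5, 0.7):
    print(f"load {load}: speed settles at {simulate_governor(load):.3f}")
```

With the assumed gain of 4, raising the load from 0.5 to 0.7 leaves the speed about 0.2 / 4 = 0.05 below the setpoint. A higher gain shrinks the offset but cannot remove it; eliminating it requires integral (reset) action, as in the PID controller.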

Factory electrification brought simple electrical controls such as ladder logic, whereby push buttons could be used to activate relays to engage motor starters. Other controls such as interlocks, timers and limit switches could be added to the circuit.
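A common ladder-logic pattern for push-button motor control is the start/stop "seal-in" rung, in which the motor starter's own auxiliary contact keeps the coil energized after the start button is released, while a stop button or interlock breaks the rung. The Python sketch below evaluates such a rung once per scan; the signal names, and the choice of a limit switch as the interlock, are hypothetical illustrations rather than any particular vendor's notation.

```python
from dataclasses import dataclass


@dataclass
class Inputs:
    start_pressed: bool   # normally open push button
    stop_pressed: bool    # wired normally closed, so pressing it breaks the rung
    limit_tripped: bool   # hypothetical limit-switch interlock


def scan_rung(inputs: Inputs, motor_running: bool) -> bool:
    """One scan of a start/stop seal-in rung.

    Rung logic: (START or seal-in contact) and not STOP and not LIMIT.
    """
    return ((inputs.start_pressed or motor_running)
            and not inputs.stop_pressed
            and not inputs.limit_tripped)


# Press start, release it, trip the limit switch, then reset the switch.
sequence = [
    Inputs(start_pressed=True, stop_pressed=False, limit_tripped=False),
    Inputs(start_pressed=False, stop_pressed=False, limit_tripped=False),
    Inputs(start_pressed=False, stop_pressed=False, limit_tripped=True),
    Inputs(start_pressed=False, stop_pressed=False, limit_tripped=False),
]
motor = False
history = []
for step in sequence:
    motor = scan_rung(step, motor)
    history.append(motor)
print(history)
```

The motor keeps running after the start button is released (the seal-in), and once the interlock drops it out, it stays off until someone presses start again.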

Today automation usually refers to feedback control. An example is cruise control on a car, which applies continuous correction when a sensor on the controlled variable (speed in this example) deviates from a set-point and can respond in a corrective manner to hold the setting. Process control is the usual form of automation that allows industrial operations like oil refineries, steam plants generating electricity or paper mills to be run with a minimum of manpower, usually from a number of control rooms.

The need for instrumentation grew with the rapid growth of central electric power stations after the First World War. Instrumentation was also important for heat treating ovens, chemical plants and refineries. Common instruments measured temperature, pressure or flow, and readings were typically recorded on circle charts or strip charts. Until the 1930s control was typically "open loop", meaning that it did not use feedback. Operators made adjustments by such means as turning handles on valves. If this was done from a control room, a message could be sent to an operator in the plant by color-coded light, letting him know whether to increase or decrease whatever was being controlled. The signal lights were operated by a switchboard, which soon became automated. Automatic control became possible with the feedback controller, which sensed the measured variable, computed its deviation from the setpoint (and perhaps the rate of change and the time-weighted amount of that deviation) and automatically applied a calculated adjustment. A stand-alone controller may use a combination of mechanical, pneumatic, hydraulic or electronic analogs to manipulate the controlled device. Electronic controls became the norm once they were developed, and today the tendency is to use a computer in place of individual controllers.
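A feedback controller that combines the deviation, its rate of change and its time-weighted accumulation is what is now called a PID controller. The sketch below applies one to the tank-level example from the figure caption. The tank area, flows, gains and setpoint are all hypothetical, and the derivative gain is left at zero, which makes it a PI controller.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PIDController:
    """Textbook PID: proportional, integral (time-weighted) and derivative terms."""
    kp: float
    ki: float
    kd: float
    setpoint: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, measured: float, dt: float) -> float:
        error = self.setpoint - measured  # negative feedback: desired minus sensed
        self.integral += error * dt      # time-weighted accumulation of deviation
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_tank(steps: int = 2000, dt: float = 0.1) -> float:
    """Hold a tank level by adjusting an inlet valve; return the final level (m)."""
    area = 2.0        # m^2 tank cross-section (hypothetical)
    max_inflow = 1.0  # m^3/s with the valve fully open (hypothetical)
    outflow = 0.4     # m^3/s constant draw-off (hypothetical)
    level = 0.5       # m, starting level
    pid = PIDController(kp=2.0, ki=0.5, kd=0.0, setpoint=2.0)
    for _ in range(steps):
        opening = pid.update(level, dt)
        opening = min(max(opening, 0.0), 1.0)  # a valve can only open 0-100%
        level = max(level + (opening * max_inflow - outflow) * dt / area, 0.0)
    return level


print(f"final level: {simulate_tank():.3f} m")
```

Because the valve saturates fully open at first, the integral term winds up and the level overshoots before settling at the setpoint; practical controllers often add anti-windup logic to limit this.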

By the late 1930s feedback control was gaining widespread use. Feedback control was an important technology for continuous production.

Automation of the telephone system allowed dialing local numbers instead of having calls placed through an operator. Further automation allowed callers to place long distance calls by direct dial. Eventually almost all operators were replaced with automation.

Machine tools were automated with Numerical control (NC) in the 1950s. This soon evolved into computerized numerical control (CNC).

Servomechanisms are commonly position or speed control devices that use feedback. Understanding of these devices is covered in control theory. Control theory was successfully applied to steering ships in the 1890s, but after meeting with personnel resistance it was not widely implemented for that application until after the First World War. Servomechanisms are extremely important in providing automatic stability control for airplanes and in a wide variety of industrial applications.

A set of six-axis robots used for welding.

Robots are commonly used for hazardous jobs like paint spraying, and for repetitive jobs requiring high precision such as welding and the assembly and soldering of electronics like car radios.

Industrial robots were used on a limited scale from the 1960s but began their rapid growth phase in the mid-1980s after the widespread availability of microprocessors used for their control. By 2000 there were over 700,000 robots worldwide.

Computers, semiconductors, data processing and information technology

Unit record equipment

Early IBM tabulating machine. Common applications were accounts receivable, payroll and billing.

Card from a Fortran program: Z(1) = Y + W(1). The punched card carried over from tabulating machines to stored program computers before being replaced by terminal input and magnetic storage.

Early electric data processing was done by running punched cards through tabulating machines, with the holes in the cards allowing electrical contacts to advance electromechanical counters. Tabulating machines belonged to a category called unit record equipment, through which the flow of punched cards was arranged in a program-like sequence to allow sophisticated data processing. Unit record equipment was widely used before the introduction of computers.
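A typical unit-record job ran a deck through a sorter to group the cards by a key column, then through a tabulator that accumulated totals and printed a subtotal each time the key changed (a "control break"). The Python sketch below mimics that two-pass flow on hypothetical payroll cards; the departments, employee numbers and hours are invented for illustration.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical payroll "cards": (department, employee number, hours worked)
cards = [
    ("ASSEMBLY", 1042, 44),
    ("SHIPPING", 2210, 40),
    ("ASSEMBLY", 1007, 38),
    ("SHIPPING", 2203, 46),
    ("ASSEMBLY", 1019, 40),
]

# Pass 1: the sorter orders the deck on the department column.
deck = sorted(cards, key=itemgetter(0))

# Pass 2: the tabulator adds up hours and emits a subtotal at each control break.
subtotals = {}
for department, group in groupby(deck, key=itemgetter(0)):
    subtotals[department] = sum(hours for _, _, hours in group)
grand_total = sum(subtotals.values())

for department, hours in subtotals.items():
    print(f"{department:<10}{hours:>5}")
print(f"{'TOTAL':<10}{grand_total:>5}")
```

The sort pass matters because `groupby`, like a tabulator detecting a control break, only groups adjacent records with the same key.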

The usefulness of tabulating machines was demonstrated by compiling the 1890 U.S. census, allowing the census to be processed in less than a year and with great labor savings compared to the estimated 13 years by the previous manual method.

Stored program computers

The first digital computers were more productive than tabulating machines, but not by a great amount. Early computers used thousands of vacuum tubes (thermionic valves), which used a lot of electricity and constantly needed replacing. By the 1950s vacuum tubes were being replaced by transistors, which were much more reliable and used relatively little electricity. By the 1960s thousands of transistors and other electronic components could be manufactured on a silicon semiconductor wafer as integrated circuits, which are universally used in today's computers.

Computers used paper tape and punched cards for data and program input until the 1980s, when it was still common to receive monthly utility bills printed on a punched card that was returned with the customer’s payment.

In 1973 IBM introduced point of sale (POS) terminals in which electronic cash registers were networked to the store mainframe computer. By the 1980s bar code readers were added. These technologies automated inventory management. Wal-Mart was an early adopter of POS. The Bureau of Labor Statistics estimated that bar code scanners at checkout increased ringing speed by 30% and reduced labor requirements of cashiers and baggers by 10-15%.

Data storage became better organized after the development of relational database software, which allowed data to be stored in different tables. For example, a hypothetical airline may have numerous tables such as airplanes, employees, maintenance contractors, caterers, flights, airports, payments and tickets, each containing a narrower set of more specific information than would a flat file such as a spreadsheet. These tables are related by common data fields called keys. Data can be retrieved in various specific configurations by posing a query, without having to pull up a whole table. This makes it easy, for example, to find a passenger's seat assignment by a variety of means, such as ticket number or name, and to return only the queried information.
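The airline example can be sketched with Python's built-in `sqlite3` module. The three tables, their columns and all of the data are hypothetical; the point is that passengers, flights and tickets live in separate tables linked by key columns, and a query returns only the requested fields.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE passengers (
        passenger_id INTEGER PRIMARY KEY,
        name         TEXT NOT NULL
    );
    CREATE TABLE flights (
        flight_id   INTEGER PRIMARY KEY,
        flight_no   TEXT NOT NULL,
        origin      TEXT NOT NULL,
        destination TEXT NOT NULL
    );
    CREATE TABLE tickets (
        ticket_no    TEXT PRIMARY KEY,
        passenger_id INTEGER NOT NULL REFERENCES passengers(passenger_id),
        flight_id    INTEGER NOT NULL REFERENCES flights(flight_id),
        seat         TEXT
    );
    INSERT INTO passengers VALUES (1, 'A. Smith'), (2, 'B. Jones');
    INSERT INTO flights VALUES (10, 'XY123', 'JFK', 'ORD');
    INSERT INTO tickets VALUES ('T-0001', 1, 10, '14C'), ('T-0002', 2, 10, '3A');
""")

# Join the three tables through their key columns; only three fields come back.
BASE_QUERY = (
    "SELECT p.name, f.flight_no, t.seat "
    "FROM tickets AS t "
    "JOIN passengers AS p ON p.passenger_id = t.passenger_id "
    "JOIN flights AS f ON f.flight_id = t.flight_id "
)


def seat_by_ticket(ticket_no: str):
    """Look up a seat by ticket number (the tickets table's primary key)."""
    return conn.execute(BASE_QUERY + "WHERE t.ticket_no = ?", (ticket_no,)).fetchone()


def seat_by_name(name: str):
    """Look up seats by passenger name, reaching tickets through passenger_id."""
    return conn.execute(BASE_QUERY + "WHERE p.name = ?", (name,)).fetchall()


print(seat_by_ticket("T-0002"))
print(seat_by_name("A. Smith"))
```

The same seat can be reached from either the ticket number or the passenger's name without scanning or returning whole tables, which is the advantage over a flat file described above.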

Since the mid-1990s, interactive web pages have allowed users to access various servers over the Internet to engage in e-commerce, such as online shopping, paying bills, trading stocks, managing bank accounts and renewing auto registrations. This is the ultimate form of back office automation because the transaction information is transferred directly to the database.

Computers also greatly increased the productivity of the communications sector, notably by eliminating telephone operators. In engineering, computers replaced manual drafting with computer-aided design (CAD), with an average 500% increase in a draftsman's output.[17] Software was developed for calculations used in electronic circuit design, stress analysis, and heat and material balances. Process simulation software has been developed for both steady-state and dynamic simulation; the latter can give the user an experience very similar to operating a real process, such as a refinery or paper mill, allowing the user to optimize the process or experiment with process modifications.

Automated teller machines (ATMs) became popular in recent decades, and self-checkout at retailers appeared in the 1990s.

Airline reservation systems and banking are areas where computers are practically essential. Modern military systems also rely on computers.

In 1959 Texaco’s Port Arthur refinery became the first chemical plant to use digital process control.

Computers did not revolutionize manufacturing, because automation in the form of control systems had already existed for decades; however, computers did allow more sophisticated control, which led to improved product quality and process optimization.

Long term decline in productivity growth

"The years 1929-1941 were, in the aggregate, the most technologically progressive of any comparable period in U.S. economic history." Alexander J. Field

"As industrialization has proceeded, its effects, relatively speaking, have become less, not more, revolutionary" ... "There has, in effect, been a general progression in industrial commodities from a deficiency to a surplus of capital relative to internal investments." Alan Sweezy, 1943

U.S. productivity growth has been in long-term decline since the early 1970s, with the exception of a 1996–2004 spike caused by an acceleration of Moore's law semiconductor innovation. Part of the early decline was attributed to increased government regulation since the 1960s, including stricter environmental regulations. Part of the decline in productivity growth is due to the exhaustion of opportunities, especially as the traditionally high-productivity sectors decline in size. Robert J. Gordon described productivity as "One big wave" that crested and is now receding to a lower level, while M. King Hubbert called the great productivity gains preceding the Great Depression a "one time event."

Because of reduced population growth in the U.S. and a peaking of productivity growth, sustained U.S. GDP growth has never returned to the 4%-plus rates of the decades before World War I.

The computer and computer-like semiconductor devices used in automation are the most significant productivity-improving technologies developed in the final decades of the twentieth century; however, their contribution to overall productivity growth was disappointing. Most of the productivity growth occurred in the new computer industry and related industries. Economist Robert J. Gordon is among those who questioned whether computers lived up to the great innovations of the past, such as electrification. This issue is known as the productivity paradox. Gordon's (2013) analysis of U.S. productivity identifies two possible surges in growth: one during 1891–1972, and a second during 1996–2004 driven by the acceleration in Moore's law-related technological innovation.

Improvements in productivity affected the relative sizes of various economic sectors by reducing prices and employment. Agricultural productivity released labor at a time when manufacturing was growing. Manufacturing productivity growth peaked with factory electrification and automation, but remains significant. However, as the manufacturing sector shrank in relative size, the government and service sectors, which have low productivity growth, grew.

Improvement in living standards

An hour's work in 1998 bought 11 times as much chicken as in 1900. Many consumer items show similar declines in terms of work time.

Chronic hunger and malnutrition were the norm for the majority of the world's population, including that of England and France, until the latter part of the 19th century. Until about 1750, in large part due to malnutrition, life expectancy in France was about 35 years, and only slightly higher in England. The U.S. population of the time was adequately fed, was much taller and had life expectancies of 45–50 years.

The gains in standards of living have been accomplished largely through increases in productivity. In the U.S., the amount of personal consumption that could be bought with one hour of work was about $3.00 in 1900 and increased to about $22 by 1990, measured in 2010 dollars. For comparison, a U.S. worker today earns more (in terms of buying power) working for ten minutes than subsistence workers, such as the English mill workers that Friedrich Engels wrote about in 1844, earned in a 12-hour day.
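The two endpoints above, roughly $3.00 and $22 of consumption per hour of work in 1900 and 1990, imply an average compound growth rate. As a rough illustration only, assuming steady compound growth over the 90 years (a simplification; the actual path was uneven), the annual rate r satisfies 3.00(1 + r)^90 = 22:

```latex
r = \left(\frac{22}{3.00}\right)^{1/90} - 1
  = \exp\!\left(\frac{\ln 7.33}{90}\right) - 1
  \approx \exp(0.0221) - 1
  \approx 0.022
```

That is, purchasing power per hour worked grew by about 2.2% a year on average. Because both endpoint figures are approximate, this rate is approximate as well.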