From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Marxism%E2%80%93Leninism

Marxism–Leninism (Russian: марксизм-ленинизм, romanized: marksizm-leninizm) is a communist ideology that became the largest faction of the communist movement in the world in the years following the October Revolution. It was the predominant ideology of most communist governments throughout the 20th century. It was developed in the Soviet Union by Joseph Stalin and drew on elements of Bolshevism, Leninism, and Marxism. It was the state ideology of the Soviet Union, Soviet satellite states in the Eastern Bloc, and various countries in the Non-Aligned Movement and Third World during the Cold War, as well as the Communist International after Bolshevization. Today, Marxism–Leninism is the official ideology of the ruling parties of China, Cuba, Laos, and Vietnam, as well as many other communist parties. The state ideology of North Korea is derived from Marxism–Leninism, although its evolution is disputed.

Marxism–Leninism was developed from Bolshevism by Joseph Stalin in the 1920s based on his understanding and synthesis of classical Marxism and Leninism. Marxism–Leninism holds that a two-stage communist revolution is needed to replace capitalism. A vanguard party, organized through democratic centralism, would seize power on behalf of the proletariat and establish a one-party communist state. The state would control the means of production, suppress opposition, counter-revolution, and the bourgeoisie, and promote Soviet collectivism, to pave the way for an eventual communist society that would be classless and stateless.

After the death of Vladimir Lenin in 1924, Marxism–Leninism became a distinct movement in the Soviet Union when Stalin and his supporters gained control of the Communist Party of the Soviet Union (CPSU). It rejected the common notion among Western Marxists of world revolution as a prerequisite for building socialism, in favour of the concept of socialism in one country. According to its supporters, the gradual transition from capitalism to socialism was signified by the introduction of the first five-year plan and the 1936 Soviet Constitution. By the late 1920s, Stalin had established ideological orthodoxy in the Russian Communist Party (Bolsheviks), the Soviet Union, and the Communist International in order to create a universal Marxist–Leninist praxis. The formulation of the Soviet version of dialectical and historical materialism in the 1930s by Stalin and his associates, such as in Stalin's text Dialectical and Historical Materialism, became the official Soviet interpretation of Marxism and was taken as an example by Marxist–Leninists in other countries; according to the Great Russian Encyclopedia, this text became the foundation of the philosophy of Marxism–Leninism. In 1938, Stalin's official textbook History of the Communist Party of the Soviet Union (Bolsheviks) popularised Marxism–Leninism.

The internationalism of Marxism–Leninism was expressed in support for revolutions in other countries, initially through the Communist International and, after de-Stalinisation, through the concepts of the national democratic state and the state of socialist orientation. The establishment of other communist states after World War II resulted in Sovietisation, and these states tended to follow the Soviet Marxist–Leninist model of five-year plans and rapid industrialisation, political centralisation, and repression. During the Cold War, the Soviet Union and its Marxist–Leninist allies were one of the major forces in international relations. With the death of Stalin and the ensuing de-Stalinisation, Marxism–Leninism underwent several revisions and adaptations such as Guevarism, Titoism, Ho Chi Minh Thought, Hoxhaism, and Maoism, with the latter two constituting anti-revisionist Marxism–Leninism. These adaptations caused several splits between communist states, resulting in the Tito–Stalin split, the Sino-Soviet split, and the Sino-Albanian split. As the Cold War waned and concluded with the demise of much of the socialist world, many of the surviving communist states reformed their economies and embraced market socialism. Complementing this economic shift, the Communist Party of China developed Maoism (also known as Mao Zedong Thought) into Deng Xiaoping Theory. Today this forms part of the governing ideology of China, with the latest developments including Xi Jinping Thought. Meanwhile, the Communist Party of Peru developed Maoism into Marxism–Leninism–Maoism, a higher stage of anti-revisionist Maoism that rejects Dengism. The latest developments in Marxism–Leninism–Maoism include Gonzaloism, Maoism-Third Worldism, National Democracy, and Prachanda Path. Ongoing Marxist–Leninist(–Maoist) insurgencies include those being waged in the Philippines, India, and Turkey. The Nepalese civil war, fought by Marxist–Leninist–Maoists, ended in their victory in 2006.

Criticism of Marxism–Leninism largely overlaps with criticism of communist party rule and mainly focuses on the actions and policies of Marxist–Leninist leaders, most notably Stalin and Mao Zedong. Communist states have been marked by a high degree of centralised control by the state and the ruling communist party, political repression, state atheism, collectivisation and the use of labour camps. Historians such as Silvio Pons and Robert Service stated that the repression and totalitarianism came from Marxist–Leninist ideology. Historians such as Michael Geyer and Sheila Fitzpatrick have offered other explanations and criticise the focus on the upper levels of society and the use of concepts such as totalitarianism, which they argue have obscured the reality of the system. While the emergence of the Soviet Union as the world's first nominally communist state led to communism's widespread association with Marxism–Leninism and the Soviet model, several academics say that Marxism–Leninism in practice was a form of state capitalism. The socio-economic nature of communist states, especially that of the Soviet Union during the Stalin era (1924–1953), has been much debated, being variously labelled a form of bureaucratic collectivism, state capitalism, state socialism, or an entirely unique mode of production. The Eastern Bloc, including the communist states in Central and Eastern Europe as well as the Third World socialist regimes, has been variously described as made up of "bureaucratic-authoritarian systems", and China's socio-economic structure has been referred to as "nationalistic state capitalism".

Overview

Communist states

Bolshevism was the ideological basis for the establishment of the Soviet Union in the former Russian Empire. As the only legal vanguard party, the Bolshevik party decided almost all policies, which it represented as correct. Because Leninism was the revolutionary means of achieving socialism in the praxis of government, the relationship between ideology and decision-making inclined toward pragmatism, and most policy decisions were taken in light of the continual and permanent development of Marxism–Leninism, with ideological adaptation to material conditions. In the 1917 Russian Constituent Assembly election, the Bolshevik Party lost to the Socialist Revolutionary Party, obtaining 23.3% of the vote against the latter's 37.6%. On 6 January 1918, the Draft Decree on the Dissolution of the Constituent Assembly was issued by the Central Executive Committee of the Congress of Soviets, a committee dominated by Vladimir Lenin, who had previously supported free multi-party elections. After the Bolshevik defeat, Lenin began referring to the assembly as a "deceptive form of bourgeois-democratic parliamentarism". This was criticised as the development of vanguardism into a hierarchical party elite that controlled society.

Within five years of Lenin's death, Joseph Stalin had completed his rise to power and, as leader of the Soviet Union, theorised and applied the socialist theories of Lenin and Karl Marx as political expedients for realising his plans for the Soviet Union and for world socialism. Stalin's Concerning Questions of Leninism (1926) presented Marxism–Leninism as a separate communist ideology and featured a global hierarchy of communist parties and revolutionary vanguard parties in each country of the world. With that, Stalin's application of Marxism–Leninism to the situation of the Soviet Union became Stalinism, the official state ideology until his death in 1953. In Marxist political discourse, Stalinism, denoting and connoting the theory and praxis of Stalin, has two usages: praise of Stalin by Marxist–Leninists who believe Stalin successfully developed Lenin's legacy, and criticism of Stalin by Marxist–Leninists and other Marxists who repudiate Stalin's political purges, social-class repressions and bureaucratic terrorism.

As the Left Opposition to Stalin within the Soviet party and government, Leon Trotsky and the Trotskyists argued that Marxist–Leninist ideology contradicted Marxism and Leninism in theory, and that Stalin's ideology was therefore not useful for the implementation of socialism in Russia. Moreover, Trotskyists within the party identified their anti-Stalinist communist ideology as Bolshevik–Leninism and supported permanent revolution to differentiate themselves from Stalin's justification and implementation of socialism in one country.

After the Sino-Soviet split of the 1960s, the Chinese Communist Party and the Communist Party of the Soviet Union each claimed to be the sole heir and successor to Stalin concerning the correct interpretation of Marxism–Leninism, and the ideological leader of world communism. In that vein, Mao Zedong Thought, Mao Zedong's updating and adaptation of Marxism–Leninism to Chinese conditions, in which revolutionary praxis is primary and ideological orthodoxy is secondary, represents urban Marxism–Leninism adapted to pre-industrial China. The claim that Mao had adapted Marxism–Leninism to Chinese conditions evolved into the idea that he had updated it in a fundamental way that applied to the world as a whole. Consequently, Mao Zedong Thought became the official state ideology of the People's Republic of China as well as the ideological basis of communist parties around the world that sympathised with China. In the late 1970s, the Peruvian communist party Shining Path developed and synthesised Mao Zedong Thought into Marxism–Leninism–Maoism, a contemporary variety of Marxism–Leninism that is purportedly a higher level of Marxism–Leninism and can be applied universally.

Following the Sino-Albanian split of the 1970s, a small portion of Marxist–Leninists began to downplay or repudiate the role of Mao in the Marxist–Leninist international movement in favour of the Albanian Labour Party and stricter adherence to Stalin. The Sino-Albanian split was caused by Albania's rejection of China's Realpolitik of Sino–American rapprochement, specifically the 1972 Mao–Nixon meeting, which the anti-revisionist Albanian Labour Party perceived as an ideological betrayal of Mao's own Three Worlds Theory, a theory that excluded such political rapprochement with the West. To the Albanian Marxist–Leninists, the Chinese dealings with the United States indicated Mao's weakened practical commitment to ideological orthodoxy and proletarian internationalism. In response to Mao's apparently unorthodox deviations, Enver Hoxha, head of the Albanian Labour Party, theorised anti-revisionist Marxism–Leninism, referred to as Hoxhaism, which retained orthodox Marxism–Leninism when compared to the ideology of the post-Stalin Soviet Union.

In North Korea, Marxism–Leninism was superseded by Juche in the 1970s. This was made official in 1992 and 2009, when constitutional references to Marxism–Leninism were dropped and replaced with Juche. In 2009, the constitution was quietly amended so that it not only removed all Marxist–Leninist references present in the first draft but also dropped all references to communism. Juche has been described by Michael Seth as a version of Korean ultranationalism, which eventually developed after losing its original Marxist–Leninist elements. According to North Korea: A Country Study by Robert L. Worden, Marxism–Leninism was abandoned immediately after the start of de-Stalinisation in the Soviet Union and has been totally replaced by Juche since at least 1974. Daniel Schwekendiek wrote that what made North Korean Marxism–Leninism distinct from that of China and the Soviet Union was that it incorporated national feelings and macro-historical elements into the socialist ideology, opting for its "own style of socialism". The major Korean elements are the emphasis on traditional Confucianism, the memory of the traumatic experience of Korea under Japanese rule, and a focus on autobiographical features of Kim Il Sung as a guerrilla hero.

The People's Democratic Republic of Yemen (PDRY, also known as South Yemen), which existed between 1967 and 1990, was the only openly communist (Marxist–Leninist) state in the Arab world. South Yemen pursued policies in line with this ideology and became an important ally of the Soviet Union and the Eastern Bloc because of its access to the Gulf of Aden. The USSR provided it with comprehensive assistance, including loans, specialists and weapons. Relations between South Yemen and many other Arab countries remained poor, since many communist figures from across the region took refuge there after unsuccessful attempts to organise coups d'état in their home countries.

There were also communist states in Africa, such as Ethiopia. In 1974, the military overthrew Emperor Haile Selassie and installed a military junta known as the Derg. The Derg quickly aligned itself with the Soviet Union on the basis of communism, implementing Marxist–Leninist ideals that were radical for the country. The brutal imposition of these radical ideas led to a debilitating civil war. In 1977, after a series of political purges and executions, Mengistu Haile Mariam became the leader of the Derg. He continued this course and brought Ethiopia closer to the USSR, which became Ethiopia's main trading partner, supplying it with everything from weapons and equipment to military advisers and specialists. Mengistu ultimately built a highly militarised state with the largest army in sub-Saharan Africa. The Soviet Union pushed Mengistu to create a "People's Democratic" system, as in the Eastern Bloc countries, but Mengistu did so very reluctantly: Ethiopia became the "People's Democratic Republic of Ethiopia" only in 1987. Although the Derg was formally dissolved, largely the same people remained in power.

In the four existing socialist and people's democratic states other than North Korea, namely China, Cuba, Laos, and Vietnam, the ruling parties hold Marxism–Leninism as their official ideology, although they interpret it differently in terms of practical policy. Marxism–Leninism is also the ideology of anti-revisionist, Hoxhaist, Maoist, and neo-Stalinist communist parties worldwide. The anti-revisionists criticise the rule of some communist states, claiming that they were state capitalist countries ruled by revisionists. Although the periods and countries vary among different ideologies and parties, they generally accept that the Soviet Union was socialist during Stalin's time; Maoists believe that China became state capitalist after Mao's death, while Hoxhaists believe that China was always state capitalist and uphold Albania as the only socialist state after the Soviet Union under Stalin.

Definition, theory, and terminology

Communist ideologies and ideas have acquired a new meaning since the Russian Revolution, as they became equivalent to the ideas of Marxism–Leninism, namely the interpretation of Marxism by Vladimir Lenin and his successors. Endorsing the final objective, namely the creation of a community that owns the means of production and provides each of its participants with consumption "according to their needs", Marxism–Leninism puts forward the recognition of class struggle as the dominant principle of social change and development. In addition, workers (the proletariat) were to carry out the mission of reconstructing society. Marxist–Leninists hailed as a historical necessity a socialist revolution led by what they termed the "vanguard of the proletariat", defined as the communist party organised hierarchically through democratic centralism. Moreover, they advocated the introduction of the dictatorship of the proletariat and the repression of classes deemed hostile. In the 1920s, Marxism–Leninism was first defined and formulated by Joseph Stalin based on his understanding of orthodox Marxism and Leninism.

In 1934, Karl Radek suggested the formulation Marxism–Leninism–Stalinism in an article in Pravda to stress the importance of Stalin's leadership to the Marxist–Leninist ideology. Radek's suggestion failed to catch on, as Stalin as well as the CPSU's ideologists preferred to continue using the term Marxism–Leninism. Marxism–Leninism–Maoism became the name for the ideology of the Chinese Communist Party and of other communist parties that broke away from national communist parties after the Sino-Soviet split, especially once the split was finalised by 1963. The Italian Communist Party was mainly influenced by Antonio Gramsci, who offered a more democratic explanation than Lenin's of why workers remained passive. A key difference between Maoism and other forms of Marxism–Leninism is the Maoist view that the peasantry, led by the working class, should be the bulwark of revolutionary energy. Three common Maoist values are revolutionary populism, pragmatism, and dialectics.

According to Rachel Walker, "Marxism–Leninism" is an empty term that depends on the approach and basis of ruling communist parties; it is dynamic and open to redefinition, being both fixed and not fixed in meaning. As a term, "Marxism–Leninism" is misleading, because Marx and Lenin never sanctioned or supported the creation of an -ism after them, and revealing, because, having been popularised by Stalin after Lenin's death, it contained three clear doctrinal and institutionalised principles that became a model for later Soviet-type regimes. Its global influence, which at its height covered at least one-third of the world's population, has made Marxist–Leninist a convenient label for the Communist bloc as a dynamic ideological order.

Historiography

Historiography of communist states is polarised. According to John Earl Haynes and Harvey Klehr, historiography is characterised by a split between traditionalists and revisionists. "Traditionalists", who characterise themselves as objective reporters of an alleged totalitarian nature of communism and communist states, are criticised by their opponents as being anti-communist, even fascist, in their eagerness to keep focusing on the issues of the Cold War. Alternative characterisations for traditionalists include "anti-communist", "conservative", "Draperite" (after Theodore Draper), "orthodox", and "right-wing"; Norman Markowitz, a prominent "revisionist", referred to them as "reactionaries", "right-wing romantics", "romantics", and "triumphalist" who belong to the "HUAC school of CPUSA scholarship". According to Haynes and Klehr, "revisionists" are more numerous and dominate academic institutions and learned journals. A suggested alternative formulation is "new historians of American communism", but that has not caught on because these historians describe themselves as unbiased and scholarly and contrast their work with that of anti-communist traditionalists, whom they would term biased and unscholarly. Academic Sovietology after World War II and during the Cold War was dominated by the "totalitarian model" of the Soviet Union, stressing the absolute nature of Stalin's power. The "revisionist school", beginning in the 1960s, focused on relatively autonomous institutions which might influence policy at the higher level. Matt Lenoe described the "revisionist school" as representing those who "insisted that the old image of the Soviet Union as a totalitarian state bent on world domination was oversimplified or just plain wrong. They tended to be interested in social history and to argue that the Communist Party leadership had had to adjust to social forces."
These "revisionist school" historians challenged the "totalitarian model", as outlined by political scientist Carl Joachim Friedrich, which held that the Soviet Union and other communist states were totalitarian systems characterised by a personality cult and the almost unlimited powers of a "great leader" such as Stalin. By the 1980s, the model was considered outdated, particularly for the post-Stalinist era.

Some academics, such as Stéphane Courtois (The Black Book of Communism), Steven Rosefielde (Red Holocaust), and Rudolph Rummel (Death by Government), wrote of mass excess deaths under Marxist–Leninist regimes. These authors defined the political repression by communists as a "Communist democide", "Communist genocide", or "Red Holocaust", or followed the "victims of Communism" narrative. Some of them compared Communism to Nazism and described deaths under Marxist–Leninist regimes (civil wars, deportations, famines, repressions, and wars) as a direct consequence of Marxism–Leninism. Some of these works, in particular The Black Book of Communism and its figure of 93 or 100 million deaths, are cited by political groups and Members of the European Parliament. Without denying the tragedy of the events, other scholars criticise the interpretation that sees communism as the main culprit, arguing that it presents a biased or exaggerated anti-communist narrative. Several academics propose a more nuanced analysis of Marxist–Leninist rule, stating that anti-communist narratives have exaggerated the extent of political repression and censorship in communist states, and drawing comparisons with what they see as atrocities perpetrated by capitalist countries, particularly during the Cold War. These academics include Mark Aarons, Noam Chomsky, Jodi Dean, Kristen Ghodsee, Seumas Milne, and Michael Parenti. Ghodsee, Nathan J. Robinson, and Scott Sehon wrote about the merits of taking an anti-anti-communist position that does not deny the atrocities but makes a distinction between anti-authoritarian communist and other socialist currents, both of which have been victims of repression.

History

Bolsheviks, February Revolution, and Great War (1903–1917)

Although Marxism–Leninism was created by Joseph Stalin in the Soviet Union after Vladimir Lenin's death, and remained the official state ideology there after de-Stalinisation as well as in other communist states, elements of Marxism–Leninism predate this. The philosophy of Marxism–Leninism originated as the proactive political praxis of the Bolshevik faction of the Russian Social Democratic Labour Party in realising political change in Tsarist Russia. Lenin's leadership transformed the Bolsheviks into the party's political vanguard, composed of professional revolutionaries who practised democratic centralism to elect leaders and officers and to determine policy through free discussion, which was then decisively realised through united action. The vanguardism of proactive, pragmatic commitment to achieving revolution was the Bolsheviks' advantage in out-manoeuvring the liberal and conservative political parties that advocated social democracy without a practical plan of action for the Russian society they wished to govern. Leninism allowed the Bolshevik party to assume command of the October Revolution in 1917.

Twelve years before the October Revolution in 1917, the Bolsheviks had failed to assume control of the Revolution of 1905 (22 January 1905 – 16 June 1907) because the centres of revolutionary action were too far apart for proper political coordination. To generate revolutionary momentum from the Tsarist army killings on Bloody Sunday (22 January 1905), the Bolsheviks encouraged workers to use political violence in order to compel the bourgeois social classes (the nobility, the gentry and the bourgeoisie) to join the proletarian revolution to overthrow the absolute monarchy of the Tsar of Russia. Most importantly, the experience of this revolution led Lenin to conceive of the means of sponsoring socialist revolution through agitation, propaganda and a well-organised, disciplined and small political party.

Despite secret-police persecution by the Okhrana (Department for Protecting the Public Security and Order), émigré Bolsheviks returned to Russia to agitate, organise and lead, but they went back into exile when popular revolutionary fervour waned in 1907. The failure of the 1905 Revolution drove Bolsheviks, Mensheviks, Socialist Revolutionaries and anarchists such as the Black Guards into exile from Russia. Membership in both the Bolshevik and Menshevik ranks diminished from 1907 to 1908, while the number of people taking part in strikes in 1907 was 26% of the figure for 1905, dropping to 6% in 1908 and 2% in 1910. The 1908–1917 period was one of disillusionment in the Bolshevik party over Lenin's leadership, with members opposing him over scandals involving his expropriations and methods of raising money for the party. This political defeat was aggravated by Tsar Nicholas II's political reforms of the Imperial Russian government. In practice, the formalities of political participation (the electoral plurality of a multi-party system with the State Duma and the Russian Constitution of 1906) were the Tsar's piecemeal and cosmetic concessions to social progress, because public office remained available only to the aristocracy, the gentry and the bourgeoisie. These reforms did not resolve the illiteracy, poverty or malnutrition of the peasant, underclass majority of Imperial Russia.

In Swiss exile, Lenin developed Marx's philosophy and extrapolated decolonisation by colonial revolt as a reinforcement of proletarian revolution in Europe. In 1912, Lenin resolved a factional challenge to his ideological leadership of the RSDLP by the Forward Group in the party, usurping the all-party congress to transform the RSDLP into the Bolshevik party. In the early 1910s, Lenin remained so unpopular in the international socialist movement that by 1914 it considered censoring him. Unlike the European socialists who chose bellicose nationalism over anti-war internationalism, a philosophical and political break that was a consequence of the internationalist–defencist schism among socialists, the Bolsheviks opposed the Great War (1914–1918). That nationalist betrayal of socialism was denounced by a small group of socialist leaders who opposed the Great War, including Rosa Luxemburg, Karl Liebknecht and Lenin, who said that the European socialists had failed the working classes by preferring patriotic war to proletarian internationalism. To debunk patriotism and national chauvinism, Lenin explained in the essay Imperialism, the Highest Stage of Capitalism (1917) that capitalist economic expansion leads to colonial imperialism, which is then regulated with nationalist wars such as the Great War among the empires of Europe. To relieve strategic pressures on the Western Front (4 August 1914 – 11 November 1918), Imperial Germany impelled the withdrawal of Imperial Russia from the war's Eastern Front (17 August 1914 – 3 March 1918) by sending Lenin and his Bolshevik cohort to Russia in a diplomatically sealed train, expecting them to take part in revolutionary activity.

October Revolution and Russian Civil War (1917–1922)

In March 1917, the abdication of Tsar Nicholas II led to the Russian Provisional Government (March–July 1917), which then proclaimed the Russian Republic (September–November 1917). Later, in the October Revolution, the Bolsheviks' seizure of power from the Provisional Government resulted in their establishment of the Russian Soviet Federative Socialist Republic (1917–1991), yet parts of Russia remained occupied by the counter-revolutionary White Movement of anti-communists, who had united to form the White Army to fight the Russian Civil War (1917–1922) against the Bolshevik government. Moreover, amid the civil war between Whites and Reds, the Bolsheviks' withdrawal from the Great War with the Treaty of Brest-Litovsk provoked the Allied Intervention in the Russian Civil War by the armies of seventeen countries, including Great Britain, France, Italy, the United States and Imperial Japan.

Elsewhere, the successful October Revolution in Russia had facilitated the German Revolution of 1918–1919 and revolutions and interventions in Hungary (1918–1920), which produced the First Hungarian Republic and the Hungarian Soviet Republic. In Berlin, the German government, aided by Freikorps units, fought and defeated the Spartacist uprising, which began as a general strike. In Munich, the local Freikorps fought and defeated the Bavarian Soviet Republic. In Hungary, the disorganised workers who had proclaimed the Hungarian Soviet Republic were fought and defeated by the royal armies of the Kingdom of Romania and the Kingdom of Yugoslavia as well as the army of the First Republic of Czechoslovakia. These communist movements were soon crushed by anti-communist forces, and attempts to create an international communist revolution failed. However, a successful revolution occurred in Asia, when the Mongolian Revolution of 1921 established the Mongolian People's Republic (1924–1992). The percentage of Bolshevik delegates in the All-Russian Congress of Soviets increased from 13% at the first congress in July 1917 to 66% at the fifth congress in 1918.

As promised to the Russian peoples in October 1917, the Bolsheviks ended Russia's participation in the Great War on 3 March 1918. That same year, the Bolsheviks consolidated government power by expelling the Mensheviks, the Socialist Revolutionaries and the Left Socialist-Revolutionaries from the soviets. The Bolshevik government established the Cheka (All-Russian Extraordinary Commission) secret police to eliminate anti-Bolshevik opposition in the country. Initially, there was strong opposition to the Bolshevik régime because it had not resolved the food shortages and material poverty of the Russian peoples as promised in October 1917. Amid that social discontent, the Cheka reported 118 uprisings, including the Kronstadt rebellion (7–17 March 1921) against the economic austerity of War Communism imposed by the Bolsheviks. The principal obstacles to Russian economic development and modernisation were great material poverty and the lack of modern technology, conditions that orthodox Marxism considered unfavourable to communist revolution. Agricultural Russia was sufficiently developed for establishing capitalism, but it was insufficiently developed for establishing socialism. For Bolshevik Russia, the 1921–1924 period featured the simultaneous occurrence of economic recovery, famine (1921–1922) and a financial crisis (1924). By 1924, considerable economic progress had been achieved, and by 1926 the Bolshevik government had reached economic production levels equal to Russia's production levels in 1913.

Initial Bolshevik economic policies from 1917 to 1918 were cautious, with limited nationalisations of the means of production that had been the private property of the Russian aristocracy during the Tsarist monarchy. Lenin was immediately committed to avoiding antagonising the peasantry and made efforts to coax them away from the Socialist Revolutionaries, allowing a peasant takeover of nobles' estates while enacting no immediate nationalisations of peasants' property. The Decree on Land (8 November 1917) fulfilled Lenin's promised redistribution of Russia's arable land to the peasants, who reclaimed their farmlands from the aristocrats, ensuring the peasants' loyalty to the Bolshevik party. To overcome the civil war's economic interruptions, the policy of War Communism (1918–1921), comprising a regulated market, state-controlled means of distribution and nationalisation of large-scale farms, was adopted to requisition and distribute grain in order to feed industrial workers in the cities whilst the Red Army was fighting the White Army's attempted restoration of the Romanov dynasty as absolute monarchs of Russia. Moreover, the politically unpopular forced grain requisitions discouraged peasants from farming, resulting in reduced harvests and food shortages that provoked labour strikes and food riots. In response, the Russian peoples created a barter and black-market economy to counter the Bolshevik government's voiding of the monetary economy.

In 1921, the New Economic Policy restored some private enterprise to animate the Russian economy. As part of Lenin's pragmatic compromise with external financial interests in 1918, Bolshevik state capitalism temporarily returned 91% of industry to private ownership or trusts until the Soviet Russians learned the technology and the techniques required to operate and administer industries. Importantly, Lenin declared that socialism could not be developed in the manner originally envisaged by Marxists. A key factor affecting the Bolshevik regime was Russia's backward economic conditions, which orthodox Marxist theory considered unfavourable to communist revolution. At the time, orthodox Marxists claimed that Russia was ripe for the development of capitalism, but not yet for socialism. Lenin explained the situation as follows: "Our poverty is so great that we cannot, at one stroke, restore full-scale factory, state, socialist production." He added that the development of socialism would proceed according to the actual material and socio-economic conditions in Russia and not as abstractly described by Marx for industrialised Europe in the 19th century. Because Russia had too few educated technical experts who could operate and administer industry, Lenin advocated the development of a large corps of technical intelligentsia to propel the industrial development of Russia to self-sufficiency and advance it through the Marxist economic stages of development.

Stalin's rise to power (1922–1928)

As he neared death after suffering strokes, Lenin wrote his Testament (December 1922), which named Trotsky and Stalin as the most able men in the Central Committee but harshly criticised them both. Lenin said that Stalin should be removed as General Secretary of the party and be replaced with "some other person who is superior to Stalin only in one respect, namely, in being more tolerant, more loyal, more polite, and more attentive to comrades." After Lenin's death on 21 January 1924, his political testament was read aloud to the Central Committee, which chose to ignore Lenin's recommended removal of Stalin as General Secretary because enough members believed Stalin had been politically rehabilitated in 1923.

Amid personally spiteful disputes about the praxis of Leninism, the October Revolution veterans Lev Kamenev and Grigory Zinoviev said that the true threat to the ideological integrity of the party was Trotsky, a personally charismatic political leader, the commanding officer of the Red Army in the Russian Civil War and the revolutionary partner of Lenin. To thwart Trotsky's likely election to head the party, Stalin, Kamenev and Zinoviev formed a troika that featured Stalin as General Secretary, the de facto centre of power in the party and the country. The direction of the party was decided in confrontations of politics and personality between Stalin's troika and Trotsky over which Marxist policy to pursue: Trotsky's policy of permanent revolution or Stalin's policy of socialism in one country. Trotsky's permanent revolution advocated rapid industrialisation, the elimination of private farming and Soviet promotion of communist revolution abroad. Stalin's socialism in one country stressed moderation and the development of positive relations between the Soviet Union and other countries to increase trade and foreign investment. To politically isolate and oust Trotsky from the party, Stalin expediently advocated socialism in one country, a policy to which he was indifferent. In 1925, the 14th Congress of the All-Union Communist Party (Bolsheviks) chose Stalin's policy, defeating Trotsky as a possible leader of the party and of the Soviet Union.

In the 1925–1927 period, Stalin dissolved the troika and disowned the centrist Kamenev and Zinoviev in favour of an expedient alliance with the three most prominent leaders of the so-called Right Opposition, namely Alexei Rykov (Premier of Russia, 1924–1929; Premier of the Soviet Union, 1924–1930), Nikolai Bukharin (General Secretary of the Comintern, 1926–1929; Editor-in-Chief of Pravda, 1918–1929), and Mikhail Tomsky (Chairman of the All-Russian Central Council of Trade Unions in the 1920s). In 1927, the party endorsed Stalin's policy of socialism in one country as the Soviet Union's national policy and expelled the leftist Trotsky and the centrists Kamenev and Zinoviev from the Politburo. By 1929, Stalin controlled the party and the Soviet Union through deception and administrative acumen. By that time, Stalin's centralised régime of socialism in one country had negatively associated Lenin's revolutionary Bolshevism with Stalinism, i.e. government by command policy to realise projects such as the rapid industrialisation of cities and the collectivisation of agriculture. Such Stalinism also subordinated the political, national and ideological interests of Asian and European communist parties to the geopolitical interests of the Soviet Union.

In the 1928–1932 period of the first five-year plan, Stalin effected the dekulakisation of the farmlands of the Soviet Union, a politically radical dispossession of the kulak class of peasant-landlords from the Tsarist social order of monarchy. As Old Bolshevik revolutionaries, Bukharin, Rykov and Tomsky recommended moderating dekulakisation to lessen its negative social impact on relations between the Soviet peoples and the party, but Stalin took umbrage and accused them of uncommunist philosophical deviations from Lenin and Marx. That implicit accusation of ideological deviationism licensed Stalin to accuse Bukharin, Rykov and Tomsky of plotting against the party, and the appearance of impropriety then compelled the resignations of the Old Bolsheviks from government and from the Politburo. Stalin completed his political purging of the party by exiling Trotsky from the Soviet Union in 1929. Afterwards, political opposition to the practical régime of Stalinism was denounced as Trotskyism (Bolshevik–Leninism), described as a deviation from Marxism–Leninism, the state ideology of the Soviet Union.

Political developments in the Soviet Union included Stalin's dismantling of the remaining elements of democracy in the party by extending his control over its institutions and eliminating any possible rivals. The party grew in numbers and modified its organisation to include more trade unions and factories. The rank and file of the party was populated with members from the trade unions and the factories, whom Stalin controlled because there were no other Old Bolsheviks to contradict Marxism–Leninism. In 1936, the Soviet Union adopted a new constitution, which ended weighted-voting preferences for workers, promulgated universal suffrage for every man and woman older than 18 years of age and organised the soviets (councils of workers) into two legislatures, namely the Soviet of the Union (representing electoral districts) and the Soviet of Nationalities (representing the ethnic groups of the country). By 1939, with the exception of Stalin himself, none of the original Bolsheviks of the October Revolution of 1917 remained in the party. The regime expected unquestioning loyalty to Stalin from all citizens.

Stalin exercised extensive personal control over the party and unleashed an unprecedented level of violence to eliminate any potential threat to his regime. While Stalin controlled political initiatives, their implementation was in the hands of localities, and local leaders often interpreted the policies in whatever way best served themselves. This abuse of power by local leaders exacerbated the violent purges and terror campaigns carried out by Stalin against party members deemed to be traitors. With the Great Purge (1936–1938), Stalin rid himself of internal enemies in the party and rid the Soviet Union of any allegedly socially dangerous and counter-revolutionary person who might have offered legitimate political opposition to Marxism–Leninism.

Stalin allowed the secret police NKVD (People's Commissariat for Internal Affairs) to rise above the law and the GPU (State Political Directorate) to use political violence to eliminate any person who might be a threat, whether real, potential or imagined. As an administrator, Stalin governed the Soviet Union by controlling the formulation of national policy, but he delegated implementation to subordinate functionaries. Such freedom of action gave local communist functionaries much discretion to interpret the intent of orders from Moscow, which also enabled their corruption. To Stalin, the correction of such abuses of authority and economic corruption was the responsibility of the NKVD. In the 1937–1938 period, the NKVD arrested 1.5 million people from every stratum of Soviet society and every rank of the party, of whom 681,692 were killed as enemies of the state. To provide manpower (manual, intellectual and technical) for the construction of socialism in one country, the NKVD established the Gulag system of forced-labour camps for ordinary criminals and political dissidents, for culturally insubordinate artists and politically incorrect intellectuals, and for homosexual people and religious anti-communists.

Socialism in one country (1928–1944)

Beginning in 1928, Stalin's five-year plans for the national economy of the Soviet Union achieved rapid industrialisation (coal, iron and steel, electricity and petroleum, among others) and the collectivisation of agriculture. Collectivisation reached 23.6% within two years (1930) and 98.0% within thirteen years (1941). As the revolutionary vanguard, the communist party organised Russian society to realise rapid industrialisation programmes as a defence against Western interference with socialism in Bolshevik Russia. The five-year plans were prepared in the 1920s, whilst the Bolshevik government fought the internal Russian Civil War (1917–1922) and repelled the external Allied intervention in the Russian Civil War (1918–1925). Vast industrialisation was initiated, focused mostly on heavy industry. The cultural revolution in the Soviet Union focused on restructuring culture and society.

During the 1930s, the rapid industrialisation of the country accelerated the Soviet people's sociological transition from poverty to relative plenty, as politically illiterate peasants passed from Tsarist serfdom to self-determination and became politically aware urban citizens. The Marxist–Leninist economic régime modernised Russia from the illiterate peasant society characteristic of monarchy to a literate, socialist society of educated farmers and industrial workers. Industrialisation led to massive urbanisation, and unemployment was virtually eliminated during the 1930s. However, this rapid transformation, including the forced collectivisation of agriculture, also resulted in the Soviet famine of 1930–1933, which killed millions.

Social developments in the Soviet Union included a shift from the relaxed social control and experimentation allowed under Lenin to Stalin's promotion of a rigid and authoritarian society based upon discipline, mixing traditional Russian values with Stalin's interpretation of Marxism. Organised religion was repressed, especially minority religious groups. Education was transformed. Under Lenin, the education system allowed relaxed discipline in schools, which became based upon Marxist theory, but in 1934 Stalin reversed this with a conservative approach: the reintroduction of formal learning, the use of examinations and grades, the assertion of the teacher's full authority and the introduction of school uniforms. Art and culture became strictly regulated under the principles of socialist realism, while Russian traditions that Stalin admired were allowed to continue.

Soviet foreign policy changed substantially between 1929 and 1941. In 1933, the Marxist–Leninist geopolitical perspective was that the Soviet Union was surrounded by capitalist and anti-communist enemies. As a result, the rise to power of Adolf Hitler and his Nazi Party government in Germany initially caused the Soviet Union to sever the diplomatic relations that had been established in the 1920s. In 1938, Stalin accommodated the Nazis and the anti-communist West by not defending Czechoslovakia, allowing Hitler, under threat of pre-emptive war, to annex the Sudetenland and "rescue the oppressed German peoples" living in Czechoslovakia.

To challenge Nazi Germany's bid for European empire and hegemony, Stalin promoted anti-fascist front organisations to encourage European socialists and democrats to join the Soviet communists in fighting throughout Nazi-occupied Europe, and made agreements with France to challenge Germany. After Germany, Britain, France and Italy signed the Munich Agreement (29 September 1938), which allowed the German annexation of the Sudetenland and paved the way for the German occupation of Czechoslovakia (1938–1945), Stalin adopted pro-German policies in the Soviet Union's dealings with Nazi Germany. In 1939, the Soviet Union and Nazi Germany agreed to the Treaty of Non-aggression between Germany and the Union of Soviet Socialist Republics (Molotov–Ribbentrop Pact, 23 August 1939) and to jointly invade and partition Poland; with the invasion of Poland, Nazi Germany started the Second World War (1 September 1939).

In the 1941–1942 period of the Great Patriotic War, the German invasion of the Soviet Union (Operation Barbarossa, 22 June 1941) was ineffectively opposed by the Red Army, which was poorly led, ill-trained and under-equipped. As a result, it fought poorly and suffered great losses of soldiers (killed, wounded and captured). The weakness of the Red Army was partly a consequence of the Great Purge (1936–1938) of senior officers and career soldiers whom Stalin considered politically unreliable. Strategically, the Wehrmacht's extensive and effective attack threatened the territorial integrity of the Soviet Union and the political integrity of Stalin's model of a communist state, as the Nazis were initially welcomed as liberators by the anti-communist and nationalist populations in the Byelorussian Soviet Socialist Republic, the Georgian Soviet Socialist Republic and the Ukrainian Soviet Socialist Republic.

The anti-Soviet nationalists' collaboration with the Nazis lasted until the Schutzstaffel and the Einsatzgruppen began their Lebensraum killings of the Jewish populations, the local communists and the civil and community leaders, killings that formed part of the Holocaust and were meant to realise the Nazi German colonisation of Bolshevik Russia. In response, Stalin ordered the Red Army to fight a total war against the German invaders, who would exterminate Slavic Russia. Hitler's attack against the Soviet Union (Nazi Germany's erstwhile ally) realigned Stalin's political priorities from the repression of internal enemies to the existential defence against external attack. The pragmatic Stalin then brought the Soviet Union into the Grand Alliance, a common front against the Axis powers (Nazi Germany, Fascist Italy and the Empire of Japan).

In the continental European countries occupied by the Axis powers, the native communist party usually led the armed resistance (guerrilla warfare and urban guerrilla warfare) against fascist military occupation. In Mediterranean Europe, the communist Yugoslav Partisans led by Josip Broz Tito effectively resisted the German Nazi and Italian Fascist occupation. In the 1943–1944 period, the Yugoslav Partisans liberated territories with Red Army assistance and established the communist political authority that became the Socialist Federal Republic of Yugoslavia. To end the Japanese occupation of China in continental Asia, Stalin ordered Mao Zedong and the Chinese Communist Party to temporarily cease the Chinese Civil War (1927–1949) against Chiang Kai-shek and the anti-communist Kuomintang as the Second United Front in the Second Sino-Japanese War (1937–1945).

In 1943, the Red Army began to repel the Nazi invasion of the Soviet Union, especially at the Battle of Stalingrad (23 August 1942 – 2 February 1943) and the Battle of Kursk (5 July – 23 August 1943). The Red Army then drove the Nazi and Fascist occupation armies from Eastern Europe until it decisively defeated Nazi Germany in the Berlin Strategic Offensive Operation (16 April – 2 May 1945). At the conclusion of the Great Patriotic War (1941–1945), the Soviet Union was a military superpower with a say in determining the geopolitical order of the world. Apart from the failed Third Period policy in the early 1930s, Marxist–Leninists played an important role in anti-fascist resistance movements, and the Soviet Union contributed to the Allied victory in World War II. In accordance with the three-power Yalta Agreement (4–11 February 1945), the Soviet Union purged native fascists and those who had collaborated with the Axis powers from the Eastern European countries the Axis had occupied, and installed native Marxist–Leninist governments.

Cold War, de-Stalinisation and Maoism (1944–1953)

Upon the Allied victory that concluded the Second World War (1939–1945), the members of the Grand Alliance resumed their pre-war geopolitical rivalries and ideological tensions. This disunity, expressed partly through the concept of totalitarianism, broke their anti-fascist wartime alliance into the anti-communist Western Bloc and the Marxist–Leninist Eastern Bloc. The renewed competition for geopolitical hegemony resulted in the bipolar Cold War (1947–1991), a protracted state of military and diplomatic tension between the United States and the Soviet Union that often threatened a Soviet–American nuclear war but usually featured proxy wars in the Third World. With the end of the Grand Alliance and the start of the Cold War, anti-fascism became part of both the official ideology and the language of communist states, especially in East Germany. Anti-fascism came to mean a general anti-capitalist struggle against the Western world and NATO, and fascist became an epithet widely used by Marxist–Leninists to smear their opponents, including democratic socialists, libertarian socialists, social democrats and other anti-Stalinist leftists.

The events that precipitated the Cold War in Europe were the Soviet, Yugoslav, Bulgarian and Albanian military interventions in the Greek Civil War (1944–1949) on behalf of the Communist Party of Greece, and the Berlin Blockade (1948–1949) by the Soviet Union. The event that precipitated the Cold War in continental Asia was the resumption of the Chinese Civil War (1927–1949) fought between the anti-communist Kuomintang and the Chinese Communist Party. After military defeat exiled Generalissimo Chiang Kai-shek and his Kuomintang nationalist government to Formosa island (Taiwan), Mao Zedong established the People's Republic of China on 1 October 1949.

In the late 1940s, the geopolitics of the Eastern Bloc countries under Soviet predominance featured an official-and-personal style of socialist diplomacy that failed Stalin and Tito when Tito refused to subordinate Yugoslavia to the Soviet Union. In 1948, circumstance and cultural personality aggravated the matter into the Yugoslav–Soviet split (1948–1955), which resulted from Tito's rejection of Stalin's demand to subordinate the Federal People's Republic of Yugoslavia to the economic and military agenda of the Soviet Union, i.e. to place Tito at Stalin's disposal. Stalin punished Tito's refusal by denouncing him as an ideological revisionist of Marxism–Leninism, by denouncing Yugoslavia's practice of Titoism as a socialism that deviated from the cause of world communism, and by expelling the Communist Party of Yugoslavia from the Communist Information Bureau (Cominform). The break from the Eastern Bloc allowed the development of a socialism with Yugoslav characteristics, which permitted doing business with the capitalist West to develop the socialist economy and the establishment of Yugoslavia's diplomatic and commercial relations with countries of both the Eastern Bloc and the Western Bloc. Yugoslavia's international relations matured into the Non-Aligned Movement (1961) of countries without political allegiance to any power bloc.

After the death of Stalin in 1953, Nikita Khrushchev became leader of the Soviet Union and of the Communist Party of the Soviet Union and then consolidated an anti-Stalinist government. In a closed session of the 20th Congress of the Communist Party of the Soviet Union, Khrushchev denounced Stalin and Stalinism in the speech On the Cult of Personality and Its Consequences (25 February 1956), in which he specified and condemned Stalin's dictatorial excesses and abuses of power, such as the Great Purge (1936–1938) and the cult of personality. Khrushchev introduced the de-Stalinisation of the party and of the Soviet Union. This included dismantling the Gulag archipelago of forced-labour camps and freeing the prisoners, as well as allowing Soviet civil society greater freedom of political expression, especially for public intellectuals of the intelligentsia such as the novelist Aleksandr Solzhenitsyn, whose literature obliquely criticised Stalin and the Stalinist police state. De-Stalinisation also ended Stalin's national-purpose policy of socialism in one country and replaced it with proletarian internationalism, by way of which Khrushchev re-committed the Soviet Union to permanent revolution to realise world communism. In that geopolitical vein, Khrushchev presented de-Stalinisation as the restoration of Leninism as the state ideology of the Soviet Union.

In the 1950s, the de-Stalinisation of the Soviet Union was ideologically troubling for the People's Republic of China because Soviet and Russian interpretations and applications of Leninism and orthodox Marxism contradicted the Sinified Marxism–Leninism of Mao Zedong, his Chinese adaptations of Stalinist interpretation and praxis for establishing socialism in China. To realise that leap of Marxist faith in the development of Chinese socialism, the Chinese Communist Party developed Maoism as the official state ideology. As the specifically Chinese development of Marxism–Leninism, Maoism illuminated the cultural differences between the European-Russian and the Asian-Chinese interpretations and practical applications of Marxism–Leninism in each country. The political differences then provoked geopolitical, ideological and nationalist tensions, which derived from the different stages of development of the urban society of the industrialised Soviet Union and the agricultural society of pre-industrial China. The theory-versus-praxis arguments escalated into theoretical disputes about Marxist–Leninist revisionism and provoked the Sino-Soviet split (1956–1966), in which the two countries broke their international relations (diplomatic, political, cultural and economic). China's Great Leap Forward, an idealistic, massive reform project, resulted in an estimated 15 to 55 million deaths between 1959 and 1961, mostly from starvation.

In East Asia, the Cold War produced the Korean War (1950–1953), the first proxy war between the Eastern Bloc and the Western Bloc, which had dual origins, namely the nationalist Koreans' post-war resumption of their Korean Civil War and the imperial war for regional hegemony sponsored by the United States and the Soviet Union. The international response to the North Korean invasion of South Korea was realised by the United Nations Security Council, which voted for war in the absence of the boycotting Soviet Union and authorised an international military expedition to intervene, expel the northern invaders from the south of Korea and restore the geopolitical status quo ante of the Soviet and American division of Korea at the 38th parallel north. Following Chinese military intervention on behalf of North Korea, the infantry warfare reached an operational and geographic stalemate (July 1951 – July 1953). The shooting war was ended by the Korean Armistice Agreement (27 July 1953), and the superpower Cold War in Asia then continued along the Korean Demilitarised Zone.

Following the Sino-Soviet split, pragmatic China pursued détente with the United States in an effort to publicly challenge the Soviet Union for leadership of the international Marxist–Leninist movement. Mao Zedong's pragmatism permitted geopolitical rapprochement and eventually facilitated President Richard Nixon's 1972 visit to China. In the course of this rapprochement, the People's Republic of China replaced the Republic of China (Taiwan) in 1971 as the representative of the Chinese people at the United Nations, including the Chinese seat on the Security Council. In the post-Mao period of Sino-American détente, the Deng Xiaoping government (1978–1989) effected policies of economic liberalisation that allowed continual growth of the Chinese economy. The ideological justification is socialism with Chinese characteristics, the Chinese adaptation of Marxism–Leninism.

Third World conflicts (1954–1979)

Communist revolution erupted in the Americas in this period, including revolutions in Bolivia, Cuba, El Salvador, Grenada, Nicaragua, Peru and Uruguay. The Cuban Revolution (1953–1959), led by Fidel Castro and Che Guevara, deposed the military dictatorship (1952–1959) of Fulgencio Batista and established the Republic of Cuba, a state formally recognised by the Soviet Union. In response, the United States attempted to overthrow the Castro government in 1961, but the CIA's unsuccessful Bay of Pigs invasion (17 April 1961) by anti-communist Cuban exiles impelled the Republic of Cuba to side with the Soviet Union in the geopolitics of the bipolar Cold War. The Cuban Missile Crisis (22–28 October 1962) occurred when the United States opposed the Soviet Union arming Cuba with nuclear missiles. After a stalemate confrontation, the United States and the Soviet Union jointly resolved the crisis, the United States removing its missiles from Turkey and Italy and the Soviet Union removing its missiles from Cuba.

Bolivia, Canada and Uruguay all faced Marxist–Leninist revolution in the 1960s and 1970s. In Bolivia, this included Che Guevara as a leader until he was killed there by government forces. In 1970, the October Crisis (5 October – 28 December 1970), a brief revolution in the province of Quebec, occurred in Canada, where the actions of the Marxist–Leninist and separatist Quebec Liberation Front (FLQ) included the kidnapping of James Cross, the British Trade Commissioner in Canada, and the killing of Pierre Laporte, a Quebec government minister. The political manifesto of the FLQ condemned English-Canadian imperialism in French Quebec and called for an independent, socialist Quebec. The Canadian government's response included the suspension of civil liberties in Quebec and compelled the flight of FLQ leaders to Cuba. Uruguay faced Marxist–Leninist revolution from the Tupamaros movement from the 1960s to the 1970s.

In 1979, the Sandinista National Liberation Front (FSLN) led by Daniel Ortega won the Nicaraguan Revolution (1961–1990) against the government of Anastasio Somoza Debayle (1 December 1974 – 17 July 1979) and established a socialist Nicaragua. From 1981, the government of Ronald Reagan sponsored the counter-revolutionary Contras in the secret Contra War (1979–1990) against the Sandinista government. In 1989, the Contra War concluded with the signing of the Tela Accord at the port of Tela, Honduras. The Tela Accord required the subsequent, voluntary demobilisation of the Contra guerrilla armies and the FSLN army. In 1990, a second national election brought to power a majority of non-Sandinista political parties, to whom the FSLN handed political power. In 2006, the FSLN returned to government and has since won every legislative and presidential election (2006, 2011 and 2016).

The Salvadoran Civil War (1979–1992) featured the popularly supported Farabundo Martí National Liberation Front, an organisation of left-wing parties fighting against the right-wing military government of El Salvador. In Grenada, the New Jewel Movement (1973–1983), a Marxist–Leninist vanguard party led by Maurice Bishop, had governed since seizing power in 1979; after Bishop was overthrown and killed by a rival faction of the party in October 1983, the United States invasion of Grenada (25–29 October 1983) ended the movement's rule.

In Asia, the Vietnam War (1955–1975) was the second East–West war fought during the Cold War (1947–1991). In the First Indochina War (1946–1954), the communist Việt Minh led by Ho Chi Minh defeated the French colonial re-establishment and its native associated state in Vietnam. To fill the geopolitical power vacuum caused by the French defeat in Southeast Asia, Vietnam was divided into North Vietnam and South Vietnam in 1954: communists took power in the North and a pro-French government took power in the South. The United States then became the Western power supporting the Republic of Vietnam (1955–1975) in the South, headed by president Ngo Dinh Diem, an anti-communist politician, while China and the Soviet Union helped the North. Despite possessing military superiority, the United States failed to safeguard South Vietnam from the guerrilla warfare of the Viet Cong, sponsored by North Vietnam. On 30 January 1968, North Vietnam launched the Tet Offensive (the General Offensive and Uprising of Tet Mau Than, 1968). Although a military failure for the guerrillas and the army, it was a successful psychological warfare operation that decisively turned international public opinion against the United States intervention in the Vietnamese civil war, leading to the military withdrawal of the United States from Vietnam in 1973 and the consequent Fall of Saigon to the North Vietnamese army on 30 April 1975.

With the end of the Vietnam War, Vietnam was reunited under a Marxist–Leninist government in 1976. Marxist–Leninist regimes were also established in Vietnam's neighbouring states, Kampuchea and Laos. Following the Cambodian Civil War (1968–1975), a coalition composed of Prince Norodom Sihanouk (1941–1955), the native Cambodian Marxist–Leninists and the Maoist Khmer Rouge (1951–1999) led by Pol Pot established Democratic Kampuchea (1975–1982), a communist state led by Angkar that pursued class warfare to restructure the society of old Cambodia through the abolition of money and private property, the outlawing of religion, the killing of the intelligentsia and compulsory manual labour for the middle classes, enforced by death-squad state terrorism. To eliminate Western cultural influence, Kampuchea expelled all foreigners and destroyed the urban bourgeoisie of old Cambodia, first by displacing the population of the capital city, Phnom Penh, and then by displacing the national populace to work farmlands to increase food supplies. Meanwhile, the Khmer Rouge purged Kampuchea of internal enemies (social class and political, cultural and ethnic) at the Killing Fields, the scope of which constituted crimes against humanity for the deaths of 2,700,000 people by mass murder and genocide. That social restructuring of Cambodia into Kampuchea included attacks against the Vietnamese ethnic minority of the country, which aggravated the historical ethnic rivalries between the Viet and the Khmer peoples. Beginning in September 1977, Kampuchea and the Socialist Republic of Vietnam continually engaged in border clashes. In 1978, Vietnam invaded Kampuchea; it captured Phnom Penh in January 1979, deposed the Maoist Khmer Rouge from government with the proclamation of the People's Republic of Kampuchea and established the Cambodia Liberation Front for National Renewal as the government of Cambodia. The Kampuchean People's Revolutionary Party (KPRP) also came to power in January 1979.

A new front of Marxist–Leninist revolution erupted in Africa between 1961 and 1987. Angola, Benin, Congo, Ethiopia, Mozambique and Somalia became communist states governed by their respective native peoples during the 1968–1980 period. Marxist–Leninist guerrillas fought the Portuguese Colonial War (1961–1974) in three countries, namely Angola, Guinea-Bissau and Mozambique. In Ethiopia, a Marxist–Leninist revolution deposed the monarchy of Emperor Haile Selassie (1930–1974) and established the Derg government (1974–1987) of the Provisional Military Government of Socialist Ethiopia. In Rhodesia (1965–1979), Robert Mugabe led the Zimbabwe War of Liberation (1964–1979), which deposed white-minority rule and then established the Republic of Zimbabwe. In the Seychelles, France-Albert René ruled over a Marxist–Leninist one-party system from 1977 to 1991. In the Gambia, Kukoi Samba Sanyang attempted a Marxist–Leninist coup in 1981; it failed, and he turned to mercenary activity abroad. In 1983, in Upper Volta, Thomas Sankara established a military- and peasant-based version of auto-centred Marxism–Leninism. Sankara refused foreign aid and also refused to pay the country's foreign debts. He renamed Upper Volta 'Burkina Faso' (the land of upright people). His former friend and second-in-command, Blaise Compaoré, ordered Sankara's murder in 1987, ending the Burkinabé social experiment.

In Uganda in 1986, Yoweri Museveni's National Resistance Movement (NRM) established "the Movement system", a political system in which elections were held but no political parties were allowed to exist.

In apartheid South Africa (1948–1994), the Afrikaner government of the National Party caused much geopolitical tension between the United States and the Soviet Union because of its violent social control and political repression of the black and coloured populations of South Africa, exercised under the guise of anti-communism and national security. The Soviet Union officially supported the overthrow of apartheid, while the West, and the United States in particular, maintained official neutrality on the matter. In the 1976–1977 period of the Cold War, the United States and other Western countries found it morally untenable to support apartheid South Africa politically, especially after two events. The first was the police suppression of the Soweto uprising (June 1976), in which the Afrikaner government killed 176 people, both students and adults. The uprising was a political protest against Afrikaner cultural imperialism towards the non-white peoples of South Africa, specifically the imposition of the Germanic language Afrikaans as the standard language of education, which black South Africans were required to speak when addressing white people and Afrikaners. The second was the police assassination of Stephen Biko (September 1977), a politically moderate leader of the internal resistance to apartheid in South Africa.

Under President Jimmy Carter, the West joined the Soviet Union and others in imposing sanctions on the trade in weapons and weapons-grade material with South Africa. Under President Ronald Reagan, however, the United States took less forceful action against apartheid South Africa, as the Reagan administration feared a revolution in South Africa like the one that had ended white minority rule in Zimbabwe. In 1979, the Soviet Union intervened in Afghanistan to establish a communist state, which existed until 1992. The West regarded the intervention as an invasion and responded by boycotting the 1980 Moscow Olympics and by providing clandestine support to the Mujahideen, including Osama bin Laden, as a means of challenging the Soviet Union. The war became the Soviet equivalent of the United States' Vietnam War and remained a stalemate throughout the 1980s.

Reform and collapse of Marxism–Leninism in Eastern Eurasia and elsewhere (1979–1991)

Social resistance to the policies of Marxist–Leninist regimes in Eastern Europe grew stronger with the rise of Solidarity, the first trade union in the Warsaw Pact not controlled by a Marxist–Leninist party, which was formed in the People's Republic of Poland in 1980.

In 1985, Mikhail Gorbachev rose to power in the Soviet Union and began policies of radical political reform and liberalisation, known as perestroika and glasnost. Gorbachev's policies were aimed at dismantling the authoritarian elements of the state developed under Stalin, seeking a return to a supposedly ideal communist state that retained a one-party structure while allowing the democratic election of competing candidates within the party for political office. Gorbachev also sought détente with the West and an end to the Cold War, which the Soviet Union could no longer sustain economically. The Soviet Union and the United States under President George H. W. Bush jointly pushed for the dismantling of apartheid and oversaw the end of South African colonial rule over Namibia.

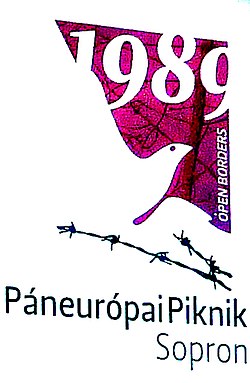

Meanwhile, the communist states of Central and Eastern Europe deteriorated politically in response to the success of the Polish Solidarity movement and the possibility of Gorbachev-style political liberalisation. In 1989, revolts against Marxist–Leninist regimes began across Central and Eastern Europe and China. In China, the government refused to negotiate with student protesters, resulting in the 1989 Tiananmen Square massacre, which stopped the revolts by force. The Pan-European Picnic, based on an idea by Otto von Habsburg to test the reaction of the Soviet Union, then triggered a peaceful chain reaction in August 1989, at the end of which East Germany no longer existed, and the Iron Curtain and the Marxist–Leninist Eastern Bloc had collapsed. As a result of the Pan-European Picnic, the Marxist–Leninist rulers of the Eastern Bloc failed to act decisively and cracks appeared between them, while the media-informed populations of Central and Eastern Europe now noticed a steady loss of power in their governments.

The revolts culminated in the revolt in East Germany against the Marxist–Leninist regime of Erich Honecker and demands for the Berlin Wall to be torn down. The event in East Germany developed into a popular mass revolt, with sections of the Berlin Wall being torn down and East and West Berliners uniting. Gorbachev's refusal to use Soviet forces based in East Germany to suppress the revolt was seen as a sign that the Cold War had ended. Honecker was pressured to resign from office, and the new government committed itself to reunification with West Germany. The Marxist–Leninist regime of Nicolae Ceaușescu in Romania was forcefully overthrown in 1989, and Ceaușescu was executed. Almost all other Eastern Bloc regimes also fell during the Revolutions of 1989 (1988–1993).

Unrest and the eventual collapse of Marxism–Leninism also occurred in Yugoslavia, although for reasons different from those in the Warsaw Pact states. The death of Josip Broz Tito in 1980 and the subsequent vacuum of strong leadership amid an economic crisis allowed rival ethnic nationalisms to rise in the multinational country. The first leader to exploit such nationalism for political purposes was Slobodan Milošević, who used it to seize power as president of Serbia and demanded concessions for Serbia and the Serbs from the other republics of the Yugoslav federation. This provoked a surge of Croatian and Slovene nationalism in response, followed by the collapse of the League of Communists of Yugoslavia in 1990, the victory of nationalists in multi-party elections in most of Yugoslavia's constituent republics and, eventually, civil war between the various nationalities beginning in 1991. Yugoslavia was dissolved in 1992.

The Soviet Union itself collapsed between 1990 and 1991, amid a rise of secessionist nationalism and a power struggle between Gorbachev and Boris Yeltsin, the new leader of the Russian Federation. With the Soviet Union collapsing, Gorbachev prepared the country to become a loose federation of independent states called the Commonwealth of Independent States. Hardline Marxist–Leninist military leaders reacted to Gorbachev's policies with the August Coup of 1991, in which they overthrew Gorbachev and seized control of the government. The coup regime lasted only briefly, as widespread popular opposition erupted in street protests that refused to submit. Gorbachev was restored to power, but the various Soviet republics were now set for independence. On 25 December 1991, Gorbachev officially announced the dissolution of the Soviet Union, ending the world's first Marxist–Leninist-led state.

Post-Cold War era (1991–present)

Since the fall of the Eastern European Marxist–Leninist regimes, the Soviet Union and a variety of African Marxist–Leninist regimes in 1991, only a few Marxist–Leninist parties have remained in power, namely those of China, Cuba, Laos, and Vietnam. Most Marxist–Leninist communist parties outside these nations have fared relatively poorly in elections, although some have remained or become relatively strong forces. In Russia, the Communist Party of the Russian Federation has remained a significant political force, winning the 1995 Russian legislative election, nearly winning the 1996 Russian presidential election amid allegations of United States electoral intervention, and generally remaining the second most popular party. In Ukraine, the Communist Party of Ukraine has also exerted influence, governing the country after the 1994 Ukrainian parliamentary election and again after the 2006 Ukrainian parliamentary election. In the 2014 Ukrainian parliamentary election, held after the outbreak of the Russo-Ukrainian War and the annexation of Crimea by the Russian Federation, the party lost all 32 of its seats and was left without parliamentary representation.

In Europe, several Marxist–Leninist parties remain strong. In Cyprus, Dimitris Christofias of AKEL won the 2008 Cypriot presidential election. AKEL has consistently been the first or third most popular party, winning the 1970, 1981, 2001, and 2006 legislative elections. In the Czech Republic and Portugal, the Communist Party of Bohemia and Moravia and the Portuguese Communist Party were the second and fourth most popular parties until the 2017 and 2009 legislative elections, respectively. From 2017 to 2021, the Communist Party of Bohemia and Moravia supported the ANO 2011–ČSSD minority government, while from 2015 to 2019 the Portuguese Communist Party, along with the Ecologist Party "The Greens" and the Left Bloc, provided confidence and supply to the Socialist minority government. In Greece, the Communist Party of Greece led an interim and later a national unity government between 1989 and 1990, and has consistently remained the third or fourth most popular party. In Moldova, the Party of Communists of the Republic of Moldova won the 2001, 2005, and April 2009 parliamentary elections. The results of the April 2009 Moldovan elections were protested, and the July 2009 Moldovan parliamentary election resulted in the formation of the Alliance for European Integration. After the parliament failed to elect a president, the 2010 Moldovan parliamentary election produced roughly the same representation in parliament. According to Ion Marandici, a Moldovan political scientist, the Party of Communists differs from those in other countries because it managed to appeal to the ethnic minorities and to anti-Romanian Moldovans.
Tracing the party's adaptation strategy, he found confirming evidence for five factors behind its electoral success that were already identified in the theoretical literature on former Marxist–Leninist parties: the economic situation, the weakness of the opponents, the electoral laws, the fragmentation of the political spectrum and the legacy of the old regime. Marandici also identified seven additional explanatory factors at work in the Moldovan case: foreign support for certain political parties, separatism, the appeal to the ethnic minorities, the capacity to build alliances, reliance on the Soviet notion of Moldovan identity, the state-building process and control over a significant portion of the media. It is due to these seven additional factors that the party managed to consolidate and expand its constituency. Among the post-Soviet states, the Party of Communists is the only communist party to have remained in power for so long without changing its name.