Seal of the National Security Agency

Flag of the National Security Agency

NSA Headquarters, Fort Meade, Maryland

| Agency overview | |
|---|---|
| Formed | November 4, 1952 |
| Preceding agency | Armed Forces Security Agency (AFSA) |
| Headquarters | Fort Meade, Maryland, U.S. (coordinates: 39°6′32″N 76°46′17″W) |
| Motto | "Defending Our Nation. Securing the Future." |
| Employees | Classified (est. 30,000–40,000) |
| Annual budget | Classified (estimated $10.8 billion, 2013) |
| Agency executives | |
| Parent agency | Department of Defense |
| Website | NSA.gov |

The National Security Agency (NSA) is a national-level intelligence agency of the United States Department of Defense, under the authority of the Director of National Intelligence. The NSA is responsible for global monitoring, collection, and processing of information and data for foreign and domestic intelligence and counterintelligence purposes, specializing in a discipline known as signals intelligence (SIGINT). The NSA is also tasked with the protection of U.S. communications networks and information systems. The NSA relies on a variety of measures to accomplish its mission, the majority of which are clandestine.

Originating as a unit to decipher coded communications in World War II, it was officially formed as the NSA by President Harry S. Truman in 1952. Since then, it has become the largest of the U.S. intelligence organizations in terms of personnel and budget. The NSA currently conducts worldwide mass data collection and has been known to physically bug electronic systems as one method to this end. The NSA is also alleged to have been behind such attack software as Stuxnet, which severely damaged Iran's nuclear program. The NSA, alongside the Central Intelligence Agency (CIA), maintains a physical presence in many countries across the globe; the CIA/NSA joint Special Collection Service (a highly classified intelligence team) inserts eavesdropping devices in high value targets (such as presidential palaces or embassies). SCS collection tactics allegedly encompass "close surveillance, burglary, wiretapping, [and] breaking and entering".

Unlike the CIA and the Defense Intelligence Agency (DIA), both of which specialize primarily in foreign human espionage, the NSA does not publicly conduct human-source intelligence gathering. The NSA is entrusted with providing assistance to, and the coordination of, SIGINT elements for other government organizations – which are prevented by law from engaging in such activities on their own. As part of these responsibilities, the agency has a co-located organization called the Central Security Service (CSS), which facilitates cooperation between the NSA and other U.S. defense cryptanalysis components. To further ensure streamlined communication between the signals intelligence community divisions, the NSA Director simultaneously serves as the Commander of the United States Cyber Command and as Chief of the Central Security Service.

The NSA's actions have been a matter of political controversy on several occasions, including its spying on anti–Vietnam War leaders and the agency's participation in economic espionage. In 2013, the NSA had many of its secret surveillance programs revealed to the public by Edward Snowden, a former NSA contractor. According to the leaked documents, the NSA intercepts and stores the communications of over a billion people worldwide, including United States citizens. The documents also revealed the NSA tracks hundreds of millions of people's movements using cellphones' metadata. Internationally, research has pointed to the NSA's ability to surveil the domestic Internet traffic of foreign countries through "boomerang routing".

History

Formation

The origins of the National Security Agency can be traced back to April 28, 1917, three weeks after the U.S. Congress declared war on Germany in World War I. A code and cipher decryption unit was established as the Cable and Telegraph Section, which was also known as the Cipher Bureau. It was headquartered in Washington, D.C., and was part of the war effort under the executive branch without direct Congressional authorization. During the course of the war, it was relocated in the army's organizational chart several times. On July 5, 1917, Herbert O. Yardley was assigned to head the unit. At that point, the unit consisted of Yardley and two civilian clerks. It absorbed the navy's cryptanalysis functions in July 1918. World War I ended on November 11, 1918, and the army cryptographic section of Military Intelligence (MI-8) moved to New York City on May 20, 1919, where it continued intelligence activities as the Code Compilation Company under the direction of Yardley.

The Black Chamber

After the disbandment of the U.S. Army cryptographic section of military intelligence, known as MI-8, in 1919, the U.S. government created the Cipher Bureau, also known as the Black Chamber. The Black Chamber was the United States' first peacetime cryptanalytic organization. Jointly funded by the Army and the State Department, the Cipher Bureau was disguised as a New York City commercial code company; it actually produced and sold such codes for business use. Its true mission, however, was to break the communications (chiefly diplomatic) of other nations. Its most notable known success was at the Washington Naval Conference, during which it aided American negotiators considerably by providing them with the decrypted traffic of many of the conference delegations, most notably the Japanese. The Black Chamber successfully persuaded Western Union, the largest U.S. telegram company at the time, as well as several other communications companies, to illegally give it access to the cable traffic of foreign embassies and consulates. Soon, these companies publicly discontinued their collaboration.

Despite the Chamber's initial successes, it was shut down in 1929 by U.S. Secretary of State Henry L. Stimson, who defended his decision by stating, "Gentlemen do not read each other's mail".

World War II and its aftermath

During World War II, the Signal Intelligence Service (SIS) was created to intercept and decipher the communications of the Axis powers. When the war ended, the SIS was reorganized as the Army Security Agency (ASA), and it was placed under the leadership of the Director of Military Intelligence.

On May 20, 1949, all cryptologic activities were centralized under a national organization called the Armed Forces Security Agency (AFSA). This organization was originally established within the U.S. Department of Defense under the command of the Joint Chiefs of Staff. The AFSA was tasked to direct Department of Defense communications and electronic intelligence activities, except those of U.S. military intelligence units. However, the AFSA was unable to centralize communications intelligence and failed to coordinate with civilian agencies that shared its interests such as the Department of State, Central Intelligence Agency (CIA) and the Federal Bureau of Investigation (FBI). In December 1951, President Harry S. Truman ordered a panel to investigate how AFSA had failed to achieve its goals. The results of the investigation led to improvements and its redesignation as the National Security Agency.

The National Security Council issued a memorandum on October 24, 1952, that revised National Security Council Intelligence Directive (NSCID) 9. On the same day, Truman issued a second memorandum that called for the establishment of the NSA. The actual establishment of the NSA was carried out through a November 4 memo from Robert A. Lovett, the Secretary of Defense, which changed the name of the AFSA to the NSA and made the new agency responsible for all communications intelligence. Since President Truman's memo was a classified document, the existence of the NSA was not known to the public at that time. Because of its extreme secrecy, the U.S. intelligence community referred to the NSA as "No Such Agency".

Vietnam War

In the 1960s, the NSA played a key role in expanding U.S. commitment to the Vietnam War by providing evidence of a North Vietnamese attack on the American destroyer USS Maddox during the Gulf of Tonkin incident.

A secret operation, code-named "MINARET", was set up by the NSA to monitor the phone communications of Senators Frank Church and Howard Baker, as well as key leaders of the civil rights movement, including Martin Luther King Jr., and prominent U.S. journalists and athletes who criticized the Vietnam War. However, the project turned out to be controversial, and an internal review by the NSA concluded that its Minaret program was "disreputable if not outright illegal".

The NSA mounted a major effort to secure tactical communications among U.S. forces during the war, with mixed success. The NESTOR family of compatible secure voice systems it developed was widely deployed during the Vietnam War, with about 30,000 NESTOR sets produced. However, a variety of technical and operational problems limited their use, allowing the North Vietnamese to exploit and intercept U.S. communications.

Church Committee hearings

In the aftermath of the Watergate scandal, a congressional hearing in 1975 led by Senator Frank Church revealed that the NSA, in collaboration with Britain's SIGINT agency, Government Communications Headquarters (GCHQ), had routinely intercepted the international communications of prominent anti–Vietnam War leaders such as Jane Fonda and Dr. Benjamin Spock. The Agency tracked these individuals in a secret filing system that was destroyed in 1974. Following the resignation of President Richard Nixon, there were several investigations of suspected misuse of FBI, CIA and NSA facilities. Senator Frank Church uncovered previously unknown activity, such as a CIA plot (ordered by the administration of President John F. Kennedy) to assassinate Fidel Castro. The investigation also uncovered the NSA's wiretaps on targeted U.S. citizens.

After the Church Committee hearings, the Foreign Intelligence Surveillance Act of 1978 was passed into law. This was designed to limit the practice of mass surveillance in the United States.

From the 1980s to the 1990s

In 1986, the NSA intercepted the communications of the Libyan government during the immediate aftermath of the Berlin discotheque bombing. The White House asserted that the NSA interception had provided "irrefutable" evidence that Libya was behind the bombing, which U.S. President Ronald Reagan cited as a justification for the 1986 United States bombing of Libya.

In 1999, a multi-year investigation by the European Parliament highlighted the NSA's role in economic espionage in a report entitled 'Development of Surveillance Technology and Risk of Abuse of Economic Information'. That year, the NSA founded the NSA Hall of Honor, a memorial at the National Cryptologic Museum in Fort Meade, Maryland. The memorial is a "tribute to the pioneers and heroes who have made significant and long-lasting contributions to American cryptology". NSA employees must be retired for more than fifteen years to qualify for the memorial.

NSA's infrastructure deteriorated in the 1990s as defense budget cuts resulted in maintenance deferrals. On January 24, 2000, NSA headquarters suffered a total network outage for three days caused by an overloaded network. Incoming traffic was successfully stored on agency servers, but it could not be directed and processed. The agency carried out emergency repairs at a cost of $3 million to get the system running again. (Some incoming traffic was also directed instead to Britain's GCHQ for the time being.) Director Michael Hayden called the outage a "wake-up call" for the need to invest in the agency's infrastructure.

In the 1990s the defensive arm of the NSA—the Information Assurance Directorate (IAD)—started working more openly; the first public technical talk by an NSA scientist at a major cryptography conference was J. Solinas' presentation on efficient Elliptic Curve Cryptography algorithms at Crypto 1997. The IAD's cooperative approach to academia and industry culminated in its support for a transparent process for replacing the outdated Data Encryption Standard (DES) by an Advanced Encryption Standard (AES). Cybersecurity policy expert Susan Landau attributes the NSA's harmonious collaboration with industry and academia in the selection of the AES in 2000—and the Agency's support for the choice of a strong encryption algorithm designed by Europeans rather than by Americans—to Brian Snow, who was the Technical Director of IAD and represented the NSA as cochairman of the Technical Working Group for the AES competition, and Michael Jacobs, who headed IAD at the time.

After the terrorist attacks of September 11, 2001, the NSA believed that it had public support for a dramatic expansion of its surveillance activities. According to Neal Koblitz and Alfred Menezes, the period when the NSA was a trusted partner with academia and industry in the development of cryptographic standards started to come to an end when, as part of the change in the NSA in the post-September 11 era, Snow was replaced as Technical Director, Jacobs retired, and IAD could no longer effectively oppose proposed actions by the offensive arm of the NSA.

War on Terror

In the aftermath of the September 11 attacks, the NSA created new IT systems to deal with the flood of information from new technologies like the Internet and cellphones. ThinThread contained advanced data mining capabilities. It also had a "privacy mechanism"; surveillance was stored encrypted; decryption required a warrant. The research done under this program may have contributed to the technology used in later systems. ThinThread was cancelled when Michael Hayden chose Trailblazer, which did not include ThinThread's privacy system.

Trailblazer Project ramped up in 2002 and was worked on by Science Applications International Corporation (SAIC), Boeing, Computer Sciences Corporation, IBM, and Litton Industries. Some NSA whistleblowers complained internally about major problems surrounding Trailblazer. This led to investigations by Congress and the NSA and DoD Inspectors General. The project was cancelled in early 2004.

Turbulence started in 2005. It was developed in small, inexpensive "test" pieces, rather than as one grand plan like Trailblazer. It also included offensive cyber-warfare capabilities, such as injecting malware into remote computers. Congress criticized Turbulence in 2007 for having bureaucratic problems similar to those of Trailblazer. It was to be a realization of information processing at higher speeds in cyberspace.

Global surveillance disclosures

The massive extent of the NSA's spying, both foreign and domestic, was revealed to the public in a series of detailed disclosures of internal NSA documents beginning in June 2013. Most of the disclosures were leaked by former NSA contractor Edward Snowden. On 4 September 2020, the NSA’s surveillance program was ruled unlawful by the US Court of Appeals. The court also added that the US intelligence leaders, who publicly defended it, were not telling the truth.

Mission

NSA's eavesdropping mission covers radio broadcasts from various organizations and individuals, the Internet, telephone calls, and other intercepted forms of communication. Its secure communications mission includes military, diplomatic, and all other sensitive, confidential or secret government communications.

According to a 2010 article in The Washington Post, "[e]very day, collection systems at the National Security Agency intercept and store 1.7 billion e-mails, phone calls and other types of communications. The NSA sorts a fraction of those into 70 separate databases."

Because of its listening task, NSA/CSS has been heavily involved in cryptanalytic research, continuing the work of predecessor agencies which had broken many World War II codes and ciphers.

In 2004, the NSA Central Security Service and the National Cyber Security Division of the Department of Homeland Security (DHS) agreed to expand the NSA Centers of Academic Excellence in Information Assurance Education program.

As part of the National Security Presidential Directive 54/Homeland Security Presidential Directive 23 (NSPD 54), signed on January 8, 2008, by President Bush, the NSA became the lead agency to monitor and protect all of the federal government's computer networks from cyber-terrorism.

Operations

Operations by the National Security Agency can be divided into three types:

- Collection overseas, which falls under the responsibility of the Global Access Operations (GAO) division.

- Domestic collection, which falls under the responsibility of the Special Source Operations (SSO) division.

- Hacking operations, which fall under the responsibility of the Tailored Access Operations (TAO) division.

Collection overseas

Echelon

"Echelon" was created in the incubator of the Cold War. Today it is a legacy system, and several NSA stations are closing.

NSA/CSS, in combination with the equivalent agencies in the United Kingdom (Government Communications Headquarters), Canada (Communications Security Establishment), Australia (Australian Signals Directorate), and New Zealand (Government Communications Security Bureau), otherwise known as the UKUSA group, was reported to be in command of the operation of the so-called ECHELON system. Its capabilities were suspected to include the ability to monitor a large proportion of the world's transmitted civilian telephone, fax and data traffic.

During the early 1970s, the first of what became more than eight large satellite communications dishes was installed at Menwith Hill. Investigative journalist Duncan Campbell reported in 1988 on the "ECHELON" surveillance program, an extension of the UKUSA Agreement on global signals intelligence (SIGINT), and detailed how the eavesdropping operations worked. On November 3, 1999, the BBC reported that it had confirmation from the Australian Government of the existence of a powerful "global spying network" code-named Echelon that could "eavesdrop on every single phone call, fax or e-mail, anywhere on the planet", with Britain and the United States as the chief protagonists. The Australian Government confirmed that Menwith Hill was "linked directly to the headquarters of the US National Security Agency (NSA) at Fort Meade in Maryland".

NSA's United States Signals Intelligence Directive 18 (USSID 18) strictly prohibited the interception or collection of information about "... U.S. persons, entities, corporations or organizations...." without explicit written legal permission from the United States Attorney General when the subject is located abroad, or the Foreign Intelligence Surveillance Court when within U.S. borders. Alleged Echelon-related activities, including its use for motives other than national security, including political and industrial espionage, received criticism from countries outside the UKUSA alliance.

Other SIGINT operations overseas

The NSA was also involved in planning to blackmail people with "SEXINT", intelligence gained about a potential target's sexual activity and preferences. Those targeted had not committed any apparent crime nor were they charged with one.

In order to support its facial recognition program, the NSA is intercepting "millions of images per day".

The Real Time Regional Gateway is a data collection program introduced in 2005 in Iraq by NSA during the Iraq War that consisted of gathering all electronic communication, storing it, then searching and otherwise analyzing it. It was effective in providing information about Iraqi insurgents who had eluded less comprehensive techniques. This "collect it all" strategy introduced by NSA director, Keith B. Alexander, is believed by Glenn Greenwald of The Guardian to be the model for the comprehensive worldwide mass archiving of communications which NSA is engaged in as of 2013.

A dedicated unit of the NSA locates targets for the CIA for extrajudicial assassination in the Middle East. The NSA has also spied extensively on the European Union, the United Nations and numerous governments including allies and trading partners in Europe, South America and Asia.

In June 2015, WikiLeaks published documents showing that the NSA spied on French companies.

In July 2015, WikiLeaks published documents showing that the NSA had spied on federal German ministries since the 1990s. Even the cellphones of German Chancellor Angela Merkel and the phones of her predecessors had been intercepted.

Boundless Informant

Edward Snowden revealed in June 2013 that between February 8 and March 8, 2013, the NSA collected about 124.8 billion telephone data items and 97.1 billion computer data items throughout the world, as was displayed in charts from an internal NSA tool codenamed Boundless Informant. Initially, it was reported that some of these data reflected eavesdropping on citizens in countries like Germany, Spain and France, but later on, it became clear that those data were collected by European agencies during military missions abroad and were subsequently shared with NSA.

Bypassing encryption

In 2013, reporters uncovered a secret memo claiming that the NSA created the Dual EC DRBG encryption standard, which contained built-in vulnerabilities, and in 2006 pushed for its adoption by the United States National Institute of Standards and Technology (NIST) and the International Organization for Standardization (ISO). This memo appears to give credence to previous speculation by cryptographers at Microsoft Research. Edward Snowden claims that the NSA often bypasses encryption altogether by lifting information before it is encrypted or after it is decrypted.

XKeyscore rules (as specified in a file xkeyscorerules100.txt, sourced by German TV stations NDR and WDR, who claim to have excerpts from its source code) reveal that the NSA tracks users of privacy-enhancing software tools, including Tor; an anonymous email service provided by the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) in Cambridge, Massachusetts; and readers of the Linux Journal.

Software backdoors

Linus Torvalds, the creator of the Linux kernel, joked during a LinuxCon keynote on September 18, 2013, that the NSA, which created SELinux, wanted a backdoor in the kernel. Later, however, Linus's father, Nils Torvalds, a Member of the European Parliament (MEP), revealed that the NSA had actually done so.

When my oldest son was asked the same question: "Has he been approached by the NSA about backdoors?" he said "No", but at the same time he nodded. Then he was sort of in the legal free. He had given the right answer, everybody understood that the NSA had approached him.

— Nils Torvalds, LIBE Committee Inquiry on Electronic Mass Surveillance of EU Citizens – 11th Hearing, 11 November 2013

IBM Notes was the first widely adopted software product to use public key cryptography for client–server and server–server authentication and for encryption of data. Until US laws regulating encryption were changed in 2000, IBM and Lotus were prohibited from exporting versions of Notes that supported symmetric encryption keys that were longer than 40 bits. In 1997, Lotus negotiated an agreement with the NSA that allowed export of a version that supported stronger keys with 64 bits, but 24 of the bits were encrypted with a special key and included in the message to provide a "workload reduction factor" for the NSA. This strengthened the protection for users of Notes outside the US against private-sector industrial espionage, but not against spying by the US government.
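The arithmetic behind the "workload reduction factor" can be sketched as follows. This is only an illustration, not the Notes implementation: the choice of the high-order 24 bits as the escrowed portion and the names `split_key` and `search_space` are assumptions made for the example, and the encryption of the escrowed bits under the special key is left out.

```python
import secrets

KEY_BITS = 64        # export key length described in the text
ESCROWED_BITS = 24   # bits made available to the NSA with the message
REMAINING_BITS = KEY_BITS - ESCROWED_BITS  # 40 bits still unknown


def split_key(key: int) -> tuple[int, int]:
    """Split a 64-bit key into (escrowed bits, remaining bits).

    Assumption: the escrowed portion is the high-order 24 bits.
    """
    escrowed = key >> REMAINING_BITS
    remaining = key & ((1 << REMAINING_BITS) - 1)
    return escrowed, remaining


def search_space(unknown_bits: int) -> int:
    """Number of candidate keys when `unknown_bits` bits are unknown."""
    return 1 << unknown_bits


key = secrets.randbits(KEY_BITS)
escrowed, remaining = split_key(key)

# An outside attacker has to search all 64 bits; a party that already
# holds the 24 escrowed bits only has to search the remaining 40.
outsider_work = search_space(KEY_BITS)
escrow_holder_work = search_space(REMAINING_BITS)
reduction = outsider_work // escrow_holder_work  # equals 2**24
```

Under these assumptions the party holding the escrowed bits faces the same 40-bit search that applied to the older export versions, while everyone else faces the full 64 bits.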

Boomerang routing

While it is assumed that foreign transmissions terminating in the U.S. (such as a non-U.S. citizen accessing a U.S. website) subject non-U.S. citizens to NSA surveillance, recent research into boomerang routing has raised new concerns about the NSA's ability to surveil the domestic Internet traffic of foreign countries. Boomerang routing occurs when an Internet transmission that originates and terminates in a single country transits another. Research at the University of Toronto has suggested that approximately 25% of Canadian domestic traffic may be subject to NSA surveillance activities as a result of the boomerang routing of Canadian Internet service providers.
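The definition of boomerang routing given above can be expressed as a simple check on the countries a route passes through. The sketch below assumes each route is already available as an ordered list of country codes, one per hop; how those codes are obtained (for example, from traceroute data and IP geolocation) is outside its scope, and the function names and sample routes are hypothetical.

```python
def is_boomerang(hop_countries: list[str]) -> bool:
    """Return True if a route starts and ends in the same country
    but passes through at least one other country in between."""
    if len(hop_countries) < 3:
        return False  # no intermediate hop that could be abroad
    origin, destination = hop_countries[0], hop_countries[-1]
    if origin != destination:
        return False  # not domestic traffic
    return any(country != origin for country in hop_countries[1:-1])


def boomerang_share(routes: list[list[str]]) -> float:
    """Fraction of the given routes that are boomerang routes."""
    if not routes:
        return 0.0
    return sum(is_boomerang(r) for r in routes) / len(routes)


# Hypothetical sample routes, for illustration only.
sample_routes = [
    ["CA", "CA", "CA"],        # stays inside Canada
    ["CA", "US", "US", "CA"],  # leaves Canada and returns: boomerang
    ["CA", "US", "US"],        # ends abroad, so not domestic traffic
]
share = boomerang_share(sample_routes)
```

A measurement study would apply a check of this kind to a large set of observed routes; the sample list here is far too small to say anything about real traffic.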

Hardware implanting

A document included in NSA files released with Glenn Greenwald's book No Place to Hide details how the agency's Tailored Access Operations (TAO) and other NSA units gain access to hardware. They intercept routers, servers and other network hardware being shipped to organizations targeted for surveillance and install covert implant firmware onto them before they are delivered. This was described by an NSA manager as "some of the most productive operations in TAO because they preposition access points into hard target networks around the world."

Computers seized by the NSA due to interdiction are often modified with a physical device known as Cottonmouth. Cottonmouth is a device that can be inserted in the USB port of a computer in order to establish remote access to the targeted machine. According to NSA's Tailored Access Operations (TAO) group implant catalog, after implanting Cottonmouth, the NSA can establish a network bridge "that allows the NSA to load exploit software onto modified computers as well as allowing the NSA to relay commands and data between hardware and software implants."

Domestic collection

NSA's mission, as set forth in Executive Order 12333 in 1981, is to collect information that constitutes "foreign intelligence or counterintelligence" while not "acquiring information concerning the domestic activities of United States persons". NSA has declared that it relies on the FBI to collect information on foreign intelligence activities within the borders of the United States, while confining its own activities within the United States to the embassies and missions of foreign nations.

The appearance of a 'Domestic Surveillance Directorate' of the NSA was soon exposed as a hoax in 2013.

NSA's domestic surveillance activities are limited by the requirements imposed by the Fourth Amendment to the U.S. Constitution. The Foreign Intelligence Surveillance Court, for example, held in October 2011, citing multiple Supreme Court precedents, that the Fourth Amendment prohibitions against unreasonable searches and seizures apply to the contents of all communications, whatever the means, because "a person's private communications are akin to personal papers." However, these protections do not apply to non-U.S. persons located outside of U.S. borders, so the NSA's foreign surveillance efforts are subject to far fewer limitations under U.S. law. The specific requirements for domestic surveillance operations are contained in the Foreign Intelligence Surveillance Act of 1978 (FISA), which does not extend protection to non-U.S. citizens located outside of U.S. territory.

President's Surveillance Program

George W. Bush, president during the 9/11 terrorist attacks, approved the Patriot Act shortly after the attacks to take anti-terrorist security measures. Titles 1, 2, and 9 specifically authorized measures that would be taken by the NSA. These titles granted enhanced domestic security against terrorism, surveillance procedures, and improved intelligence, respectively. On March 10, 2004, there was a debate between President Bush and White House Counsel Alberto Gonzales, Attorney General John Ashcroft, and Acting Attorney General James Comey. The Attorneys General were unsure whether the NSA's programs could be considered constitutional. They threatened to resign over the matter, but ultimately the NSA's programs continued. On March 11, 2004, President Bush signed a new authorization for mass surveillance of Internet records, in addition to the surveillance of phone records. This allowed the president to override laws such as the Foreign Intelligence Surveillance Act, which protected civilians from mass surveillance. President Bush's authorization also made the mass surveillance measures retroactive.

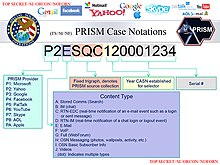

The PRISM program

Under the PRISM program, which started in 2007, NSA gathers Internet communications from foreign targets from nine major U.S. Internet-based communication service providers: Microsoft, Yahoo, Google, Facebook, PalTalk, AOL, Skype, YouTube and Apple. Data gathered include email, videos, photos, VoIP chats such as Skype, and file transfers.

Former NSA director General Keith Alexander claimed that in September 2009 the NSA prevented Najibullah Zazi and his friends from carrying out a terrorist attack. However, this claim has been debunked and no evidence has been presented demonstrating that the NSA has ever been instrumental in preventing a terrorist attack.

Hacking operations

Besides the more traditional ways of eavesdropping in order to collect signals intelligence, the NSA is also engaged in hacking computers, smartphones and their networks. These operations are conducted by the Tailored Access Operations (TAO) division, which has been active since at least 1998.

According to Foreign Policy magazine, "... the Office of Tailored Access Operations, or TAO, has successfully penetrated Chinese computer and telecommunications systems for almost 15 years, generating some of the best and most reliable intelligence information about what is going on inside the People's Republic of China."

In an interview with Wired magazine, Edward Snowden said the Tailored Access Operations division accidentally caused Syria's internet blackout in 2012.

Organizational structure

The NSA is led by the Director of the National Security Agency (DIRNSA), who also serves as Chief of the Central Security Service (CHCSS) and Commander of the United States Cyber Command (USCYBERCOM) and is the highest-ranking military official of these organizations. He is assisted by a Deputy Director, who is the highest-ranking civilian within the NSA/CSS.

NSA also has an Inspector General, head of the Office of the Inspector General (OIG), a General Counsel, head of the Office of the General Counsel (OGC) and a Director of Compliance, who is head of the Office of the Director of Compliance (ODOC).

Unlike other intelligence organizations such as CIA or DIA, NSA has always been particularly reticent concerning its internal organizational structure.

As of the mid-1990s, the National Security Agency was organized into five Directorates:

- The Operations Directorate, which was responsible for SIGINT collection and processing.

- The Technology and Systems Directorate, which developed new technologies for SIGINT collection and processing.

- The Information Systems Security Directorate, which was responsible for NSA's communications and information security missions.

- The Plans, Policy and Programs Directorate, which provided staff support and general direction for the Agency.

- The Support Services Directorate, which provided logistical and administrative support activities.

Each of these directorates consisted of several groups or elements, designated by a letter. For example, A Group was responsible for all SIGINT operations against the Soviet Union and Eastern Europe, and G Group was responsible for SIGINT related to all non-communist countries. These groups were divided into units designated by an additional number, such as unit A5 for breaking Soviet codes and G6, the office for the Middle East, North Africa, Cuba, and Central and South America.

Directorates

As of 2013, NSA has about a dozen directorates, which are designated by a letter, although not all of them are publicly known. The directorates are divided into divisions and units whose designators start with the letter of the parent directorate, followed by a number for the division, the sub-unit or a sub-sub-unit.
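The designator scheme described above can be read as a prefix hierarchy: a unit's parent is obtained by dropping its last character. The sketch below illustrates that reading using designators such as LL234M and F71; it assumes purely prefix-based nesting, which is a simplification, and the function names are hypothetical. Not every prefix it produces necessarily corresponds to a publicly known unit.

```python
def ancestors(designator: str) -> list[str]:
    """Return every proper prefix of a designator, shortest first.

    Under the prefix assumption, these are the units above it.
    """
    return [designator[:i] for i in range(1, len(designator))]


def is_under(unit: str, parent: str) -> bool:
    """True if `unit` sits somewhere below `parent` in the hierarchy."""
    return unit != parent and unit.startswith(parent)


chain = ancestors("LL234M")
# chain is ["L", "LL", "LL2", "LL23", "LL234"]
```

For example, `is_under("F71", "F7")` holds, while `is_under("F71", "F4")` does not.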

The main elements of the organizational structure of the NSA are:

- DP – Associate Directorate for European Affairs

- DP1 –

- DP11 – Office of European Affairs

- DP12 – Office of Tunisian Affairs

- DP15 – Office of British Affairs

- E – Directorate for Education and Training

- E9 –

- E92 – National Cryptologic School

- F –

- F1 -

- F1A2 - Office of the NSA Representative of US Diplomatic Missions

- F4 –

- F406 – Office of Foreign Affairs, Pacific Field

- F6 – Special Collection Service (SCS), a joint program created by CIA and NSA in 1978 to facilitate clandestine activities such as bugging computers throughout the world, using the expertise of both agencies.

- F7 -

- F71 – Operations Center Georgia

- F74 – Operations Center in Fort Meade

- F77 – NSA Station at RAF Menwith Hill

- G – Directorate known only from unit G112, the office that manages the Senior Span platform attached to the U-2 spy planes, and from GS2E4, which manages Iranian digital networks

- H – Only known for joint activities, this directorate specializes in collaboration with other nations

- H52G – Joint Signal Activity

- I – Information Assurance Directorate (IAD), which ensures availability, integrity, authentication, confidentiality, and non-repudiation of national security and telecommunications and information systems (national security systems).

- J – Directorate only known from unit J2, the Cryptologic Intelligence Unit

- L – Installation and Logistics

- LL – Services

- LL1 – Material Management

- LL2 – Transportation, Asset, and Disposition Services

- LL23 –

- LL234 –

- LL234M – Property Support

- M – Associate Directorate for Human Resources (ADHRS)

- Q – Security and Counterintelligence

- R – Research Directorate, which conducts research on signals intelligence and on information assurance for the U.S. Government.

- S – Signals Intelligence Directorate (SID), which is responsible for the collection, analysis, production and dissemination of signals intelligence. This directorate is led by a director and a deputy director. The SID consists of the following divisions:

- S0 – SID Workforce Performance

- S1 – Customer Relations

- S11 – Customer Gateway

- S112 – NGA Accounting Management

- S12 – known only from S12C, the Consumer Services unit

- S17 – Strategic Intelligence Disputes and Issues

- S2 – Analysis and Production Centers, with the following so-called Product Lines:

- S211 –

- S211A – Advanced Analysis Lab

- S2A: South Asia

- S2B: China and Korea

- S2C: International Security

- S2D – Foreign Counterintelligence

- S2D3 –

- S2D31 – Operations Support

- S2E: Middle East/Asia

- S2E33: Operational Technologies in Middle East and Asia

- S2F: International Crime

- S2F2:

- S2F21: Transnational Crime

- S2G: Counter-proliferation

- S2H: Russia

- S2I – Counter-terrorism

- S2I02 – Management Services

- S2J: Weapons and Space

- S2T: Current Threats

- S3 – Data Acquisition, with these divisions for the main collection programs:

- S31 – Cryptanalysis and Exploitation Services (CES)

- S311 –

- S3115 –

- S31153 – Target Analysis Branch of Network Information Exploitation

- S32 – Tailored Access Operations (TAO), which hacks into foreign computers to conduct cyber-espionage and reportedly is "the largest and arguably the most important component of the NSA's huge Signal Intelligence (SIGINT) Directorate, consisting of over 1,000 military and civilian computer hackers, intelligence analysts, targeting specialists, computer hardware and software designers, and electrical engineers."

- S33 – Global Access Operations (GAO), which is responsible for intercepts from satellites and other international SIGINT platforms. A tool which details and maps the information collected by this unit is code-named Boundless Informant.

- S34 – Collections Strategies and Requirements Center

- S35 – Special Source Operations (SSO), which is responsible for domestic and compartmented collection programs, such as the PRISM program. Special Source Operations is also mentioned in connection with the FAIRVIEW collection program.

- T – Technical Directorate (TD)

- T1 Mission Capabilities

- T2 Business Capabilities

- T3 Enterprise IT Services

- V – Threat Assessment Directorate, also known as the National Threat Operations Center (NTOC)

- Directorate for Corporate Leadership

- Foreign Affairs Directorate, which acts as liaison with foreign intelligence services, counter-intelligence centers and the UKUSA-partners.

- Acquisitions and Procurement Directorate

- Information Sharing Services (ISS), led by a chief and a deputy chief.

In the year 2000, a leadership team was formed, consisting of the Director, the Deputy Director and the Directors of the Signals Intelligence (SID), the Information Assurance (IAD) and the Technical Directorate (TD). The chiefs of other main NSA divisions became associate directors of the senior leadership team.

After president George W. Bush initiated the President's Surveillance Program (PSP) in 2001, the NSA created a 24-hour Metadata Analysis Center (MAC), followed in 2004 by the Advanced Analysis Division (AAD), with the mission of analyzing content, Internet metadata and telephone metadata. Both units were part of the Signals Intelligence Directorate.

A 2016 proposal would combine the Signals Intelligence Directorate with the Information Assurance Directorate into a Directorate of Operations.

NSANet

NSANet stands for National Security Agency Network and is the official NSA intranet. It is a classified network for information up to the level of TS/SCI, supporting the use and sharing of intelligence data between NSA and the signals intelligence agencies of the four other nations of the Five Eyes partnership. The management of NSANet has been delegated to the Central Security Service Texas (CSSTEXAS).

NSANet is a highly secured computer network consisting of fiber-optic and satellite communication channels which are almost completely separated from the public Internet. The network allows NSA personnel and civilian and military intelligence analysts anywhere in the world to have access to the agency's systems and databases. This access is tightly controlled and monitored. For example, every keystroke is logged, activities are audited at random and downloading and printing of documents from NSANet are recorded.

In 1998, NSANet, along with NIPRNET and SIPRNET, had "significant problems with poor search capabilities, unorganized data and old information". In 2004, the network was reported to have used over twenty commercial off-the-shelf operating systems. Some universities that do highly sensitive research are allowed to connect to it.

The thousands of Top Secret internal NSA documents that were taken by Edward Snowden in 2013 were stored in "a file-sharing location on the NSA's intranet site"; so, they could easily be read online by NSA personnel. Everyone with a TS/SCI-clearance had access to these documents. As a system administrator, Snowden was responsible for moving accidentally misplaced highly sensitive documents to safer storage locations.

Watch centers

The NSA maintains at least two watch centers:

- National Security Operations Center (NSOC), which is the NSA's current operations center and focal point for time-sensitive SIGINT reporting for the United States SIGINT System (USSS). This center was established in 1968 as the National SIGINT Watch Center (NSWC) and renamed the National SIGINT Operations Center (NSOC) in 1973. This "nerve center of the NSA" got its current name in 1996.

- NSA/CSS Threat Operations Center (NTOC), which is the primary NSA/CSS partner for Department of Homeland Security response to cyber incidents. The NTOC establishes real-time network awareness and threat characterization capabilities to forecast, alert, and attribute malicious activity and enable the coordination of Computer Network Operations. The NTOC was established in 2004 as a joint Information Assurance and Signals Intelligence project.

Employees

The number of NSA employees is officially classified but there are several sources providing estimates. In 1961, NSA had 59,000 military and civilian employees, which grew to 93,067 in 1969, of which 19,300 worked at the headquarters at Fort Meade. In the early 1980s NSA had roughly 50,000 military and civilian personnel. By 1989 this number had grown again to 75,000, of which 25,000 worked at the NSA headquarters. Between 1990 and 1995 the NSA's budget and workforce were cut by one third, which led to a substantial loss of experience.

In 2012, the NSA said more than 30,000 employees worked at Fort Meade and other facilities. In 2012, John C. Inglis, the deputy director, said that the total number of NSA employees is "somewhere between 37,000 and one billion" as a joke, and stated that the agency is "probably the biggest employer of introverts." In 2013 Der Spiegel stated that the NSA had 40,000 employees. More widely, it has been described as the world's largest single employer of mathematicians. Some NSA employees form part of the workforce of the National Reconnaissance Office (NRO), the agency that provides the NSA with satellite signals intelligence.

As of 2013 about 1,000 system administrators work for the NSA.

Personnel security

The NSA received criticism early on, in 1960, after two agents defected to the Soviet Union. Investigations by the House Un-American Activities Committee and a special subcommittee of the United States House Committee on Armed Services revealed severe cases of disregard for personnel security regulations, prompting the former personnel director and the director of security to step down and leading to the adoption of stricter security practices. Nonetheless, security breaches recurred only a year later when, in the July 23, 1963 issue of Izvestia, a former NSA employee published several cryptologic secrets.

The very same day, an NSA clerk-messenger committed suicide as ongoing investigations disclosed that he had sold secret information to the Soviets on a regular basis. The reluctance of Congressional houses to look into these affairs had prompted a journalist to write, "If a similar series of tragic blunders occurred in any ordinary agency of Government an aroused public would insist that those responsible be officially censured, demoted, or fired." David Kahn criticized the NSA's tactics of concealing its doings as smug and the Congress' blind faith in the agency's right-doing as shortsighted, and pointed out the necessity of surveillance by the Congress to prevent abuse of power.

Edward Snowden's leaking of the existence of PRISM in 2013 caused the NSA to institute a "two-man rule", where two system administrators are required to be present when one accesses certain sensitive information. Snowden claims he suggested such a rule in 2009.

Polygraphing

The NSA conducts polygraph tests of employees. For new employees, the tests are meant to discover enemy spies who are applying to the NSA and to uncover any information that could make an applicant pliant to coercion. As part of the latter, historically EPQs or "embarrassing personal questions" about sexual behavior had been included in the NSA polygraph. The NSA also conducts five-year periodic reinvestigation polygraphs of employees, focusing on counterintelligence programs. In addition the NSA conducts periodic polygraph investigations in order to find spies and leakers; those who refuse to take them may receive "termination of employment", according to a 1982 memorandum from the director of NSA.

There are also "special access examination" polygraphs for employees who wish to work in highly sensitive areas, and those polygraphs cover counterintelligence questions and some questions about behavior. NSA's brochure states that the average test length is between two and four hours. A 1983 report of the Office of Technology Assessment stated that "It appears that the NSA [National Security Agency] (and possibly CIA) use the polygraph not to determine deception or truthfulness per se, but as a technique of interrogation to encourage admissions." Sometimes applicants in the polygraph process confess to committing felonies such as murder, rape, and selling of illegal drugs. Between 1974 and 1979, of the 20,511 job applicants who took polygraph tests, 695 (3.4%) confessed to previous felony crimes; almost all of those crimes had been undetected.

In 2010 the NSA produced a video explaining its polygraph process. The video, ten minutes long, is titled "The Truth About the Polygraph" and was posted to the Web site of the Defense Security Service. Jeff Stein of The Washington Post said that the video portrays "various applicants, or actors playing them—it's not clear—describing everything bad they had heard about the test, the implication being that none of it is true." AntiPolygraph.org argues that the NSA-produced video omits some information about the polygraph process; it produced a video responding to the NSA video. George Maschke, the founder of the Web site, accused the NSA polygraph video of being "Orwellian".

After Edward Snowden revealed his identity in 2013, the NSA began requiring polygraphing of employees once per quarter.

Arbitrary firing

The number of exemptions from legal requirements has been criticized. When in 1964 the Congress was hearing a bill giving the director of the NSA the power to fire at will any employee, The Washington Post wrote: "This is the very definition of arbitrariness. It means that an employee could be discharged and disgraced on the basis of anonymous allegations without the slightest opportunity to defend himself." Yet, the bill was accepted by an overwhelming majority. Also, every person hired to a job in the US after 2007, at any private organization, state or federal government agency, must be reported to the New Hire Registry, ostensibly to look for child support evaders, except that employees of an intelligence agency may be excluded from reporting if the director deems it necessary for national security reasons.

Facilities

Headquarters

History of headquarters

When the agency was first established, its headquarters and cryptographic center were in the Naval Security Station in Washington, D.C. The COMINT functions were located in Arlington Hall in Northern Virginia, which served as the headquarters of the U.S. Army's cryptographic operations. Because the Soviet Union had detonated a nuclear bomb and because the facilities were crowded, the federal government wanted to move several agencies, including the AFSA/NSA. A planning committee considered Fort Knox, but Fort Meade, Maryland, was ultimately chosen as NSA headquarters because it was far enough away from Washington, D.C. in case of a nuclear strike and was close enough so its employees would not have to move their families.

Construction of additional buildings began after the agency occupied buildings at Fort Meade in the late 1950s, which they soon outgrew. In 1963 the new headquarters building, nine stories tall, opened. NSA workers referred to the building as the "Headquarters Building" and since the NSA management occupied the top floor, workers used "Ninth Floor" to refer to their leaders. COMSEC remained in Washington, D.C., until its new building was completed in 1968. In September 1986, the Operations 2A and 2B buildings, both copper-shielded to prevent eavesdropping, opened with a dedication by President Ronald Reagan. The four NSA buildings became known as the "Big Four." The NSA director moved to 2B when it opened.

Headquarters for the National Security Agency is located at 39°6′32″N 76°46′17″W in Fort George G. Meade, Maryland, although it is separate from other compounds and agencies that are based within this same military installation. Fort Meade is about 20 mi (32 km) southwest of Baltimore, and 25 mi (40 km) northeast of Washington, D.C. The NSA has two dedicated exits off Baltimore–Washington Parkway. The eastbound exit from the Parkway (heading toward Baltimore) is open to the public and provides employee access to the main campus and public access to the National Cryptologic Museum. The westbound exit (heading toward Washington) is labeled "NSA Employees Only". It may only be used by people with the proper clearances, and security vehicles parked along the road guard the entrance.

NSA is the largest employer in the state of Maryland, and two-thirds of its personnel work at Fort Meade. Built on 350 acres (140 ha; 0.55 sq mi) of Fort Meade's 5,000 acres (2,000 ha; 7.8 sq mi), the site has 1,300 buildings and an estimated 18,000 parking spaces.

The main NSA headquarters and operations building is what James Bamford, author of Body of Secrets, describes as "a modern boxy structure" that appears similar to "any stylish office building." The building is covered with one-way dark glass, which is lined with copper shielding in order to prevent espionage by trapping in signals and sounds. It contains 3,000,000 square feet (280,000 m2), or more than 68 acres (28 ha), of floor space; Bamford said that the U.S. Capitol "could easily fit inside it four times over."

The facility has over 100 watchposts, one of them being the visitor control center, a two-story area that serves as the entrance. At the entrance, a white pentagonal structure, visitor badges are issued to visitors and security clearances of employees are checked. The visitor center includes a painting of the NSA seal.

The OPS2A building, the tallest building in the NSA complex and the location of much of the agency's operations directorate, is accessible from the visitor center. Bamford described it as a "dark glass Rubik's Cube". The facility's "red corridor" houses non-security operations such as concessions and the drug store. The name refers to the "red badge" which is worn by someone without a security clearance. The NSA headquarters includes a cafeteria, a credit union, ticket counters for airlines and entertainment, a barbershop, and a bank. NSA headquarters has its own post office, fire department, and police force.

The employees at the NSA headquarters reside in various places in the Baltimore-Washington area, including Annapolis, Baltimore, and Columbia in Maryland and the District of Columbia, including the Georgetown community. The NSA maintains a shuttle service from the Odenton station of MARC to its Visitor Control Center and has done so since 2005.

Power consumption

Following a major power outage in 2000, in 2003 and in follow-ups through 2007, The Baltimore Sun reported that the NSA was at risk of electrical overload because of insufficient internal electrical infrastructure at Fort Meade to support the amount of equipment being installed. This problem was apparently recognized in the 1990s but not made a priority, and "now the agency's ability to keep its operations going is threatened."

On August 6, 2006, The Baltimore Sun reported that the NSA had completely maxed out the grid, and that Baltimore Gas & Electric (BGE, now Constellation Energy) was unable to sell them any more power. NSA decided to move some of its operations to a new satellite facility.

BGE provided NSA with 65 to 75 megawatts at Fort Meade in 2007, and expected that an increase of 10 to 15 megawatts would be needed later that year. In 2011, the NSA was Maryland's largest consumer of power. In 2007, as BGE's largest customer, NSA bought as much electricity as Annapolis, the capital city of Maryland.

One estimate put the potential for power consumption by the new Utah Data Center at US$40 million per year.

Computing assets

In 1995, The Baltimore Sun reported that the NSA is the owner of the single largest group of supercomputers.

NSA held a groundbreaking ceremony at Fort Meade in May 2013 for its High Performance Computing Center 2, expected to open in 2016. Called Site M, the center has a 150 megawatt power substation, 14 administrative buildings and 10 parking garages. It cost $3.2 billion and covers 227 acres (92 ha; 0.355 sq mi). The center is 1,800,000 square feet (17 ha; 0.065 sq mi) and initially uses 60 megawatts of electricity.

Increments II and III are expected to be completed by 2030, and would quadruple the space, covering 5,800,000 square feet (54 ha; 0.21 sq mi) with 60 buildings and 40 parking garages. Defense contractors are also establishing or expanding cybersecurity facilities near the NSA and around the Washington metropolitan area.

National Computer Security Center

The DoD Computer Security Center was founded in 1981 and renamed the National Computer Security Center (NCSC) in 1985. NCSC was responsible for computer security throughout the federal government. NCSC was part of NSA, and during the late 1980s and the 1990s, NSA and NCSC published Trusted Computer System Evaluation Criteria in a six-foot high Rainbow Series of books that detailed trusted computing and network platform specifications. The Rainbow books were replaced by the Common Criteria in the early 2000s.

Other U.S. facilities

As of 2012, NSA collected intelligence from four geostationary satellites. Satellite receivers were at Roaring Creek Station in Catawissa, Pennsylvania and Salt Creek Station in Arbuckle, California. It operated ten to twenty taps on U.S. telecom switches. NSA had installations in several U.S. states and from them observed intercepts from Europe, the Middle East, North Africa, Latin America, and Asia.

NSA had facilities at Friendship Annex (FANX) in Linthicum, Maryland, which is a 20 to 25-minute drive from Fort Meade; the Aerospace Data Facility at Buckley Air Force Base in Aurora outside Denver, Colorado; NSA Texas in the Texas Cryptology Center at Lackland Air Force Base in San Antonio, Texas; NSA Georgia at Fort Gordon in Augusta, Georgia; NSA Hawaii in Honolulu; the Multiprogram Research Facility in Oak Ridge, Tennessee, and elsewhere.

On January 6, 2011, a groundbreaking ceremony was held to begin construction on NSA's first Comprehensive National Cyber-security Initiative (CNCI) Data Center, known as the "Utah Data Center" for short. The $1.5B data center was built at Camp Williams, Utah, located 25 miles (40 km) south of Salt Lake City, to help support the agency's National Cyber-security Initiative. It was expected to be operational by September 2013. Construction of the Utah Data Center finished in May 2019.

In 2009, to protect its assets and access more electricity, NSA sought to decentralize and expand its existing facilities in Fort Meade and Menwith Hill, the latter expansion expected to be completed by 2015.

The Yakima Herald-Republic cited Bamford, saying that many of NSA's bases for its Echelon program were a legacy system, using outdated, 1990s technology. In 2004, NSA closed its operations at Bad Aibling Station (Field Station 81) in Bad Aibling, Germany. In 2012, NSA began to move some of its operations at Yakima Research Station, Yakima Training Center, in Washington state to Colorado, planning to leave Yakima closed. As of 2013, NSA also intended to close operations at Sugar Grove, West Virginia.

International stations

Following the signing in 1946–1956 of the UKUSA Agreement between the United States, United Kingdom, Canada, Australia and New Zealand, who then cooperated on signals intelligence and ECHELON, NSA stations were built at GCHQ Bude in Morwenstow, United Kingdom; Geraldton, Pine Gap and Shoal Bay, Australia; Leitrim and Ottawa, Ontario, Canada; Misawa, Japan; and Waihopai and Tangimoana, New Zealand.

NSA operates RAF Menwith Hill in North Yorkshire, United Kingdom, which was, according to BBC News in 2007, the largest electronic monitoring station in the world. Planned in 1954, and opened in 1960, the base covered 562 acres (227 ha; 0.878 sq mi) in 1999.

The agency's European Cryptologic Center (ECC), with 240 employees in 2011, is headquartered at a US military compound in Griesheim, near Frankfurt in Germany. A 2011 NSA report indicates that the ECC is responsible for the "largest analysis and productivity in Europe" and focuses on various priorities, including Africa, Europe, the Middle East and counterterrorism operations.

As of 2013, a new Consolidated Intelligence Center, also to be used by NSA, was being built at the headquarters of the United States Army Europe in Wiesbaden, Germany. NSA's partnership with the Bundesnachrichtendienst (BND), the German foreign intelligence service, was confirmed by BND president Gerhard Schindler.

Thailand

Thailand is a "3rd party partner" of the NSA along with nine other nations. These are non-English-speaking countries that have made security agreements for the exchange of SIGINT raw material and end product reports.

Thailand is the site of at least two US SIGINT collection stations. One is at the US Embassy in Bangkok, a joint NSA-CIA Special Collection Service (SCS) unit. It presumably eavesdrops on foreign embassies, governmental communications, and other targets of opportunity.

The second installation is a FORNSAT (foreign satellite interception) station in the Thai city of Khon Kaen. It is codenamed INDRA, but has also been referred to as LEMONWOOD. The station is approximately 40 hectares (99 acres) in size and consists of a large 3,700–4,600 m2 (40,000–50,000 ft2) operations building on the west side of the ops compound and four radome-enclosed parabolic antennas. Possibly two of the radome-enclosed antennas are used for SATCOM intercept and two antennas used for relaying the intercepted material back to NSA. There is also a PUSHER-type circularly-disposed antenna array (CDAA) just north of the ops compound.

NSA activated Khon Kaen in October 1979. Its mission was to eavesdrop on the radio traffic of Chinese army and air force units in southern China, especially in and around the city of Kunming in Yunnan Province. Back in the late 1970s the base consisted only of a small CDAA antenna array that was remote-controlled via satellite from the NSA listening post at Kunia, Hawaii, and a small force of civilian contractors from Bendix Field Engineering Corp. whose job it was to keep the antenna array and satellite relay facilities up and running 24/7.

According to the papers of the late General William Odom, the INDRA facility was upgraded in 1986 with a new British-made PUSHER CDAA antenna as part of an overall upgrade of NSA and Thai SIGINT facilities whose objective was to spy on the neighboring communist nations of Vietnam, Laos, and Cambodia.

The base apparently fell into disrepair in the 1990s as China and Vietnam became more friendly towards the US, and by 2002 archived satellite imagery showed that the PUSHER CDAA antenna had been torn down, perhaps indicating that the base had been closed. At some point in the period since 9/11, the Khon Kaen base was reactivated and expanded to include a sizeable SATCOM intercept mission. It is likely that the NSA presence at Khon Kaen is relatively small, and that most of the work is done by civilian contractors.

Research and development

NSA has been involved in debates about public policy, both indirectly as a behind-the-scenes adviser to other departments, and directly during and after Vice Admiral Bobby Ray Inman's directorship. NSA was a major player in the debates of the 1990s regarding the export of cryptography in the United States. Restrictions on export were reduced but not eliminated in 1996.

Its secure government communications work has involved the NSA in numerous technology areas, including the design of specialized communications hardware and software, production of dedicated semiconductors (at the Ft. Meade chip fabrication plant), and advanced cryptography research. For 50 years, NSA designed and built most of its computer equipment in-house, but from the 1990s until about 2003 (when the U.S. Congress curtailed the practice), the agency contracted with the private sector in the fields of research and equipment.

Data Encryption Standard

NSA was embroiled in some minor controversy concerning its involvement in the creation of the Data Encryption Standard (DES), a standard and public block cipher algorithm used by the U.S. government and banking community. During the development of DES by IBM in the 1970s, NSA recommended changes to some details of the design. There was suspicion that these changes had weakened the algorithm sufficiently to enable the agency to eavesdrop if required, including speculation that a critical component, the so-called S-boxes, had been altered to insert a "backdoor" and that the reduction in key length might have made it feasible for NSA to discover DES keys using massive computing power. It has since been observed that the S-boxes in DES are particularly resilient against differential cryptanalysis, a technique which was not publicly discovered until the late 1980s but was known to the IBM DES team.
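The key-length concern can be made concrete with simple arithmetic. The sketch below assumes the 56-bit key size that DES uses and a purely hypothetical search rate of one billion keys per second. The rate and the comparison key sizes are illustrative assumptions, not figures from any source cited here.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def keyspace(key_bits):
    """Number of distinct keys for a key of the given length."""
    return 2 ** key_bits


def average_search_years(key_bits, keys_per_second):
    """Expected exhaustive-search time: on average half the keyspace is tried."""
    seconds = keyspace(key_bits) / 2 / keys_per_second
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    rate = 1e9  # hypothetical attacker speed, keys per second
    for bits in (56, 64, 128):
        years = average_search_years(bits, rate)
        print(f"{bits}-bit key: {keyspace(bits):.3e} keys, ~{years:.3g} years on average")
```

Every additional key bit doubles the work, so an 8-bit reduction makes exhaustive search 256 times cheaper. That is why the choice of key length attracted suspicion independently of the S-box design.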

Advanced Encryption Standard

The involvement of NSA in selecting a successor to Data Encryption Standard (DES), the Advanced Encryption Standard (AES), was limited to hardware performance testing (see AES competition). NSA has subsequently certified AES for protection of classified information when used in NSA-approved systems.

NSA encryption systems

The NSA is responsible for the encryption-related components in these legacy systems:

- FNBDT Future Narrow Band Digital Terminal

- KL-7 ADONIS off-line rotor encryption machine (post-WWII – 1980s)

- KW-26 ROMULUS electronic in-line teletypewriter encryptor (1960s–1980s)

- KW-37 JASON fleet broadcast encryptor (1960s–1990s)

- KY-57 VINSON tactical radio voice encryptor

- KG-84 Dedicated Data Encryption/Decryption

- STU-III secure telephone unit, phased out by the STE

The NSA oversees encryption in the following systems, which are in use today:

- EKMS Electronic Key Management System

- Fortezza encryption based on portable crypto token in PC Card format

- SINCGARS tactical radio with cryptographically controlled frequency hopping

- STE secure terminal equipment

- TACLANE product line by General Dynamics C4 Systems

The NSA has specified Suite A and Suite B cryptographic algorithm suites to be used in U.S. government systems; the Suite B algorithms are a subset of those previously specified by NIST and are expected to serve for most information protection purposes, while the Suite A algorithms are secret and are intended for especially high levels of protection.

SHA

The widely used SHA-1 and SHA-2 hash functions were designed by NSA. SHA-1 is a slight modification of the weaker SHA-0 algorithm, also designed by NSA in 1993. This small modification was suggested by NSA two years later, with no justification other than the fact that it provides additional security. An attack for SHA-0 that does not apply to the revised algorithm was indeed found between 1998 and 2005 by academic cryptographers. Because of weaknesses and key length restrictions in SHA-1, NIST deprecates its use for digital signatures, and approves only the newer SHA-2 algorithms for such applications from 2013 on.
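As a brief illustration of the difference in output length between the two families, the following sketch uses Python's standard `hashlib` module to compute SHA-1 and SHA-2 digests of the same message. The helper name `digest_bits` and the sample message are chosen here for illustration only.

```python
import hashlib


def digest_bits(algorithm):
    """Return the digest length in bits for a hashlib algorithm name."""
    return hashlib.new(algorithm).digest_size * 8


if __name__ == "__main__":
    message = b"abc"
    # sha1 belongs to the older family; sha256 and sha512 are SHA-2 variants.
    for name in ("sha1", "sha256", "sha512"):
        digest = hashlib.new(name, message).hexdigest()
        print(f"{name:7s} {digest_bits(name):3d} bits  {digest}")
```

SHA-1 always produces a 160-bit digest, while the SHA-2 family offers longer outputs such as 256 and 512 bits.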

A new hash standard, SHA-3, was selected through a competition that concluded on October 2, 2012, with the selection of Keccak as the algorithm. The process to select SHA-3 was similar to the one used to choose the AES, but some doubts have been cast over it, since fundamental modifications were made to Keccak in order to turn it into a standard. These changes potentially undermine the cryptanalysis performed during the competition and reduce the security levels of the algorithm.

Dual_EC_DRBG random number generator cryptotrojan

NSA promoted the inclusion of a random number generator called Dual_EC_DRBG in the U.S. National Institute of Standards and Technology's 2007 guidelines. This led to speculation about a backdoor that would allow NSA access to data encrypted by systems using that pseudorandom number generator (PRNG).

This is now deemed to be plausible based on the fact that output of next iterations of PRNG can provably be determined if relation between two internal Elliptic Curve points is known. Both NIST and RSA are now officially recommending against the use of this PRNG.
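The reasoning above can be sketched on a toy scale. The example below is a simplified illustration, not the standardized generator: it assumes a tiny textbook curve (y² = x³ + 2x + 2 over GF(17)), an arbitrary secret multiplier `d` relating the two points (P = d·Q), and untruncated outputs, whereas the real design uses a 256-bit or larger curve and discards some output bits. All function and variable names are hypothetical. It shows that someone who knows `d` can derive the generator's next output from a single observed output.

```python
# Toy curve y^2 = x^3 + A*x + B over GF(PRIME); sizes are assumed for illustration.
PRIME, A, B = 17, 2, 2
Q = (5, 1)  # a point on the toy curve; it generates a group of prime order 19


def add(p1, p2):
    """Add two curve points; None represents the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % PRIME == 0:
        return None
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow((2 * y1) % PRIME, -1, PRIME) % PRIME
    else:
        lam = (y2 - y1) * pow((x2 - x1) % PRIME, -1, PRIME) % PRIME
    x3 = (lam * lam - x1 - x2) % PRIME
    y3 = (lam * (x1 - x3) - y1) % PRIME
    return (x3, y3)


def mul(k, point):
    """Scalar multiplication by double-and-add."""
    result = None
    while k:
        if k & 1:
            result = add(result, point)
        point = add(point, point)
        k >>= 1
    return result


d = 7          # hypothetical secret relation between the two points
P = mul(d, Q)  # P = d * Q; only the designer knows d


def step(state):
    """One simplified generator step: update the state with P, emit output with Q."""
    new_state = mul(state, P)[0]
    output = mul(new_state, Q)[0]
    return new_state, output


def predict_next_output(output, secret_d):
    """Recover the next output from one observed output, given the secret d."""
    rhs = (output**3 + A * output + B) % PRIME
    # Lift the x-coordinate back to a curve point; either square root works,
    # because a point and its negative share the same x-coordinate.
    y = next(y for y in range(PRIME) if y * y % PRIME == rhs)
    next_state = mul(secret_d, (output, y))[0]  # x(d * s * Q) = x(s * P)
    return mul(next_state, Q)[0]


# The seed 3 is chosen so this toy run never reaches the point at infinity.
state, first = step(3)
state, second = step(state)
predicted = predict_next_output(first, d)
print(predicted == second)
```

Without knowledge of `d`, recovering the next state from an output would require solving a discrete logarithm problem on the curve, which is why the relation between the two points is the critical secret.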

Clipper chip

Because of concerns that widespread use of strong cryptography would hamper government use of wiretaps, NSA proposed the concept of key escrow in 1993 and introduced the Clipper chip that would offer stronger protection than DES but would allow access to encrypted data by authorized law enforcement officials. The proposal was strongly opposed and key escrow requirements ultimately went nowhere. However, NSA's Fortezza hardware-based encryption cards, created for the Clipper project, are still used within government, and NSA ultimately declassified and published the design of the Skipjack cipher used on the cards.

Perfect Citizen

Perfect Citizen is an NSA program to perform vulnerability assessments of U.S. critical infrastructure. It was originally reported to be a program to develop a system of sensors to detect cyber attacks on critical infrastructure computer networks in both the private and public sector through a network monitoring system named Einstein. It is funded by the Comprehensive National Cybersecurity Initiative and thus far Raytheon has received a contract for up to $100 million for the initial stage.

Academic research

NSA has invested many millions of dollars in academic research under grant code prefix MDA904, resulting in over 3,000 papers as of October 11, 2007. NSA/CSS has, at times, attempted to restrict the publication of academic research into cryptography; for example, the Khufu and Khafre block ciphers were voluntarily withheld in response to an NSA request to do so. In response to a FOIA lawsuit, in 2013 the NSA released the 643-page research paper titled "Untangling the Web: A Guide to Internet Research," written and compiled by NSA employees to assist other NSA workers in searching for information of interest to the agency on the public Internet.

Patents

NSA has the ability to file for a patent from the U.S. Patent and Trademark Office under gag order. Unlike normal patents, these are not revealed to the public and do not expire. However, if the Patent Office receives an application for an identical patent from a third party, it will reveal NSA's patent and officially grant it to NSA for the full term on that date.

One of NSA's published patents describes a method of geographically locating an individual computer site in an Internet-like network, based on the latency of multiple network connections. Although no public patent exists, NSA is reported to have used a similar locating technology called trilateralization that allows real-time tracking of an individual's location, including altitude from ground level, using data obtained from cellphone towers.
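The general idea of locating a host from network latency can be sketched as follows. This is a generic two-dimensional trilateration example, not the method claimed in the patent or any NSA system: the landmark coordinates, the assumed signal speed of 200 km per millisecond (roughly the speed of light in optical fibre), and all function names are illustrative assumptions. Real round-trip times also include routing and queuing delay, so distances derived this way are upper bounds at best.

```python
import math

# Assumed propagation speed: about two thirds of the speed of light, in km per ms.
SIGNAL_SPEED_KM_PER_MS = 200.0


def rtt_to_distance(rtt_ms):
    """Convert a round-trip time into a one-way distance estimate in km."""
    return (rtt_ms / 2.0) * SIGNAL_SPEED_KM_PER_MS


def trilaterate(landmarks, distances):
    """Estimate (x, y) from three landmark positions and distances to each.

    Subtracting the first circle equation from the other two yields a
    2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = landmarks
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 + y2**2 - x1**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 + y3**2 - x1**2 - y1**2
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("landmarks must not lie on a single line")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det


# Hypothetical landmarks on a flat km grid and an idealised target position.
landmarks = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0)]
target = (30.0, 40.0)

# Simulate noise-free round-trip times from each landmark to the target.
rtts = [2 * math.dist(lm, target) / SIGNAL_SPEED_KM_PER_MS for lm in landmarks]

estimate = trilaterate(landmarks, [rtt_to_distance(r) for r in rtts])
print(estimate)
```

With noisy measurements or more than three landmarks, a least-squares fit would be the more usual choice than an exact solve.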

Insignia and memorials

The heraldic insignia of NSA consists of an eagle inside a circle, grasping a key in its talons. The eagle represents the agency's national mission. Its breast features a shield with bands of red and white, taken from the Great Seal of the United States and representing Congress. The key is taken from the emblem of Saint Peter and represents security.

When the NSA was created, the agency had no emblem and used that of the Department of Defense. The agency adopted its first of two emblems in 1963. The current NSA insignia has been in use since 1965, when then-Director LTG Marshall S. Carter (USA) ordered the creation of a device to represent the agency.

The NSA's flag consists of the agency's seal on a light blue background.

Crews associated with NSA missions have been involved in a number of dangerous and deadly situations. The USS Liberty incident in 1967 and USS Pueblo incident in 1968 are examples of the losses endured during the Cold War.

The National Security Agency/Central Security Service Cryptologic Memorial honors and remembers the fallen personnel, both military and civilian, of these intelligence missions. It is made of black granite, and has 171 names carved into it, as of 2013. It is located at NSA headquarters. A tradition of declassifying the stories of the fallen was begun in 2001.

Controversy and litigation

In the United States, at least since 2001, there has been legal controversy over what signals intelligence can be used for and how much freedom the National Security Agency has to use it. In 2015, the government made slight changes in how it uses and collects certain types of data, specifically phone records. The government was not analyzing the phone records as of early 2019. The surveillance programs were deemed unlawful in September 2020 in a court of appeals case.

Warrantless wiretaps

On December 16, 2005, The New York Times reported that, under White House pressure and with an executive order from President George W. Bush, the National Security Agency, in an attempt to thwart terrorism, had been tapping phone calls made to persons outside the country, without obtaining warrants from the United States Foreign Intelligence Surveillance Court, a secret court created for that purpose under the Foreign Intelligence Surveillance Act (FISA).

One such surveillance program, authorized by the U.S. Signals Intelligence Directive 18 of President George Bush, was the Highlander Project undertaken for the National Security Agency by the U.S. Army 513th Military Intelligence Brigade. NSA relayed telephone (including cell phone) conversations obtained from ground, airborne, and satellite monitoring stations to various U.S. Army Signal Intelligence Officers, including the 201st Military Intelligence Battalion. Conversations of citizens of the U.S. were intercepted, along with those of other nations.

Proponents of the surveillance program claim that the President has executive authority to order such action, arguing that laws such as FISA are overridden by the President's Constitutional powers. In addition, some argued that FISA was implicitly overridden by a subsequent statute, the Authorization for Use of Military Force, although the Supreme Court's ruling in Hamdan v. Rumsfeld deprecates this view. In the August 2006 case ACLU v. NSA, U.S. District Court Judge Anna Diggs Taylor concluded that NSA's warrantless surveillance program was both illegal and unconstitutional. On July 6, 2007, the 6th Circuit Court of Appeals vacated the decision on the grounds that the ACLU lacked standing to bring the suit.

On January 17, 2006, the Center for Constitutional Rights filed a lawsuit, CCR v. Bush, against the George W. Bush Presidency. The lawsuit challenged the National Security Agency's (NSA's) surveillance of people within the U.S., including the interception of CCR emails without securing a warrant first.

In September 2008, the Electronic Frontier Foundation (EFF) filed a class action lawsuit against the NSA and several high-ranking officials of the Bush administration, charging an "illegal and unconstitutional program of dragnet communications surveillance," based on documentation provided by former AT&T technician Mark Klein.

As a result of the USA Freedom Act passed by Congress in June 2015, the NSA had to shut down its bulk phone surveillance program on November 29 of the same year. The USA Freedom Act forbids the NSA from collecting metadata and content of phone calls unless it has a warrant for a terrorism investigation. In that case the agency has to ask the telecom companies for the records, which will only be kept for six months. The NSA's use of large telecom companies to assist it with its surveillance efforts has caused several privacy concerns.

AT&T Internet monitoring

In May 2008, Mark Klein, a former AT&T employee, alleged that his company had cooperated with NSA in installing Narus hardware to replace the FBI Carnivore program, to monitor network communications including traffic between U.S. citizens.

Data mining

NSA was reported in 2008 to use its computing capability to analyze "transactional" data that it regularly acquires from other government agencies, which gather it under their own jurisdictional authorities. As part of this effort, NSA now monitors huge volumes of records of domestic email data, web addresses from Internet searches, bank transfers, credit-card transactions, travel records, and telephone data, according to current and former intelligence officials interviewed by The Wall Street Journal. The sender, recipient, and subject line of emails can be included, but the content of the messages or of phone calls is not.

A 2013 advisory group for the Obama administration sought to reform NSA spying programs following the revelations of documents released by Edward J. Snowden. It stated in 'Recommendation 30' on page 37, "...that the National Security Council staff should manage an interagency process to review on a regular basis the activities of the US Government regarding attacks that exploit a previously unknown vulnerability in a computer application." Retired cyber security expert Richard A. Clarke was a group member and stated on April 11, 2014 that NSA had no advance knowledge of Heartbleed.

Illegally obtained evidence

In August 2013 it was revealed that a 2005 IRS training document showed that NSA intelligence intercepts and wiretaps, both foreign and domestic, were being supplied to the Drug Enforcement Administration (DEA) and Internal Revenue Service (IRS) and were illegally used to launch criminal investigations of US citizens. Law enforcement agents were directed to conceal how the investigations began and recreate an apparently legal investigative trail by re-obtaining the same evidence by other means.

Barack Obama administration

In the months leading to April 2009, the NSA intercepted the communications of U.S. citizens, including a Congressman, although the Justice Department believed that the interception was unintentional. The Justice Department then took action to correct the issues and bring the program into compliance with existing laws. United States Attorney General Eric Holder resumed the program according to his understanding of the Foreign Intelligence Surveillance Act amendment of 2008, without explaining what had occurred.

Polls conducted in June 2013 found divided results among Americans regarding NSA's secret data collection. Rasmussen Reports found that 59% of Americans disapprove, Gallup found that 53% disapprove, and Pew found that 56% are in favor of NSA data collection.

Section 215 metadata collection

On April 25, 2013, the NSA obtained a court order requiring Verizon's Business Network Services to provide metadata on all calls in its system to the NSA "on an ongoing daily basis" for a three-month period, as reported by The Guardian on June 6, 2013. This information includes "the numbers of both parties on a call ... location data, call duration, unique identifiers, and the time and duration of all calls" but not "[t]he contents of the conversation itself". The order relies on the so-called "business records" provision of the Patriot Act.

In August 2013, following the Snowden leaks, new details about the NSA's data mining activity were revealed. Reportedly, the majority of emails into or out of the United States are captured at "selected communications links" and automatically analyzed for keywords or other "selectors". Emails that do not match are deleted.

The utility of such a massive metadata collection in preventing terrorist attacks is disputed. Many studies reveal the dragnet-like system to be ineffective. One such report, released by the New America Foundation, concluded that after an analysis of 225 terrorism cases, the NSA "had no discernible impact on preventing acts of terrorism."

Defenders of the program said that while metadata alone cannot provide all the information necessary to prevent an attack, it assures the ability to "connect the dots" between suspect foreign numbers and domestic numbers with a speed only the NSA's software is capable of. One benefit of this is quickly being able to determine the difference between suspicious activity and real threats. As an example, NSA director General Keith B. Alexander mentioned at the annual Cybersecurity Summit in 2013 that metadata analysis of domestic phone call records after the Boston Marathon bombing helped determine that rumors of a follow-up attack in New York were baseless.

In addition to doubts about its effectiveness, many people argue that the collection of metadata is an unconstitutional invasion of privacy. As of 2015, the collection process remained legal and grounded in the ruling from Smith v. Maryland (1979). A prominent opponent of the data collection and its legality is U.S. District Judge Richard J. Leon, who issued a ruling in 2013 in which he stated: "I cannot imagine a more 'indiscriminate' and 'arbitrary invasion' than this systematic and high tech collection and retention of personal data on virtually every single citizen for purposes of querying and analyzing it without prior judicial approval ... Surely, such a program infringes on 'that degree of privacy' that the founders enshrined in the Fourth Amendment".