The molecular clock is a technique that uses the mutation rate of biomolecules to deduce the time in prehistory when two or more life forms diverged. The biomolecular data used for such calculations are usually nucleotide sequences for DNA or amino acid sequences for proteins. The benchmarks for determining the mutation rate are often fossil or archaeological dates. The molecular clock was first tested in 1962 on the hemoglobin protein variants of various animals, and is commonly used in molecular evolution to estimate times of speciation or radiation. It is sometimes called a gene clock or an evolutionary clock.

Early discovery and genetic equidistance

The notion of a "molecular clock" is attributed to Émile Zuckerkandl and Linus Pauling who, in 1962, noticed that the number of amino acid differences in hemoglobin between different lineages changes roughly linearly with time, as estimated from fossil evidence.[1] They generalized this observation to assert that the rate of evolutionary change of any specified protein was approximately constant over time and over different lineages, an assertion known as the molecular clock hypothesis (MCH).

The genetic equidistance phenomenon was first noted in 1963 by Emanuel Margoliash, who wrote: "It appears that the number of residue differences between cytochrome c of any two species is mostly conditioned by the time elapsed since the lines of evolution leading to these two species originally diverged. If this is correct, the cytochrome c of all mammals should be equally different from the cytochrome c of all birds. Since fish diverges from the main stem of vertebrate evolution earlier than either birds or mammals, the cytochrome c of both mammals and birds should be equally different from the cytochrome c of fish. Similarly, all vertebrate cytochrome c should be equally different from the yeast protein."[2] For example, the difference between the cytochrome c of a carp and that of a frog, turtle, chicken, rabbit, and horse is a very constant 13% to 14%. Similarly, the difference between the cytochrome c of a bacterium and that of yeast, wheat, moth, tuna, pigeon, and horse ranges from 64% to 69%. Together with the work of Émile Zuckerkandl and Linus Pauling, the genetic equidistance result directly led to the formal postulation of the molecular clock hypothesis in the early 1960s.[3]

Relationship with neutral theory

The observation of a clock-like rate of molecular change was originally purely phenomenological. Later, the work of Motoo Kimura[4] developed the neutral theory of molecular evolution, which predicted a molecular clock. Let there be $N$ individuals, and to keep this calculation simple, let the individuals be haploid (i.e. have one copy of each gene). Let the rate of neutral mutations (i.e. mutations with no effect on fitness) in a new individual be $\mu$. The probability that a new neutral mutation will become fixed in the population is then $1/N$, since each copy of the gene is as good as any other. Every generation, each individual can have new mutations, so there are $\mu N$ new neutral mutations in the population as a whole. That means that each generation, $\mu N \times 1/N = \mu$ new neutral mutations will become fixed. If most changes seen during molecular evolution are neutral, then fixations in a population will accumulate at a clock-rate that is equal to the rate of neutral mutations in an individual.
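
The argument above can be checked numerically. The following is a minimal sketch, assuming a haploid Wright-Fisher model (a modelling choice not named in the text) in which each generation is formed by resampling the N gene copies of the previous one. The function names and parameter values are illustrative only. It estimates the fixation probability of a single new neutral mutation, then combines that probability with the per-generation supply of new mutations.

```python
import random


def neutral_mutant_fixes(n, rng):
    """Follow one new neutral mutation in a haploid population of size n.

    Returns True if the mutation reaches fixation and False if it is lost.
    Assumption: Wright-Fisher resampling, where every gene copy in the next
    generation descends from a copy drawn uniformly from the current one.
    """
    copies = 1  # the mutation starts as a single copy
    while 0 < copies < n:
        freq = copies / n
        # Binomial draw: each of the n offspring copies is a mutant
        # with probability equal to the current mutant frequency.
        copies = sum(1 for _ in range(n) if rng.random() < freq)
    return copies == n


def fixation_probability(n, trials, rng):
    """Fraction of independent new mutations that end up fixed."""
    fixed = sum(neutral_mutant_fixes(n, rng) for _ in range(trials))
    return fixed / trials


def substitution_rate(mu, n):
    """Neutral fixations per generation: (new mutations) x (fixation probability)."""
    new_mutations = mu * n      # supply of new neutral mutations per generation
    fixation_prob = 1.0 / n     # chance that any one of them eventually fixes
    return new_mutations * fixation_prob


rng = random.Random(1)  # fixed seed so the run is repeatable
N = 20
estimate = fixation_probability(N, trials=20000, rng=rng)
print(f"simulated fixation probability: {estimate:.4f} (theory: {1 / N:.4f})")

# The population size cancels, so the rate stays at mu for any n.
for size in (10, 1000, 100000):
    print(size, substitution_rate(1e-8, size))
```

The simulated fixation probability should land close to 1/N, and the last loop shows the population size cancelling out of the substitution rate, up to floating-point rounding.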

Calibration

The molecular clock alone can only say that one time period is twice as long as another: it cannot assign concrete dates. For viral phylogenetics and ancient DNA studies (two areas of evolutionary biology where it is possible to sample sequences over an evolutionary timescale), the dates of the intermediate samples can be used to more precisely calibrate the molecular clock. However, most phylogenies require that the molecular clock be calibrated against independent evidence about dates, such as the fossil record.[5] There are two general methods for calibrating the molecular clock using fossil data: node calibration and tip calibration.[6]

Node calibration

Sometimes referred to as node dating, node calibration is a method for phylogeny calibration that is done by placing fossil constraints at nodes. A node calibration fossil is the oldest discovered representative of a clade, which is used to constrain the clade's minimum age. Due to the fragmentary nature of the fossil record, the true most recent common ancestor of a clade will likely never be found.[6] In order to account for this in node calibration analyses, a maximum clade age must be estimated. Determining the maximum clade age is challenging because it relies on negative evidence: the absence of older fossils in that clade. There are a number of methods for deriving the maximum clade age using birth-death models, fossil stratigraphic distribution analyses, or taphonomic controls.[7] Alternatively, instead of a maximum and a minimum, a prior probability of the divergence time can be established and used to calibrate the clock. Several prior probability distributions (including normal, lognormal, exponential, gamma, and uniform) can be used to express the probability of the true age of divergence relative to the age of the fossil;[8] however, there are very few methods for estimating the shape and parameters of the probability distribution empirically.[9] The placement of calibration nodes on the tree informs the placement of the unconstrained nodes, giving divergence date estimates across the phylogeny. Historical methods of clock calibration could only make use of a single fossil constraint (non-parametric rate smoothing),[10] while modern analyses (BEAST[11] and r8s[12]) allow for the use of multiple fossils to calibrate the molecular clock. Simulation studies have shown that increasing the number of fossil constraints increases the accuracy of divergence time estimation.[13]

Tip calibration

Sometimes referred to as tip dating, tip calibration is a method of molecular clock calibration in which fossils are treated as taxa and placed on the tips of the tree. This is achieved by creating a matrix that includes a molecular dataset for the extant taxa along with a morphological dataset for both the extinct and the extant taxa.[7] Unlike node calibration, this method reconstructs the tree topology and places the fossils simultaneously. Molecular and morphological models work together, allowing morphology to inform the placement of fossils.[6] Tip calibration makes use of all relevant fossil taxa during clock calibration, rather than relying on only the oldest fossil of each clade. This method does not rely on the interpretation of negative evidence to infer maximum clade ages.[7]

Total evidence dating

This approach to tip calibration goes a step further by simultaneously estimating fossil placement, topology, and the evolutionary timescale. In this method, the age of a fossil can inform its phylogenetic position in addition to morphology. By allowing all aspects of tree reconstruction to occur simultaneously, the risk of biased results is decreased.[6] This approach has been improved upon by pairing it with different models. One current method of molecular clock calibration is total evidence dating paired with the fossilized birth-death (FBD) model and a model of morphological evolution.[14] The FBD model is novel in that it allows for “sampled ancestors,” which are fossil taxa that are the direct ancestor of a living taxon or lineage. This allows fossils to be placed on a branch above an extant organism, rather than being confined to the tips.[15]

Methods

Bayesian methods can provide more appropriate estimates of divergence times, especially if large datasets, such as those yielded by phylogenomics, are employed.[16]

Non-constant rate of molecular clock

Sometimes only a single divergence date can be estimated from fossils, with all other dates inferred from that. Other sets of species have abundant fossils available, allowing the MCH of constant divergence rates to be tested. DNA sequences experiencing low levels of negative selection showed divergence rates of 0.7–0.8% per Myr in bacteria, mammals, invertebrates, and plants.[17] In the same study, genomic regions experiencing very high negative or purifying selection (encoding rRNA) were considerably slower (1% per 50 Myr).

In addition to such variation in rate with genomic position, since the early 1990s variation among taxa has proven fertile ground for research too,[18] even over comparatively short periods of evolutionary time (for example mockingbirds[19]). Tube-nosed seabirds have molecular clocks that on average run at half the speed of those of many other birds,[20] possibly due to long generation times, and many turtles have a molecular clock running at one-eighth the speed it does in small mammals, or even slower.[21] Effects of small population size are also likely to confound molecular clock analyses. Researchers such as Francisco Ayala have more fundamentally challenged the molecular clock hypothesis.[22][23] According to Ayala's 1999 study, five factors combine to limit the application of molecular clock models:

- Changing generation times (relevant if the rate of new mutations depends at least partly on the number of generations rather than the number of years)

- Population size (genetic drift is stronger in small populations, and so more mutations are effectively neutral)

- Species-specific differences (due to differing metabolism, ecology, evolutionary history, ...)

- Change in function of the protein studied (can be avoided in closely related species by utilizing non-coding DNA sequences or emphasizing silent mutations)

- Changes in the intensity of natural selection.

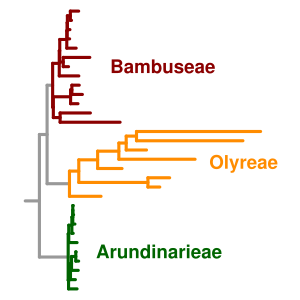

Woody bamboos (tribes Arundinarieae and Bambuseae) have long generation times and lower mutation rates, as expressed by short branches in the phylogenetic tree, than the fast-evolving herbaceous bamboos (Olyreae).
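
To make the effect of lineage-specific rates concrete, the sketch below dates a divergence with a strict clock calibrated on a single fossil-dated pair, then applies that rate to a pair whose lineages evolve at half speed (the ratio reported above for tube-nosed seabirds). All numbers and function names are hypothetical. The relation d = 2rt, in which genetic distance accumulates along both lineages since the split, is a simplifying assumption that ignores saturation and ancestral polymorphism.

```python
def calibrate_rate(distance, divergence_time_myr):
    """Per-lineage rate (substitutions/site/Myr) from one dated pair.

    Assumes distance = 2 * rate * time, because both lineages have been
    accumulating changes since they split.
    """
    return distance / (2.0 * divergence_time_myr)


def estimate_divergence_time(distance, rate):
    """Strict-clock age (Myr) of a pair, given its distance and a rate."""
    return distance / (2.0 * rate)


# Hypothetical calibration pair: 0.08 substitutions/site, fossil-dated at 10 Myr.
calib_distance = 0.08
calib_age_myr = 10.0
rate = calibrate_rate(calib_distance, calib_age_myr)

# Hypothetical second pair that truly split 20 Myr ago but whose lineages
# evolve at half the calibrated rate.
true_age_myr = 20.0
slow_rate = 0.5 * rate
slow_distance = 2.0 * slow_rate * true_age_myr

# Applying the calibrated (too fast) rate to the slow pair underestimates its age.
estimated_age_myr = estimate_divergence_time(slow_distance, rate)
print(f"calibrated rate: {rate:.4f} substitutions/site/Myr")
print(f"true age: {true_age_myr:.1f} Myr, strict-clock estimate: {estimated_age_myr:.1f} Myr")
```

Under these assumptions the strict-clock estimate is half the true age, which is the kind of bias that motivates models allowing rates to differ between lineages.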

Molecular clock users have developed workaround solutions using a number of statistical approaches including maximum likelihood techniques and later Bayesian modeling. In particular, models that take into account rate variation across lineages have been proposed in order to obtain better estimates of divergence times. These models are called relaxed molecular clocks[24] because they represent an intermediate position between the 'strict' molecular clock hypothesis and Joseph Felsenstein's many-rates model.[25] They are made possible through MCMC techniques that explore a weighted range of tree topologies and simultaneously estimate parameters of the chosen substitution model. Divergence dates inferred using a molecular clock are based on statistical inference and not on direct evidence.
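
As a rough illustration of a relaxed clock, the sketch below draws an independent rate for each branch from a lognormal distribution whose mean is the overall clock rate. This corresponds to one family of relaxed clocks (uncorrelated lognormal) and is an assumption made here for illustration; the text does not specify a particular model, and the branch durations, rate, and parameter names are hypothetical. Setting the spread `sigma` to zero recovers the strict clock.

```python
import math
import random


def draw_branch_rates(n_branches, mean_rate, sigma, rng):
    """Draw one rate per branch from a lognormal with mean `mean_rate`.

    `sigma` is the standard deviation of the log-rate. The location
    parameter is shifted by -sigma^2 / 2 so that the mean of the rates,
    not the median, equals `mean_rate`.
    """
    log_mean = math.log(mean_rate) - 0.5 * sigma ** 2
    return [rng.lognormvariate(log_mean, sigma) for _ in range(n_branches)]


def branch_lengths(durations_myr, rates):
    """Expected substitutions per site on each branch: duration x rate."""
    return [t * r for t, r in zip(durations_myr, rates)]


rng = random.Random(7)  # fixed seed so the run is repeatable
durations = [5.0, 5.0, 12.0, 12.0, 7.0]  # hypothetical branch durations in Myr
mean_rate = 0.004                        # hypothetical substitutions/site/Myr

strict = draw_branch_rates(len(durations), mean_rate, sigma=0.0, rng=rng)
relaxed = draw_branch_rates(len(durations), mean_rate, sigma=0.5, rng=rng)

print("strict clock lengths: ", [round(x, 4) for x in branch_lengths(durations, strict)])
print("relaxed clock lengths:", [round(x, 4) for x in branch_lengths(durations, relaxed)])
```

In a real analysis the branch rates are not simulated forward like this; they are parameters estimated jointly with the tree and the substitution model.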

The molecular clock runs into particular challenges at very short and very long timescales. At long timescales, the problem is saturation. When enough time has passed, many sites have undergone more than one change, but a comparison of two sequences can reveal at most one difference per site. This means that the observed number of changes is no longer linear with time, but instead flattens out. Even at intermediate genetic distances, with phylogenetic data still sufficient to estimate topology, signal for the overall scale of the tree can be weak under complex likelihood models, leading to highly uncertain molecular clock estimates.[26]
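
Saturation can be illustrated with a simple substitution model. The sketch below assumes the Jukes-Cantor model for nucleotides, which is a choice made here for illustration and is not named in the text. Under that model the expected proportion of differing sites is p = 3/4 (1 - exp(-4d/3)), where d is the true number of substitutions per site, and p levels off near 0.75 however large d becomes.

```python
import math


def expected_p_distance(d):
    """Expected proportion of differing sites under Jukes-Cantor.

    `d` is the true number of substitutions per site separating the
    two sequences.
    """
    return 0.75 * (1.0 - math.exp(-4.0 * d / 3.0))


def jc_corrected_distance(p):
    """Invert the relation above to recover d from an observed proportion p."""
    if not 0.0 <= p < 0.75:
        raise ValueError("p must lie in [0, 0.75) for the correction to be defined")
    return -0.75 * math.log(1.0 - 4.0 * p / 3.0)


# Observed differences track the true distance at first, then flatten out.
for d in (0.01, 0.1, 0.5, 1.0, 2.0, 5.0):
    p = expected_p_distance(d)
    print(f"true d = {d:4.2f}   expected observed p = {p:.3f}")
```

The correction recovers d well when p is small, but close to 0.75 a tiny change in p corresponds to a very large change in d, so estimates from saturated data become unreliable.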

At very short time scales, many differences between samples do not represent fixation of different sequences in the different populations. Instead, they represent alternative alleles that were both present as part of a polymorphism in the common ancestor. The inclusion of differences that have not yet become fixed leads to a potentially dramatic inflation of the apparent rate of the molecular clock at very short timescales.[27][28]
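
A deliberately simplified sketch of this inflation follows. It assumes neutral mutations, a haploid ancestral population of constant size, and two sampled lineages that on average trace back a further `ancestral_size` generations before the split to reach their common ancestor. These assumptions, the parameter values, and the function name are illustrative and are not taken from the cited studies. Under them the expected distance is 2μ(t + N), so dividing by 2t gives an apparent rate of μ(1 + N/t).

```python
def apparent_rate(mu, split_time, ancestral_size):
    """Apparent per-generation rate when the distance is divided by 2 * split_time.

    mu             : true neutral mutation rate per site per generation
    split_time     : generations since the two populations separated
    ancestral_size : haploid size of the ancestral population; assumed to
                     equal the mean extra time (in generations) needed for
                     the two lineages to reach their common ancestor
    """
    expected_distance = 2.0 * mu * (split_time + ancestral_size)
    return expected_distance / (2.0 * split_time)


mu = 1e-8          # hypothetical mutation rate
n_ancestral = 10000  # hypothetical ancestral population size

for t in (1e3, 1e4, 1e5, 1e6, 1e7):
    ratio = apparent_rate(mu, t, n_ancestral) / mu
    print(f"split {t:>10.0f} generations ago: apparent rate = {ratio:.3f} x true rate")
```

The inflation is large when the split time is short relative to the ancestral population size and fades as the split time grows, which matches the qualitative pattern described above.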