Computer graphics deals with generating images with the aid of computers. Today, computer graphics is a core technology in digital photography, film, video games, cell phone and computer displays, and many specialized applications. A great deal of specialized hardware and software has been developed, with the displays of most devices being driven by computer graphics hardware. It is a vast and recently developed area of computer science. The phrase was coined in 1960 by computer graphics researchers Verne Hudson and William Fetter of Boeing. It is often abbreviated as CG, or typically in the context of film as computer generated imagery (CGI). The non-artistic aspects of computer graphics are the subject of computer science research.

Some topics in computer graphics include user interface design, sprite graphics, rendering, ray tracing, geometry processing, computer animation, vector graphics, 3D modeling, shaders, GPU design, implicit surfaces, visualization, scientific computing, image processing, computational photography, scientific visualization, computational geometry and computer vision, among others. The overall methodology depends heavily on the underlying sciences of geometry, optics, physics, and perception.

Computer graphics is responsible for displaying art and image data effectively and meaningfully to the consumer. It is also used for processing image data received from the physical world, such as photo and video content. Computer graphics development has had a significant impact on many types of media and has revolutionized animation, movies, advertising, and video games in general.

Overview

The term computer graphics has been used in a broad sense to describe "almost everything on computers that is not text or sound". Typically, the term computer graphics refers to several different things:

- the representation and manipulation of image data by a computer

- the various technologies used to create and manipulate images

- methods for digitally synthesizing and manipulating visual content, see study of computer graphics

Today, computer graphics is widespread. Such imagery is found in and on television, newspapers, weather reports, and in a variety of medical investigations and surgical procedures. A well-constructed graph can present complex statistics in a form that is easier to understand and interpret. In the media "such graphs are used to illustrate papers, reports, theses", and other presentation material.

Many tools have been developed to visualize data. Computer-generated imagery can be categorized into several different types: two dimensional (2D), three dimensional (3D), and animated graphics. As technology has improved, 3D computer graphics have become more common, but 2D computer graphics are still widely used. Computer graphics has emerged as a sub-field of computer science which studies methods for digitally synthesizing and manipulating visual content. Over the past decade, other specialized fields have developed, such as information visualization and scientific visualization, the latter more concerned with "the visualization of three dimensional phenomena (architectural, meteorological, medical, biological, etc.), where the emphasis is on realistic renderings of volumes, surfaces, illumination sources, and so forth, perhaps with a dynamic (time) component".

History

The precursor sciences to the development of modern computer graphics were the advances in electrical engineering, electronics, and television that took place during the first half of the twentieth century. Screens could display art since the Lumiere brothers' use of mattes to create special effects for the earliest films dating from 1895, but such displays were limited and not interactive. The first cathode ray tube, the Braun tube, was invented in 1897 – it in turn would permit the oscilloscope and the military control panel – the more direct precursors of the field, as they provided the first two-dimensional electronic displays that responded to programmatic or user input. Nevertheless, computer graphics remained relatively unknown as a discipline until the 1950s and the post-World War II period – during which time the discipline emerged from a combination of both pure university and laboratory academic research into more advanced computers and the United States military's further development of technologies like radar, advanced aviation, and rocketry developed during the war. New kinds of displays were needed to process the wealth of information resulting from such projects, leading to the development of computer graphics as a discipline.

1950s

Early projects like the Whirlwind and SAGE Projects introduced the CRT as a viable display and interaction interface and introduced the light pen as an input device. Douglas T. Ross of the Whirlwind SAGE system performed a personal experiment in which he wrote a small program that captured the movement of his finger and displayed its vector (his traced name) on a display scope. One of the first interactive video games to feature recognizable, interactive graphics – Tennis for Two – was created for an oscilloscope by William Higinbotham to entertain visitors in 1958 at Brookhaven National Laboratory and simulated a tennis match. In 1959, Douglas T. Ross innovated again while working at MIT on transforming mathematical statements into computer-generated 3D machine tool vectors by taking the opportunity to create a display scope image of a Disney cartoon character.

Electronics pioneer Hewlett-Packard went public in 1957 after incorporating the decade prior, and established strong ties with Stanford University through its founders, who were alumni. This began the decades-long transformation of the southern San Francisco Bay Area into the world's leading computer technology hub – now known as Silicon Valley. The field of computer graphics developed with the emergence of computer graphics hardware.

Further advances in computing led to greater advancements in interactive computer graphics. In 1959, the TX-2 computer was developed at MIT's Lincoln Laboratory. The TX-2 integrated a number of new man-machine interfaces. A light pen could be used to draw sketches on the computer using Ivan Sutherland's revolutionary Sketchpad software. Using a light pen, Sketchpad allowed one to draw simple shapes on the computer screen, save them and even recall them later. The light pen itself had a small photoelectric cell in its tip. This cell emitted an electronic pulse whenever it was placed in front of a computer screen and the screen's electron gun fired directly at it. By simply timing the electronic pulse with the current location of the electron gun, it was easy to pinpoint exactly where the pen was on the screen at any given moment. Once that was determined, the computer could then draw a cursor at that location. Sutherland seemed to find the perfect solution for many of the graphics problems he faced. Even today, many standards of computer graphics interfaces trace their origins to this early Sketchpad program. One example of this is in drawing constraints. If one wants to draw a square, for example, one does not have to worry about drawing four lines perfectly to form the edges of the box. One can simply specify that one wants to draw a box, and then specify the location and size of the box. The software will then construct a perfect box, with the right dimensions and at the right location. Another example is that Sutherland's software modeled objects, not just pictures of objects. In other words, with a model of a car, one could change the size of the tires without affecting the rest of the car, or stretch the body of the car without deforming the tires.
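The light-pen timing idea described above can be illustrated with a small sketch. It assumes an idealized display whose beam sweeps the screen row by row and visits one pixel per clock tick; that scan pattern, the function name `pen_position`, and the tick counter are assumptions made for illustration and do not describe the TX-2 hardware.

```python
def pen_position(ticks_since_frame_start, width, height):
    """Map the time of the pen's pulse to the beam position at that instant.

    Assumes (hypothetically) that the beam starts each frame at the top-left
    pixel and advances one pixel per tick, left to right, top to bottom.
    """
    if not 0 <= ticks_since_frame_start < width * height:
        raise ValueError("pulse did not occur within a single frame")
    # Full rows already swept give y; the remainder gives x.
    y, x = divmod(ticks_since_frame_start, width)
    return x, y


# The pen fires 5 ticks into a frame on a 4x3 display: second row, second column.
print(pen_position(5, width=4, height=3))
```

Because the computer always knows where it has aimed the beam, the pulse time alone is enough to recover the pen's location.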

1960s

The phrase "computer graphics" has been credited to William Fetter, a graphic designer for Boeing in 1960. Fetter in turn attributed it to Verne Hudson, also at Boeing.

In 1961 another student at MIT, Steve Russell, created another important title in the history of video games, Spacewar! Written for the DEC PDP-1, Spacewar! was an instant success; copies started flowing to other PDP-1 owners, and eventually DEC got a copy. The engineers at DEC used it as a diagnostic program on every new PDP-1 before shipping it. The sales force picked up on this quickly enough and, when installing new units, would run the "world's first video game" for their new customers. (Higinbotham's Tennis for Two had beaten Spacewar! by almost three years, but it was almost unknown outside of a research or academic setting.)

At around the same time (1961–1962) in the University of Cambridge, Elizabeth Waldram wrote code to display radio-astronomy maps on a cathode ray tube.

E. E. Zajac, a scientist at Bell Telephone Laboratory (BTL), created a film called "Simulation of a two-giro gravity attitude control system" in 1963. In this computer-generated film, Zajac showed how the attitude of a satellite could be altered as it orbits the Earth. He created the animation on an IBM 7090 mainframe computer. Also at BTL, Ken Knowlton, Frank Sinden, Ruth A. Weiss and Michael Noll started working in the computer graphics field. Sinden created a film called Force, Mass and Motion illustrating Newton's laws of motion in operation. Around the same time, other scientists were creating computer graphics to illustrate their research. At Lawrence Radiation Laboratory, Nelson Max created the films Flow of a Viscous Fluid and Propagation of Shock Waves in a Solid Form. Boeing Aircraft created a film called Vibration of an Aircraft.

In the early 1960s, automobiles would also provide a boost through the early work of Pierre Bézier at Renault, who used Paul de Casteljau's curves – now called Bézier curves after Bézier's work in the field – to develop 3D modeling techniques for Renault car bodies. These curves would form the foundation for much curve-modeling work in the field, as curves – unlike polygons – are mathematically complex entities to draw and model well.
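As an illustration of how such a curve can be evaluated, the following is a minimal sketch of de Casteljau's algorithm, which finds a point on a Bézier curve by repeatedly interpolating between neighbouring control points. The function names and the sample control points are assumptions chosen for the example.

```python
def lerp(p, q, t):
    """Linearly interpolate between points p and q at parameter t."""
    return tuple((1 - t) * a + t * b for a, b in zip(p, q))


def de_casteljau(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] by repeated interpolation."""
    points = [tuple(p) for p in control_points]
    if not points:
        raise ValueError("at least one control point is required")
    # Each pass replaces n points with n - 1 interpolated points.
    while len(points) > 1:
        points = [lerp(p, q, t) for p, q in zip(points, points[1:])]
    return points[0]


# A quadratic curve: starts at (0, 0), is pulled toward (1, 2), ends at (2, 0).
arch = [(0, 0), (1, 2), (2, 0)]
print(de_casteljau(arch, 0.5))  # midpoint of the curve
```

The curve passes through the first and last control points but is only attracted toward the middle one, which is what makes control points a convenient design handle.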

It was not long before major corporations started taking an interest in computer graphics. TRW, Lockheed-Georgia, General Electric and Sperry Rand are among the many companies that were getting started in computer graphics by the mid-1960s. IBM was quick to respond to this interest by releasing the IBM 2250 graphics terminal, the first commercially available graphics computer. Ralph Baer, a supervising engineer at Sanders Associates, came up with a home video game in 1966 that was later licensed to Magnavox and called the Odyssey. While very simplistic, and requiring fairly inexpensive electronic parts, it allowed the player to move points of light around on a screen. It was the first consumer computer graphics product. David C. Evans was director of engineering at Bendix Corporation's computer division from 1953 to 1962, after which he worked for the next five years as a visiting professor at Berkeley. There he continued his interest in computers and how they interfaced with people. In 1966, the University of Utah recruited Evans to form a computer science program, and computer graphics quickly became his primary interest. This new department would become the world's primary research center for computer graphics through the 1970s.

Also, in 1966, Ivan Sutherland continued to innovate at MIT when he invented the first computer-controlled head-mounted display (HMD). It displayed two separate wireframe images, one for each eye. This allowed the viewer to see the computer scene in stereoscopic 3D. The heavy hardware required for supporting the display and tracker was called the Sword of Damocles because of the potential danger if it were to fall upon the wearer. After receiving his Ph.D. from MIT, Sutherland became Director of Information Processing at ARPA (Advanced Research Projects Agency), and later became a professor at Harvard. In 1967 Sutherland was recruited by Evans to join the computer science program at the University of Utah – a development which would turn that department into one of the most important research centers in graphics for nearly a decade thereafter, eventually producing some of the most important pioneers in the field. There Sutherland perfected his HMD; twenty years later, NASA would re-discover his techniques in their virtual reality research. At Utah, Sutherland and Evans were highly sought after as consultants by large companies, but they were frustrated at the lack of graphics hardware available at the time, so they started formulating a plan to start their own company.

In 1968, Dave Evans and Ivan Sutherland founded the first computer graphics hardware company, Evans & Sutherland. While Sutherland originally wanted the company to be located in Cambridge, Massachusetts, Salt Lake City was instead chosen due to its proximity to the professors' research group at the University of Utah.

Also in 1968 Arthur Appel described the first ray casting algorithm, the first of a class of ray tracing-based rendering algorithms that have since become fundamental in achieving photorealism in graphics by modeling the paths that rays of light take from a light source, to surfaces in a scene, and into the camera.
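The core operation of ray casting, testing whether a ray hits an object, can be sketched as follows. This is a minimal illustration and not Appel's original formulation: it assumes a single sphere, casts rays from the eye into the scene (the direction commonly used in practice), and uses hypothetical names such as `ray_sphere_hit` and `cast`.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None.

    Solves |origin + t * direction - center|^2 = radius^2 for t >= 0.
    The direction is assumed to be non-zero.
    """
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4 * a * c
    if disc < 0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in ((-b - root) / (2 * a), (-b + root) / (2 * a)):
        if t >= 0:
            return t
    return None  # the sphere is entirely behind the ray origin


def cast(width, height, center, radius):
    """Cast one ray per pixel from the eye at the origin, looking down -z."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel centre onto an image plane at z = -1.
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            hit = ray_sphere_hit((0, 0, 0), (x, y, -1), center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


for line in cast(16, 8, center=(0, 0, -3), radius=1):
    print(line)
```

A full ray tracer would go on to shade each hit point and spawn further rays for shadows and reflections; this sketch stops at visibility.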

In 1969, the ACM initiated a Special Interest Group on Graphics (SIGGRAPH), which organizes conferences, graphics standards, and publications within the field of computer graphics. By 1973, the first annual SIGGRAPH conference was held, which has become one of the focuses of the organization. SIGGRAPH has grown in size and importance as the field of computer graphics has expanded over time.

1970s

Subsequently, a number of breakthroughs in the field – particularly important early breakthroughs in the transformation of graphics from utilitarian to realistic – occurred at the University of Utah in the 1970s, which had hired Ivan Sutherland. He was paired with David C. Evans to teach an advanced computer graphics class, which contributed a great deal of founding research to the field and taught several students who would grow to found several of the industry's most important companies – namely Pixar, Silicon Graphics, and Adobe Systems. Tom Stockham led the image processing group at UU which worked closely with the computer graphics lab.

One of these students was Edwin Catmull. Catmull had just come from The Boeing Company and had been working on his degree in physics. Growing up on Disney, Catmull loved animation yet quickly discovered that he did not have the talent for drawing. Now Catmull (along with many others) saw computers as the natural progression of animation and they wanted to be part of the revolution. The first computer animation that Catmull saw was his own. He created an animation of his hand opening and closing. He also pioneered texture mapping to paint textures on three-dimensional models in 1974, now considered one of the fundamental techniques in 3D modeling. It became one of his goals to produce a feature-length motion picture using computer graphics – a goal he would achieve two decades later after his founding role in Pixar. In the same class, Fred Parke created an animation of his wife's face. The two animations were included in the 1976 feature film Futureworld.

As the UU computer graphics laboratory was attracting people from all over, John Warnock was another of those early pioneers; he later founded Adobe Systems and created a revolution in the publishing world with his PostScript page description language. Adobe would later go on to create the industry-standard photo editing software in Adobe Photoshop and a prominent movie industry special effects program in Adobe After Effects.

James Clark was also there; he later founded Silicon Graphics, a maker of advanced rendering systems that would dominate the field of high-end graphics until the early 1990s.

A major advance in 3D computer graphics was created at UU by these early pioneers – hidden surface determination. In order to draw a representation of a 3D object on the screen, the computer must determine which surfaces are "behind" the object from the viewer's perspective, and thus should be "hidden" when the computer creates (or renders) the image. The 3D Core Graphics System (or Core) was the first graphical standard to be developed. A group of 25 experts of the ACM Special Interest Group SIGGRAPH developed this "conceptual framework". The specifications were published in 1977, and it became a foundation for many future developments in the field.
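One widely used way to resolve which surface is visible at each pixel is a depth buffer (z-buffer), sketched below. This is only one of several hidden-surface techniques and is offered as an illustration, not as the specific method referred to above; the function name and the fragment format `(x, y, depth, colour)` are assumptions made for the example.

```python
def draw_fragments(width, height, fragments, background="."):
    """Keep, for every pixel, only the fragment closest to the viewer.

    Each fragment is (x, y, depth, colour); smaller depth means nearer.
    """
    depth = [[float("inf")] * width for _ in range(height)]
    colour = [[background] * width for _ in range(height)]
    for x, y, z, c in fragments:
        inside = 0 <= x < width and 0 <= y < height
        # A fragment is drawn only if nothing nearer has been drawn there.
        if inside and z < depth[y][x]:
            depth[y][x] = z
            colour[y][x] = c
    return ["".join(row) for row in colour]


# Two surfaces overlap at pixel (0, 0) and again at pixel (1, 0).
fragments = [(0, 0, 5.0, "A"), (0, 0, 2.0, "B"), (1, 0, 1.0, "C"), (1, 0, 3.0, "D")]
print(draw_fragments(2, 1, fragments))
```

The result does not depend on the order in which surfaces are drawn, which is the main appeal of the approach.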

Also in the 1970s, Henri Gouraud, Jim Blinn and Bui Tuong Phong contributed to the foundations of shading in CGI via the development of the Gouraud shading and Blinn–Phong shading models, allowing graphics to move beyond a "flat" look to a look more accurately portraying depth. Jim Blinn also innovated further in 1978 by introducing bump mapping, a technique for simulating uneven surfaces, and the predecessor to many more advanced kinds of mapping used today.
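To make the idea concrete, here is a minimal sketch of Blinn–Phong lighting at a single surface point, combining a diffuse term with a specular highlight computed from the half-vector between the light and viewer directions. The coefficient names and default values (`kd`, `ks`, `shininess`) are illustrative assumptions. Gouraud shading would evaluate lighting like this at the vertices of a polygon and interpolate the results across its interior.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blinn_phong(normal, to_light, to_viewer, kd=0.7, ks=0.3, shininess=32):
    """Return a scalar light intensity at a surface point."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    n_dot_l = dot(n, l)
    if n_dot_l <= 0:
        return 0.0  # the light is behind the surface
    # The half-vector lies midway between the light and viewer directions.
    half = tuple(a + b for a, b in zip(l, v))
    if not any(half):
        specular = 0.0  # light and viewer are exactly opposite
    else:
        specular = ks * max(dot(n, normalize(half)), 0.0) ** shininess
    return kd * n_dot_l + specular


# Light and viewer directly above the surface versus light at 45 degrees.
print(blinn_phong((0, 0, 1), (0, 0, 1), (0, 0, 1)))
print(blinn_phong((0, 0, 1), (1, 0, 1), (0, 0, 1)))
```

The varying intensity across a curved surface is what gives shaded objects their sense of depth compared with flat, uniformly coloured polygons.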

The modern video game arcade as it is known today emerged in the 1970s, with the first arcade games using real-time 2D sprite graphics. Pong in 1972 was one of the first hit arcade cabinet games. Speed Race in 1974 featured sprites moving along a vertically scrolling road. Gun Fight in 1975 featured human-looking animated characters, while Space Invaders in 1978 featured a large number of animated figures on screen; both used a specialized barrel shifter circuit made from discrete chips to help their Intel 8080 microprocessor animate their framebuffer graphics.

1980s

The 1980s began to see the modernization and commercialization of computer graphics. As the home computer proliferated, a subject which had previously been an academics-only discipline was adopted by a much larger audience, and the number of computer graphics developers increased significantly.

In the early 1980s, metal–oxide–semiconductor (MOS) very-large-scale integration (VLSI) technology led to the availability of 16-bit central processing unit (CPU) microprocessors and the first graphics processing unit (GPU) chips, which began to revolutionize computer graphics, enabling high-resolution graphics for computer graphics terminals as well as personal computer (PC) systems. NEC's µPD7220 was the first GPU, fabricated on a fully integrated NMOS VLSI chip. It supported up to 1024x1024 resolution, and laid the foundations for the emerging PC graphics market. It was used in a number of graphics cards, and was licensed for clones such as the Intel 82720, the first of Intel's graphics processing units. MOS memory also became cheaper in the early 1980s, enabling the development of affordable framebuffer memory, notably video RAM (VRAM) introduced by Texas Instruments (TI) in the mid-1980s. In 1984, Hitachi released the ARTC HD63484, the first complementary MOS (CMOS) GPU. It was capable of displaying high-resolution in color mode and up to 4K resolution in monochrome mode, and it was used in a number of graphics cards and terminals during the late 1980s. In 1986, TI introduced the TMS34010, the first fully programmable MOS graphics processor.

Computer graphics terminals during this decade became increasingly intelligent, semi-standalone and standalone workstations. Graphics and application processing were increasingly migrated to the intelligence in the workstation, rather than continuing to rely on central mainframe and mini-computers. Typical of the early move to high-resolution computer graphics intelligent workstations for the computer-aided engineering market were the Orca 1000, 2000 and 3000 workstations, developed by Orcatech of Ottawa, a spin-off from Bell-Northern Research, and led by David Pearson, an early workstation pioneer. The Orca 3000 was based on the 16-bit Motorola 68000 microprocessor and AMD bit-slice processors, and had Unix as its operating system. It was targeted squarely at the sophisticated end of the design engineering sector. Artists and graphic designers began to see the personal computer, particularly the Commodore Amiga and Macintosh, as a serious design tool, one that could save time and draw more accurately than other methods. The Macintosh remains a highly popular tool for computer graphics among graphic design studios and businesses. Modern computers, dating from the 1980s, often use graphical user interfaces (GUI) to present data and information with symbols, icons and pictures, rather than text. Graphics are one of the five key elements of multimedia technology.

In the field of realistic rendering, Japan's Osaka University developed the LINKS-1 Computer Graphics System, a supercomputer that used up to 257 Zilog Z8001 microprocessors, in 1982, for the purpose of rendering realistic 3D computer graphics. According to the Information Processing Society of Japan: "The core of 3D image rendering is calculating the luminance of each pixel making up a rendered surface from the given viewpoint, light source, and object position. The LINKS-1 system was developed to realize an image rendering methodology in which each pixel could be parallel processed independently using ray tracing. By developing a new software methodology specifically for high-speed image rendering, LINKS-1 was able to rapidly render highly realistic images. It was used to create the world's first 3D planetarium-like video of the entire heavens that was made completely with computer graphics. The video was presented at the Fujitsu pavilion at the 1985 International Exposition in Tsukuba." The LINKS-1 was the world's most powerful computer, as of 1984. Also in the field of realistic rendering, the general rendering equation of David Immel and James Kajiya was developed in 1986 – an important step towards implementing global illumination, which is necessary to pursue photorealism in computer graphics.
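For reference, the rendering equation mentioned above is commonly written in the following form. The notation is the conventional textbook one rather than anything taken from this article: $L_o$ is the outgoing radiance at surface point $x$ in direction $\omega_o$, $L_e$ the emitted radiance, $f_r$ the surface's reflectance function (BRDF), $L_i$ the incoming radiance from direction $\omega_i$, $n$ the surface normal, and $\Omega$ the hemisphere above the surface.

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, \mathrm{d}\omega_i
```

Because the incoming radiance at one point is the outgoing radiance from other points in the scene, the equation is recursive, which is why solving it amounts to computing global illumination.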

The continuing popularity of Star Wars and other science fiction franchises was relevant in cinematic CGI at this time, as Lucasfilm and Industrial Light & Magic became known as the "go-to" house by many other studios for topnotch computer graphics in film. Important advances in chroma keying ("bluescreening", etc.) were made for the later films of the original trilogy. Two other pieces of video would also outlast the era as historically relevant: Dire Straits' iconic, near-fully-CGI video for their song "Money for Nothing" in 1985, which popularized CGI among music fans of that era, and a scene from Young Sherlock Holmes the same year featuring the first fully CGI character in a feature movie (an animated stained-glass knight). In 1988, the first shaders – small programs designed specifically to do shading as a separate algorithm – were developed by Pixar, which had already spun off from Industrial Light & Magic as a separate entity – though the public would not see the results of such technological progress until the next decade. In the late 1980s, Silicon Graphics (SGI) computers were used to create some of the first fully computer-generated short films at Pixar, and Silicon Graphics machines were considered a high-water mark for the field during the decade.

The 1980s is also called the golden era of videogames; millions-selling systems from Atari, Nintendo and Sega, among other companies, exposed computer graphics for the first time to a new, young, and impressionable audience – as did MS-DOS-based personal computers, Apple IIs, Macs, and Amigas, all of which also allowed users to program their own games if skilled enough. For the arcades, advances were made in commercial, real-time 3D graphics. In 1988, the first dedicated real-time 3D graphics boards were introduced for arcades, with the Namco System 21 and Taito Air System. On the professional side, Evans & Sutherland and SGI developed 3D raster graphics hardware that directly influenced the later single-chip graphics processing unit (GPU), a technology where a separate and very powerful chip is used in parallel processing with a CPU to optimize graphics.

The decade also saw computer graphics applied to many additional professional markets, including location-based entertainment and education with the E&S Digistar, vehicle design, vehicle simulation, and chemistry.

1990s

The 1990s' overwhelming note was the emergence of 3D modeling on a mass scale and an impressive rise in the quality of CGI generally. Home computers became able to take on rendering tasks that previously had been limited to workstations costing thousands of dollars; as 3D modelers became available for home systems, the popularity of Silicon Graphics workstations declined and powerful Microsoft Windows and Apple Macintosh machines running Autodesk products like 3D Studio or other home rendering software ascended in importance. By the end of the decade, the GPU would begin its rise to the prominence it still enjoys today.

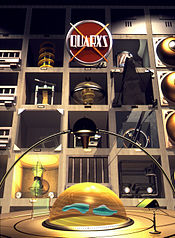

The field began to see the first rendered graphics that could truly pass as photorealistic to the untrained eye (though they could not yet do so with a trained CGI artist), and 3D graphics became far more popular in gaming, multimedia, and animation. The very first computer graphics TV series were created in France at the end of the 1980s and the beginning of the 1990s: La Vie des bêtes by studio Mac Guff Ligne (1988), Les Fables Géométriques (1989–1991) by studio Fantôme, and Quarxs, the first HDTV computer graphics series by Maurice Benayoun and François Schuiten (studio Z-A production, 1990–1993).

In film, Pixar began its serious commercial rise in this era under Edwin Catmull, with its first major film release, in 1995 – Toy Story – a critical and commercial success of nine-figure magnitude. The studio to invent the programmable shader would go on to have many animated hits, and its work on prerendered video animation is still considered an industry leader and research trail breaker.

In video games, in 1992, Virtua Racing, running on the Sega Model 1 arcade system board, laid the foundations for fully 3D racing games and popularized real-time 3D polygonal graphics among a wider audience in the video game industry. The Sega Model 2 in 1993 and Sega Model 3 in 1996 subsequently pushed the boundaries of commercial, real-time 3D graphics. Back on the PC, Wolfenstein 3D, Doom and Quake, three of the first massively popular 3D first-person shooter games, were released by id Software to critical and popular acclaim during this decade using a rendering engine innovated primarily by John Carmack. The Sony PlayStation, Sega Saturn, and Nintendo 64, among other consoles, sold in the millions and popularized 3D graphics for home gamers. Certain late-1990s first-generation 3D titles came to be seen as influential in popularizing 3D graphics among console users, such as the platform games Super Mario 64 and The Legend of Zelda: Ocarina of Time, and early 3D fighting games like Virtua Fighter, Battle Arena Toshinden, and Tekken.

Technology and algorithms for rendering continued to improve greatly. In 1996, Krishnamurthy and Levoy invented normal mapping – an improvement on Jim Blinn's bump mapping. 1999 saw Nvidia release the seminal GeForce 256, the first home video card billed as a graphics processing unit or GPU, which in its own words contained "integrated transform, lighting, triangle setup/clipping, and rendering engines". By the end of the decade, computers adopted common frameworks for graphics processing such as DirectX and OpenGL. Since then, computer graphics have only become more detailed and realistic, due to more powerful graphics hardware and 3D modeling software. AMD also became a leading developer of graphics boards in this decade, creating a "duopoly" in the field which exists to this day.

2000s

CGI became ubiquitous in earnest during this era. Video games and CGI cinema had spread the reach of computer graphics to the mainstream by the late 1990s and continued to do so at an accelerated pace in the 2000s. CGI was also adopted en masse for television advertisements in the late 1990s and 2000s, and so became familiar to a massive audience.

The continued rise and increasing sophistication of the graphics processing unit were crucial to this decade, and 3D rendering capabilities became a standard feature as 3D-graphics GPUs came to be considered a necessity for desktop computer makers to offer. The Nvidia GeForce line of graphics cards dominated the market early in the decade, with occasional significant competing presence from ATI. As the decade progressed, even low-end machines usually contained a 3D-capable GPU of some kind as Nvidia and AMD both introduced low-priced chipsets and continued to dominate the market. Shaders, which had been introduced in the 1980s to perform specialized processing on the GPU, would by the end of the decade become supported on most consumer hardware, speeding up graphics considerably and allowing for greatly improved texture and shading in computer graphics via the widespread adoption of normal mapping, bump mapping, and a variety of other techniques allowing the simulation of a great amount of detail.

Computer graphics used in films and video games gradually began to be realistic to the point of entering the uncanny valley. CGI movies proliferated, with traditional animated cartoon films like Ice Age and Madagascar as well as numerous Pixar offerings like Finding Nemo dominating the box office in this field. Final Fantasy: The Spirits Within, released in 2001, was the first fully computer-generated feature film to use photorealistic CGI characters and be fully made with motion capture. The film was not a box-office success, however. Some commentators have suggested this may be partly because the lead CGI characters had facial features which fell into the "uncanny valley". Other animated films like The Polar Express drew attention at this time as well. Star Wars also resurfaced with its prequel trilogy and the effects continued to set a bar for CGI in film.

In videogames, the Sony PlayStation 2 and 3, the Microsoft Xbox line of consoles, and offerings from Nintendo such as the GameCube maintained a large following, as did the Windows PC. Marquee CGI-heavy titles like the series of Grand Theft Auto, Assassin's Creed, Final Fantasy, BioShock, Kingdom Hearts, Mirror's Edge and dozens of others continued to approach photorealism, grow the video game industry and impress, until that industry's revenues became comparable to those of movies. Microsoft made a decision to expose DirectX more easily to the independent developer world with the XNA program, but it was not a success. DirectX itself remained a commercial success, however. OpenGL continued to mature as well, and it and DirectX improved greatly; the second-generation shader languages HLSL and GLSL began to be popular in this decade.

In scientific computing, the GPGPU technique to pass large amounts of data bidirectionally between a GPU and CPU was invented, speeding up analysis on many kinds of bioinformatics and molecular biology experiments. The technique has also been used for Bitcoin mining and has applications in computer vision.

2010s

In the 2010s, CGI has been nearly ubiquitous in video, pre-rendered graphics are nearly scientifically photorealistic, and real-time graphics on a suitably high-end system may simulate photorealism to the untrained eye.

Texture mapping has matured into a multistage process with many layers; generally, it is not uncommon to implement texture mapping, bump mapping or isosurfaces or normal mapping, lighting maps including specular highlights and reflection techniques, and shadow volumes into one rendering engine using shaders, which are maturing considerably. Shaders are now very nearly a necessity for advanced work in the field, providing considerable complexity in manipulating pixels, vertices, and textures on a per-element basis, and countless possible effects. Their shader languages HLSL and GLSL are active fields of research and development. Physically based rendering or PBR, which implements many maps and performs advanced calculation to simulate real optic light flow, is an active research area as well, along with advanced areas like ambient occlusion, subsurface scattering, Rayleigh scattering, photon mapping, and many others. Experiments into the processing power required to provide graphics in real time at ultra-high-resolution modes like 4K Ultra HD are beginning, though beyond reach of all but the highest-end hardware.

In cinema, most animated movies are CGI now; a great many animated CGI films are made per year, but few, if any, attempt photorealism due to continuing fears of the uncanny valley. Most are 3D cartoons.

In video games, the Microsoft Xbox One, Sony PlayStation 4, and Nintendo Switch currently dominate the home space and are all capable of highly advanced 3D graphics; the Windows PC is still one of the most active gaming platforms as well.

Image types

Two-dimensional

2D computer graphics are the computer-based generation of digital images, mostly from two-dimensional models, such as digital images, and by techniques specific to them.

2D computer graphics are mainly used in applications that were originally developed upon traditional printing and drawing technologies such as typography. In those applications, the two-dimensional image is not just a representation of a real-world object, but an independent artifact with added semantic value; two-dimensional models are therefore preferred because they give more direct control of the image than 3D computer graphics, whose approach is more akin to photography than to typography.

Pixel art

A large form of digital art, pixel art is created through the use of raster graphics software, where images are edited on the pixel level. Graphics in most old (or relatively limited) computer and video games, graphing calculator games, and many mobile phone games are mostly pixel art.

Sprite graphics

A sprite is a two-dimensional image or animation that is integrated into a larger scene. Initially the term covered only graphical objects handled separately from the memory bitmap of a video display; it now includes various kinds of graphical overlays.

Originally, sprites were a method of integrating unrelated bitmaps so that they appeared to be part of the normal bitmap on a screen, such as creating an animated character that can be moved on a screen without altering the data defining the overall screen. Such sprites can be created by either electronic circuitry or software. In circuitry, a hardware sprite is a hardware construct that employs custom DMA channels to integrate visual elements with the main screen by superimposing two discrete video sources. Software can simulate this through specialized rendering methods.
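
The software approach can be illustrated with a minimal sketch. It assumes a frame stored as a list of rows and uses `None` as a hypothetical marker for transparent sprite pixels; the function name `draw_sprite` is illustrative and not taken from any particular engine. The key point is that the background data is copied, never modified, so the sprite can be drawn at a new position on each frame.

```python
TRANSPARENT = None  # assumed marker meaning "no sprite pixel here"

def draw_sprite(background, sprite, left, top):
    """Return a new frame with `sprite` overlaid on a copy of `background`."""
    frame = [row[:] for row in background]  # copy rows; background stays intact
    for sy, sprite_row in enumerate(sprite):
        for sx, pixel in enumerate(sprite_row):
            x, y = left + sx, top + sy
            inside = 0 <= y < len(frame) and 0 <= x < len(frame[0])
            # Skip transparent pixels and anything that falls off the screen.
            if pixel is not TRANSPARENT and inside:
                frame[y][x] = pixel
    return frame

background = [["."] * 6 for _ in range(4)]
sprite = [[None, "#"],
          ["#", "#"]]

frame_a = draw_sprite(background, sprite, 0, 1)  # sprite at the left
frame_b = draw_sprite(background, sprite, 3, 1)  # same sprite, moved right
for row in frame_b:
    print("".join(row))
```

Because each frame is composed from the untouched background, moving the sprite requires no "erase" step, which is roughly what hardware sprites achieve by mixing two video sources.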

Vector graphics

Vector graphics formats are complementary to raster graphics. Raster graphics is the representation of images as an array of pixels and is typically used for the representation of photographic images. Vector graphics consists of encoding information about the shapes and colors that make up the image, which can allow for more flexibility in rendering. There are instances when working with vector tools and formats is best practice, and instances when working with raster tools and formats is best practice. There are times when both formats come together. An understanding of the advantages and limitations of each technology and the relationship between them is most likely to result in efficient and effective use of tools.
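
The relationship between the two representations can be sketched in a few lines. In this illustrative example (the function name and the unit-square coordinate convention are assumptions), the "vector image" is just three numbers describing a circle, and rasterizing it means sampling that description on a pixel grid of whatever size is needed.

```python
def rasterize_circle(cx, cy, radius, size):
    """Sample a circle given in unit-square coordinates onto a size x size grid."""
    rows = []
    for j in range(size):
        row = ""
        for i in range(size):
            # Sample at the centre of each pixel, in unit-square coordinates.
            x = (i + 0.5) / size
            y = (j + 0.5) / size
            inside = (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2
            row += "#" if inside else "."
        rows.append(row)
    return rows

circle = (0.5, 0.5, 0.4)  # the whole vector description: centre and radius

small = rasterize_circle(*circle, size=8)    # coarse raster
large = rasterize_circle(*circle, size=24)   # finer raster, same description
print("\n".join(small))
```

The same shape description yields a raster at any resolution, whereas enlarging the 8 by 8 raster itself could only repeat or blend its existing pixels.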

Three-dimensional

3D graphics, in contrast to 2D graphics, use a three-dimensional representation of geometric data that is stored in the computer for the purposes of performing calculations and rendering images. Such images may be rendered for later display or for real-time viewing.

Despite these differences, 3D computer graphics rely on algorithms similar to those of 2D computer graphics: 2D vector graphics in the wire-frame model and 2D raster graphics in the final rendered display. In computer graphics software, the distinction between 2D and 3D is occasionally blurred; 2D applications may use 3D techniques to achieve effects such as lighting, and primarily 3D applications may use 2D rendering techniques.

3D computer graphics are often referred to as 3D models, but the two are not the same. A 3D model is the representation of any 3D object and is contained within the graphical data file, apart from the rendering. Until it is visually displayed, a model is not a graphic. Because of 3D printing, 3D models are not confined to virtual space. 3D rendering is how a model is displayed. A model can also be used in non-graphical computer simulations and calculations.

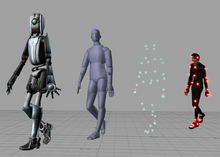

Computer animation

Computer animation is the art of creating moving images via the use of computers. It is a subfield of computer graphics and animation. Increasingly it is created by means of 3D computer graphics, though 2D computer graphics are still widely used for stylistic, low bandwidth, and faster real-time rendering needs. Sometimes the target of the animation is the computer itself, but sometimes the target is another medium, such as film. It is also referred to as CGI (Computer-generated imagery or computer-generated imaging), especially when used in films.

Virtual entities may contain and be controlled by assorted attributes, such as transform values (location, orientation, and scale) stored in an object's transformation matrix. Animation is the change of an attribute over time. Multiple methods of achieving animation exist; the rudimentary form is based on the creation and editing of keyframes, each storing a value at a given time, per attribute to be animated. The 2D/3D graphics software interpolates between keyframes, creating an editable curve of a value mapped over time, which results in animation. Other methods of animation include procedural and expression-based techniques: the former consolidates related elements of animated entities into sets of attributes, useful for creating particle effects and crowd simulations; the latter allows an evaluated result returned from a user-defined logical expression, coupled with mathematics, to automate animation in a predictable way (convenient for controlling bone behavior beyond what a hierarchy offers in a skeletal system setup).
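
Keyframe animation of a single attribute can be sketched as follows. This is a minimal illustration that assumes linear interpolation between neighbouring keyframes; production software commonly offers smoother spline curves as well, and the names `evaluate` and `x_position` are hypothetical.

```python
from bisect import bisect_right

def evaluate(keyframes, t):
    """Linearly interpolate a list of (time, value) keyframes sorted by time."""
    times = [time for time, _ in keyframes]
    if t <= times[0]:
        return keyframes[0][1]      # hold the first value before the first key
    if t >= times[-1]:
        return keyframes[-1][1]     # hold the last value after the last key
    i = bisect_right(times, t)      # index of the first keyframe after t
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    u = (t - t0) / (t1 - t0)        # 0 at the earlier key, 1 at the later key
    return v0 + u * (v1 - v0)

# One animated attribute: an object's x position, keyed at three times.
x_position = [(0.0, 0.0), (1.0, 10.0), (3.0, 4.0)]

for frame in range(7):
    t = frame * 0.5
    print(t, evaluate(x_position, t))
```

Editing a keyframe's time or value changes the curve, and therefore the motion, without touching any of the in-between frames.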

To create the illusion of movement, an image is displayed on the computer screen and then quickly replaced by a new image that is similar to the previous one but shifted slightly. This technique is identical to the way the illusion of movement is achieved in television and motion pictures.

Concepts and principles

Images are typically created by devices such as cameras, mirrors, lenses, telescopes, microscopes, etc.

Digital images include both vector images and raster images, but raster images are more commonly used.

Pixel

In digital imaging, a pixel (or picture element) is a single point in a raster image. Pixels are placed on a regular two-dimensional grid, and are often represented using dots or squares. Each pixel is a sample of an original image, where more samples typically provide a more accurate representation of the original. The intensity of each pixel is variable; in color systems, each pixel typically has three components such as red, green, and blue.

Graphics are visual presentations on a surface, such as a computer screen. Examples are photographs, drawings, graphic designs, maps, engineering drawings, or other images. Graphics often combine text and illustration. Graphic design may consist of the deliberate selection, creation, or arrangement of typography alone, as in a brochure, flier, poster, web site, or book without any other element. Clarity or effective communication may be the objective, association with other cultural elements may be sought, or merely the creation of a distinctive style.

Primitives

Primitives are basic units which a graphics system may combine to create more complex images or models. Examples would be sprites and character maps in 2D video games, geometric primitives in CAD, or polygons or triangles in 3D rendering. Primitives may be supported in hardware for efficient rendering, or they may be the building blocks provided by a graphics application.

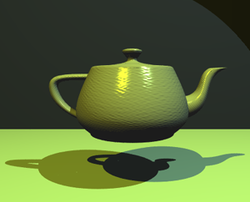

Rendering

Rendering is the generation of a 2D image from a 3D model by means of computer programs. A scene file contains objects in a strictly defined language or data structure; it would contain geometry, viewpoint, texture, lighting, and shading information as a description of the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The rendering program is usually built into the computer graphics software, though others are available as plug-ins or entirely separate programs. The term "rendering" may be by analogy with an "artist's rendering" of a scene. Although the technical details of rendering methods vary, the general challenges to overcome in producing a 2D image from a 3D representation stored in a scene file are outlined as the graphics pipeline along a rendering device, such as a GPU. A GPU is a device able to assist the CPU in calculations. If a scene is to look relatively realistic and predictable under virtual lighting, the rendering software should solve the rendering equation. The rendering equation does not account for all lighting phenomena, but is a general lighting model for computer-generated imagery. 'Rendering' is also used to describe the process of calculating effects in a video editing file to produce final video output.
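
For reference, the rendering equation mentioned above is commonly written in the following hemispherical form. The symbols are the conventional ones and do not appear elsewhere in this article: $L_o$ is the radiance leaving surface point $\mathbf{x}$ in direction $\omega_o$, $L_e$ is the radiance emitted by the surface, $f_r$ is the bidirectional reflectance distribution function, $L_i$ is the radiance arriving from direction $\omega_i$, $\mathbf{n}$ is the surface normal, and $\Omega$ is the hemisphere of directions above the surface.

```latex
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o)
  + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\,
    L_i(\mathbf{x}, \omega_i)\, (\omega_i \cdot \mathbf{n})\, \mathrm{d}\omega_i
```

Because the incoming radiance $L_i$ at one point depends on the outgoing radiance of other points in the scene, the equation is recursive, which is why practical renderers approximate it rather than solve it exactly.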

- 3D projection

- 3D projection is a method of mapping three dimensional points to a two dimensional plane. As most current methods for displaying graphical data are based on planar two dimensional media, the use of this type of projection is widespread. This method is used in most real-time 3D applications and typically uses rasterization to produce the final image.

- Ray tracing

- Ray tracing is a technique from the family of image order algorithms for generating an image by tracing the path of light through pixels in an image plane. The technique is capable of producing a high degree of photorealism, usually higher than that of typical scanline rendering methods, but at a greater computational cost.

- Shading

- Shading refers to depicting depth in 3D models or illustrations by varying levels of darkness. It is a process used in drawing for depicting levels of darkness on paper by applying media more densely or with a darker shade for darker areas, and less densely or with a lighter shade for lighter areas. There are various techniques of shading including cross hatching where perpendicular lines of varying closeness are drawn in a grid pattern to shade an area. The closer the lines are together, the darker the area appears. Likewise, the farther apart the lines are, the lighter the area appears. The term has been recently generalized to mean that shaders are applied.

- Texture mapping

- Texture mapping is a method for adding detail, surface texture, or colour to a computer-generated graphic or 3D model. Its application to 3D graphics was pioneered by Dr Edwin Catmull in 1974. A texture map is applied (mapped) to the surface of a shape, or polygon. This process is akin to applying patterned paper to a plain white box. Multitexturing is the use of more than one texture at a time on a polygon. Procedural textures (created by adjusting parameters of an underlying algorithm that produces an output texture) and bitmap textures (created in an image editing application or imported from a digital camera) are common methods of defining textures on 3D models in computer graphics software. Placing textures onto a model's surface often requires a technique known as UV mapping (arbitrary, manual layout of texture coordinates) for polygon surfaces, while non-uniform rational B-spline (NURBS) surfaces have their own intrinsic parameterization used as texture coordinates. Texture mapping as a discipline also encompasses techniques for creating normal maps and bump maps that correspond to a texture to simulate height, specular maps to help simulate shine and light reflections, and environment mapping to simulate mirror-like reflectivity, also called gloss.

- Anti-aliasing

- Rendering resolution-independent entities (such as 3D models) for viewing on a raster (pixel-based) device such as a liquid-crystal display or CRT television inevitably causes aliasing artifacts, mostly along geometric edges and the boundaries of texture details; these artifacts are informally called "jaggies". Anti-aliasing methods rectify such problems, resulting in imagery more pleasing to the viewer, but can be somewhat computationally expensive. Various anti-aliasing algorithms (such as supersampling) can be employed and then tuned to balance rendering performance against the quality of the resultant imagery; a graphics artist should consider this trade-off if anti-aliasing methods are to be used. A pre-anti-aliased bitmap texture being displayed on a screen (or screen location) at a resolution different than the resolution of the texture itself (such as a textured model in the distance from the virtual camera) will exhibit aliasing artifacts, while any procedurally defined texture will always show aliasing artifacts as they are resolution-independent; techniques such as mipmapping and texture filtering help to solve texture-related aliasing problems.
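
The 3D projection step from the list above can be sketched with a simple perspective (pinhole) projection. This sketch assumes a camera at the origin looking along the positive z axis; the function names, the `focal_length` parameter, and the convention that image-plane coordinates run from -1 to 1 are illustrative assumptions rather than the interface of any specific graphics API.

```python
def project_point(point, focal_length=1.0):
    """Perspective-project a 3D point onto the image plane z = focal_length."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    # Dividing by depth makes distant points move toward the image centre.
    return (focal_length * x / z, focal_length * y / z)

def to_pixel(plane_xy, width, height):
    """Map image-plane coordinates in [-1, 1] to integer pixel coordinates."""
    u, v = plane_xy
    col = int((u + 1) / 2 * (width - 1) + 0.5)
    row = int((1 - v) / 2 * (height - 1) + 0.5)  # pixel rows grow downward
    return col, row

near = project_point((1.0, 2.0, 4.0))
far = project_point((1.0, 2.0, 8.0))   # same x and y, twice as far away
print(near, far)
print(to_pixel(near, 101, 101), to_pixel(far, 101, 101))
```

A rasterizer would apply this mapping to every vertex of a primitive and then fill the pixels between the projected vertices.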

Volume rendering

Volume rendering is a technique used to display a 2D projection of a 3D discretely sampled data set. A typical 3D data set is a group of 2D slice images acquired by a CT or MRI scanner.

Usually these are acquired in a regular pattern (e.g., one slice every millimeter) and usually have a regular number of image pixels in a regular pattern. This is an example of a regular volumetric grid, with each volume element, or voxel, represented by a single value that is obtained by sampling the immediate area surrounding the voxel.
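
One simple way to turn such a voxel grid into a 2D image is a maximum intensity projection, which keeps the largest value found along each viewing ray. The sketch below is only one of several volume rendering approaches; it assumes the volume is stored as nested lists indexed `volume[z][y][x]` and that the view direction is the z axis.

```python
def max_intensity_projection(volume):
    """Project a volume indexed as volume[z][y][x] along the z axis."""
    depth = len(volume)
    height = len(volume[0])
    width = len(volume[0][0])
    # For each output pixel, keep the brightest voxel along the z direction.
    return [
        [max(volume[z][y][x] for z in range(depth)) for x in range(width)]
        for y in range(height)
    ]

# Three slices, each 2 rows by 3 columns, standing in for scanner output.
volume = [
    [[0, 1, 0], [0, 2, 0]],
    [[5, 0, 0], [0, 0, 3]],
    [[0, 0, 4], [1, 0, 0]],
]

image = max_intensity_projection(volume)
for row in image:
    print(row)
```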

3D modeling

3D modeling is the process of developing a mathematical, wireframe representation of any three-dimensional object, called a "3D model", via specialized software. Models may be created automatically or manually; the manual modeling process of preparing geometric data for 3D computer graphics is similar to plastic arts such as sculpting. 3D models may be created using multiple approaches: use of NURBS to generate accurate and smooth surface patches, polygonal mesh modeling (manipulation of faceted geometry), or polygonal mesh subdivision (advanced tessellation of polygons, resulting in smooth surfaces similar to NURBS models). A 3D model can be displayed as a two-dimensional image through a process called 3D rendering, used in a computer simulation of physical phenomena, or animated directly for other purposes. The model can also be physically created using 3D printing devices.
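
As a concrete illustration of polygonal mesh modeling, the sketch below stores a unit cube as a list of vertices plus faces that index into it, a common layout for mesh data. The helper names are hypothetical, and the faces are not given a consistent winding order, which a real renderer would usually require.

```python
# Vertex positions of a unit cube.
vertices = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),   # corners with z = 0
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),   # corners with z = 1
]

# Each face lists the indices of its four corner vertices.
faces = [
    (0, 1, 2, 3), (4, 5, 6, 7),   # z = 0 and z = 1
    (0, 1, 5, 4), (2, 3, 7, 6),   # y = 0 and y = 1
    (1, 2, 6, 5), (3, 0, 4, 7),   # x = 1 and x = 0
]

def mesh_edges(faces):
    """Collect the unique undirected edges used by the faces (wireframe)."""
    edges = set()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):
            edges.add((min(a, b), max(a, b)))
    return edges

def triangulate(faces):
    """Split each polygon into a fan of triangles (valid for convex faces)."""
    triangles = []
    for face in faces:
        for i in range(1, len(face) - 1):
            triangles.append((face[0], face[i], face[i + 1]))
    return triangles

edges = mesh_edges(faces)
triangles = triangulate(faces)
print(len(vertices), len(edges), len(faces), len(triangles))
```

The edge set corresponds to the wireframe view of the model, while the triangle list is the form most rendering hardware consumes.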

![Oil absorption by an aerogel.[38] (Scientific Reports)](https://upload.wikimedia.org/wikipedia/commons/thumb/3/3b/Oil_absorption_by_BN_aerogel.jpg/120px-Oil_absorption_by_BN_aerogel.jpg)

![An aerogel held up by hair.[38] (Scientific Reports)](https://upload.wikimedia.org/wikipedia/commons/thumb/6/6d/BN_aerogel_on_hair.jpg/120px-BN_aerogel_on_hair.jpg)