Quantum mechanics is the study of matter and matter's interactions with energy on the scale of atomic and subatomic particles. By contrast, classical physics explains matter and energy only on a scale familiar to human experience, including the behavior of astronomical bodies such as the Moon. Classical physics is still used in much of modern science and technology. However, towards the end of the 19th century, scientists discovered phenomena in both the large (macro) and the small (micro) worlds that classical physics could not explain. The desire to resolve inconsistencies between observed phenomena and classical theory led to a revolution in physics, a shift in the original scientific paradigm: the development of quantum mechanics.

Many aspects of quantum mechanics are counterintuitive and can seem paradoxical, because they describe behavior quite different from that seen at larger scales. In the words of quantum physicist Richard Feynman, quantum mechanics deals with "nature as She is – absurd". Features of quantum mechanics often defy simple explanations in everyday language. One example of this is the uncertainty principle: precise measurements of position cannot be combined with precise measurements of velocity. Another example is entanglement: a measurement made on one particle (such as an electron that is measured to have spin 'up') will correlate with a measurement on a second particle (an electron will be found to have spin 'down') if the two particles have a shared history. This will apply even if it is impossible for the result of the first measurement to have been transmitted to the second particle before the second measurement takes place.

Quantum mechanics helps people understand chemistry, because it explains how atoms interact with each other and form molecules. Many remarkable phenomena can be explained using quantum mechanics, like superfluidity. For example, if liquid helium cooled to a temperature near absolute zero is placed in a container, it spontaneously flows up and over the rim of its container; this is an effect which cannot be explained by classical physics.

History

James C. Maxwell's unification of the equations governing electricity, magnetism, and light in the late 19th century led to experiments on the interaction of light and matter. Some of these experiments had aspects which could not be explained until quantum mechanics emerged in the early part of the 20th century.

Evidence of quanta from the photoelectric effect

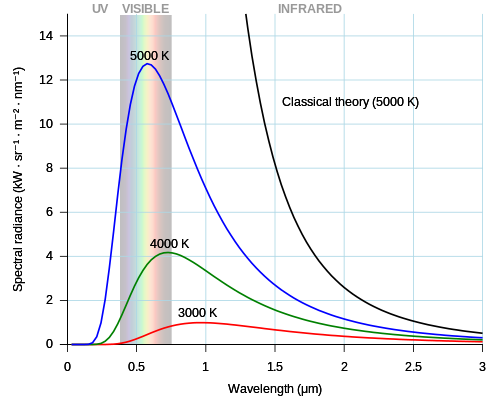

The seeds of the quantum revolution appear in the discovery by J.J. Thomson in 1897 that cathode rays were not continuous but "corpuscles" (electrons). Electrons had been named just six years earlier as part of the emerging theory of atoms. In 1900, Max Planck, unconvinced by the atomic theory, discovered that he needed discrete entities like atoms or electrons to explain black-body radiation.

Very hot objects, whether red hot or white hot, look similar when heated to the same temperature. This look results from a common curve of light intensity at different frequencies (colors), which is called black-body radiation. White hot objects have intensity across many colors in the visible range. The frequencies just below the visible colors are infrared light, which also carries heat. Continuous wave theories of light and matter cannot explain the black-body radiation curve. Planck spread the heat energy among individual "oscillators" of an undefined character but with discrete energy capacity; this model explained black-body radiation.
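The contrast between the continuous-wave prediction and Planck's discrete model can be sketched numerically. The snippet below is a minimal illustration, not part of the historical account: it compares Planck's law with the classical Rayleigh–Jeans formula, which is used here as a stand-in for the "continuous wave theories". The function names and the 3000 K temperature are assumptions chosen for the example.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(freq_hz, temp_k):
    """Planck's law: oscillators exchange energy in discrete steps of h*f."""
    x = H * freq_hz / (K_B * temp_k)
    return (2.0 * H * freq_hz**3 / C**2) / math.expm1(x)


def classical_radiance(freq_hz, temp_k):
    """Rayleigh-Jeans law: the continuous-wave prediction."""
    return 2.0 * freq_hz**2 * K_B * temp_k / C**2


if __name__ == "__main__":
    temp_k = 3000.0  # assumed temperature of a glowing object, in kelvin
    for freq_hz in (1e12, 1e13, 1e14, 1e15):
        ratio = planck_radiance(freq_hz, temp_k) / classical_radiance(freq_hz, temp_k)
        print(f"{freq_hz:.0e} Hz: Planck / classical = {ratio:.3g}")
```

At low frequencies the two formulas agree closely. At high frequencies the classical formula keeps growing, while Planck's expression falls off, as the measured curve does.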

At the time, electrons, atoms, and discrete oscillators were all exotic ideas to explain exotic phenomena. But in 1905 Albert Einstein proposed that light was also corpuscular, consisting of "energy quanta", in contradiction to the established science of light as a continuous wave, stretching back a hundred years to Thomas Young's work on diffraction.

Einstein's revolutionary proposal started by reanalyzing Planck's black-body theory, arriving at the same conclusions by using the new "energy quanta". Einstein then showed how energy quanta connected to Thomson's electron. In 1902, Philipp Lenard directed light from an arc lamp onto freshly cleaned metal plates housed in an evacuated glass tube. He measured the electric current coming off the metal plate, at higher and lower intensities of light and for different metals. Lenard showed that the amount of current (the number of electrons) depended on the intensity of the light, but that the velocity of these electrons did not depend on intensity. This is the photoelectric effect. The continuous wave theories of the time predicted that more light intensity would accelerate the same amount of current to higher velocity, contrary to this experiment. Einstein's energy quanta explained the increase in current: one electron is ejected for each quantum, so more quanta mean more electrons.

Einstein then predicted that the maximum kinetic energy of the ejected electrons would increase linearly with the light frequency above a fixed value that depended upon the metal. Here the idea is that the energy of each quantum is proportional to the light frequency, and that this energy is transferred to the electron. The type of metal sets a barrier, the fixed value, that the electrons must climb over to exit their atoms, to be emitted from the metal surface and be measured.
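Einstein's prediction is usually written as K_max = h·f − W, where W is the barrier (work function) of the metal. The sketch below evaluates that relation. The work function of 2.3 eV is an assumed illustrative value, and the function names are hypothetical.

```python
H = 6.62607015e-34     # Planck constant, J*s
EV = 1.602176634e-19   # joules per electronvolt


def threshold_frequency_hz(work_function_ev):
    """Lowest light frequency that can eject an electron at all."""
    return work_function_ev * EV / H


def max_kinetic_energy_ev(freq_hz, work_function_ev):
    """Einstein's relation K_max = h*f - W; zero below the threshold."""
    photon_ev = H * freq_hz / EV
    return max(0.0, photon_ev - work_function_ev)


if __name__ == "__main__":
    work_function_ev = 2.3  # assumed value for the example metal
    print(f"threshold: {threshold_frequency_hz(work_function_ev):.3e} Hz")
    for freq_hz in (4e14, 6e14, 8e14, 1e15):
        energy = max_kinetic_energy_ev(freq_hz, work_function_ev)
        print(f"{freq_hz:.1e} Hz -> {energy:.3f} eV")
```

Raising the intensity changes how many quanta arrive, not the energy of each one, so the light intensity does not appear in this formula.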

Ten years elapsed before Millikan's definitive experiment verified Einstein's prediction. During that time many scientists rejected the revolutionary idea of quanta. But Planck's and Einstein's concept was in the air and soon began to affect other physics and quantum theories.

Quantization of bound electrons in atoms

Experiments with light and matter in the late 1800s uncovered a reproducible but puzzling regularity. When light was shone through purified gases, certain frequencies (colors) did not pass. These dark absorption 'lines' followed a distinctive pattern: the gaps between the lines decreased steadily. By 1889, the Rydberg formula predicted the lines for hydrogen gas using only a constant number and the integers to index the lines. The origin of this regularity was unknown. Solving this mystery would eventually become the first major step toward quantum mechanics.
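The Rydberg formula can be written as 1/λ = R (1/n₁² − 1/n₂²), with R a constant and n₁ < n₂ integers. The following sketch evaluates it for the visible hydrogen lines (n₁ = 2). The value of R is an approximate figure for hydrogen, and the function name is chosen for this example.

```python
R_H = 1.0967758e7  # Rydberg constant for hydrogen, 1/m (approximate)


def rydberg_wavelength_nm(n_low, n_high):
    """Wavelength of the hydrogen line indexed by integers n_low < n_high."""
    if not 0 < n_low < n_high:
        raise ValueError("need 0 < n_low < n_high")
    inverse_wavelength = R_H * (1.0 / n_low**2 - 1.0 / n_high**2)
    return 1e9 / inverse_wavelength


if __name__ == "__main__":
    previous = None
    for n_high in range(3, 8):
        wavelength = rydberg_wavelength_nm(2, n_high)
        gap = "" if previous is None else f"  gap {previous - wavelength:.1f} nm"
        print(f"n = {n_high}: {wavelength:.1f} nm{gap}")
        previous = wavelength
```

The printed gaps shrink from one line to the next, which is the steadily decreasing spacing described above.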

Throughout the 19th century evidence grew for the atomic nature of matter. With Thomson's discovery of the electron in 1897, scientists began the search for a model of the interior of the atom. Thomson proposed negative electrons swimming in a pool of positive charge. Between 1908 and 1911, Rutherford showed that the positive part was only 1/3000th of the diameter of the atom.

Models of "planetary" electrons orbiting a nuclear "Sun" were proposed, but they could not explain why the electron does not simply fall into the positive charge. In 1913 Niels Bohr and Ernest Rutherford connected the new atom models to the mystery of the Rydberg formula: the orbital radii of the electrons were constrained, and the resulting energy differences matched the energy differences in the absorption lines. This meant that absorption and emission of light from atoms were quantized in energy: only specific energies that matched the difference in orbital energy would be emitted or absorbed.
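A minimal sketch of the constrained orbits, using the standard textbook results of the Bohr model for hydrogen: radii r_n = n² a₀ and energies E_n = −13.6 eV / n². The constants are approximate and the function names are hypothetical.

```python
RYDBERG_EV = 13.605693       # binding energy of the lowest orbit, eV
BOHR_RADIUS_NM = 0.0529177   # radius of the lowest orbit, nm
HC_EV_NM = 1239.841984       # Planck constant times speed of light, eV*nm


def orbit_radius_nm(n):
    """Allowed orbital radius for integer n >= 1."""
    return n * n * BOHR_RADIUS_NM


def level_energy_ev(n):
    """Energy of the n-th allowed orbit (negative means bound)."""
    return -RYDBERG_EV / (n * n)


def transition_wavelength_nm(n_from, n_to):
    """Wavelength of light emitted or absorbed between two allowed orbits."""
    if n_from == n_to:
        raise ValueError("the two orbits must differ")
    photon_ev = abs(level_energy_ev(n_from) - level_energy_ev(n_to))
    return HC_EV_NM / photon_ev


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, f"{orbit_radius_nm(n):.4f} nm", f"{level_energy_ev(n):.3f} eV")
    print(f"3 -> 2: {transition_wavelength_nm(3, 2):.1f} nm")
```

The 3 → 2 transition lands on the red hydrogen line near 656 nm, so the energy differences between allowed orbits reproduce the line positions given by the Rydberg formula.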

Trading one mystery – the regular pattern of the Rydberg formula – for another mystery – constraints on electron orbits – might not seem like a big advance, but the new atom model summarized many other experimental findings. The quantization of the photoelectric effect and now the quantization of the electron orbits set the stage for the final revolution.

Throughout both the early and the modern eras of quantum mechanics, the requirement that classical mechanics remain valid at macroscopic scales constrained the possible quantum models. This concept was formalized by Bohr in 1923 as the correspondence principle. It requires quantum theory to converge to classical limits. A related concept is Ehrenfest's theorem, which shows that the average values obtained from quantum mechanics (e.g. position and momentum) obey classical laws.

Quantization of spin

In 1922 Otto Stern and Walther Gerlach demonstrated that the magnetic properties of silver atoms defy classical explanation, the work contributing to Stern’s 1943 Nobel Prize in Physics. They fired a beam of silver atoms through a magnetic field. According to classical physics, the atoms should have emerged in a spray, with a continuous range of directions. Instead, the beam separated into two, and only two, diverging streams of atoms. Unlike the other quantum effects known at the time, this striking result involves the state of a single atom. In 1927, T.E. Phipps and J.B. Taylor obtained a similar, but less pronounced effect using hydrogen atoms in their ground state, thereby eliminating any doubts that may have been caused by the use of silver atoms.

In 1924, Wolfgang Pauli called this property "two-valuedness not describable classically" and associated it with electrons in the outermost shell. In 1925, Samuel Goudsmit and George Uhlenbeck, on the advice of Paul Ehrenfest, explained the experimental results as arising from the spin of the electron.

Quantization of matter

In 1924 Louis de Broglie proposed that electrons in an atom are constrained not in "orbits" but as standing waves. In detail his solution did not work, but his hypothesis – that the electron "corpuscle" moves in the atom as a wave – spurred Erwin Schrödinger to develop a wave equation for electrons; when applied to hydrogen the Rydberg formula was accurately reproduced.

Max Born's 1924 paper "Zur Quantenmechanik" was the first use of the words "quantum mechanics" in print. His later work included developing quantum collision models; in a footnote to a 1926 paper he proposed the Born rule connecting theoretical models to experiment.

In 1927 at Bell Labs, Clinton Davisson and Lester Germer fired slow-moving electrons at a crystalline nickel target. The scattered electrons showed a diffraction pattern, indicating the wave nature of the electron; the theory of this effect was fully explained by Hans Bethe. In a similar experiment, George Paget Thomson and Alexander Reid fired electrons at thin celluloid foils and later metal films and observed rings, independently discovering the matter-wave nature of electrons.
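The wavelength associated with a moving electron follows from de Broglie's relation λ = h / p. The sketch below uses the non-relativistic momentum p = √(2mE), which is adequate for slow electrons. The 54 eV energy is an illustrative choice for a "slow-moving" electron, and the function name is hypothetical.

```python
import math

H = 6.62607015e-34       # Planck constant, J*s
M_E = 9.1093837015e-31   # electron mass, kg
EV = 1.602176634e-19     # joules per electronvolt


def de_broglie_wavelength_nm(kinetic_energy_ev, mass_kg=M_E):
    """Non-relativistic de Broglie wavelength: lambda = h / sqrt(2 m E)."""
    if kinetic_energy_ev <= 0:
        raise ValueError("kinetic energy must be positive")
    momentum = math.sqrt(2.0 * mass_kg * kinetic_energy_ev * EV)
    return H / momentum * 1e9


if __name__ == "__main__":
    # Illustrative energy for a slow electron beam.
    print(f"{de_broglie_wavelength_nm(54.0):.4f} nm")
```

The result is a fraction of a nanometre, comparable to the spacing between atoms in a crystal, which is why a crystal can act as a diffraction grating for electrons.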

Further developments

In 1928 Paul Dirac published his relativistic wave equation simultaneously incorporating relativity, predicting anti-matter, and providing a complete theory for the Stern–Gerlach result. These successes launched a new fundamental understanding of our world at small scale: quantum mechanics.

Planck and Einstein started the revolution with quanta that broke down the continuous models of matter and light. Twenty years later "corpuscles" like electrons came to be modeled as continuous waves. This result came to be called wave–particle duality, an iconic idea that, along with the uncertainty principle, sets quantum mechanics apart from older models of physics.

Quantum radiation, quantum fields

In 1923 Compton demonstrated that the Planck-Einstein energy quanta from light also had momentum; three years later the "energy quanta" got a new name "photon". Despite its role in almost all stages of the quantum revolution, no explicit model for light quanta existed until 1927 when Paul Dirac began work on a quantum theory of radiation that became quantum electrodynamics. Over the following decades this work evolved into quantum field theory, the basis for modern quantum optics and particle physics.

Wave–particle duality

The concept of wave–particle duality says that neither the classical concept of "particle" nor of "wave" can fully describe the behavior of quantum-scale objects, either photons or matter. Wave–particle duality is an example of the principle of complementarity in quantum physics. An elegant example of wave–particle duality is the double-slit experiment.

In the double-slit experiment, as originally performed by Thomas Young in 1803, and then Augustin Fresnel a decade later, a beam of light is directed through two narrow, closely spaced slits, producing an interference pattern of light and dark bands on a screen. The same behavior can be demonstrated in water waves: the double-slit experiment was seen as a demonstration of the wave nature of light.

Variations of the double-slit experiment have been performed using electrons, atoms, and even large molecules, and the same type of interference pattern is seen. Thus it has been demonstrated that all matter possesses wave characteristics.

If the source intensity is turned down, the same interference pattern will slowly build up, one "count" or particle (e.g. photon or electron) at a time. The quantum system acts as a wave when passing through the double slits, but as a particle when it is detected. This is a typical feature of quantum complementarity: a quantum system acts as a wave in an experiment to measure its wave-like properties, and like a particle in an experiment to measure its particle-like properties. The point on the detector screen where any individual particle shows up is the result of a random process. However, the distribution pattern of many individual particles mimics the diffraction pattern produced by waves.
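The one-at-a-time build-up can be imitated with a small simulation. Each detection position is drawn at random, with a probability proportional to the idealized two-slit intensity cos²(π d x / (λ L)). This is a simplified model that ignores the width of each slit. The wavelength, slit separation, and screen distance are assumed values, and all names are chosen for this example.

```python
import math
import random

WAVELENGTH = 500e-9       # assumed: green light, metres
SLIT_SEPARATION = 1e-4    # assumed distance between the slits, metres
SCREEN_DISTANCE = 1.0     # assumed distance to the detector, metres
HALF_WIDTH = 0.01         # half-width of the detector, metres


def fringe_probability(x):
    """Relative chance of a detection at position x on the screen."""
    phase = math.pi * SLIT_SEPARATION * x / (WAVELENGTH * SCREEN_DISTANCE)
    return math.cos(phase) ** 2


def detect(count, seed=0):
    """Draw `count` individual detection positions by rejection sampling."""
    rng = random.Random(seed)
    hits = []
    while len(hits) < count:
        x = rng.uniform(-HALF_WIDTH, HALF_WIDTH)
        if rng.random() < fringe_probability(x):
            hits.append(x)
    return hits


def histogram(hits, bins=40):
    """Count detections in equal-width bins across the detector."""
    counts = [0] * bins
    for x in hits:
        index = int((x + HALF_WIDTH) / (2 * HALF_WIDTH) * bins)
        counts[min(index, bins - 1)] += 1
    return counts


if __name__ == "__main__":
    for total in (20, 200, 5000):
        counts = histogram(detect(total))
        peak = max(counts)
        print(f"{total} detections")
        for c in counts:
            print("#" * round(30 * c / peak))
```

With 20 detections the positions look random. With thousands, bright and dark bands emerge, even though every detection is a single localized event.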

Uncertainty principle

Suppose it is desired to measure the position and speed of an object—for example, a car going through a radar speed trap. It can be assumed that the car has a definite position and speed at a particular moment in time. How accurately these values can be measured depends on the quality of the measuring equipment. If the precision of the measuring equipment is improved, it provides a result closer to the true value. It might be assumed that the speed of the car and its position could be operationally defined and measured simultaneously, as precisely as might be desired.

In 1927, Heisenberg proved that this last assumption is not correct. Quantum mechanics shows that certain pairs of physical properties, for example, position and speed, cannot be simultaneously measured, nor defined in operational terms, to arbitrary precision: the more precisely one property is measured, or defined in operational terms, the less precisely can the other be thus treated. This statement is known as the uncertainty principle. The uncertainty principle is not only a statement about the accuracy of our measuring equipment but, more deeply, is about the conceptual nature of the measured quantities—the assumption that the car had simultaneously defined position and speed does not work in quantum mechanics. On a scale of cars and people, these uncertainties are negligible, but when dealing with atoms and electrons they become critical.

Heisenberg gave, as an illustration, the measurement of the position and momentum of an electron using a photon of light. In measuring the electron's position, the higher the frequency of the photon, the more accurate is the measurement of the position of the impact of the photon with the electron, but the greater is the disturbance of the electron. This is because from the impact with the photon, the electron absorbs a random amount of energy, rendering the measurement obtained of its momentum increasingly uncertain, for one is necessarily measuring its post-impact disturbed momentum from the collision products and not its original momentum (momentum which should be simultaneously measured with position). With a photon of lower frequency, the disturbance (and hence uncertainty) in the momentum is less, but so is the accuracy of the measurement of the position of the impact.

At the heart of the uncertainty principle is the fact that, for any mathematical analysis in the position and velocity domains, achieving a sharper (more precise) curve in the position domain can only be done at the expense of a more gradual (less precise) curve in the velocity domain, and vice versa. More sharpness in the position domain requires contributions from more frequencies in the velocity domain to create the narrower curve, and vice versa. It is a fundamental tradeoff inherent in any such related or complementary measurements, but it is only really noticeable at the smallest scales, near the size of atoms and elementary particles.
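This tradeoff is a property of Fourier analysis and can be checked numerically. The sketch below builds a Gaussian wave packet of a chosen width in position, computes its Fourier transform by direct summation, and measures the spread of each. A Gaussian is used because it is the simplest case; the grid sizes and function names are assumptions made for the example. The second domain is expressed as wavenumber k, which is proportional to momentum.

```python
import cmath
import math


def spread(points, weights):
    """Standard deviation of `points` under non-negative `weights`."""
    total = sum(weights)
    mean = sum(p * w for p, w in zip(points, weights)) / total
    variance = sum(w * (p - mean) ** 2 for p, w in zip(points, weights)) / total
    return math.sqrt(variance)


def position_and_wavenumber_spread(sigma_x, samples=201):
    """Return (spread in x, spread in k) for a Gaussian packet."""
    half = samples // 2
    xs = [8.0 * sigma_x * (i - half) / half for i in range(samples)]
    ks = [4.0 / sigma_x * (i - half) / half for i in range(samples)]
    psi = [math.exp(-x * x / (4.0 * sigma_x**2)) for x in xs]
    # Direct Fourier sum: phi(k) = sum over x of psi(x) * exp(-i k x).
    phi = [sum(a * cmath.exp(-1j * k * x) for a, x in zip(psi, xs)) for k in ks]
    return (spread(xs, [a * a for a in psi]),
            spread(ks, [abs(b) ** 2 for b in phi]))


if __name__ == "__main__":
    for sigma_x in (2.0, 1.0, 0.5):
        dx, dk = position_and_wavenumber_spread(sigma_x)
        print(f"dx = {dx:.3f}  dk = {dk:.3f}  product = {dx * dk:.3f}")
```

Halving the width in position doubles the width in wavenumber, and the product stays at about one half. For a Gaussian this is the smallest product any wave shape can reach.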

The uncertainty principle shows mathematically that the product of the uncertainty in the position and momentum of a particle (momentum is velocity multiplied by mass) can never be less than a certain value, and that this value is proportional to the Planck constant.
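In its usual modern form the bound reads Δx · Δp ≥ ħ/2, where ħ is the Planck constant divided by 2π. The sketch below turns this into a minimum velocity uncertainty for a given mass and position uncertainty. The masses and position uncertainties are assumed round numbers, chosen only to compare a car with an electron.

```python
HBAR = 1.054571817e-34   # reduced Planck constant, J*s


def min_velocity_uncertainty(mass_kg, position_uncertainty_m):
    """Smallest velocity uncertainty allowed by dx * dp >= hbar / 2."""
    return HBAR / (2.0 * mass_kg * position_uncertainty_m)


if __name__ == "__main__":
    # Assumed: a 1000 kg car located to within one millimetre.
    print(f"car:      {min_velocity_uncertainty(1000.0, 1e-3):.2e} m/s")
    # Assumed: an electron located to within roughly the size of an atom.
    print(f"electron: {min_velocity_uncertainty(9.1093837015e-31, 1e-10):.2e} m/s")
```

For the car the limit is far below anything measurable. For the electron it is hundreds of kilometres per second, which is why the principle matters at atomic scales.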

Wave function collapse

Wave function collapse means that a measurement has forced or converted a quantum (probabilistic or potential) state into a definite measured value. This phenomenon appears only in quantum mechanics and has no counterpart in classical mechanics.

For example, before a photon actually "shows up" on a detection screen it can be described only with a set of probabilities for where it might show up. When it does appear, for instance in the CCD of an electronic camera, the time and space where it interacted with the device are known within very tight limits. However, the photon has disappeared in the process of being captured (measured), and its quantum wave function has disappeared with it. In its place, some macroscopic physical change in the detection screen has appeared, e.g., an exposed spot in a sheet of photographic film, or a change in electric potential in some cell of a CCD.

Eigenstates and eigenvalues

Because of the uncertainty principle, statements about both the position and momentum of particles can assign only a probability that the position or momentum has some numerical value. Therefore, it is necessary to formulate clearly the difference between the state of something indeterminate, such as an electron in a probability cloud, and the state of something having a definite value. When an object can definitely be "pinned-down" in some respect, it is said to be in an eigenstate.

In the Stern–Gerlach experiment discussed above, the quantum model predicts two possible values of spin for the atom compared to the magnetic axis. These two eigenstates are named arbitrarily 'up' and 'down'. The quantum model predicts these states will be measured with equal probability, but no intermediate values will be seen. This is what the Stern–Gerlach experiment shows.

The eigenstates of spin about the vertical axis are not simultaneously eigenstates of spin about the horizontal axis, so this atom has an equal probability of being found to have either value of spin about the horizontal axis. As described in the section above, measuring the spin about the horizontal axis can allow an atom that was spin up to become spin down: the measurement collapses its wave function into one of the eigenstates of the horizontal measurement, which means the atom is no longer in an eigenstate of spin about the vertical axis and can take either value when the vertical spin is measured again.
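The sequence of measurements can be sketched with two-component state vectors. Probabilities come from the Born rule, the squared magnitude of the overlap between the state and an eigenstate. The vectors below are the standard textbook representation of spin one-half; the function names and the simulation itself are illustrative assumptions.

```python
import math
import random

S = 1.0 / math.sqrt(2.0)
UP_Z, DOWN_Z = (1.0, 0.0), (0.0, 1.0)   # eigenstates, vertical axis
UP_X, DOWN_X = (S, S), (S, -S)          # eigenstates, horizontal axis


def probability(outcome, state):
    """Born rule: squared magnitude of the overlap of two states."""
    amplitude = sum(complex(o).conjugate() * s for o, s in zip(outcome, state))
    return abs(amplitude) ** 2


def measure(state, basis, rng):
    """Collapse `state` onto one of the two eigenstates in `basis`."""
    first, second = basis
    return first if rng.random() < probability(first, state) else second


def fraction_flipped(trials, seed=0):
    """Start spin up (vertical), measure horizontal, then vertical again."""
    rng = random.Random(seed)
    flipped = 0
    for _ in range(trials):
        state = measure(UP_Z, (UP_X, DOWN_X), rng)
        state = measure(state, (UP_Z, DOWN_Z), rng)
        if state == DOWN_Z:
            flipped += 1
    return flipped / trials


if __name__ == "__main__":
    print(probability(UP_X, UP_Z))    # chance of "up" along the horizontal axis
    print(fraction_flipped(10000))    # share of atoms that end up spin down
```

Without the intermediate horizontal measurement, an atom prepared spin up would be found spin up every time. With it, about half of the atoms come out spin down.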

The Pauli exclusion principle

In 1924, Wolfgang Pauli proposed a new quantum degree of freedom (or quantum number), with two possible values, to resolve inconsistencies between observed molecular spectra and the predictions of quantum mechanics. In particular, the spectrum of atomic hydrogen had a doublet, or pair of lines differing by a small amount, where only one line was expected. Pauli formulated his exclusion principle, stating, "There cannot exist an atom in such a quantum state that two electrons within [it] have the same set of quantum numbers."

A year later, Uhlenbeck and Goudsmit identified Pauli's new degree of freedom with the property called spin whose effects were observed in the Stern–Gerlach experiment.

Dirac wave equation

In 1928, Paul Dirac extended the Pauli equation, which described spinning electrons, to account for special relativity. The result was a theory that dealt properly with events, such as the speed at which an electron orbits the nucleus, occurring at a substantial fraction of the speed of light. By using the simplest electromagnetic interaction, Dirac was able to predict the value of the magnetic moment associated with the electron's spin and found the experimentally observed value, which was too large to be that of a spinning charged sphere governed by classical physics. He was able to solve for the spectral lines of the hydrogen atom and to reproduce from physical first principles Sommerfeld's successful formula for the fine structure of the hydrogen spectrum.

Dirac's equations sometimes yielded a negative value for energy, for which he proposed a novel solution: he posited the existence of an antielectron and a dynamical vacuum. This led to the many-particle quantum field theory.

Quantum entanglement

In quantum physics, a group of particles can interact or be created together in such a way that the quantum state of each particle of the group cannot be described independently of the state of the others, including when the particles are separated by a large distance. This is known as quantum entanglement.

An early landmark in the study of entanglement was the Einstein–Podolsky–Rosen (EPR) paradox, a thought experiment proposed by Albert Einstein, Boris Podolsky and Nathan Rosen which argues that the description of physical reality provided by quantum mechanics is incomplete. In a 1935 paper titled "Can Quantum-Mechanical Description of Physical Reality be Considered Complete?", they argued for the existence of "elements of reality" that were not part of quantum theory, and speculated that it should be possible to construct a theory containing these hidden variables.

The thought experiment involves a pair of particles prepared in what would later become known as an entangled state. Einstein, Podolsky, and Rosen pointed out that, in this state, if the position of the first particle were measured, the result of measuring the position of the second particle could be predicted. If instead the momentum of the first particle were measured, then the result of measuring the momentum of the second particle could be predicted. They argued that no action taken on the first particle could instantaneously affect the other, since this would involve information being transmitted faster than light, which is forbidden by the theory of relativity. They invoked a principle, later known as the "EPR criterion of reality", positing that: "If, without in any way disturbing a system, we can predict with certainty (i.e., with probability equal to unity) the value of a physical quantity, then there exists an element of reality corresponding to that quantity." From this, they inferred that the second particle must have a definite value of both position and of momentum prior to either quantity being measured. But quantum mechanics considers these two observables incompatible and thus does not associate simultaneous values for both to any system. Einstein, Podolsky, and Rosen therefore concluded that quantum theory does not provide a complete description of reality. In the same year, Erwin Schrödinger used the word "entanglement" and declared: "I would not call that one but rather the characteristic trait of quantum mechanics."

The Irish physicist John Stewart Bell carried the analysis of quantum entanglement much further. He deduced that if measurements are performed independently on the two separated particles of an entangled pair, then the assumption that the outcomes depend upon hidden variables within each half implies a mathematical constraint on how the outcomes on the two measurements are correlated. This constraint would later be named the Bell inequality. Bell then showed that quantum physics predicts correlations that violate this inequality. Consequently, the only way that hidden variables could explain the predictions of quantum physics is if they are "nonlocal", which is to say that somehow the two particles are able to interact instantaneously no matter how widely they ever become separated. Performing experiments like those that Bell suggested, physicists have found that nature obeys quantum mechanics and violates Bell inequalities. In other words, the results of these experiments are incompatible with any local hidden variable theory.
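A common form of the Bell inequality is the CHSH inequality, which states that a particular combination S of four correlations cannot exceed 2 in magnitude for any local hidden variable theory. The sketch below compares the quantum prediction for a pair of spins in the singlet state, E(a, b) = −cos(a − b), with one simple hidden-variable model. That model is a hypothetical example invented for illustration and is not from the text; Bell's result covers every local model, not just this one. The measurement angles are the standard choice that maximizes the quantum value.

```python
import math


def quantum_correlation(a, b):
    """Quantum prediction for a singlet pair measured along angles a and b."""
    return -math.cos(a - b)


def hidden_variable_correlation(a, b, steps=3600):
    """Toy local model: each pair shares a hidden angle `lam` fixed at creation."""
    total = 0
    for i in range(steps):
        lam = 2.0 * math.pi * (i + 0.5) / steps
        result_1 = 1 if math.cos(a - lam) >= 0 else -1
        result_2 = -1 if math.cos(b - lam) >= 0 else 1
        total += result_1 * result_2
    return total / steps


def chsh(correlation):
    """Magnitude of the CHSH combination for a standard set of four angles."""
    a, a2 = 0.0, math.pi / 2
    b, b2 = math.pi / 4, 3 * math.pi / 4
    return abs(correlation(a, b) - correlation(a, b2)
               + correlation(a2, b) + correlation(a2, b2))


if __name__ == "__main__":
    print(f"hidden-variable model: S = {chsh(hidden_variable_correlation):.3f}")
    print(f"quantum prediction:    S = {chsh(quantum_correlation):.3f}")
```

The toy model reaches the limit of 2 but cannot pass it, while the quantum prediction is 2√2, about 2.83. Experiments agree with the larger value.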

Quantum field theory

The idea of quantum field theory began in the late 1920s with British physicist Paul Dirac, when he attempted to quantize the energy of the electromagnetic field, just as the energy of an electron in the hydrogen atom was quantized in quantum mechanics. Quantization is a procedure for constructing a quantum theory starting from a classical theory.

Merriam-Webster defines a field in physics as "a region or space in which a given effect (such as magnetism) exists". Other effects that manifest themselves as fields are gravitation and static electricity. In 2008, physicist Richard Hammond wrote:

Sometimes we distinguish between quantum mechanics (QM) and quantum field theory (QFT). QM refers to a system in which the number of particles is fixed, and the fields (such as the electromechanical field) are continuous classical entities. QFT ... goes a step further and allows for the creation and annihilation of particles ...

He added, however, that quantum mechanics is often used to refer to "the entire notion of quantum view".

In 1931, Dirac proposed the existence of particles that later became known as antimatter. Dirac shared the Nobel Prize in Physics for 1933 with Schrödinger "for the discovery of new productive forms of atomic theory".

Quantum electrodynamics

Quantum electrodynamics (QED) is the name of the quantum theory of the electromagnetic force. Understanding QED begins with understanding electromagnetism. Electromagnetism can be called "electrodynamics" because it is a dynamic interaction between electrical and magnetic forces. Electromagnetism begins with the electric charge.

Electric charges are the sources of, and create, electric fields. An electric field is a field that exerts a force on any particles that carry electric charges, at any point in space. This includes the electron, proton, and even quarks, among others. As a force is exerted, electric charges move, a current flows, and a magnetic field is produced. The changing magnetic field, in turn, causes electric current (often moving electrons). The physical description of interacting charged particles, electrical currents, electrical fields, and magnetic fields is called electromagnetism.

In 1928 Paul Dirac produced a relativistic quantum theory of electromagnetism. This was the progenitor to modern quantum electrodynamics, in that it had essential ingredients of the modern theory. However, the problem of unsolvable infinities developed in this relativistic quantum theory. Years later, renormalization largely solved this problem. Initially viewed as a provisional, suspect procedure by some of its originators, renormalization eventually was embraced as an important and self-consistent tool in QED and other fields of physics. Also, in the late 1940s Feynman diagrams provided a way to make predictions with QED by finding a probability amplitude for each possible way that an interaction could occur. The diagrams showed in particular that the electromagnetic force is the exchange of photons between interacting particles.

The Lamb shift is an example of a quantum electrodynamics prediction that has been experimentally verified. It is an effect whereby the quantum nature of the electromagnetic field makes the energy levels in an atom or ion deviate slightly from what they would otherwise be. As a result, spectral lines may shift or split.

Similarly, within a freely propagating electromagnetic wave, the current can also be just an abstract displacement current, instead of involving charge carriers. In QED, its full description makes essential use of short-lived virtual particles. There, QED again validates an earlier, rather mysterious concept.

Standard Model

The Standard Model of particle physics is the quantum field theory that describes three of the four known fundamental forces (electromagnetic, weak and strong interactions – excluding gravity) in the universe and classifies all known elementary particles. It was developed in stages throughout the latter half of the 20th century, through the work of many scientists worldwide, with the current formulation being finalized in the mid-1970s upon experimental confirmation of the existence of quarks. Since then, the discoveries of the top quark (1995), the tau neutrino (2000), and the Higgs boson (2012) have added further credence to the Standard Model. In addition, the Standard Model has predicted various properties of weak neutral currents and the W and Z bosons with great accuracy.

Although the Standard Model is believed to be theoretically self-consistent and has demonstrated success in providing experimental predictions, it leaves some physical phenomena unexplained and so falls short of being a complete theory of fundamental interactions. For example, it does not fully explain baryon asymmetry, incorporate the full theory of gravitation as described by general relativity, or account for the universe's accelerating expansion as possibly described by dark energy. The model does not contain any viable dark matter particle that possesses all of the required properties deduced from observational cosmology. It also does not incorporate neutrino oscillations and their non-zero masses. Accordingly, it is used as a basis for building more exotic models that incorporate hypothetical particles, extra dimensions, and elaborate symmetries (such as supersymmetry) to explain experimental results at variance with the Standard Model, such as the existence of dark matter and neutrino oscillations.

Interpretations

The physical measurements, equations, and predictions pertinent to quantum mechanics are all consistent and hold a very high level of confirmation. However, the question of what these abstract models say about the underlying nature of the real world has received competing answers. These interpretations are widely varying and sometimes somewhat abstract. For instance, the Copenhagen interpretation states that before a measurement, statements about a particle's properties are completely meaningless, while the many-worlds interpretation describes the existence of a multiverse made up of every possible universe.

Light behaves in some aspects like particles and in other aspects like waves. Matter—the "stuff" of the universe consisting of particles such as electrons and atoms—exhibits wavelike behavior too. Some light sources, such as neon lights, give off only certain specific frequencies of light, a small set of distinct pure colors determined by neon's atomic structure. Quantum mechanics shows that light, along with all other forms of electromagnetic radiation, comes in discrete units, called photons, and predicts its spectral energies (corresponding to pure colors), and the intensities of its light beams. A single photon is a quantum, or smallest observable particle, of the electromagnetic field. A partial photon is never experimentally observed. More broadly, quantum mechanics shows that many properties of objects, such as position, speed, and angular momentum, that appeared continuous in the zoomed-out view of classical mechanics, turn out to be (in the very tiny, zoomed-in scale of quantum mechanics) quantized. Such properties of elementary particles are required to take on one of a set of small, discrete allowable values, and since the gap between these values is also small, the discontinuities are only apparent at very tiny (atomic) scales.

Applications

Everyday applications

The relationship between the frequency of electromagnetic radiation and the energy of each photon is why ultraviolet light can cause sunburn, but visible or infrared light cannot. A photon of ultraviolet light delivers a high amount of energy—enough to contribute to cellular damage such as occurs in a sunburn. A photon of infrared light delivers less energy—only enough to warm one's skin. So, an infrared lamp can warm a large surface, perhaps large enough to keep people comfortable in a cold room, but it cannot give anyone a sunburn.
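The relationship in question is E = h·f: the energy of one photon is the Planck constant times the frequency. The sketch below evaluates it for one ultraviolet and one infrared frequency. Both frequencies are assumed representative values, and the function names are hypothetical.

```python
H = 6.62607015e-34     # Planck constant, J*s
EV = 1.602176634e-19   # joules per electronvolt


def photon_energy_ev(freq_hz):
    """Energy carried by a single photon of the given frequency."""
    return H * freq_hz / EV


def photons_per_joule(freq_hz):
    """How many photons of this frequency add up to one joule."""
    return 1.0 / (H * freq_hz)


if __name__ == "__main__":
    ultraviolet_hz = 1.0e15   # assumed representative ultraviolet frequency
    infrared_hz = 3.0e14      # assumed representative infrared frequency
    for name, freq_hz in (("ultraviolet", ultraviolet_hz), ("infrared", infrared_hz)):
        print(f"{name}: {photon_energy_ev(freq_hz):.2f} eV per photon, "
              f"{photons_per_joule(freq_hz):.2e} photons per joule")
```

A brighter infrared lamp delivers more photons, but each one still carries the same small energy, so raising the intensity does not make any single photon more damaging.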

Technological applications

Applications of quantum mechanics include the laser, the transistor, the electron microscope, and magnetic resonance imaging. A special class of quantum mechanical applications is related to macroscopic quantum phenomena such as superfluid helium and superconductors. The study of semiconductors led to the invention of the diode and the transistor, which are indispensable for modern electronics.

Even in a simple light switch, quantum tunneling is absolutely vital, as otherwise the electrons in the electric current could not penetrate the potential barrier made up of a layer of oxide. Flash memory chips found in USB drives also use quantum tunneling to erase their memory cells.
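How strongly tunneling depends on barrier thickness can be estimated with the standard thick-barrier approximation T ≈ exp(−2κd), where κ = √(2m(V − E)) / ħ. The sketch below is a rough estimate under that approximation, not a model of any real switch or memory cell. The barrier height and widths are assumed values.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
M_E = 9.1093837015e-31   # electron mass, kg
EV = 1.602176634e-19     # joules per electronvolt


def tunneling_probability(barrier_excess_ev, width_nm, mass_kg=M_E):
    """Thick-barrier estimate T ~ exp(-2 * kappa * d) for a flat barrier.

    `barrier_excess_ev` is the barrier height minus the particle energy.
    """
    kappa = math.sqrt(2.0 * mass_kg * barrier_excess_ev * EV) / HBAR
    return math.exp(-2.0 * kappa * width_nm * 1e-9)


if __name__ == "__main__":
    # Assumed: an electron 1 eV below the top of the barrier.
    for width_nm in (0.5, 1.0, 2.0):
        print(f"{width_nm} nm: T ~ {tunneling_probability(1.0, width_nm):.2e}")
```

The probability falls off exponentially with thickness, which is why tunneling matters for oxide layers a nanometre or so thick and is negligible for everyday barriers.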